starting U-net #91

Comments

|

Hmm, this is rather vague... ;-) An error message would certainly help. Make sure you have all dependencies installed

To look at the demo notebooks you will also need |

|

Yes, I have all dependencies installed. |

|

This sounds like your interpreter is crashing. I doubt that this is unet specific. Can you import tensorflow without the crash? |

|

Yes that is no problem, also the test version TestTf_unet.py runs without errors. |

|

Ok, I am not sure if this was the issue, but I have now changed my training data from the .png file format to .tiff. Now I get the error: "Could not broadcast input array from shape <3600,1400,4> into shape <3600,1400>"... Do you know what I have to change? Aren't you using the .tiff format as well? |
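The shapes in that message suggest a four-channel (for example RGBA) image being written into a single-channel buffer. A minimal sketch of collapsing such an image to one channel with NumPy follows. The helper name `to_single_channel` is hypothetical and not part of tf_unet, and whether a single channel is what your data provider expects is an assumption to check against its configuration.

```python
import numpy as np

def to_single_channel(image):
    """Collapse an (H, W, C) array to (H, W); 2-D arrays pass through unchanged."""
    image = np.asarray(image)
    if image.ndim == 2:
        return image
    if image.ndim != 3:
        raise ValueError("expected a 2-D or 3-D array, got %d dimensions" % image.ndim)
    # Keep at most the first three channels (drops an alpha channel if present),
    # then average them into a single grayscale plane.
    rgb = image[..., :3].astype(np.float32)
    return rgb.mean(axis=-1)

# Hypothetical small RGBA image standing in for the 3600x1400x4 one.
rgba = np.zeros((36, 14, 4), dtype=np.uint8)
gray = to_single_channel(rgba)
print(gray.shape)  # (36, 14)
```

If the network is meant to train on colour images instead, the alternative would be to keep the channels and configure the channel count accordingly.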

|

Ok, that's better. tf_unet uses the |

|

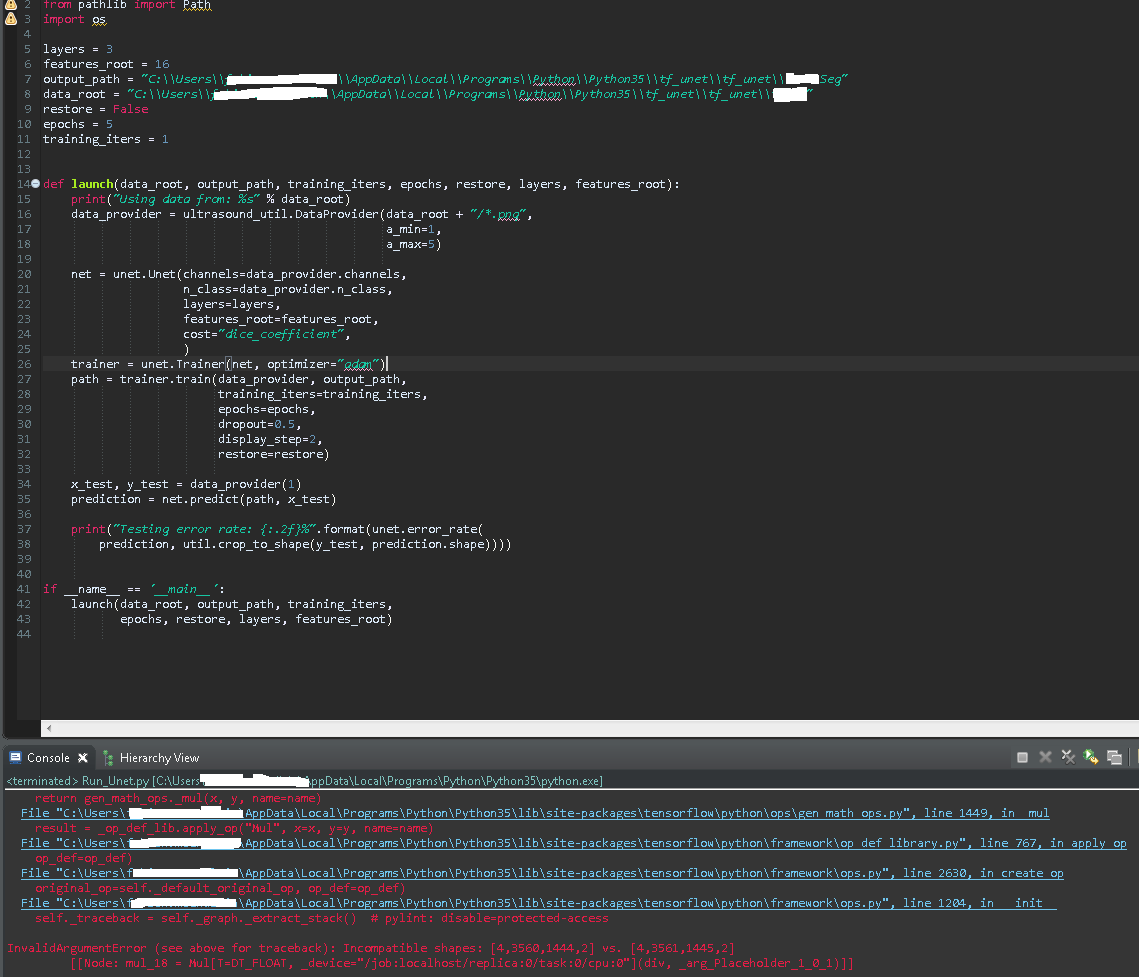

Not 100% sure (because the relevant bit of the traceback is missing), but this might be due to a known, unresolved bug in the package (#6, #89): the problem could be the odd number of pixels (1445). Could you try to crop the input images to even pixel counts? As a side note: the input images seem rather large. In the original paper 576*576 was used |
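Cropping to even pixel counts could be sketched as below. This is only an illustration: `crop_to_even` is a hypothetical helper, the example height of 1200 is made up (only the 1445 comes from the thread), and the same crop would have to be applied to the corresponding mask so both stay aligned.

```python
import numpy as np

def crop_to_even(image):
    """Drop the last row and/or column so that height and width are even."""
    h, w = image.shape[:2]
    return image[: h - h % 2, : w - w % 2]

# Hypothetical image with an odd width of 1445 pixels.
img = np.zeros((1200, 1445, 3), dtype=np.uint8)
print(crop_to_even(img).shape)  # (1200, 1444, 3)
```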

|

This is true, but it is mentioned that this network can be applied to images with |

|

Well, it's a bug. In principle you can increase the size of the input image by mirroring the borders. There are some examples of how to do this in the issues |

|

Thanks for your help! I was looking through the issues, and there were a couple about that. The proposal of mirroring appeared very often, but how can I code that in TensorFlow? |

|

You don't have to do this with TensorFlow. People usually use OpenCV for that |
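As a rough sketch of mirroring the borders outside TensorFlow, the example below uses NumPy's `np.pad` with `mode="reflect"`. With OpenCV, `cv2.copyMakeBorder` with `cv2.BORDER_REFLECT_101` should behave similarly. The helper names and the border width are assumptions for illustration, not code from tf_unet or from the linked issues.

```python
import numpy as np

def mirror_pad(image, border):
    """Pad height and width by `border` pixels on each side by mirroring."""
    pad = [(border, border), (border, border)] + [(0, 0)] * (image.ndim - 2)
    return np.pad(image, pad, mode="reflect")

def crop_border(image, border):
    """Undo mirror_pad by removing `border` pixels on each side."""
    if border == 0:
        return image
    return image[border:-border, border:-border]

img = np.arange(12).reshape(3, 4)
padded = mirror_pad(img, 1)
print(padded.shape)                                # (5, 6)
print(np.array_equal(crop_border(padded, 1), img))  # True
```

Because the padding is symmetric, the centre of the padded image still lines up pixel for pixel with the original image.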

|

see #41 |

|

But with this method the pixels of the output image don't correspond 1:1 to those of the input image, do they? |

|

??? |

|

Not quite sure if I understand what you mean |

|

I guess you mean that the output image is slightly smaller than the input image.

Refer to past issues for more details and examples on how to handle this.

…On Sep 11, 2017 18:09, "Joel Akeret" ***@***.***> wrote:

Not quite sure if I understand what you mean


|

|

Thanks for your answer. I was looking through the issues, and the only solution I found was the proposed adding of borders to the input image. I was just wondering whether I then still have an exact pixel correspondence between the input image and the prediction image. And if I make my input image larger by mirroring the borders, I have to remove or change all the crop and reshape functions in the code, is that right? |

|

Just restore the coordinates by multiplying them by inputres/outputres.

…On Sep 12, 2017 11:27, "Fab1900" ***@***.***> wrote:

Thanks for your answer. I was looking through the issues, and the only solution I found was the proposed adding of borders to the input image. I was just wondering whether I then still have an exact pixel correspondence between the input image and the prediction image. And if I make my input image larger by mirroring the borders, I have to remove or change all the crop and reshape functions in the code, is that right?


|

|

I am sorry, but I don't understand what you mean. |

|

Ok, say you have input pictures of 1000x1000 resolution and your output pictures are 250x250. You need to find out how output pixels relate to the original ones and just multiply the coordinates accordingly. |
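A small sketch of that coordinate mapping follows. Both function names are hypothetical. The first assumes the output is a uniformly downscaled version of the input, as in the 1000x1000 to 250x250 example. The second covers the other possibility raised in this thread, that the output is a slightly smaller, centred crop of the input. Which of the two applies depends on how the network produces its output.

```python
def output_to_input_scaled(y, x, input_shape, output_shape):
    """Map output pixel (y, x) to input coordinates, assuming pure rescaling."""
    scale_y = input_shape[0] / output_shape[0]
    scale_x = input_shape[1] / output_shape[1]
    return y * scale_y, x * scale_x

def output_to_input_cropped(y, x, input_shape, output_shape):
    """Map output pixel (y, x) to input coordinates, assuming a centred crop."""
    offset_y = (input_shape[0] - output_shape[0]) // 2
    offset_x = (input_shape[1] - output_shape[1]) // 2
    return y + offset_y, x + offset_x

print(output_to_input_scaled(100, 50, (1000, 1000), (250, 250)))   # (400.0, 200.0)
print(output_to_input_cropped(100, 50, (1000, 1000), (960, 960)))  # (120, 70)
```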

|

Ah ok, I understand. I will try this. Thanks for your help! |

|

Unfortunately I now have another issue with training. I hope it is ok if I share it with you? |

|

I suspect that this happens when some of the gradients explode for whatever reason. So far I haven't found a definitive answer to that. |

|

Ok thanks, I will try. Traceback (most recent call last): Unfortunately I couldn't figure out what to change in the code, and I don't want to zero-pad the images to the same input size. |

|

Frankly, this is something I have never tried. Probably the easiest approach is to resize all the inputs to the same size |

|

I have the exact same problem. |

|

Which of the discussed problems do you mean in particular? |

|

I also ran into the InvalidArgumentError: the logits and labels must have the same size. |

|

I resized all input images to the same dimensions. |

|

I would suggest looking into the ImageMagick tool to transform images in bulk.

I rescale the images into squares, run them through the U-Net, and rescale the output back to the original proportions.
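The rescale-and-restore round trip described above could be sketched in NumPy as follows. This is a stand-in for a bulk tool such as ImageMagick, not the commenter's actual workflow. The `resize_nearest` helper and the 256x256 target size are assumptions, and the network call is replaced by a placeholder. Nearest-neighbour sampling is used because it keeps label masks free of interpolated values; for photographs an interpolating resize would usually look better.

```python
import numpy as np

def resize_nearest(image, new_h, new_w):
    """Resize an (H, W[, C]) array to (new_h, new_w[, C]) by nearest-neighbour sampling."""
    h, w = image.shape[:2]
    rows = np.arange(new_h) * h // new_h  # source row for each target row
    cols = np.arange(new_w) * w // new_w  # source column for each target column
    return image[rows[:, None], cols]

original = np.random.rand(300, 500)           # hypothetical non-square input
square = resize_nearest(original, 256, 256)   # same size for every input
prediction = square                           # placeholder for the network output
restored = resize_nearest(prediction, *original.shape[:2])
print(square.shape, restored.shape)           # (256, 256) (300, 500)
```

The image and its mask would need to go through the same resize so that they keep matching dimensions.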

…On Jan 30, 2018 10:12 AM, "chutongz" ***@***.***> wrote:

Thanks a lot for your explanation! But I only use one input image since I try to make the net work first. Do you mean I should make sure the size of the input image and its corresponding mask image are the same?


|

Hey, I have only one image, and both the mask and the image are the same size, so why am I still getting the "InvalidArgumentError: logits and labels must be broadcastable: logits_size=[1140224,2] labels_size=[1142400,2]" error? Note that these are obviously not the sizes of the input image and mask. |

Hey,

I would like to use the proposed U-net for my work, but I am still a beginner with Python and TensorFlow. My current problem is that I can't really start the code at all because Python crashes right at the start. I think the issue is that I don't set the required parameters correctly at the beginning. I read the documentation, but it is not yet clear to me which parameters I have to define and how (for example the output path).

Can someone help me please?

Kind regards,

Fabian
