This is the official reference implementation of

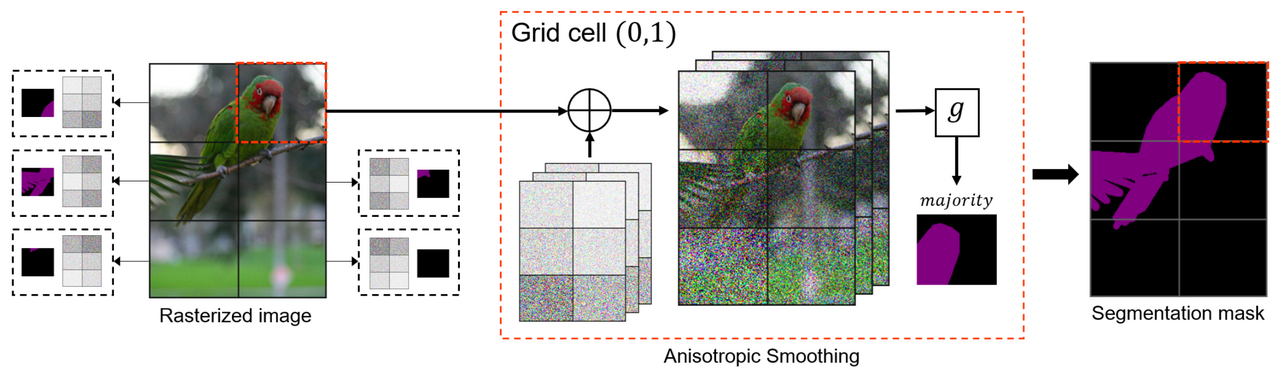

"Localized Randomized Smoothing"

Jan Schuchardt*, Tom Wollschläger*, Aleksandar Bojchevski, and Stephan Günnemann, ICLR 2023.

To install the requirements, execute

conda env create -f image_environment.yaml

conda env create -f graph_environment.yaml

This will create two separate conda environments (localized_smoothing_images and localized_smoothing_graphs).

You can install this package via pip install -e .

We use four different datasets: Pascal VOC (2012), Cityscapes, CiteSeer, and Cora_ML.

First download the main Pascal VOC (2012) dataset via torchvision.
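A minimal sketch of this download step, assuming torchvision's `VOCSegmentation` dataset class is the intended route. The helper name `download_pascal_voc` and the default `root` directory are placeholders, not names prescribed by this repository.

```python
from pathlib import Path


def download_pascal_voc(root: str = "pascal_voc"):
    """Download and extract Pascal VOC 2012 (segmentation) below `root`.

    `root` is a hypothetical location; point it at your own data directory.
    """
    # Imported lazily so that defining this helper does not require torchvision.
    from torchvision.datasets import VOCSegmentation

    Path(root).mkdir(parents=True, exist_ok=True)
    # torchvision extracts the archive to <root>/VOCdevkit/VOC2012.
    return VOCSegmentation(root=root, year="2012", image_set="train", download=True)
```

Calling `download_pascal_voc()` once inside the image environment should be enough; later runs reuse the extracted files.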

Then, include the trainaug annotations, as explained in Section 2.2 of the DeepLabV3 README.

Finally, place data/trainaug.txt in .../pascal_voc/VOCdevkit/VOC2012/ImageSets/Segmentation.
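The copy step can also be scripted. The following is a sketch only: the helper name `install_trainaug_split` and both arguments are hypothetical, and `voc2012_dir` must be set to your own `VOC2012` directory, since its parent path depends on where you stored the dataset.

```python
import shutil
from pathlib import Path


def install_trainaug_split(repo_root, voc2012_dir):
    """Copy data/trainaug.txt into ImageSets/Segmentation of a VOC2012 directory."""
    source = Path(repo_root) / "data" / "trainaug.txt"
    target_dir = Path(voc2012_dir) / "ImageSets" / "Segmentation"
    if not source.is_file():
        raise FileNotFoundError(f"Split file not found: {source}")
    # Normally created by the dataset download; created here only if missing.
    target_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(source, target_dir / source.name))
```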

Download the gtFine_trainvaltest dataset from the official Cityscapes website.

CiteSeer and Cora_ML are provided in .npz format in the data folder.
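To check what one of these files contains before loading it, the sketch below lists the stored array names using only the standard library. It relies on the fact that an .npz file is a zip archive with one .npy member per array; the helper name `list_npz_arrays` is hypothetical, and the array names in these particular files are not documented here.

```python
import zipfile


def list_npz_arrays(path):
    """Return the names of the arrays stored in an .npz archive.

    An .npz file is a zip archive with one .npy member per array, so the
    standard library suffices for inspection; use numpy.load to read the data.
    """
    with zipfile.ZipFile(path) as archive:
        return sorted(
            name[: -len(".npy")]
            for name in archive.namelist()
            if name.endswith(".npy")
        )
```

Pass the path of any .npz file in the data folder; the returned names are the keys you would use after `numpy.load`.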

To reproduce all experiments, you will need to execute the scripts in seml/scripts using the config files provided in seml/configs.

We use the SLURM Experiment Management Library (SEML), but the scripts are standard Sacred experiments that can also be run without a MongoDB and SLURM installation.

After computing all certificates, you can use the notebooks in plots to recreate the figures from the paper.

In case you do not want to run all experiments yourself, you can just run the notebooks while keeping the flag overwrite=False (our results are then loaded from the respective data subfolders).
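The behavior of such a flag can be pictured as a load-or-compute pattern. This is an illustrative sketch, not the notebooks' actual code: the function name `load_or_compute`, the JSON storage format, and the fallback to computing when no stored result exists are all assumptions.

```python
import json
from pathlib import Path


def load_or_compute(cache_path, compute_fn, overwrite=False):
    """Return cached results unless `overwrite` is set or no cache exists."""
    cache_path = Path(cache_path)
    if cache_path.is_file() and not overwrite:
        # Reuse stored results instead of rerunning the experiments.
        return json.loads(cache_path.read_text())
    results = compute_fn()
    cache_path.parent.mkdir(parents=True, exist_ok=True)
    cache_path.write_text(json.dumps(results))
    return results
```

With `overwrite=False`, an existing result file is read and `compute_fn` is never called, which is why the figures can be recreated without rerunning any experiment.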

For details on which config files and plotting notebooks recreate which figure from the paper, please consult REPRODUCE_IMAGES.MD and REPRODUCE_GRAPHS.MD.

Please cite our paper if you use this code in your own work:

@InProceedings{Schuchardt2023_Localized,
    author = {Schuchardt, Jan and Wollschl{\"a}ger, Tom and Bojchevski, Aleksandar and G{\"u}nnemann, Stephan},
    title = {Localized Randomized Smoothing for Collective Robustness Certification},
    booktitle = {International Conference on Learning Representations (ICLR)},
    year = {2023}
}