
Support for TensorFlow (python) inference should be added #24

Comments

Yes sir. Similarly, the image had to be converted to a C++ PyObject (PyArray). However, I haven't updated the documentation yet to mention TensorFlow support. Is that right? Thanks

Hi @vinay0410, great. The DetectionSuite GitHub repository is the reference place for documentation, along with the wiki on the JdeRobot web page. Please feel free to update both, and do not feel embarrassed. The current documentation is rather minimal, but it will grow and we will improve it over the following months, to make it easy for other developers to use this tool and replicate our (future) tutorials.

Hello Vinay! I have worked on the ObjectDetector output. On each prediction, the network (both the TF and Keras versions) "returns" the bounding boxes already scaled to the input image size; I have already handled that. In addition, it returns the class (directly as a string, instead of an index) and the corresponding score. I said "returns" because it is not the return value of a call. As the network is an object ( Feel free to contact me for anything, or any kind of help.
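For illustration, the kind of output handling described in this comment could look like the minimal sketch below. It is not the actual dl-objectdetector code: it assumes the raw network output uses normalized `(ymin, xmin, ymax, xmax)` boxes, as TensorFlow Object Detection API models typically do, and the `LABELS` map, `scale_box` and `describe` names are hypothetical.

```python
from typing import Dict, Sequence, Tuple

# Hypothetical label map (class index -> class name), for illustration only.
LABELS: Dict[int, str] = {1: "person", 18: "dog"}


def scale_box(box: Sequence[float], image_width: int,
              image_height: int) -> Tuple[int, int, int, int]:
    """Convert a normalized (ymin, xmin, ymax, xmax) box
    into pixel coordinates ordered as (xmin, ymin, xmax, ymax)."""
    ymin, xmin, ymax, xmax = box
    return (round(xmin * image_width), round(ymin * image_height),
            round(xmax * image_width), round(ymax * image_height))


def describe(box: Sequence[float], class_id: int, score: float,
             image_width: int, image_height: int) -> dict:
    """Bundle one prediction: pixel box, class name as a string, and score."""
    return {
        "box": scale_box(box, image_width, image_height),
        "label": LABELS.get(class_id, "unknown"),
        "score": score,
    }


# One assumed raw prediction on a 640x480 image.
prediction = describe((0.25, 0.5, 0.75, 1.0), 18, 0.87,
                      image_width=640, image_height=480)
```

Here `prediction["label"]` is the class name rather than its index, which matches the behaviour described above.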


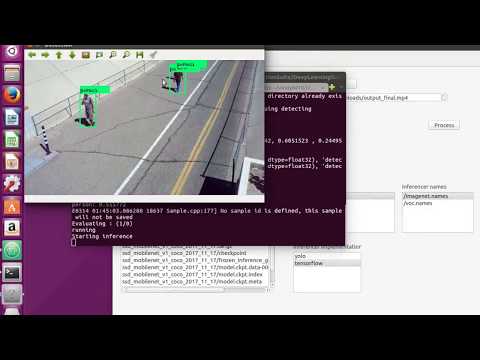

Hi @naxvm, I have also made a video testing the same in DetectionSuite, mentioned below: It uses frozen inference graphs, just like dl-objectdetector, to load the model and run inferences. The config file isn't necessary for the same reason, and any file can be selected, as depicted in the video. You can try running an inference, and please do share your valuable suggestions. The only thing left for this issue is to update the documentation regarding TensorFlow support. Thanks!

Here’s the pull request updating documentation and minor improvements for the inferencer.

Hello Vinay, your TF implementation seems fantastic to me, as we only need the frozen graph file, as you mention. The video inference seems promising. I was wondering why it takes that much time to make the prediction on the image. Is it because of the Python/C++ conversion? It should take far less time. Regards!

Hi @naxvm, I will update my Python file for major speed improvements in a couple of days, though. Thanks for the feedback!

So DetectionSuite, which is written in C++, could invoke inference of a TensorFlow (Python) neural network for detection.

Some pre-processing of the images may be required before feeding them into the TensorFlow network.
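As a rough idea of what such pre-processing might involve, here is a minimal sketch. It assumes the images arrive in BGR channel order (the OpenCV default) and that the network expects RGB input with a leading batch dimension; neither is confirmed for DetectionSuite. Nested lists stand in for image arrays to keep the example dependency-free (real code would more likely use NumPy), and all function names are hypothetical.

```python
from typing import List

Pixel = List[int]          # one pixel: three channel values
Image = List[List[Pixel]]  # rows of pixels, shape (height, width, 3)


def bgr_to_rgb(image: Image) -> Image:
    """Reverse the channel order of every pixel (BGR -> RGB)."""
    return [[pixel[::-1] for pixel in row] for row in image]


def add_batch_dimension(image: Image) -> List[Image]:
    """Wrap a single image in a batch of size one: (H, W, 3) -> (1, H, W, 3)."""
    return [image]


def preprocess(image_bgr: Image) -> List[Image]:
    """Assumed pre-processing: swap channels, then add the batch axis."""
    return add_batch_dimension(bgr_to_rgb(image_bgr))


# A tiny 1x2 image: one pure-blue and one pure-red pixel, in BGR order.
tiny_bgr = [[[255, 0, 0], [0, 0, 255]]]
batch = preprocess(tiny_bgr)
```

Resizing or normalization could be added to `preprocess` in the same way if a particular model needs it.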

Some post-processing of the network results (bounding boxes, etc.) may be required to deliver them in the right structure for computing statistics.
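One possible shape for that post-processing is sketched below. It assumes the network results come as parallel lists of pixel boxes `(xmin, ymin, xmax, ymax)`, class names, and scores; the `Detection` record, the `to_detections` helper, and the `min_score` threshold are all hypothetical and do not describe DetectionSuite's actual data structures.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Detection:
    """Hypothetical record for one detected object, in pixel units."""
    label: str
    score: float
    x: int        # left edge
    y: int        # top edge
    width: int
    height: int


def to_detections(boxes: Sequence[Tuple[int, int, int, int]],
                  labels: Sequence[str],
                  scores: Sequence[float],
                  min_score: float = 0.5) -> List[Detection]:
    """Drop low-confidence results and convert corner boxes
    (xmin, ymin, xmax, ymax) into (x, y, width, height) records."""
    detections = []
    for (xmin, ymin, xmax, ymax), label, score in zip(boxes, labels, scores):
        if score < min_score:
            continue  # skip predictions below the confidence threshold
        detections.append(
            Detection(label, score, xmin, ymin, xmax - xmin, ymax - ymin))
    return detections


# Two assumed raw results; only the first passes the default threshold.
results = to_detections(
    boxes=[(10, 20, 110, 220), (0, 0, 5, 5)],
    labels=["person", "dog"],
    scores=[0.9, 0.2],
)
```

A flat list of such records is easy to compare against ground-truth annotations when computing statistics.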
