Report no results as error #144

Conversation

|

Hello! As you noted in your description this is a very long running problem and one that I would love to solve. I see two things that come to mind when reading through this PR:

I do really like the idea you present of this being an opt-in setting. My ideal solution to this problem would be for jest-junit to possibly maintain a local cache of filepath->test suite(s) lookup table. So then if we detect an entire test file wasn't able to run due to an error we could fill in test suites and test cases and properly mark them as errors instead of failures. So while I REALLY appreciate the time you put into this PR I think I would prefer not to merge this into the main branch and I would suggest you fork jest-junit if you require this particular implementation. |
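To make the cache idea above a bit more concrete, here is a minimal sketch. Nothing like this exists in jest-junit: `recordSuites`, `fillFromCache` and the cache shape are made-up names for illustration. The sketch assumes only the fields Jest puts on a per-file result (`testFilePath`, `testResults`, `failureMessage`) and on each assertion result (`ancestorTitles`, `title`).

```javascript
// Hypothetical sketch: neither function exists in jest-junit.
// Assumed cache shape: { [filePath]: { [suiteName]: string[] } } (test titles per suite)

// Remember the suite and test names of a file that ran successfully.
function recordSuites(cache, fileResult) {
  const suites = {};
  for (const test of fileResult.testResults) {
    // ancestorTitles holds the titles of the enclosing describe blocks.
    const suiteName = test.ancestorTitles.join(' ') || fileResult.testFilePath;
    (suites[suiteName] = suites[suiteName] || []).push(test.title);
  }
  cache[fileResult.testFilePath] = suites;
  return cache;
}

// For a file that failed to run, rebuild its suites from the cache
// and mark every previously known test as errored.
function fillFromCache(cache, fileResult) {
  const known = cache[fileResult.testFilePath];
  if (!known) return []; // file never ran successfully, nothing to fill in
  return Object.entries(known).map(([name, tests]) => ({
    name,
    errors: tests.length,
    testcases: tests.map((title) => ({
      name: title,
      error: fileResult.failureMessage,
    })),
  }));
}
```

The catch is visible in the `if (!known)` branch: a brand-new test file with a syntax error has no cache entry, so it would still be missing from the report.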

Hi!

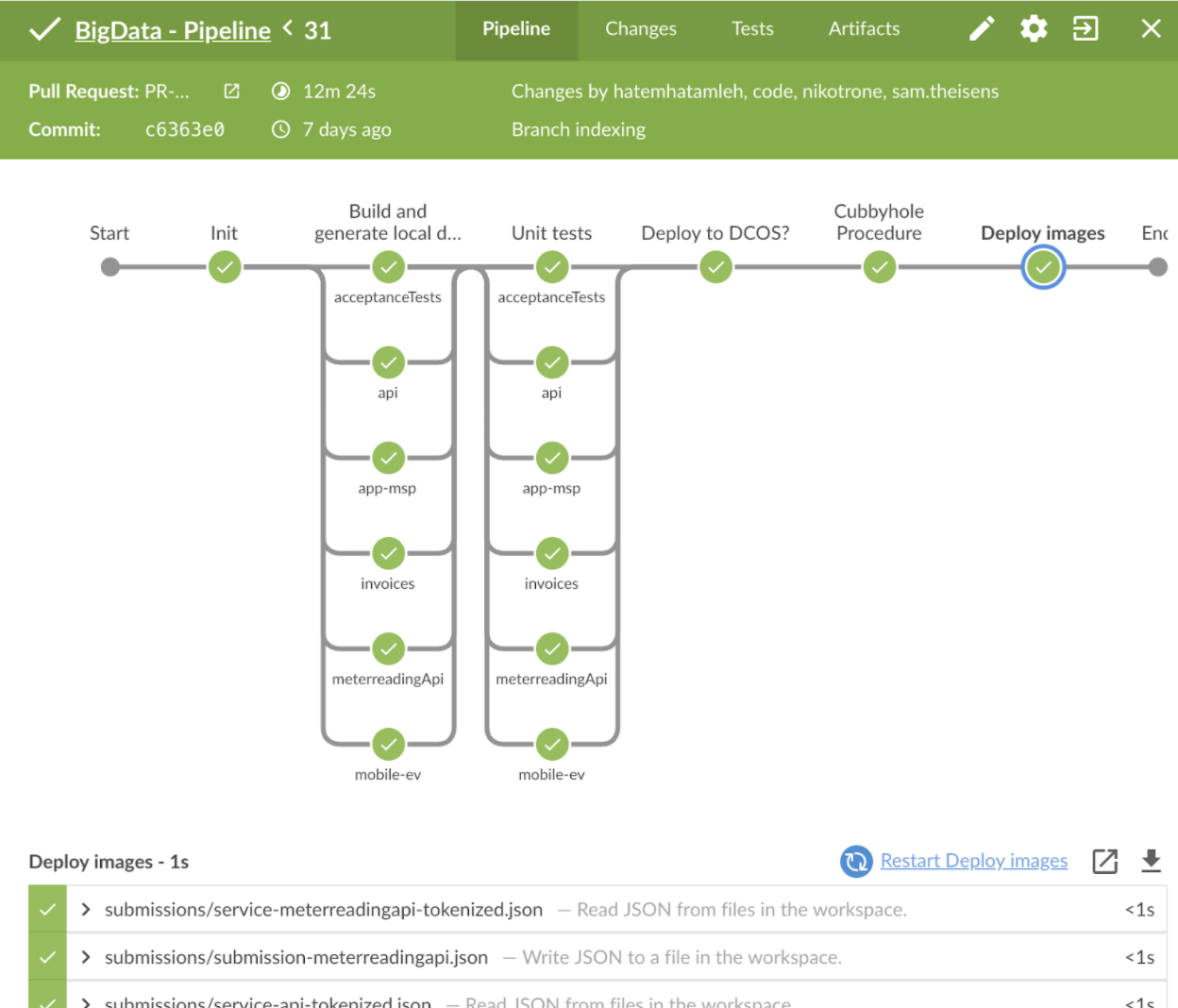

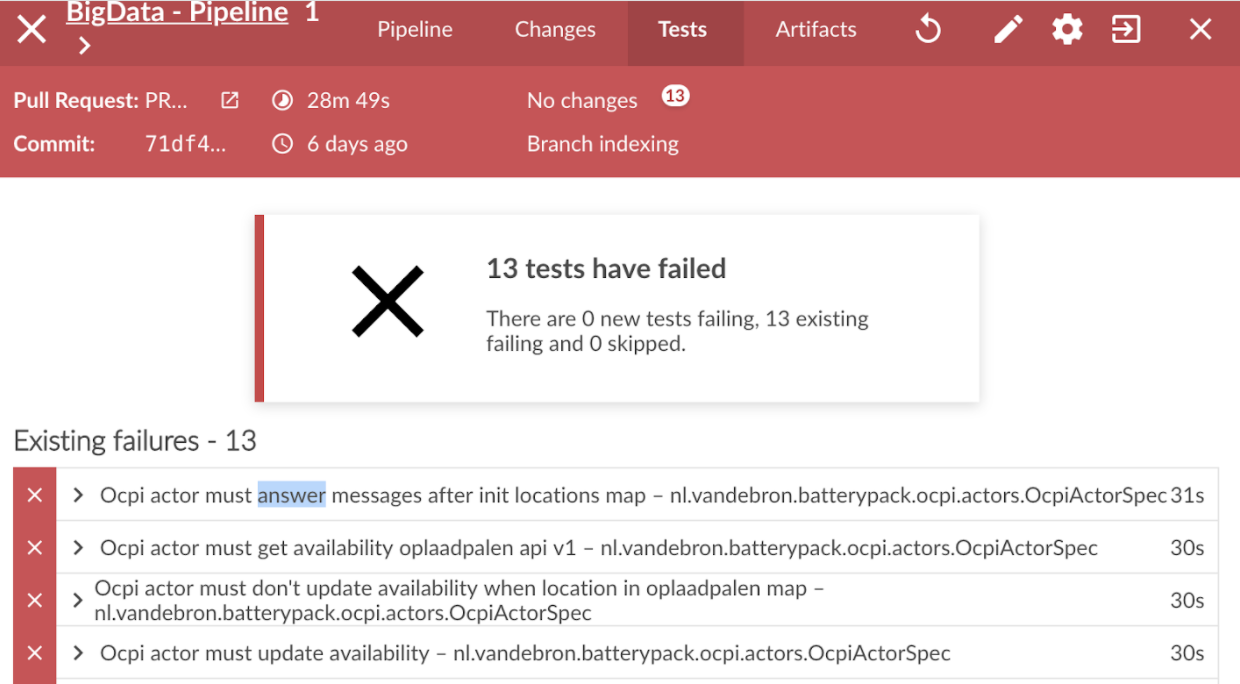

Jest-junit no longer filtering out errored suites is indeed the main use-case for me. At my company, we rely entirely on the junit test results to decide whether to fail or pass the test stage of a build.

I'm sorry, the example In our use-case, having stable suite and/or test names is really not very critical at all. After all, suites, files and tests can be renamed anyway. My guess is that most

I guess that would allow you to deal with the suite error cases more independently?

I am not attached to my particular implementation, but I am rather attached to being able to continue using this great library! |

I'm really curious why you would do this and not just rely on exit codes for pass/fail like most/all do?

Yeah, something like what I suggested would still allow for test suite and test case names that are stable between builds AND properly mark them as errors. The only annoying part is that it would require a file (the cache) to be checked in with the repo, and I imagine some folks only invoke jest-junit on master builds. |

|

One additional thing to note is an important distinction. Jest itself considers each individual test file a test suite. But jest-junit does the correct thing by treating each describe block as a test suite. Given that you can have multiple adjacent describe blocks per file: using just the filepath as the test suite name in order to report errors would not work for those who decide to have multiple describe blocks per test file. |
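A small illustration of that distinction, as a simplified sketch rather than jest-junit's actual grouping code: one file result from Jest can yield several suites once the assertion results are grouped by their top-level describe title. The `groupByDescribe` helper and the sample data are invented for the example.

```javascript
// Simplified sketch; not jest-junit's actual grouping code.
// Groups one file's assertion results by their top-level describe title.
function groupByDescribe(fileResult) {
  const suites = new Map();
  for (const test of fileResult.testResults) {
    // Tests outside any describe block fall back to the file path.
    const suiteName = test.ancestorTitles[0] || fileResult.testFilePath;
    if (!suites.has(suiteName)) suites.set(suiteName, []);
    suites.get(suiteName).push(test.title);
  }
  return suites;
}

// One test file with two adjacent describe blocks.
const exampleFileResult = {
  testFilePath: 'math.test.js',
  testResults: [
    { ancestorTitles: ['add'], title: 'adds two numbers' },
    { ancestorTitles: ['subtract'], title: 'subtracts two numbers' },
  ],
};

const groupedSuites = groupByDescribe(exampleFileResult);
```

When the file cannot be parsed at all, `testResults` is empty, so there are no describe titles to group by and only the file path is left.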

Probably most, but definitely not all rely on exit codes #26 (comment) :-) Our situation is as follows: All of these projects produce the same type of artifact: a docker container. After the test stage, the docker container will contain the test results, which will then be extracted from it by the pipeline and filed by Jenkins. The fact that we can't rely on the exit code from jest is not the biggest issue though. We work around it by adding a dummy suite as described before. The main problem is that without |

|

Gotcha this is helpful. I'm happy to chat about this somehow via hangout/zoom/etc. I would say if you want the current implementation I would suggest forking the repo. But if you're willing to invest the time to develop a solution that will work for all/most that use jest-junit I can chat in more detail about this. |

|

Hi, I was about to try to make a PR when I saw this one.

Similar to @SamTheisens's case, we use jest-junit in gitlab, which shows which tests failed from the PR view. However, it doesn't show test suites that failed because nothing is generated for those files.

In our use case with GitLab, this doesn't matter since we just need something to show that a particular test suite failed. GitLab doesn't care if the test suite names change; it just checks whether anything in the output has failed and lists those. So we would really appreciate it if this could get merged as an opt-in feature. Maintaining a separate fork seems counterproductive if this could simply be opt-in, and perhaps labelled as an "experimental" feature. Happy to help in any way I can (: |
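For readers wondering what "checks whether anything in the output has failed" can look like in a pipeline, here is a rough sketch of a gate that trusts the report instead of the exit code. It is not how GitLab or Jenkins do it internally; `countProblems` is a made-up helper, and the regular expressions are a shortcut where a real pipeline would more likely use an XML parser.

```javascript
// Sketch of a CI gate that reads junit.xml rather than the process exit code.
// Sums the errors="..." and failures="..." attributes of every <testsuite> element.
function countProblems(junitXml) {
  let errors = 0;
  let failures = 0;
  // "\s" after "testsuite" skips the <testsuites> wrapper element.
  const suiteTag = /<testsuite\s[^>]*>/g;
  for (const [tag] of junitXml.matchAll(suiteTag)) {
    const errorMatch = tag.match(/\berrors="(\d+)"/);
    const failureMatch = tag.match(/\bfailures="(\d+)"/);
    errors += errorMatch ? Number(errorMatch[1]) : 0;
    failures += failureMatch ? Number(failureMatch[1]) : 0;
  }
  return { errors, failures };
}

// The stage passes only when the report contains no errors and no failures.
function stagePasses(junitXml) {
  const { errors, failures } = countProblems(junitXml);
  return errors === 0 && failures === 0;
}
```

A gate like this is only as good as the report: a test file that failed to load and was left out of junit.xml contributes nothing to either counter, so the stage would pass.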

|

This is all great feedback, thank you. Given that a solution hasn't been found (by me or others) for a few years now on this issue I think I'm ok with moving forward and implementing a change that would:

If anyone wants to take a crack at doing all of that I'm happy to review the PR. |

|

That is great news! We are using the fork with this PR in our infrastructure, but it would be nice if we (and others) didn't need to rely on a fork.

Runtime suite-level failures are reported like this:

<testsuite name="integration-tests/reporter/__tests__/simple.test.js" errors="1" failures="0" skipped="0" timestamp="1970-01-01T00:00:00" time="0" tests="0">

<properties>

<property name="best-tester" value="Jason Palmer"/>

</properties>

<testcase classname="Test suite failed to run" name="integration-tests/reporter/__tests__/simple.test.js" time="0">

<error> ● Test suite failed to run

None shall pass!

7 | });

8 | });

> 9 | throw new Error("None shall pass!")

| ^

at Object.<anonymous> (__tests__/simple.test.js:9:7)

</error>

</testcase>

</testsuite>

For syntax errors, Jest adds quite an extensive message:

<testsuite name="integration-tests/reporter/__tests__/simple.test.js" errors="1" failures="0" skipped="0" timestamp="1970-01-01T00:00:00" time="0" tests="0">

<properties>

<property name="best-tester" value="Jason Palmer"/>

</properties>

<testcase classname="Test suite failed to run" name="integration-tests/reporter/__tests__/simple.test.js" time="0">

<error> ● Test suite failed to run

Jest encountered an unexpected token

This usually means that you are trying to import a file which Jest cannot parse, e.g. it's not plain JavaScript.

By default, if Jest sees a Babel config, it will use that to transform your files, ignoring "node_modules".

Here's what you can do:

• To have some of your "node_modules" files transformed, you can specify a custom "transformIgnorePatterns" in your config.

• If you need a custom transformation specify a "transform" option in your config.

• If you simply want to mock your non-JS modules (e.g. binary assets) you can stub them out with the "moduleNameMapper" config option.

You'll find more details and examples of these config options in the docs:

https://jestjs.io/docs/en/configuration.html

Details:

SyntaxError: /Users/samtheisens/IdeaProjects/tmp/jest-junit/integration-tests/reporter/__tests__/simple.test.js: Unexpected token (2:0)

1 | import something

> 2 | describe('foo', () => {

| ^

3 | it('should pass', () => {

4 | expect(true).toEqual(true);

5 | });

at Parser._raise (../../node_modules/@babel/parser/src/parser/error.js:60:45)

</error>

</testcase>

</testsuite>

@palmerj3 I think this PR meets the 4 requirements you listed now. |

|

Very cool! I'm going to take some time and review this. But just wanted to leave a note here so you don't think I forgot! |

|

It looks good to me for the most part. Just see if you can detect a suite with zero tests (that works fine otherwise) vs a test suite that has an uncaught error and cannot be parsed/executed. Depending on the outcome let's rename the opt-in option and make it consistent between the environment variable and reporter option. |

|

Also do me a favor and please rebase. I just pushed a change which will help me review PRs more effectively moving forward (sorry about that). It just validates that the outputted junit is valid according to the jenkins spec. |

because the template configuration applies to the entire report

to prevent potential setting overrides from spilling over to other suites.

- adds `reportNoResultsAsError` configuration parameter. Default is false
- when top level test result (suite) has no children, a stub suite is added with a single test with `error` status. The exception is displayed at test level.
- any potential suite or title templates are overridden in order to guarantee that the file path is displayed, since besides the `testExecError`, this is the only information we have.

and the file path is used as suite title.

and explains in the README that file path will be used as suite name in these cases

so that the validity of the resulting xml can be verified

to ensure that it is handled as suite error

because `suite.testExecError` is a varying complex type and the information is also reflected in `suite.failureMessage` anyway.
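The behaviour described in these commit messages can be sketched roughly as follows. This is an illustration under assumptions, not the code from the PR: `stubSuiteForNoResults` and the shape of the returned object are invented here, chosen to mirror the attributes visible in the XML samples in this thread.

```javascript
const path = require('path');

// Sketch only: the helper name and output shape are assumptions,
// not jest-junit internals.
function stubSuiteForNoResults(fileResult, rootDir) {
  // Only files whose top level result has no children get a stub.
  if (fileResult.testResults.length > 0) return null;

  // The file path is the only identifying information available,
  // so any suite or title templates are bypassed here.
  const relativePath = path.relative(rootDir, fileResult.testFilePath);

  return {
    name: relativePath,
    errors: 1,
    failures: 0,
    tests: 0,
    testcases: [
      {
        classname: 'Test suite failed to run',
        name: relativePath,
        time: 0,
        // failureMessage is a plain string, unlike testExecError.
        error: fileResult.failureMessage,
      },
    ],
  };
}
```

Returning `null` for files that do have test results keeps the normal reporting path untouched, which matches the opt-in intent of the option.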

It looks like empty suites are not accepted by Jest and are reported as suite errors.

describe('foo', () => {

});

I get this result:

<testsuite name="integration-tests/reporter/__tests__/simple.test.js" errors="1" failures="0" skipped="0" timestamp="1970-01-01T00:00:00" time="0" tests="0">

<properties>

<property name="best-tester" value="Jason Palmer"/>

</properties>

<testcase classname="Test suite failed to run" name="integration-tests/reporter/__tests__/simple.test.js" time="0">

<error> ● Test suite failed to run

Your test suite must contain at least one test.

at onResult (../../node_modules/@jest/core/build/TestScheduler.js:175:18)

at Array.map (<anonymous>)

</error>

</testcase>

</testsuite>

Given this fact, I guess I have removed the fallback from |

|

This looks good to me! I did some testing locally and it's unfortunate that jest doesn't even pull a suite name from a functioning suite with no tests. I was thinking in that case we could still reuse at least some of the template variables. But it seems best to do as is done in this PR. Treat an uncaught error and a suite with no tests the same. Are there any other changes you'd like to make before merging this? |

Yeah, that's too bad. Although I guess it's a pretty rare case that you'd want to address immediately anyway.

Thank you for asking! But no, I'm happy. |

|

Thanks for all the hard work on this! Merging now and will cut a new major release including this and the change I made earlier. |

|

Should |

|

If I were building jest-junit from scratch today I would have it on by default. But millions of teams rely on it now every day and turning that on can have some side effects. If teams read the junit.xml files and create a database/index out of them then the new blank test suites this feature creates in certain cases could be confusing. |

|

Understood, thanks. Especially tricky considering |

|

It does use Semver and we have introduced breaking changes in the past. But this is one case where I've been extra cautious. It's an option folks can turn on and the burden is not high. |

Feature proposal

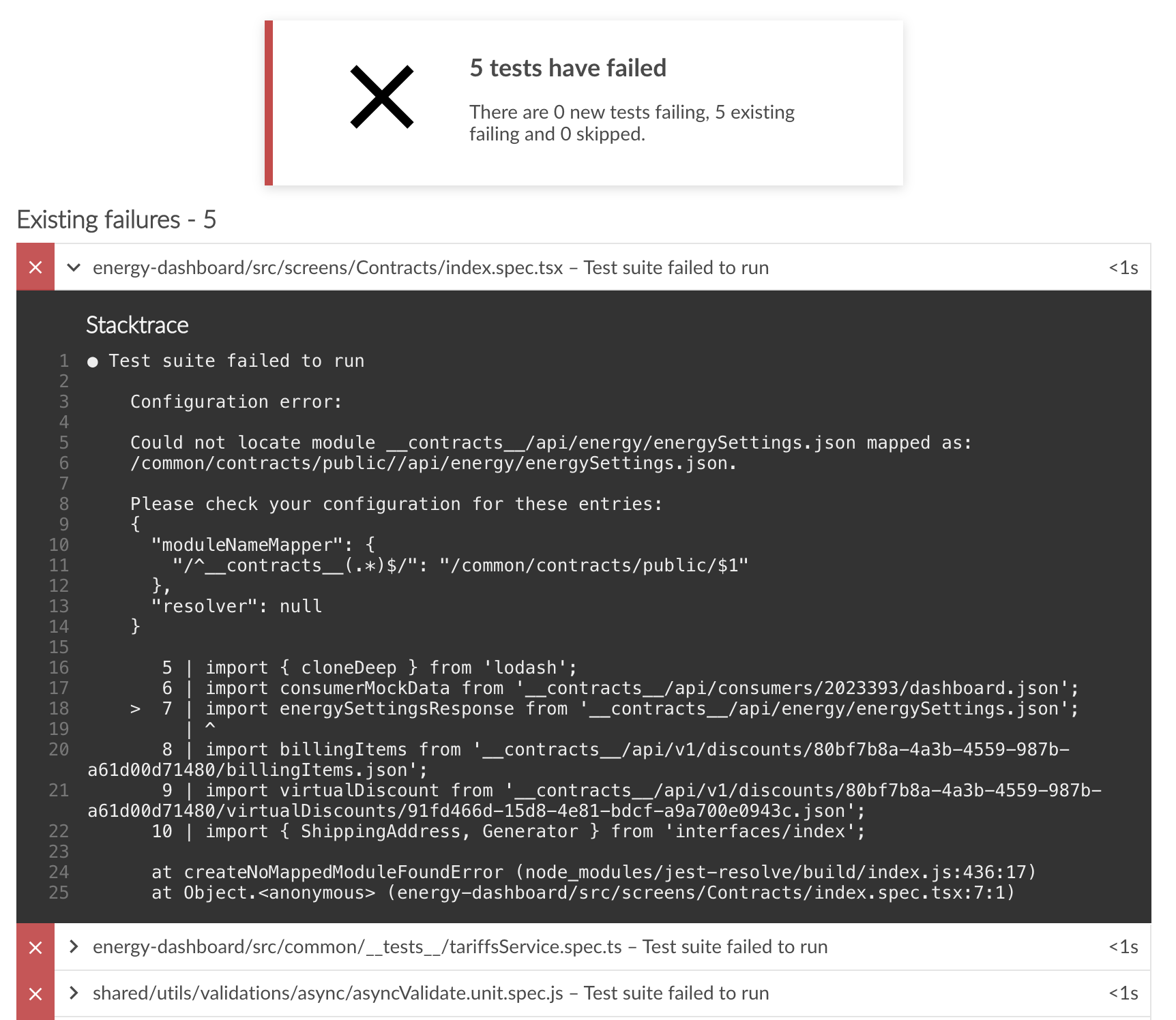

Test suites that fail to run altogether due to a syntax error, failed import, compilation error, etc. are currently not reported in the `junit.xml` results. This is a problem in CI scenarios where the test process exit code cannot be relied on for whatever reason.

I realize this feature has been requested in #116, #46 and #26, so I'm sorry to bring this up again :-)

Jest's test reports do not contain any information about the suites and tests that may be present in a test file that failed to load. On the other hand, it isn't clear to me how Jest could reliably obtain such information from an invalid JavaScript file.

For our use case, the fact that all error suites are reported is much more important than having stable test (suite) names.

But there may indeed be scenarios in which these priorities are reversed. In such cases users can choose not to use this feature.

The report when `reportNoResultsAsError` is (actively) set to `true` looks as follows: