Unofficial implementation of the paper [1]: Multiple People Tracking by Lifted Multicut and Person Re-identification

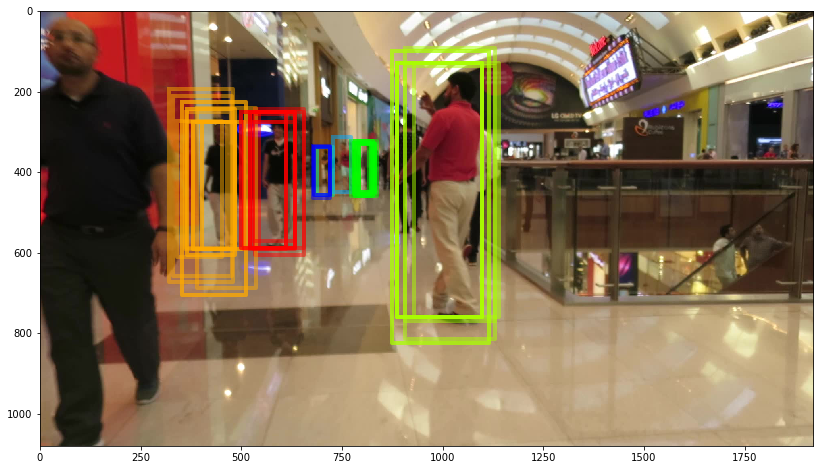

Tracking calculated by this library on the MOT16-11 video using dmax=100 over 10 frames


The software was developed on Ubuntu 16.04 and OSX with Python 3.5. The following libraries and tools are required for it to work correctly:

- tensorflow (1.x+)

- Keras (2.x+)

Download the source code and its submodules using:

```bash
git clone --recursive https://github.com/justayak/cabbage.git
```

Once the above requirements are met, run the install routine inside the source root:

```bash
bash install.sh
```

This script creates a text file called settings.txt, which you will need when running the end-to-end algorithm.

Follow these steps to do an end-to-end run on a video:

```python
import numpy as np

from cabbage.MultiplePeopleTracking import execute_multiple_people_tracking

video_name = 'the_video_name'

# Placeholder sizes (hypothetical values): replace them with your video's
# frame count, frame height, frame width and number of detections.
n, h, w = 10, 480, 640
m = 100

X = np.zeros((n, h, w, 3))  # the whole video loaded as an np array

dmax = 100

Dt = np.zeros((m, 6))  # one row per detection (m = number of detections)

video_loc = '/path/to/video/imgs'  # the video must be stored as a folder containing the individual frames

settings_loc = '/path/to/settings.txt'  # generated by the install.sh script

execute_multiple_people_tracking(video_loc, X, Dt, video_name, dmax, settings_loc)

# After the program has finished, you can find a text file called
# 'output.txt' at the settings.data_root location. It is structured as follows:
#
# id1, id2, 0 (has an edge) OR 1 (has no edge)
#
# sample:
# 0, 1, 0
# 0, 2, 0
# 0, 3, 1
# ...
#
# The ids correspond to the positions along the first axis of the Dt matrix.
```

Icon made by Smashicons from www.flaticon.com
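The output file only lists pairwise decisions between detections. A minimal sketch, assuming each line has exactly the `id1, id2, label` form shown above and that label `0` ("has an edge") means the two detections are joined into the same track, turns `output.txt` into one track label per row of `Dt` using a union-find (disjoint-set) structure. The helpers `parse_output` and `edges_to_tracks` are hypothetical and not part of cabbage.

```python
def parse_output(path):
    """Read 'id1, id2, label' lines from output.txt into (id1, id2, label) tuples."""
    edges = []
    with open(path) as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            # int() tolerates the spaces around each comma-separated field
            a, b, label = (int(tok) for tok in line.split(','))
            edges.append((a, b, label))
    return edges


def edges_to_tracks(edges, num_detections):
    """Assign a track label to every detection id in 0..num_detections-1.

    Assumption: ids joined by a label-0 edge belong to the same track.
    Union-find merges them transitively (0-1 and 1-2 joined => 0, 1, 2 share a track).
    """
    parent = list(range(num_detections))

    def find(i):
        # follow parent pointers to the root, halving the path as we go
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for a, b, label in edges:
        if label == 0:  # 0 = "has an edge" in output.txt
            ra, rb = find(a), find(b)
            if ra != rb:
                parent[rb] = ra

    # relabel roots as consecutive track ids 0, 1, 2, ... in order of first appearance
    track_of_root = {}
    labels = []
    for i in range(num_detections):
        r = find(i)
        if r not in track_of_root:
            track_of_root[r] = len(track_of_root)
        labels.append(track_of_root[r])
    return labels
```

For a file containing only the three sample lines above, `edges_to_tracks(parse_output(path), 4)` returns `[0, 0, 0, 1]`: detections 0, 1 and 2 form one track and detection 3 another. Because the returned list is indexed like the first axis of `Dt`, `labels[i]` is the track of detection row `Dt[i]`.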

[1] Tang, Siyu, et al. "Multiple people tracking by lifted multicut and person re-identification." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017.