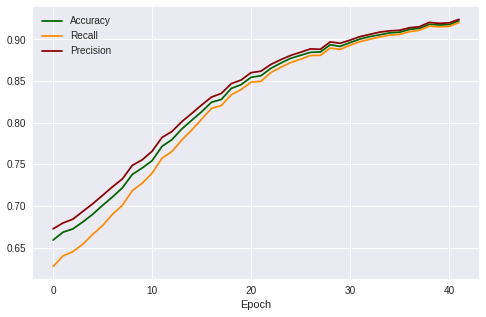

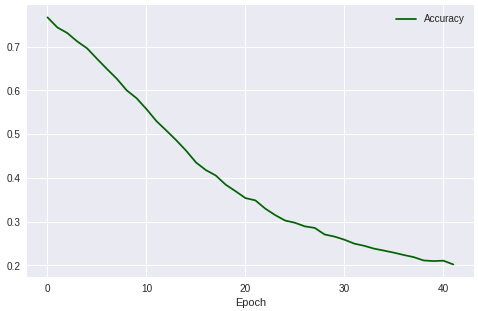

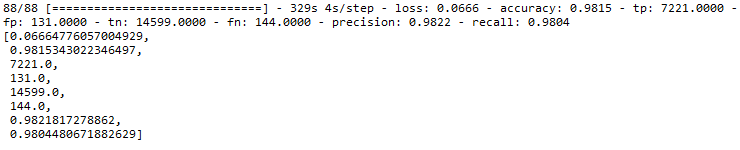

This repository proposes a neural network architecture for analyzing and classifying text. A pre-trained BERT transformer model is combined with an LSTM layer and global pooling layers to produce a model capable of analyzing text.
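The description above names three building blocks: a pre-trained BERT encoder, an LSTM layer, and global pooling layers. The sketch below is one possible way to wire them together, not the repository's actual code. It assumes PyTorch, a bidirectional LSTM, and a concatenation of global max and global average pooling; all of these are illustrative choices. The class names `ToyEncoder` and `BertLstmPoolClassifier`, the layer sizes, and the number of classes are hypothetical. A small embedding layer stands in for BERT so that the example runs without downloading weights.

```python
import torch
import torch.nn as nn


class ToyEncoder(nn.Module):
    """Hypothetical stand-in for BERT: maps token ids to per-token vectors."""

    def __init__(self, vocab_size, hidden_size):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, hidden_size)

    def forward(self, input_ids, attention_mask):
        # A real BERT wrapper would return the encoder's last hidden states here.
        return self.embed(input_ids)  # (batch, seq, hidden_size)


class BertLstmPoolClassifier(nn.Module):
    """Encoder -> BiLSTM -> global max + average pooling -> linear classifier."""

    def __init__(self, encoder, hidden_size, lstm_units=64, num_classes=2):
        super().__init__()
        self.encoder = encoder
        self.lstm = nn.LSTM(hidden_size, lstm_units,
                            batch_first=True, bidirectional=True)
        # Two pooled vectors, each of size 2 * lstm_units, are concatenated.
        self.classifier = nn.Linear(4 * lstm_units, num_classes)

    def forward(self, input_ids, attention_mask):
        states = self.encoder(input_ids, attention_mask)  # (batch, seq, hidden)
        seq, _ = self.lstm(states)                        # (batch, seq, 2 * units)
        mask = attention_mask.unsqueeze(-1).bool()        # (batch, seq, 1)
        # Global max pooling over time, ignoring padded positions.
        # Assumes every sequence has at least one real token.
        max_pool = seq.masked_fill(~mask, float("-inf")).max(dim=1).values
        # Global average pooling over the real (unmasked) positions only.
        weights = mask.float()
        avg_pool = (seq * weights).sum(dim=1) / weights.sum(dim=1).clamp(min=1.0)
        return self.classifier(torch.cat([max_pool, avg_pool], dim=-1))


torch.manual_seed(0)
model = BertLstmPoolClassifier(ToyEncoder(vocab_size=100, hidden_size=32),
                               hidden_size=32)
input_ids = torch.randint(0, 100, (3, 10))            # 3 sequences of 10 tokens
attention_mask = torch.ones(3, 10, dtype=torch.long)
attention_mask[0, 6:] = 0                             # first sequence is padded
logits = model(input_ids, attention_mask)             # one score per class
print(logits.shape)
```

To use an actual pre-trained model, `ToyEncoder` could be replaced by a thin wrapper around a Hugging Face `transformers` BERT model that returns its `last_hidden_state`, with `hidden_size` set to the model's hidden dimension (768 for `bert-base-uncased`). Note that this sketch feeds padded positions through the LSTM and only masks them at the pooling step; packing the sequences would be a stricter alternative.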


kaledhoshme123/Transformers-Text-Classification
