-

-

Notifications

You must be signed in to change notification settings - Fork 943

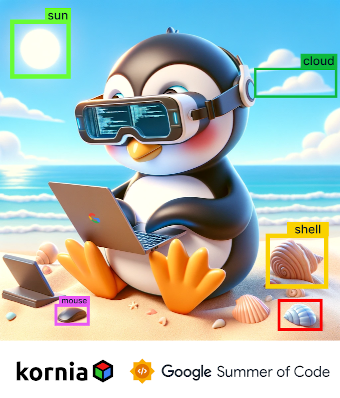

Kornia @ Google Summer of Code 2024

Welcome to the Google Summer of Code 2024 @ Kornia

👩💻 GO TO Ideas List

- What is Google Summer of Code 🌞 👩💻

- How to apply? 🧑🎓

- Project Ideas List 💡 💡

- Timeline 📆

- How do I pass the GSoC evaluations? 🚀

Google Summer of Code is a global, online program focused on bringing new contributors into open source software development. GSoC Contributors work with an open source organization on a 12+ week programming project under the guidance of mentors. https://summerofcode.withgoogle.com

- Are you 18 or older, a student or an open-source beginner, and in an eligible country? [ READ ] 🧑🎓 🗺️

- GO through the Project Ideas List below 📄

- Pre-Apply to this [ FORM ] AND join Kornia Slack [ JOIN ]

- IF you are contacted by a mentor THEN write the project proposal ✍️

- ELSE improve your skills and try next year 🔁

- SUBMIT your project proposal through the GSoC website!! (VERY IMPORTANT) ⚠️ ⚠️

- The project admins will balance the applications ⚖️ ⚖️

- IF you passed this process THEN Congratulations!! You're in!! 🎉 🎆

- GO TO How do I pass the GSoC evaluations? ➡️ 🚀

DISCLAIMERS:

- We won't consider any application from a student who hasn't been contacted by a mentor.

- Projects without a detailed schedule won't be considered. 📆

- GSoC is a full-time internship; do not expect to be contacted if you are already working.

- Project failure is not an option; we won't take that risk.

- The final application must be submitted on the GSoC site; otherwise you are out.

- Do not open useless pull requests to pad your git history; we know how to detect fake profiles.

- Pre-selected students may be asked for a screening interview.

- Google pays the student, not kornia.org.

- If you are not notified by Google, you are not in.

- Read the GSoC student GUIDELINES & FAQ

- Expand low-level Kornia Rust library

- Support multi-backend using Keras 3.0

- Interactive Documentation and Examples

- Update Face and People Detection models

- Models for Monocular Depth Estimation and Optical Flow

- Object Detection and Instance Segmentation support

- Add Point Cloud modality support and augmentations

- Monocular Visual Odometry

** Project ideas are ordered by soft priority.

Expand low-level Kornia Rust library

- Description: The Kornia library has long served as a valuable tool for research and prototyping. However, when it comes to creating applications that are both real-time and secure, especially in sectors like agricultural technology and space, Python often falls short. To address this, we started a re-implementation written in Rust (via ndarray) – kornia-rs. This version aims to enhance safety in production environments and offer greater framework neutrality. Rust's gradual expansion into various domains, including the AI and ML sectors, makes this a timely development.

- Expected Outcomes:

- Enhance the Kornia Rust library by adding essential low-level features tailored for computer vision and AI professionals, primarily focusing on the Rust ndarray crate for multidimensional/tensor operations.

- Improve the Image API and io interfaces to support more image and video formats and camera streaming pipelines.

- Weekly deliverables of pull requests including small functionalities with unit tests, doctests, and benchmarks (including exploration of the Candle tensor library from Hugging Face).

- Sets of small examples, including integration with Rerun.

- Python bindings of the implemented functionality via PyO3

- Optional, integration with sophus-rs to support camera calibration routines.

- Resources: Rust kornia; rust ndarray; Kornia IO.

- Skills Required:

- Knowledge of Rust and the PyO3 bindings library

- Knowledge of the ndarray crate for multidimensional array manipulation (and tokio for parallelization)

- Knowledge of low-level computer vision, image processing, and machine learning.

- Optional, experience with SIMD instructions.

- Optional, experience with image codecs and camera drivers

- Possible Mentors: Edgar Riba, Farm-ng, Inc; Hauke S. / S. Lovegrove.

- Difficulty: Hard

- Duration: 350 hours

Support multi-backend using Keras 3.0

- Description: Since its creation, Kornia has only had PyTorch as its backend. Keras 3.0 opens the possibility of also supporting JAX and TensorFlow. This project aims to check the feasibility of building Kornia on top of Keras by: 1) migrating basic functions such as color space conversion, image resize, and image crop, among others; 2) creating a test suite and ensuring functionality in all frameworks; 3) [Optional] migrating a more complex neural network model.

- Expected Outcomes:

- A Keras-based Kornia side project, well documented and with a test suite for all the backends

- A document and/or YouTube video showing how to migrate the main Kornia to a Keras-based project

- Resources: Project POC; Grayscale implementation v0; Test suite example v0; Test suite v0

- Skills Required:

- Python (advanced) - to write Keras-based Kornia functions.

- Computer vision (intermediate) - to understand the algorithms and be able to rewrite them on top of Keras

- PyTorch and Keras (intermediate/advanced) - to migrate the current Kornia (PyTorch-based) to Keras 3.0

- Pytest/testing (intermediate) - to set up a reliable test suite. A good test base is essential for a reliable project.

- Possible Mentors: Edgar Riba, Farm-ng; Eduard Trulls; Dmytro Mishkin; Jian Shi; João Amorim.

- Difficulty: Hard

- Duration: 350 hours

Interactive Documentation and Examples

- Description: Kornia has more than 700 ops, and we would like to improve the documentation for the computer vision and artificial intelligence community. As a computer vision-oriented library, Kornia benefits from an interactive interface that lets users quickly understand the outcome of each transformation and neural network. To do this, we will need to rewrite the description of some ops, improve the general organization of the website, and add new features to the documentation and tutorials website, such as dynamic examples (and plots) of op results and GitHub Discussions integration. This project involves: 1) improving the general documentation and tutorial texts and organization; 2) creating Gradio-based demos for the image transformation methods; 3) creating Gradio-based demos for the neural network models in the library; 4) deploying the Gradio-based demos on Hugging Face Spaces, then embedding them into the documentation and tutorials website; 5) [Optional] adding dynamic/interactive plot support to the Sphinx documentation using the Plotly API; 6) [Optional] integrating an additional search assistant plugin into the Kornia documentation, backed by Bard/Gemini.

- Expected Outcomes:

- A series of patches improving documentation writing, organization, and UI/UX for the kornia repository.

- Add support for GitHub Discussions on the documentation website.

- Add support for dynamic examples or plots instead of static images on the documentation.

- Resources: Kornia documentation; Kornia tutorials; Kornia HF spaces; Dynamic docs issue; gh-docs integration issue.

- Skills Required:

- Python (intermediate) - to use Kornia and work with Sphinx.

- Web development (basics) - HTML, CSS, JS - to improve the documentation website.

- Computer vision (basics) - to improve the organization of the documentation, as well as its content

- Writing (intermediate) - to write well and produce engaging material, with the ability to construct grammatically correct, easy-to-read sentences and choose the appropriate wording for each concept.

- Possible Mentors: Edgar Riba, Farm-ng; Christie Jacob; Dmytro Mishkin; Jian Shi; João Amorim; Luis Ferraz.

- Difficulty: Easy

- Duration: 175 hours

Update Face and People Detection models

- Description: Kornia already has an efficient face detection API, but the model it uses is outdated and could be updated to improve performance and accuracy. Kornia also lacks an easy-to-use API for people detection, and we would like to select a model for this task. The model should be as lightweight as possible while still providing good accuracy. This project involves: 1) researching state-of-the-art models for people detection and face detection that are lightweight with good accuracy, including task-specific models; 2) implementing the face detection model and updating the existing high-level face detection API; 3) implementing the people detection model; 4) implementing an easy-to-use high-level API for people detection; 5) [Optional] creating and providing benchmarks for these high-level APIs.

- Expected Outcomes:

- Research and implement/adapt a newer model for face detection, providing better accuracy and/or better performance.

- Update the tutorial and documentation example to use the new face detection API, and add a new tutorial/documentation example for the people detection API.

- [Optional] A tutorial showing how to finetune the people and/or face detection models.

- Resources: Kornia; Face detection tutorial; Face detection example.

- Skills Required:

- Python + PyTorch (intermediate) - to write the models and the high-level APIs

- Computer vision and machine learning (intermediate) - to research and implement the models.

- Possible Mentors: Christie Jacob; João Amorim.

- Difficulty: Intermediate

- Duration: 175 hours
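Whichever models end up being selected, a high-level detection API typically finishes with the same post-processing step: drop low-confidence boxes, then apply non-maximum suppression (NMS) so that each face or person is reported only once. A minimal plain-Python sketch of that step follows. `Detection`, `iou`, `postprocess` and the threshold defaults are hypothetical names chosen for illustration, not Kornia's actual API.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    # Hypothetical container: axis-aligned box (x1, y1, x2, y2) in pixels plus a confidence score.
    x1: float
    y1: float
    x2: float
    y2: float
    score: float


def iou(a: Detection, b: Detection) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a.x1, b.x1), max(a.y1, b.y1)
    ix2, iy2 = min(a.x2, b.x2), min(a.y2, b.y2)
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(dets, score_threshold=0.5, nms_threshold=0.3):
    """Drop low-confidence boxes, then apply greedy non-maximum suppression."""
    candidates = sorted(
        (d for d in dets if d.score >= score_threshold),
        key=lambda d: d.score,
        reverse=True,
    )
    kept = []
    for d in candidates:
        # Keep a box only if it does not overlap too much with any higher-scoring kept box.
        if all(iou(d, k) <= nms_threshold for k in kept):
            kept.append(d)
    return kept
```

With these defaults, two heavily overlapping boxes around the same face collapse to the higher-scoring one, while a box elsewhere in the image survives.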

Models for Monocular Depth Estimation and Optical Flow

- Description: Kornia is a powerful library that provides state-of-the-art methods for numerous computer vision tasks. This project aims to broaden its application by integrating pre-trained models for monocular depth estimation and optical flow, which are crucial for understanding scene geometry and motion in various applications such as autonomous driving, augmented reality, and video analysis. This project involves: 1) Researching and selecting state-of-the-art models for monocular depth estimation and optical flow that can be integrated into Kornia; 2) Implementing these models in PyTorch, ensuring they are compatible with Kornia's design and architecture; 3) Developing a comprehensive suite of tests to verify the accuracy and performance of the implemented models across various datasets; 4) Creating detailed documentation and tutorials to assist users in utilizing these new features effectively.

- Expected Outcomes:

- Integration of at least two state-of-the-art models for monocular depth estimation and optical flow into the Kornia library.

- A robust set of tests ensuring the models' performance and reliability.

- Extensive documentation and tutorials for users to easily adopt these new features.

- Demonstration of the models' capabilities through real-world applications or datasets.

- Resources: Kornia documentation; Kornia tutorials; PyTorch documentation; research papers on the latest monocular depth estimation and optical flow models.

- Skills Required:

- Python (basic) - for implementing and integrating models into the Kornia library.

- PyTorch (basic) - for model development and understanding of deep learning workflows.

- Computer vision (advanced) - for a deep understanding of depth estimation and optical flow algorithms.

- Documentation and tutorial creation (intermediate) - for creating clear, instructive content for users.

- Possible Mentors: Dmytro Mishkin; Eduard Trulls.

- Difficulty: Intermediate

- Duration: 175 hours
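The test suite described above needs accuracy numbers. Depth is commonly scored with absolute relative error, RMSE and the δ < 1.25 threshold accuracy, and optical flow with the average endpoint error (EPE). Below is a minimal pure-Python sketch that assumes depths and flow vectors have already been flattened into aligned lists. The function names are hypothetical, and a real Kornia test would operate on tensors.

```python
import math


def depth_metrics(pred, gt):
    """Common monocular-depth metrics over flat lists of positive depths (same units)."""
    assert len(pred) == len(gt) and gt, "pred and gt must be non-empty and aligned"
    n = len(gt)
    abs_rel = sum(abs(p - g) / g for p, g in zip(pred, gt)) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / n)
    # delta_1: fraction of pixels whose prediction is within a factor of 1.25 of the ground truth.
    delta1 = sum(max(p / g, g / p) < 1.25 for p, g in zip(pred, gt)) / n
    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}


def endpoint_error(pred_flow, gt_flow):
    """Average endpoint error (EPE) between lists of (u, v) flow vectors, in pixels."""
    assert len(pred_flow) == len(gt_flow) and gt_flow, "flows must be non-empty and aligned"
    return sum(
        math.hypot(pu - gu, pv - gv) for (pu, pv), (gu, gv) in zip(pred_flow, gt_flow)
    ) / len(gt_flow)
```

Monocular models often predict depth only up to scale. Evaluations therefore commonly rescale predictions (for example by the ratio of medians) before computing these metrics. That step is left out of the sketch.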

Object Detection and Instance Segmentation support

- Description: Object detection and instance segmentation are fundamental tasks in computer vision. Instead of batched tensors, these tasks take a list of tensors as input. This project first adds support for list-based image augmentation pipelines to Kornia, then implements example training and inference code for object detection models (e.g. R-CNN) and instance segmentation models (e.g. Mask R-CNN). Optionally, a test-time augmentation container can also be included.

- Expected Outcomes:

- A series of patches to the core project implementing list-based image augmentation, along with training and inference pipelines, well documented for ease of use.

- Update the Kornia augmentation and training tutorials with these new features.

- Resources: Kornia; Implementation init.

- Skills Required:

- Computer vision and deep learning (intermediate)

- Possible Mentors: Jian Shi; João Amorim.

- Difficulty: Intermediate

- Duration: 175 hours
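Here is why the pipeline has to be list-based. Images in a detection batch often have different sizes, so each augmentation must be decided and applied per sample, and box coordinates must be updated using that sample's own dimensions. The framework-free sketch below does this for a random horizontal flip. `Sample`, `hflip` and `random_hflip_list` are hypothetical names, and a real Kornia implementation would operate on lists of tensors rather than nested Python lists.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Sample:
    # Image as a list of rows (H x W); sizes may differ between samples,
    # which is why samples cannot be stacked into one batched tensor.
    image: list
    # Boxes as (x1, y1, x2, y2) in continuous pixel coordinates, 0 <= x <= W.
    boxes: list = field(default_factory=list)


def hflip(sample: Sample) -> Sample:
    """Flip one image horizontally and mirror its boxes using that image's own width."""
    width = len(sample.image[0])
    image = [list(reversed(row)) for row in sample.image]
    boxes = [(width - x2, y1, width - x1, y2) for (x1, y1, x2, y2) in sample.boxes]
    return Sample(image, boxes)


def random_hflip_list(samples, p=0.5, rng=random):
    """List-based augmentation: decide and apply the flip independently for each sample."""
    return [hflip(s) if rng.random() < p else s for s in samples]
```

Passing a seeded `random.Random` instance as `rng` makes the per-sample decisions reproducible, which helps when writing tests for such a pipeline.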

Add Point Cloud modality support and augmentations

- Description: Point clouds are one of the dominant data modalities in 3D. We would like to have further support for point cloud augmentation and model training/inference abilities. Unlike image augmentations, point cloud augmentations include scene-based methods that transform the whole point cloud and object-based methods that transform individual objects according to the provided ground-truth information. Before that, a proper point cloud data structure needs to be designed to fit most scenarios.

- Expected Outcomes:

- Implement a point cloud data structure, and the scene-based and object-based transformation/augmentation methods. These augmentations should be plug-and-play with classical point cloud deep learning models.

- A tutorial and/or YouTube video showing the augmentation methods.

- Skills Required: Computer vision; Deep learning; Point cloud deep learning models; Python.

- Possible Mentors: Edgar Riba, Farm-ng; Christie Jacob; Dmytro Mishkin.

- Difficulty: Hard

- Duration: 350 hours
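The two augmentation families differ in scope. A scene-based augmentation applies one transform to every point and every ground-truth box. An object-based one moves only the points that fall inside a particular box. The plain-Python sketch below rests on an assumed minimal data structure: points as (x, y, z) tuples, and boxes as a 7-parameter (center, size, yaw about z) tuple. `PointCloud` and the helper functions are hypothetical and only illustrate the design question the project has to answer.

```python
import math
from dataclasses import dataclass, field


@dataclass
class PointCloud:
    # Assumed layout: N points as (x, y, z) tuples.
    points: list
    # Assumed box layout: (cx, cy, cz, dx, dy, dz, yaw), i.e. center, size, heading about z.
    boxes: list = field(default_factory=list)


def rotate_z(p, angle):
    """Rotate a 3D point about the z-axis through the origin."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p
    return (c * x - s * y, s * x + c * y, z)


def scene_rotate(pc: PointCloud, angle: float) -> PointCloud:
    """Scene-based augmentation: rotate every point and every box together."""
    points = [rotate_z(p, angle) for p in pc.points]
    boxes = []
    for cx, cy, cz, dx, dy, dz, yaw in pc.boxes:
        ncx, ncy, ncz = rotate_z((cx, cy, cz), angle)
        boxes.append((ncx, ncy, ncz, dx, dy, dz, yaw + angle))
    return PointCloud(points, boxes)


def in_box(p, box):
    """True if point p lies inside the yaw-rotated box."""
    cx, cy, cz, dx, dy, dz, yaw = box
    # Express the point in the box frame by undoing the box translation and yaw.
    lx, ly, lz = rotate_z((p[0] - cx, p[1] - cy, p[2] - cz), -yaw)
    return abs(lx) <= dx / 2 and abs(ly) <= dy / 2 and abs(lz) <= dz / 2


def object_translate(pc: PointCloud, index: int, offset) -> PointCloud:
    """Object-based augmentation: move only the points inside one ground-truth box."""
    box = pc.boxes[index]
    ox, oy, oz = offset
    points = [
        (x + ox, y + oy, z + oz) if in_box((x, y, z), box) else (x, y, z)
        for (x, y, z) in pc.points
    ]
    cx, cy, cz, dx, dy, dz, yaw = box
    boxes = list(pc.boxes)
    boxes[index] = (cx + ox, cy + oy, cz + oz, dx, dy, dz, yaw)
    return PointCloud(points, boxes)
```

Both functions return a new `PointCloud` and leave the input untouched. That makes it easy to chain scene-based and object-based steps in one pipeline.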

Monocular Visual Odometry

- Description: This project aims to develop a streamlined monocular visual odometry system leveraging Kornia's feature detection and matching API (kornia.feature), pose representations (kornia.geometry.liegroups), camera operations (kornia.geometry.camera), and non-linear optimization module (kornia.optim). As kornia.optim is currently under active development by our core developers and may not yet be present in the main Kornia repository, the participant will have a unique opportunity to contribute to its development and integration. The participant may use concepts from ORB-SLAM for reference.

- Expected Outcomes:

- Research and implement a functional visual odometry method capable of processing the KITTI dataset.

- Visualization of odometry results with Rerun for a clear and interactive presentation of outcomes.

- Contribution to the development of the kornia.optim module with a non-linear optimization method, e.g. Gauss-Newton or Levenberg-Marquardt, enhancing Kornia's toolkit for computer vision applications and camera calibration.

- Skills Required:

- Understanding of SLAM (Simultaneous Localization and Mapping) principles.

- Proficiency in multi-view geometry concepts.

- Experience with non-linear optimization techniques.

- Familiarity with PyTorch and the Kornia library is advantageous but not required.

- Possible Mentors: Christie Jacob; Dmytro Mishkin; Jian Shi.

- Difficulty: Hard

- Duration: 350 hours
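For the optimization contribution, the damping logic that distinguishes Levenberg-Marquardt from plain Gauss-Newton fits in a few lines. To stay self-contained, the sketch below fits a two-parameter curve y ≈ a·exp(b·x) in pure Python, using Marquardt's diagonal scaling of the damping term. It illustrates the algorithm only. It is not the interface of kornia.optim (which is still under development), and `levenberg_marquardt` is a hypothetical name. In visual odometry, the same loop would run over reprojection residuals with pose parameters.

```python
import math


def levenberg_marquardt(xs, ys, a, b, iters=100, lam=1e-3):
    """Fit y ~ a * exp(b * x) by Levenberg-Marquardt with Marquardt's diagonal damping."""

    def residuals(a, b):
        return [a * math.exp(b * x) - y for x, y in zip(xs, ys)]

    def cost(r):
        return 0.5 * sum(v * v for v in r)

    r = residuals(a, b)
    for _ in range(iters):
        # Jacobian rows: dr_i/da = exp(b*x_i), dr_i/db = a*x_i*exp(b*x_i).
        jac = [(math.exp(b * x), a * x * math.exp(b * x)) for x in xs]
        # Gauss-Newton normal equations: H = J^T J and g = J^T r.
        h11 = sum(ja * ja for ja, _ in jac)
        h12 = sum(ja * jb for ja, jb in jac)
        h22 = sum(jb * jb for _, jb in jac)
        g1 = sum(ja * ri for (ja, _), ri in zip(jac, r))
        g2 = sum(jb * ri for (_, jb), ri in zip(jac, r))
        # Damped 2x2 system (H + lam * diag(H)) [da, db] = -g, solved by Cramer's rule.
        m11, m22 = h11 * (1.0 + lam), h22 * (1.0 + lam)
        det = m11 * m22 - h12 * h12
        da = (-g1 * m22 + h12 * g2) / det
        db = (-g2 * m11 + h12 * g1) / det
        r_new = residuals(a + da, b + db)
        if cost(r_new) < cost(r):
            # Step reduced the cost: accept it and behave more like Gauss-Newton.
            a, b, r = a + da, b + db, r_new
            lam /= 10.0
        else:
            # Step increased the cost: reject it and behave more like gradient descent.
            lam *= 10.0
        if abs(da) + abs(db) < 1e-12:
            break
    return a, b
```

The accept/reject rule is the main design choice. A small damping factor gives fast Gauss-Newton steps near the solution, while a growing factor shortens steps whenever the linearization is poor.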

The program duration is ~2 months; we will be flexible, but there are some major RULES.

- Google pays you IF AND ONLY IF you pass the evaluations

- The mentors will evaluate you based on your performance during the project.

The Full Program Timeline: https://summerofcode.withgoogle.com/programs/2024

- Org Applications Open - [ January 22, 2024 ] 🎬 ✔️

- Org Application Deadline - [ February 7, 2024 ] 📅

- Org Notification Date - [ February 21, 2024 ] 🙏

The Kornia.org team