
Error log in pod kubeapps-apprepository-controller #577

Comments

@obeyler, are you able to get apprepositories using the CLI (…)?

@prydonius `kubectl get crds`

    $ kubectl get all -n kubeapps
    NAME   TYPE   CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    NAME   DESIRED   SUCCESSFUL   AGE
    NAME   DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    NAME   DESIRED   CURRENT   READY   AGE

I've activated OIDC on the kube-apiserver. Maybe that's the root cause. I'll remove it to check whether the problem persists without it, and I'll keep you posted.

It looks like the CustomResourceDefinitions feature got disabled in your cluster. What about (…)?
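A minimal sketch, assuming a POSIX shell, of how to check from the CLI whether the CRD named in the controller log (`apprepositories.kubeapps.com`) is registered. The helper name `has_apprepository_crd` is hypothetical; it reads the output of `kubectl get crds -o name` on stdin and matches only the resource-name suffix, so it does not depend on the exact prefix a given kubectl version prints.

```shell
#!/bin/sh
# Hypothetical helper: exits 0 if the AppRepository CRD appears in
# `kubectl get crds -o name` output read from stdin, exits 1 otherwise.
# Only the "/apprepositories.kubeapps.com" suffix is matched, because the
# prefix kubectl prints for CRDs has varied between versions.
has_apprepository_crd() {
  grep -q '/apprepositories\.kubeapps\.com$'
}

# Example against a live cluster (not executed here):
#   kubectl get crds -o name | has_apprepository_crd && echo "CRD registered"
#   kubectl get apprepositories --all-namespaces
```

If the helper fails, the CRD is not registered, which would be consistent with the `Failed to list *v1alpha1.AppRepository` error in the controller log.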

Here is the result:

I deactivated OIDC, without any success :-(

I see that the Kubeapps MongoDB deployment doesn't manage to scale up its pod:

|

It seems that there are two issues here:

@andresmgot, do you know which property I should use to enable RunAsUser?

It seems that this is controlled by a flag on the Kubernetes API server: https://kubernetes.io/docs/reference/access-authn-authz/admission-controllers/#securitycontextdeny

In fact, the cluster needs PodSecurityPolicy added to the `--enable-admission-plugins` option of the kube-apiserver in order to use the security context.
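As a sketch, assuming a POSIX shell, the hypothetical helper `plugin_enabled` below checks whether a comma-separated `--enable-admission-plugins` value, as copied from the kube-apiserver command line, contains a given plugin, for example `PodSecurityPolicy`, or `SecurityContextDeny` from the admission-controller page linked earlier in the thread. The example plugin list is illustrative, not taken from this cluster.

```shell
#!/bin/sh
# Hypothetical helper: plugin_enabled LIST NAME
# Exits 0 if NAME is exactly one entry of the comma-separated LIST, e.g. the
# value passed to kube-apiserver's --enable-admission-plugins flag.
plugin_enabled() {
  # Wrap both sides in commas so "Pod" does not match "PodSecurityPolicy".
  case ",$1," in
    *",$2,"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example (illustrative flag value, not executed here):
#   plugins="NamespaceLifecycle,ServiceAccount,PodSecurityPolicy"
#   plugin_enabled "$plugins" PodSecurityPolicy && echo "PodSecurityPolicy enabled"
#   plugin_enabled "$plugins" SecurityContextDeny && echo "SecurityContextDeny enabled"
```

Matching whole comma-delimited entries, rather than a plain substring search, avoids false positives between plugins whose names share a prefix.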

Anyway, the …

With `--set mongodb.securityContext.enabled=false` I get this kind of error:

I see, so it seems to be this issue: https://github.com/bitnami/bitnami-docker-mongodb/issues/108. IPv6 is enabled by default in the MongoDB image; if your cluster doesn't support IPv6, you need to disable it. Again, that should be doable with another flag: …

`--set mongodb.mongodbEnableIPv6=false` has no effect.

In fact, this flag exists only in some versions of the MongoDB chart. Because no version is specified for the dependency, the Kubeapps deployment takes the first MongoDB chart version it finds when I run the command in Kubeapps/chart/kubeapps. So even though the Kubeapps version is fixed, the deployment can work or fail depending on how the MongoDB chart evolves. You should pin the dependency version.

@obeyler, if you're installing the chart from this Git repo rather than from the Bitnami charts repo, you will need to run …


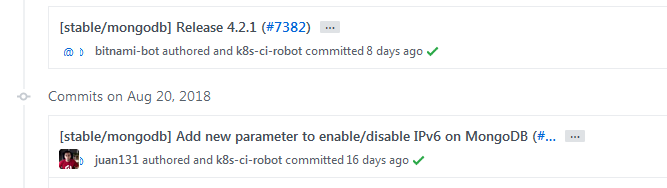

@prydonius @andresmgot And the option to disable IPv6 was added to the MongoDB chart in 4.2.2.

Hi @obeyler, it's true that the flag …

Thanks for everything, @andresmgot!

Hi @obeyler, we just updated the MongoDB chart. Could you please try the latest version of the MongoDB chart (4.2.3)?

@juan131, are you sure it's `--set mongodb.mongodbEnableIPv6=false`?

Hi @obeyler, it's "false" for sure. Note that the boolean is converted into a string. Regarding the commands I suggested, if you're using Kubeapps, you should use: …

OK, thanks, I'll try.

@juan131, can I do the same for Monocular?

@juan31 Here are the logs from the pods:

@obeyler, as far as I can see, Monocular pins a specific version of MongoDB in its requirements.yaml: https://github.com/helm/monocular/blob/master/deployment/monocular/requirements.yaml#L3, so that should be updated.

@obeyler, you are right, we should update the chart in …

No problem, @andresmgot, I'm happy to test it and find issues :-)

@obeyler, you should be able to install the new chart now. You can specify the flag …

@andresmgot

@obeyler, it's not critical that all the … In any case, are you seeing the …?

Nothing relevant in tiller proxy.

@prydonius @andresmgot

This is strange. @obeyler, are there any errors in the JS console? And can you show us the response of any failing calls in the Network tab of your browser's inspector?

@prydonius I've also installed the new chart 0.4.0 from scratch, with the same result.

@prydonius @andresmgot If you want, I can set up a Zoom call to share my screen with you and talk about it.

That's weird. From that view … Apart from that, does the …?

@prydonius @andresmgot

We released a new MongoDB chart version (…). In the same way, a new Kubeapps chart version (…) … Please feel free to ping us if you find any issues with the new approach.

Kubernetes 1.11.2

Hello,

Do you know what could be causing this problem?

E0831 16:04:40.671935 1 reflector.go:205] github.com/kubeapps/kubeapps/cmd/apprepository-controller/pkg/client/informers/externalversions/factory.go:74: Failed to list *v1alpha1.AppRepository: the server could not find the requested resource (get apprepositories.kubeapps.com)

The UI doesn't show any charts anymore, and the home page only shows "loading".
