
Application crash due to k8s 1.9.x enabling kernel memory accounting by default #61937

Comments

The following test case reproduces this error. First, ... then release 99 memory cgroups so that they can be reused by the next creation. Second, create a new pod on this node; when we then recreate the 100 memory cgroup directories, 4 of them fail. Third, delete the test pod, confirm that all of the test pod's containers have been destroyed, and then recreate the 100 memory cgroup directories. The incorrect result is that all memory cgroup directories created by the pod are leaked: the memory cgroup count has already dropped by 3, but the space they occupy is not released.
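A minimal Python sketch of the "create N memory cgroup directories and count failures" step, assuming a cgroup v1 memory hierarchy mounted at /sys/fs/cgroup/memory and root privileges. The helper names and the `probe-N` directory names are hypothetical, not the commands used in the report. It counts `mkdir` calls that fail with ENOSPC, which is how exhausted memory cgroup IDs surface ("no space left on device").

```python
import errno
import os
from typing import List, Tuple


def create_probe_cgroups(base: str, count: int, prefix: str = "probe") -> Tuple[List[str], int]:
    """Create `count` child directories under `base`.

    Returns (paths that were created, number of mkdir calls that failed with ENOSPC).
    Under a cgroup v1 memory mount each directory is a new memory cgroup, and
    mkdir fails with ENOSPC once no memory cgroup ID is available.
    """
    created: List[str] = []
    failed = 0
    for i in range(count):
        path = os.path.join(base, f"{prefix}-{i}")
        try:
            os.mkdir(path)
        except OSError as exc:
            if exc.errno != errno.ENOSPC:
                raise  # permission problems, existing directories, etc. are real errors
            failed += 1
        else:
            created.append(path)
    return created, failed


def remove_probe_cgroups(paths: List[str]) -> None:
    """Remove the probe directories; on an affected kernel their IDs may stay occupied."""
    for path in paths:
        os.rmdir(path)
```

For example, calling `create_probe_cgroups("/sys/fs/cgroup/memory", 100)` again after the test pod's containers are gone and still seeing a non-zero failure count would match the leak described above.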

/sig container

@kubernetes/sig-cluster-container-bugs

This bug seems to be related: opencontainers/runc#1725. Which Docker version are you using?

@feellifexp We are using Docker 1.13.1.

There is indeed a kernel memory leak in kernel releases up to 4.0. See moby/moby#6479 (comment) for details.

@feellifexp The kernel log also shows this message after upgrading to k8s 1.9.x.

I want to know why k8s 1.9 deleted this line.

I am not an expert here, but this seems to be a change from runc, and it was brought into k8s in v1.8.

/sig node

I guess opencontainers/runc#1350 is the one you are looking for; it is actually an upstream change. /cc @hqhq

Thanks @kevin-wangzefeng, the runc upstream changed and now I know why. The change is hqhq/runc@fe898e7. However, enabling kernel memory accounting on the root cgroup by default also enables it on every child cgroup, which causes a memory cgroup leak on kernel 3.10.0. @hqhq, is there any way to let us enable or disable kernel memory accounting ourselves, or at least to log a warning when the kernel is older than 4.0?
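The warning requested here could look roughly like the following Python sketch. It is hypothetical (not runc or kubelet code) and only compares the major.minor part of the kernel release string with 4.0, the threshold used in this thread; distribution kernels with backported fixes are not recognized.

```python
import platform
import re
from typing import Optional, Tuple

# Threshold from this thread: kernel memory accounting is considered unstable before 4.0.
KMEM_SAFE_KERNEL = (4, 0)


def kernel_major_minor(release: str) -> Tuple[int, int]:
    """Extract (major, minor) from a release string such as '3.10.0-514.16.1.el7.x86_64'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kmem_warning(release: Optional[str] = None) -> Optional[str]:
    """Return a warning string if the kernel is older than 4.0, otherwise None."""
    if release is None:
        release = platform.release()  # same string that `uname -r` prints
    if kernel_major_minor(release) < KMEM_SAFE_KERNEL:
        return (f"kernel {release} is older than 4.0: enabling kernel memory "
                "accounting by default may leak memory cgroups")
    return None
```

A caller would log `kmem_warning()` at startup when it returns a string; the check is deliberately coarse, which is the "different things for different kernel versions" trade-off discussed below.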

@wzhx78 The root cause is the kernel memory limit bugs in 3.10. If you don't want to use the kernel memory limit because it isn't stable on your kernel, the best solution is to disable kernel memory limits in your kernel. I can't think of a way to work around this on the runc side without causing issues like opencontainers/runc#1083 and opencontainers/runc#1347, unless we add some ugly logic that does different things for different kernel versions, and I'm afraid that won't be an option.
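One way to disable kernel memory accounting in the kernel is the `cgroup.memory=nokmem` boot parameter, which is also asked about in this thread. Whether a particular 3.10 distribution kernel honors it depends on that kernel's build, so treat support as an assumption to verify. A minimal Python sketch (hypothetical helper names) that checks whether the running kernel was booted with it:

```python
def kmem_disabled(cmdline: str) -> bool:
    """Return True if the boot command line contains cgroup.memory=...nokmem...

    The option value is a comma-separated list, e.g. cgroup.memory=nosocket,nokmem.
    """
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        if key == "cgroup.memory" and sep and "nokmem" in value.split(","):
            return True
    return False


def read_cmdline(path: str = "/proc/cmdline") -> str:
    """Read the command line the running kernel was booted with."""
    with open(path) as f:
        return f.read()
```

On a node, `kmem_disabled(read_cmdline())` only reports what was passed at boot; it does not prove that the kernel acted on the option.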

@hqhq It is exactly the kernel 3.10 bug, but it took us a long time to find it and it caused big trouble in our production environment, since all we did was upgrade k8s from 1.6.x to 1.9.x. In k8s 1.6.x, kernel memory accounting was not enabled by default because runc had an if condition, but from 1.9.x on, runc enables it by default. We don't want others who upgrade to k8s 1.9.x to run into the same trouble. runc is a popular container solution, and we think it needs to take different kernel versions into account; at the very least, runc could report an error message in the kubelet log when the kernel is not suitable for enabling kernel memory accounting by default.

@hqhq Any comments?

Maybe you can add an option like

v1.8 and all later versions will be affected by this.

@warmchang Yes. Is it reasonable to add a --disable-kmem-limit flag to k8s? Can anyone discuss this with us?

I can't find a config option named disable-kmem-limit in k8s. How do I add this flag? @wzhx78

k8s doesn't support it yet. We need to discuss with the community whether it is reasonable to add this flag to the kubelet start options.

Not only 1.9 but also 1.10 and master have the same issue. This is a very serious issue for production, and I think providing a parameter to disable the kmem limit is a good idea. /cc @dchen1107 @thockin Any comments on this? Thanks.

@thockin @dchen1107 Any comments on this?

@dashpole any reason to update


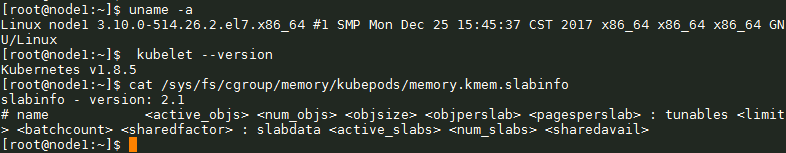

This issue still reproduces in the following environment without the kernel parameter. xref: docker/for-linux#841 /remove-lifecycle rotten

/reopen

@gjkim42: Reopened this issue. In response to this:

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.

@wzhx78: This issue is currently awaiting triage. If a SIG or subproject determines this is a relevant issue, they will accept it by applying the …

CentOS 7.6 went EOL in 2019. Since the main fix for this problem landed in CentOS 7.8, please check with a more recent version.

Also reproduced in the following environment.

There may be other bugs. |

This problem is caused by the OS and Docker, so you should upgrade CentOS and Docker.

@JoshuaAndrew

@gjkim42

Actually, I am using containerd directly (not through Docker).

Also reproduced in the following environment.

I am not sure what was fixed in CentOS 7.8, but it didn't solve the problem. According to docker-archive/engine@8486ea1,

We have not seen the issue since CentOS 7.7. We set the following kernel options:

Our current environment is as follows:

Thanks @chilicat. However, I am wondering whether it is safe to set the kernel parameter

I misread your post, @gjkim42. Sorry, please ignore it.

Is sig-node aware of this issue?

CentOS 7 ships a much older kernel than the one we test CI on in SIG Node/upstream Kubernetes (currently the 5.4.x series). People are welcome to experiment with kernel parameters and share workarounds for their own distributions and deployments, but any support will be best effort.

I strongly suggest employing the workaround described at #61937 (comment). Also, since runc v1.0.0-rc94, runc never sets kernel memory, so upgrading to runc >= v1.0.0-rc94 should solve the problem.
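To check whether a node already runs a runc release that never sets kernel memory, the version string can be compared against v1.0.0-rc94. A minimal Python sketch with hypothetical helper names; it assumes the `runc --version` output contains a version like `1.0.0-rc93` or `1.1.4`, and treats a final release as newer than every release candidate of the same version.

```python
import re

# Per the comment above: from v1.0.0-rc94 on, runc never sets kernel memory.
FIRST_FIXED = (1, 0, 0, 94)


def runc_version_key(version_output: str) -> tuple:
    """Turn e.g. 'runc version 1.0.0-rc93' into a sortable tuple (1, 0, 0, 93)."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)(?:-rc(\d+))?", version_output)
    if match is None:
        raise ValueError(f"no runc version found in {version_output!r}")
    major, minor, patch = (int(match.group(i)) for i in (1, 2, 3))
    # A final release (no -rcN suffix) sorts after every rc of the same version.
    rc = float("inf") if match.group(4) is None else int(match.group(4))
    return (major, minor, patch, rc)


def runc_never_sets_kmem(version_output: str) -> bool:
    """True if the reported runc version is v1.0.0-rc94 or later."""
    return runc_version_key(version_output) >= FIRST_FIXED
```

The first line of `runc --version` (for example captured with `subprocess.run(["runc", "--version"], capture_output=True, text=True).stdout`) can be passed in directly, since the regex picks the first version it finds.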

Kubernetes does not use issues on this repo for support requests. If you have a question on how to use Kubernetes or to debug a specific issue, please visit our forums. /remove-kind bug Extra rationale: this issue affects CentOS 7, which is much older than what we test in CI, and a workaround exists (see runc v1.0.0-rc94).

@fromanirh: Closing this issue. In response to this:

Yes, I also want to know whether cgroup.memory=nokmem can cause bad results, and how kmem accounting in cgroups is designed.

When we upgraded k8s from 1.6.4 to 1.9.0, after a few days the production environment reported that machines hung and JVMs in containers crashed randomly. We found that memory cgroup css IDs were not released; once the cgroup css ID exceeds 65535, the machine hangs and we must restart it.

We found that runc/libcontainers/memory.go in k8s 1.9.0 had deleted the if condition, which causes kernel memory accounting to be enabled by default. But we are using kernel 3.10.0-514.16.1.el7.x86_64, on which the kernel memory limit is not stable; this leaks memory cgroups and makes applications crash randomly.
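The effect of removing that if condition can be shown with a simplified Python model of the two behaviors described in this report. It is not runc's Go code: `MemoryCgroupConfig` and `kmem_accounting_enabled` are hypothetical names, and it assumes, as this report implies, that the pods request no kernel memory limit.

```python
from dataclasses import dataclass


@dataclass
class MemoryCgroupConfig:
    # Hypothetical stand-in for a container's memory settings;
    # 0 means no kernel memory limit was requested.
    kernel_memory_limit: int = 0


def kmem_accounting_enabled(cfg: MemoryCgroupConfig, always_enable: bool) -> bool:
    """Model of the behavior change discussed in this issue.

    always_enable=False: old behavior, accounting only when a limit is requested.
    always_enable=True: behavior after the if condition was removed.
    """
    if always_enable:
        return True
    return cfg.kernel_memory_limit != 0


pod = MemoryCgroupConfig()  # typical pod: no kernel memory limit
print(kmem_accounting_enabled(pod, always_enable=False))  # False
print(kmem_accounting_enabled(pod, always_enable=True))   # True
```

For a pod without a kernel memory limit the result flips from False to True, which is why every container on the 3.10 kernel started using kmem accounting after the upgrade.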

When we run "docker run -d --name test001 --kernel-memory 100M ", Docker reports:

WARNING: You specified a kernel memory limit on a kernel older than 4.0. Kernel memory limits are experimental on older kernels, it won't work as expected and can cause your system to be unstable.

I want to know why kernel memory accounting is enabled by default. Can k8s take different kernel versions into account?

Is this a BUG REPORT or FEATURE REQUEST?: BUG REPORT

What happened:

Application crashes and a memory cgroup leak.

What you expected to happen:

Applications stay stable and memory cgroups don't leak.

How to reproduce it (as minimally and precisely as possible):

Install k8s 1.9.x on a machine running kernel 3.10.0-514.16.1.el7.x86_64 and create and delete pods repeatedly. After more than 65535/3 create/delete cycles, the kubelet reports a "cgroup no space left on device" error, and after the cluster has run for a few days, containers crash.
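The 65535/3 figure is simple arithmetic on the ID limit. A small Python sketch, assuming 65535 usable memory cgroup IDs (the limit cited in this report) and 3 leaked IDs per pod create/delete cycle (matching the drop of 3 in the test case); `already_used` is a hypothetical parameter for IDs held by system cgroups.

```python
# Assumptions: 65535 memory cgroup IDs (the limit cited in this report) and
# 3 leaked IDs per pod create/delete cycle (the drop of 3 in the test case).
MEMCG_ID_LIMIT = 65535
LEAKED_IDS_PER_POD = 3


def cycles_until_exhaustion(already_used: int = 0,
                            limit: int = MEMCG_ID_LIMIT,
                            per_pod: int = LEAKED_IDS_PER_POD) -> int:
    """Number of pod create/delete cycles that fit before the ID space runs out."""
    return (limit - already_used) // per_pod


print(cycles_until_exhaustion())  # 21845
```

Because 3 × 21845 = 65535, a node starting with no IDs in use runs out after 21845 leaking cycles; IDs already held by system cgroups lower that number.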

Anything else we need to know?:

Environment: kernel 3.10.0-514.16.1.el7.x86_64

Kubernetes version (kubectl version): k8s 1.9.x
Kernel (uname -a): 3.10.0-514.16.1.el7.x86_64