This project presents a simplified object localization algorithm that uses multiple cameras. It uses a parallel projection model that supports both zooming and panning of the imaging devices. The algorithm relies on a virtual viewable plane to relate an object's position to a reference coordinate. The reference point is taken from a rough estimate, which may come from a pre-estimation process. The algorithm minimizes localization error through an iterative process with relatively low computational complexity. In addition, the nonlinear distortion of the digital imaging devices is compensated during the iterative process. Finally, performance is evaluated and analyzed for several scenarios in both indoor and outdoor environments.
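The exact equations are in the paper linked at the end and are not reproduced here. As a minimal sketch of the iterative idea, assume a 2-D ground plane, cameras described by position, panning angle, and a zoom factor z = d / L_s (depth over the width L_s of the virtual viewable plane), and only horizontal pixel offsets. Start from the reference estimate, place each camera's virtual viewable plane through it, convert each pixel offset into a lateral offset on that plane, solve the resulting linear constraints, and repeat until the estimate stops moving. The function name `localize`, the camera tuple layout, and the zoom convention are hypothetical, and distortion compensation is left out.

```python
import math


def localize(cameras, u, image_width, p0, tol=1e-9, max_iter=100):
    """Iteratively estimate a 2-D object position from two or more cameras.

    Hypothetical inputs for this sketch:
      cameras      list of (x, y, pan, zoom) tuples; (x, y) is the camera position
                   in the global frame, pan the optical-axis angle in radians, and
                   zoom the assumed factor z = depth / L_s
      u            horizontal pixel offset of the object from each image centre
      image_width  image width L_c in pixels
      p0           rough reference estimate that starts the iteration
    """
    px, py = p0
    for _ in range(max_iter):
        # Accumulate the normal equations (A^T A) p = A^T b of the lateral constraints.
        sxx = sxy = syy = bx = by = 0.0
        for (cx, cy, pan, zoom), ui in zip(cameras, u):
            ax, ay = math.cos(pan), math.sin(pan)  # optical-axis direction a_i
            nx, ny = -ay, ax                        # lateral direction n_i in the plane
            # Depth of the virtual viewable plane through the current estimate.
            depth = ax * (px - cx) + ay * (py - cy)
            # Pixel offset -> lateral offset on that plane: s = u * (depth / z) / L_c.
            s = ui * depth / (zoom * image_width)
            # Linear constraint for this camera: n_i . p = s + n_i . c_i
            rhs = s + nx * cx + ny * cy
            sxx += nx * nx
            sxy += nx * ny
            syy += ny * ny
            bx += nx * rhs
            by += ny * rhs
        det = sxx * syy - sxy * sxy
        if abs(det) < 1e-12:
            raise ValueError("camera axes are (nearly) parallel; position is unobservable")
        # Solve the 2x2 system with Cramer's rule.
        qx = (syy * bx - sxy * by) / det
        qy = (sxx * by - sxy * bx) / det
        if math.hypot(qx - px, qy - py) < tol:
            return qx, qy
        px, py = qx, qy
    return px, py


if __name__ == "__main__":
    # Two hypothetical cameras: one at the origin looking along +y, one at (10, 5)
    # looking along -x. The pixel offsets correspond to an object at (1, 6) under
    # this same model, so the estimate should approach (1.0, 6.0).
    cams = [(0.0, 0.0, math.pi / 2, 1.5), (10.0, 5.0, math.pi, 2.0)]
    print(localize(cams, u=[-160.0, -1280.0 / 9.0], image_width=640, p0=(3.0, 3.0)))
```

Each pass costs one small linear solve, in line with the low-complexity claim. With two cameras, the fixed point is where both cameras' bearing lines intersect, so under this model the result agrees with plain triangulation. Convergence is fast when objects appear near the image centre and slows down, and may fail, for large off-axis angles.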

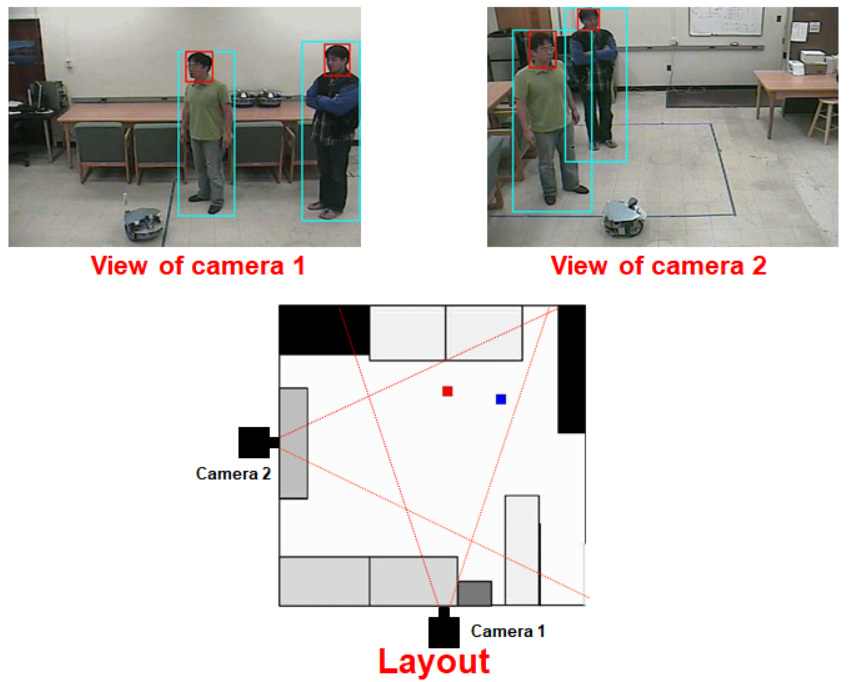

The picture below illustrates the application model, in which multiple people are localized in a multiple-camera environment. The cameras can move freely, with zooming and panning capabilities. Within the tracking environment, the method uses the detected object points to estimate each object's location.
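To make the zoom and pan parameters concrete, the forward direction of an assumed parallel projection model can be sketched: how an object point maps to the horizontal pixel offset a camera would detect. This assumes a 2-D ground plane, a panning angle measured counter-clockwise from the global x-axis, and a zoom factor z = d / L_s, so the virtual viewable plane at depth d has width L_s = d / z. The function `project_to_pixel` and its parameters are hypothetical.

```python
import math


def project_to_pixel(obj_x, obj_y, cam_x, cam_y, pan, zoom, image_width):
    """Horizontal pixel offset (from the image centre) of an object point.

    Assumed parallel projection model: the object is projected along the optical
    axis onto a virtual viewable plane at the object's depth d; that plane has
    width L_s = d / zoom and is mapped linearly onto the image width L_c.
    """
    ax, ay = math.cos(pan), math.sin(pan)  # optical axis (panning) in the global frame
    dx, dy = obj_x - cam_x, obj_y - cam_y
    depth = ax * dx + ay * dy              # distance to the virtual viewable plane
    if depth <= 0:
        raise ValueError("object is not in front of the camera")
    lateral = -ay * dx + ax * dy           # signed offset within the viewable plane
    viewable_width = depth / zoom          # L_s shrinks as the camera zooms in
    return lateral * image_width / viewable_width


if __name__ == "__main__":
    # Hypothetical camera at the origin looking along +y; object at (1, 6).
    print(project_to_pixel(1.0, 6.0, 0.0, 0.0, math.pi / 2, 1.5, 640))
```

Doubling the zoom factor halves L_s and therefore doubles the pixel offset of the same object, which is why each camera's zoom state has to be known before its pixel measurements can be turned back into positions.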

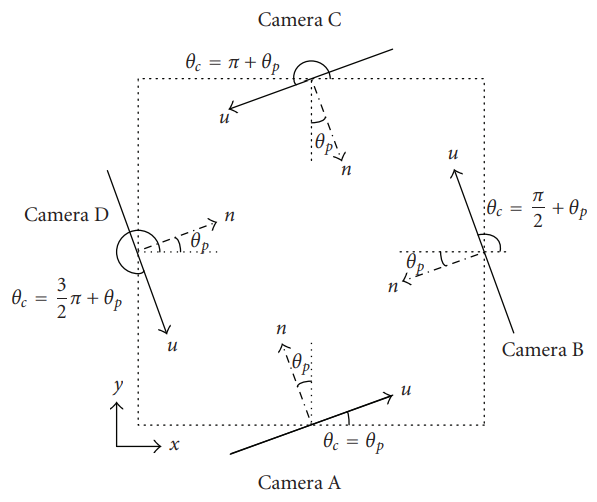

Each camera's panning factor is expressed with respect to a global coordinate system.
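Referencing every panning factor to one global frame is what allows measurements from different cameras to be combined. A minimal sketch, assuming a 2-D ground plane and treating the panning factor as the absolute angle of the optical axis measured counter-clockwise from the global x-axis; `camera_to_global` and `global_to_camera` are hypothetical helpers, not functions from the original work.

```python
import math


def camera_to_global(cam_x, cam_y, pan, depth, lateral):
    """Map a point from a camera's own frame into the global frame.

    Assumed convention: `pan` is the optical-axis angle in radians measured from
    the global x-axis, `depth` is the distance along that axis, and `lateral` is
    the signed offset to the left of it.
    """
    ax, ay = math.cos(pan), math.sin(pan)  # optical axis in global coordinates
    nx, ny = -ay, ax                       # left-hand perpendicular to the axis
    return cam_x + depth * ax + lateral * nx, cam_y + depth * ay + lateral * ny


def global_to_camera(cam_x, cam_y, pan, gx, gy):
    """Inverse mapping: global point -> (depth, lateral) in the camera frame."""
    dx, dy = gx - cam_x, gy - cam_y
    ax, ay = math.cos(pan), math.sin(pan)
    return ax * dx + ay * dy, -ay * dx + ax * dy


if __name__ == "__main__":
    # Hypothetical camera at (10, 5) panned to face along -x (pan = pi).
    print(camera_to_global(10.0, 5.0, math.pi, 9.0, -1.0))
```

Because the mapping is a rotation plus a translation, the two functions are exact inverses: converting a point into camera coordinates and back returns the original point up to floating-point rounding.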

When the cameras detect objects, each object's location is estimated with this algorithm and then displayed in the layout diagram.

https://link.springer.com/content/pdf/10.1155/2008/256896.pdf