This is Team 6's final project git repository for the EECS 568: Mobile Robotics course at the University of Michigan. The title of our project is Masked ORB-SLAM3: Dynamic Element Exclusion for Autonomous Driving Scenarios Using Masked R-CNN for Increased Localization Accuracy.

The Masked ORB-SLAM3 pipeline uses instance masks generated by Mask R-CNN for a chosen dynamic on-road driving scenario, as opposed to the typical use of semantic masks in ORB-SLAM for localization.

First, we recommend that you read our paper, uploaded to this repository under docs. Next, read the two directly related works: ORB-SLAM3 and DynaSLAM.

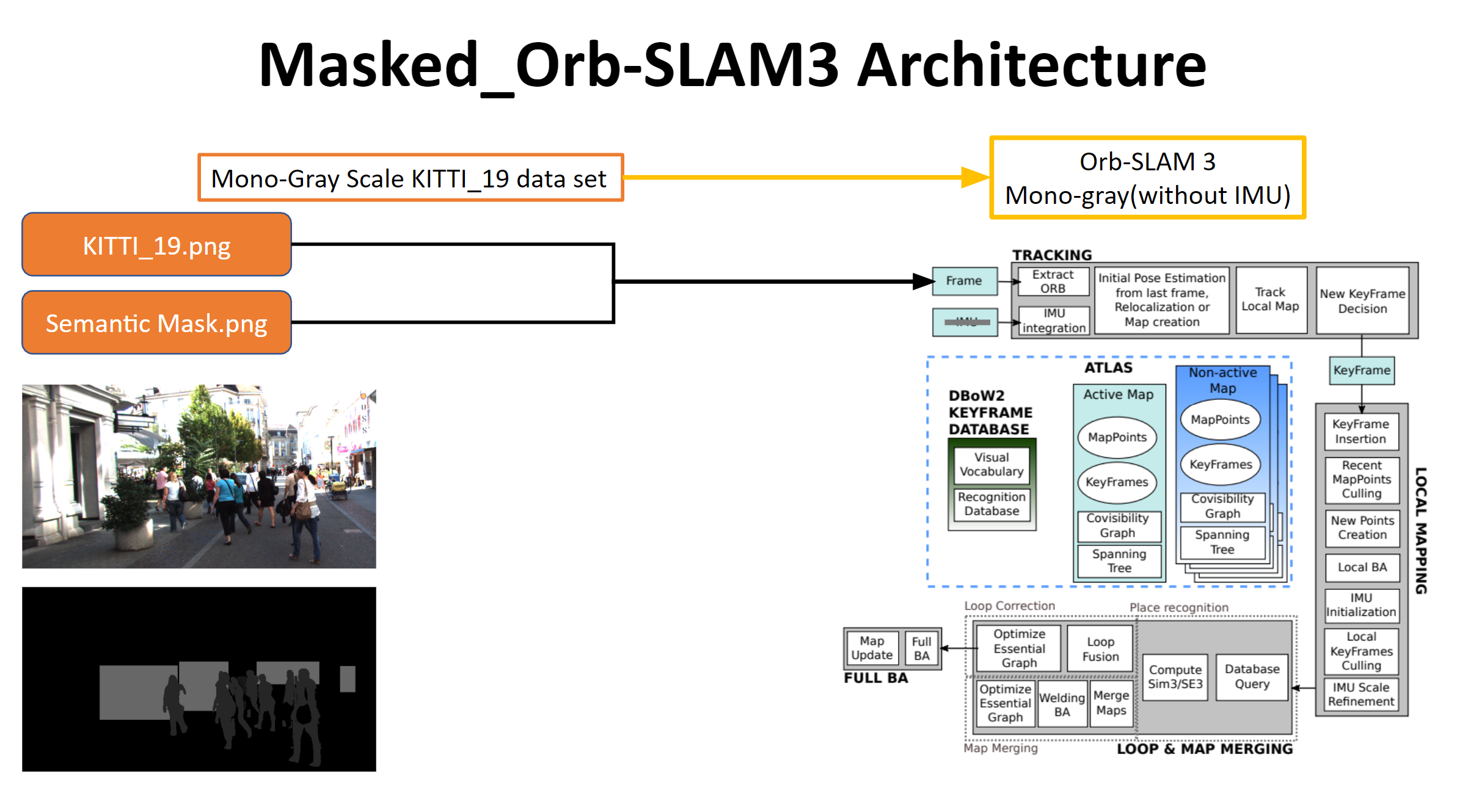

Based on the inaccuracy of semantic masks for ORB-SLAM, we propose the following architecture for our "Masked ORB-SLAM3 pipeline":

The visualization of masks used for our framework can be seen below:

A visualization of our implementation can finally be seen below:

To observe and remove the impact of dynamic objects in ORB-SLAM3, we selected one of the most dynamic sequences from the KITTI dataset. We observed that ORB-SLAM3 performs better when dynamic content is removed from the image frames. We remove dynamic objects by performing instance segmentation on each image and passing the resulting binary masks into the ORB-SLAM3 pipeline, which then discards tracking points that overlap with the masks.
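The point-removal step can be illustrated with a minimal sketch. It is not the code used in our modified ORB-SLAM3 (which is C++); the function name `filter_keypoints`, the nested-list mask representation, and the convention that nonzero mask pixels mark dynamic objects are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Keypoint = Tuple[float, float]  # (x, y) pixel coordinates


def filter_keypoints(
    keypoints: Sequence[Keypoint],
    mask: Sequence[Sequence[int]],
) -> List[Keypoint]:
    """Keep only keypoints that do not land on a masked (dynamic) pixel.

    Assumption: mask[row][col] is nonzero where a dynamic object was segmented.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    kept: List[Keypoint] = []
    for x, y in keypoints:
        col, row = int(round(x)), int(round(y))
        # Keypoints outside the image cannot be checked, so drop them.
        if not (0 <= row < height and 0 <= col < width):
            continue
        if mask[row][col] == 0:
            kept.append((x, y))
    return kept


# Toy 4x4 mask with a 2x2 "dynamic object" in the top-left corner.
mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
keypoints = [(0.0, 0.0), (1.2, 0.9), (3.0, 3.0), (2.0, 1.0)]
static_keypoints = filter_keypoints(keypoints, mask)
print(static_keypoints)
```

In a real pipeline the mask would typically be an image array loaded per frame, and the same test would be applied to each extracted ORB keypoint before tracking.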

This Docker image is based on ROS Melodic on Ubuntu 18.04.

There are two versions available:

- CPU based (Xorg Nouveau display)

- NVIDIA CUDA based

To check whether you are running the NVIDIA driver, run `nvidia-smi` and see whether it prints any output.

Choose the Docker version that matches your graphics driver. For the CUDA version, you need nvidia-docker set up on your machine.
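If you prefer to script this check, the following is a rough sketch. The helper names `has_nvidia_driver` and `choose_build_script` are hypothetical; the script names are the two build scripts in this repository. It only checks that `nvidia-smi` runs successfully and does not verify that nvidia-docker is installed.

```python
import shutil
import subprocess


def has_nvidia_driver() -> bool:
    """Return True if `nvidia-smi` is on PATH and exits successfully."""
    if shutil.which("nvidia-smi") is None:
        return False
    try:
        result = subprocess.run(
            ["nvidia-smi"],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


def choose_build_script(nvidia_available: bool) -> str:
    """Map the driver check to one of the two build scripts."""
    if nvidia_available:
        return "build_container_cuda.sh"
    return "build_container_cpu.sh"


script = choose_build_script(has_nvidia_driver())
print(f"Suggested build script: {script}")
```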

Steps to compile ORB_SLAM3 with the sample dataset:

1. `./download_dataset_sample.sh`
2. `build_container_cpu.sh` or `build_container_cuda.sh`, depending on your machine.

You should now see ORB_SLAM3 compiling. To run a test example:

1. `docker exec -it orbslam3 bash`
2. `cd /ORB_SLAM3/Examples && ./euroc_examples.sh`

You can use VS Code Remote Development (recommended) or Sublime Text to edit the code.

1. `docker exec -it orbslam3 bash`
2. `subl /ORB_SLAM3`

Once the container runs the sample data as described above, you can add your own data (for example, from the KITTI dataset) to the Datasets folder using the same hierarchy. To use our masked implementation, clone our repository and run build.sh. Then run mono_kitti with one additional command-line argument appended at the end: the directory containing the segmentation masks (euroc_examples.sh shows how the original implementation is run). If you are using WSL, make sure an X server is running so you can see the GUI.
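As a rough illustration of the argument layout, the sketch below assembles such a command line. It assumes the upstream mono_kitti argument order (vocabulary, settings file, sequence directory) followed by our extra mask directory. The helper `build_mono_kitti_command` is hypothetical and every path is a placeholder, so substitute the locations used in your own checkout and dataset.

```python
import shlex
from typing import List


def build_mono_kitti_command(
    binary: str,
    vocabulary: str,
    settings: str,
    sequence_dir: str,
    mask_dir: str,
) -> List[str]:
    """Return the argument list, with the mask directory appended last."""
    return [binary, vocabulary, settings, sequence_dir, mask_dir]


# Placeholder paths for illustration only; adjust them to your setup.
command = build_mono_kitti_command(
    binary="./Examples/Monocular/mono_kitti",
    vocabulary="Vocabulary/ORBvoc.txt",
    settings="Examples/Monocular/KITTI00-02.yaml",
    sequence_dir="Datasets/KITTI/sequences/00",
    mask_dir="Datasets/KITTI/masks/00",
)

# Print a shell-quoted version that can be pasted into a terminal.
print(" ".join(shlex.quote(part) for part in command))
```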

Our team members are:

We divided the work among ourselves as follows:

| S. No. | Work Item | Done By |
|---|---|---|
| 1. | KITTI Run in ORB-SLAM | All |
| 2. | Choosing right data (KITTI / semantic data set) | All |
| 3. | GT Semantic + Mask GT data + ORB-SLAM | Kyle, Ping-Hua, Zhuowen |
| 4. | Mask-RCNN + Mask RCNN data + ORB-SLAM | Kyle, Aditya, Aman |
| 5. | Benchmarking research & Comparison | Aditya, Ping-Hua |
| 6. | Documentation | All |
| 7. | Project website and Git | Kyle, Aditya |

We express our sincere thanks to our EECS 568 instructor Prof. Maani Ghaffari Jadidi for his guidance, as well as the GSIs Tzu-Yuan (Justin) Lin and Jingyu (JY) Song for all the support they provided this semester. The link to our course website can be found here.

We would also like to express our profound gratitude to Carlos Campos, Richard Elvira, Juan J. Gómez Rodríguez, José M. M. Montiel, and Juan D. Tardós for their seminal work on ORB-SLAM3, as well as to Berta Bescos, José M. Fácil, Javier Civera, and José Neira for their work on DynaSLAM.

The papers we referred to for ideation, pipeline architecture, and benchmarking of our results against existing localization results can be found here.