recipe for using the Lightly Docker as API worker #630

Conversation

Codecov Report

@@           Coverage Diff           @@
##           master     #630   +/-   ##
=======================================
  Coverage   87.70%   87.70%
=======================================
  Files          89       89
  Lines        3433     3433
=======================================
  Hits         3011     3011
  Misses        422      422

1b3887d to 23776f9

Some comments. I am not sure the title of the tutorial is correct, since the tutorial is really about using a datasource directly rather than needing the data locally.

Just had a quick look and the "setting up" is too minimal and is missing a few points:

Setting up the S3 datasource is covered in the AWS dataset creation recipe: https://docs.lightly.ai/getting_started/dataset_creation/dataset_creation_aws_bucket.html

All I'm saying is that if I follow the tutorial now it will not work as expected.

I adapted the S3 bucket setup tutorial accordingly.

I left a few comments. It worked well and is CRAZY SUPER COOL!!! :D

No, it's really awesome to see that this is working. Sooooo convenient.

Could you also add a line here on top? https://docs.lightly.ai/docker/overview.html

Maybe mark the active learning part not as new anymore.

This recipe requires that you already have a dataset in the Lightly Platform
configured to use the data in your AWS S3 bucket.

Follow the steps on how to `create a Lightly dataset connected to your S3 bucket <https://docs.lightly.ai/getting_started/dataset_creation/dataset_creation_aws_bucket.html>`_.

At this point I would assume that an S3 bucket already exists. The user should follow the other link to set up S3 properly, or consult it if there are any questions.

However, for the sake of this tutorial, I would suggest adding all the elements starting from the dataset creation in the UI here as well. So we guide the user from creating a dataset in Lightly and connecting it with the S3 bucket to running the docker.

Please also tell the user here that they need to pick images or videos depending on the dataset type they want to use.

I would not add all the dataset creation elements here for two reasons:

- It would mean duplicating lots of stuff.

- It does not work well once we also support GC storage and Azure storage.

Use your subsampled dataset
---------------------------

Once the docker run has finished, you can use your subsampled dataset as you like:

I would add a screenshot here of the dataset's home screen in the UI (i.e. what it should look like).

subsample it further, or export it for labeling.

.. _ref-docker-with-datasource-datapool:

Process new data in your S3 bucket using a datapool

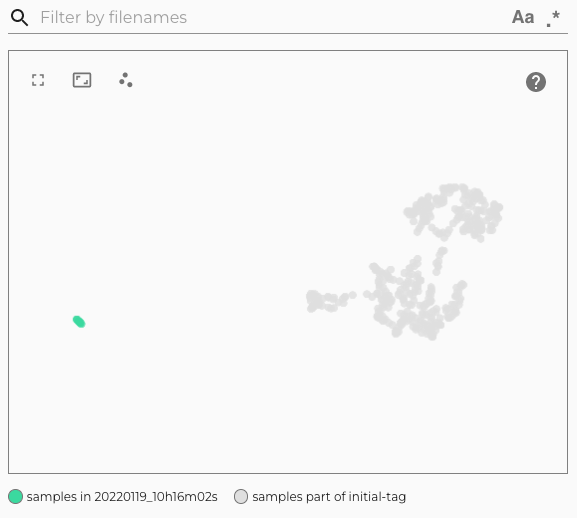

This is sort of the killer feature, but it only appears at the end and gets lost because it is just a lot of text. I would try to use bullet points or images/screenshots to help, e.g.:

Running the docker for the first time:

Running the docker again with a new video (we see a new cluster because the new video is very different):

I added much more content and screenshots.

Ah, and one other thing:

I added a list of the features that don't work yet but will be implemented soon.

44bf89d to bf6c66a

.. code-block:: console

    docker run --gpus all --rm -it \

I would try to give a more complete docker run command. Maybe add the stopping condition?

Thanks for the changes. It already looks much better. I would still add more detailed instructions on the S3 setup. Just mention the steps:

- create dataset (use videos or images depending on the data)

- edit dataset and click on S3

- fill out (here I would add a screenshot, we can even recycle the one we already have :))

The reason I would add this is to give the user a good end-to-end workflow. Otherwise, they have to jump around in our docs and piece the various parts together.

I have some questions regarding your proposal:

However, I get that the S3 tutorial is not ideal for that, so I created a new issue: https://linear.app/lightly/issue/LIG-548/datasource-setup-recipes-use-order-fitting-milestone-0

6260a46 to 9a51601

Description

- links to the docs for setting up the S3 bucket

- links to the docs for installing the docker

- links to the docs for the first steps with the docker

- changes the tutorial for setting up the S3 bucket to distinguish between Images and Videos

Generated report

Using the docker with an S3 bucket as remote datasource. — lightly 1.2.3 documentation.pdf