New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

cifar10 result not good as expect ! #62

Comments

|

The samples you’re showing seem to be from super early in training (3000

iterations). Do you have samples from later on?

…On Fri, Sep 21, 2018 at 8:23 AM zyoohv ***@***.***> wrote:

I run your code in cifar10, but the result seems not as good as our

expected.

- system information:

system: debian 8

python: python2

pytorch: torch==0.3.1

- run command:

$python main.py --dataset cifar10 --dataroot ~/.torch/datasets --cuda

- output part:

[24/25][735/782][3335] Loss_D: -1.287177 Loss_G: 0.642245 Loss_D_real: -0.651701 Loss_D_fake 0.635477

[24/25][740/782][3336] Loss_D: -1.269792 Loss_G: 0.621307 Loss_D_real: -0.657210 Loss_D_fake 0.612582

[24/25][745/782][3337] Loss_D: -1.250543 Loss_G: 0.636843 Loss_D_real: -0.667046 Loss_D_fake 0.583497

[24/25][750/782][3338] Loss_D: -1.196252 Loss_G: 0.589907 Loss_D_real: -0.606480 Loss_D_fake 0.589772

[24/25][755/782][3339] Loss_D: -1.189609 Loss_G: 0.564263 Loss_D_real: -0.612895 Loss_D_fake 0.576714

[24/25][760/782][3340] Loss_D: -1.178156 Loss_G: 0.586755 Loss_D_real: -0.600268 Loss_D_fake 0.577888

[24/25][765/782][3341] Loss_D: -1.087157 Loss_G: 0.508717 Loss_D_real: -0.522565 Loss_D_fake 0.564592

[24/25][770/782][3342] Loss_D: -1.092081 Loss_G: 0.674212 Loss_D_real: -0.657483 Loss_D_fake 0.434598

[24/25][775/782][3343] Loss_D: -0.937950 Loss_G: 0.209016 Loss_D_real: -0.310877 Loss_D_fake 0.627073

[24/25][780/782][3344] Loss_D: -1.316574 Loss_G: 0.653665 Loss_D_real: -0.693675 Loss_D_fake 0.622899

[24/25][782/782][3345] Loss_D: -1.222763 Loss_G: 0.558372 Loss_D_real: -0.567426 Loss_D_fake 0.655337

fake_samples_500.png

[image: fake_samples_500]

<https://user-images.githubusercontent.com/16134679/45865905-9a46ea80-bdb1-11e8-99c5-7ee2c8432cf6.png>

fake_samples_1000.png

[image: fake_samples_1000]

<https://user-images.githubusercontent.com/16134679/45865910-9c10ae00-bdb1-11e8-8158-acc3f2e42146.png>

fake_samples_1500.png

[image: fake_samples_1500]

<https://user-images.githubusercontent.com/16134679/45865913-9dda7180-bdb1-11e8-9a41-0cd490c124f7.png>

fake_samples_2000.png

[image: fake_samples_2000]

<https://user-images.githubusercontent.com/16134679/45865917-9f0b9e80-bdb1-11e8-8f02-a4ddb21c2f85.png>

fake_samples_2500.png

[image: fake_samples_2500]

<https://user-images.githubusercontent.com/16134679/45865921-a0d56200-bdb1-11e8-9e2c-101644e49cda.png>

fake_samples_3000.png

[image: fake_samples_3000]

<https://user-images.githubusercontent.com/16134679/45865924-a29f2580-bdb1-11e8-9a03-f61b328b2d87.png>

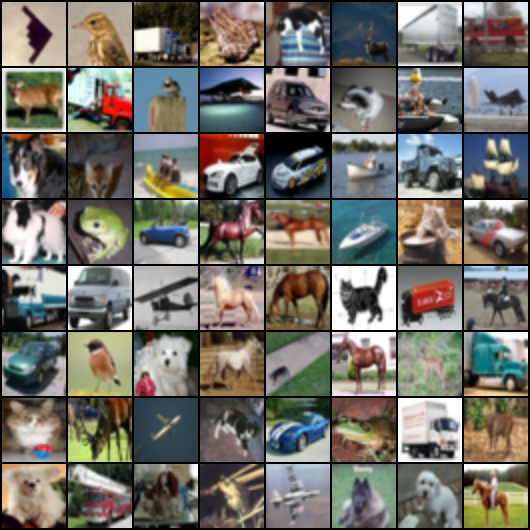

Note that this is real_samples.png!!!

[image: real_samples]

<https://user-images.githubusercontent.com/16134679/45865928-a468e900-bdb1-11e8-8c26-5eca7b41bdfa.png>

—

You are receiving this because you are subscribed to this thread.

Reply to this email directly, view it on GitHub

<#62>, or mute the

thread

<https://github.com/notifications/unsubscribe-auth/AFB0kjsndk_WrLq8waluc-aO9ktV8o1Rks5udJQEgaJpZM4Wzl0Y>

.

|

|

The running of the code has finished ! |

|

loss log I train it 25000 iters, but the result seems still not right. |

|

I think you can try small images such as 3232 or 6464. The method work well in all dataset with small image size in my experiment. good luck. |

|

@zyoohv Have you got good results for CIFAR10 data with default parameter settings? How many epochs have you run? Thanks! |

|

I haven't run the code in cifar 10. You may want to take a look at https://github.com/igul222/improved_wgan_training where we provide a very good cifar10 model. Cheers :) |

I run your code in cifar10, but the result seems not as good as our expected.

system: debian 8

python: python2

pytorch: torch==0.3.1

fake_samples_500.png

fake_samples_1000.png

fake_samples_1500.png

fake_samples_2000.png

fake_samples_2500.png

fake_samples_3000.png

Note that this is real_samples.png!!!

The text was updated successfully, but these errors were encountered: