The pixel value of captured depth sensor data (pgm files) #56

Comments

I found that in 'HoloLensForCV\Samples\SensorStreamViewer\FrameRender.cpp' the code treats pixel values in the range 0 to 4000 as valid and divides each pixel value by 1000 to get the depth. For short throw depth data, the valid depth range is 0.2 to 1.0. Note, however, that the data in the pgm files has an endianness issue: you need to swap the high 8 bits with the low 8 bits to get the correct value, according to the comment by jiying61306 in issue #19. This may be the result of storing 16-bit data in a uint8_t vector in HoloLensForCV\Shared\HoloLensForCV\SensorFrameRecorderSink.cpp.
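The range check and scaling described above could be sketched as follows. This is only an illustration, not the code from FrameRender.cpp: the function and constant names are hypothetical, the 0.2 to 1.0 range is assumed to be in meters, and treating both bounds as inclusive is also an assumption. The input is expected to be a pixel value whose byte order has already been corrected.

```python
from typing import Optional

# Upper bound of the raw values treated as valid, per the comment above.
MAX_VALID_RAW = 4000
# Short throw valid range; assumed here to be in meters and inclusive.
SHORT_THROW_RANGE_M = (0.2, 1.0)


def raw_to_depth_m(raw: int) -> Optional[float]:
    """Convert a byte-order-corrected raw value to depth, or None if out of range."""
    if not 0 <= raw <= MAX_VALID_RAW:
        return None
    return raw / 1000.0


def short_throw_depth_m(raw: int) -> Optional[float]:
    """Like raw_to_depth_m, but also rejects depths outside the short throw range."""
    depth = raw_to_depth_m(raw)
    if depth is None:
        return None
    low, high = SHORT_THROW_RANGE_M
    return depth if low <= depth <= high else None
```

With these assumptions, a raw value of 500 maps to 0.5, while 1500 passes the general check (1.5) but is rejected by the short throw check.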

@zwz14 Thank you for the information. I will investigate it based on your comment and post the result. Thanks!

YES!! It worked! The captured sensor data is stored in little-endian order. The byte order has to be swapped to get the correct intensity, e.g. from 0xFF00 to 0x00FF. Now I get the picture below: the farther the distance, the higher the intensity. Thank you very much @zwz14 !
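For anyone who wants to try the byte swap, here is a minimal sketch using only the Python standard library. The helper names are hypothetical and it is not the script used in this thread. It assumes you already have either a 16-bit value that was read with the wrong byte order, or the raw pixel bytes that follow the pgm header. For context, the PGM format expects 16-bit samples with the most significant byte first, which is why a standard reader misinterprets little-endian data.

```python
import struct
from typing import List


def swap16(value: int) -> int:
    """Swap the high and low bytes of a 16-bit unsigned value."""
    return ((value & 0x00FF) << 8) | ((value >> 8) & 0x00FF)


def decode_little_endian_pixels(payload: bytes) -> List[int]:
    """Interpret raw pixel bytes as little-endian unsigned 16-bit values.

    `payload` is assumed to be the pixel data only, with the pgm header removed.
    A trailing odd byte, if any, is ignored.
    """
    count = len(payload) // 2
    return list(struct.unpack(f"<{count}H", payload[: count * 2]))
```

`swap16` fixes values one at a time after a normal pgm load, while `decode_little_endian_pixels` avoids the problem by decoding the bytes with the right order in the first place.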

In case people still have questions about this issue, please refer to here for an example script that solves the problem.

@Joon-Jung To calculate the distance to the object, why do we divide by 1000?

@cyrineee, as @Huangying-Zhan mentioned above, the raw pixel value after the endian correction represents the distance, in millimeters, between the depth camera and the object captured in the depth map. Since HoloLens coordinates use the meter as the default unit (Unity-based apps do, for sure), we divide the value by 1000 to convert millimeters to meters.

@Joon-Jung Thaaaaaaanks a lot for your response. Another question: is it possible to get the distance from RGB images? For example, we launch streamerPV on the HoloLens and receive the images with sensor_receiver.py, and I want to calculate the distance from the HoloLens to the objects in these images. Thanks in advance.

Hi @cyrineee, for your second question, it would be hard to get the distance from a single RGB image alone unless you have something to use as a reference, such as markers (aruco, artoolkit, etc.). If you want to go markerless, you might need to (1) align the depth camera and the RGB camera through a coordinate transformation; or (2) use a stereo camera method with two VLCs to triangulate the distance, like this project's aruco example.
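To give a rough idea of option (2), the textbook relation for an ideal rectified stereo pair is depth = focal length × baseline / disparity. The sketch below only illustrates that formula. It is not taken from this project's aruco example, the function name and the numbers are hypothetical, and real VLC images would first need calibration and rectification.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by an ideal rectified stereo pair.

    focal_length_px: focal length in pixels (assumed identical for both cameras)
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal pixel offset of the same point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical numbers, for illustration only.
example_depth = stereo_depth_m(focal_length_px=500.0, baseline_m=0.1, disparity_px=50.0)
```

The smaller the disparity, the farther the point, so distant objects are more sensitive to small matching errors.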

I am working with short throw depth sensor data (including reflectivity) captured with the recorder program in this project.

I am curious about what each pixel value in the pgm files indicates. The value does not seem to be related to the distance from the device in any simple way, for example lower values being farther and higher values being closer, or vice versa. I googled this but did not find any useful information.

Does anybody know about this? Could you let me know what the value indicates?

Thank you in advance.

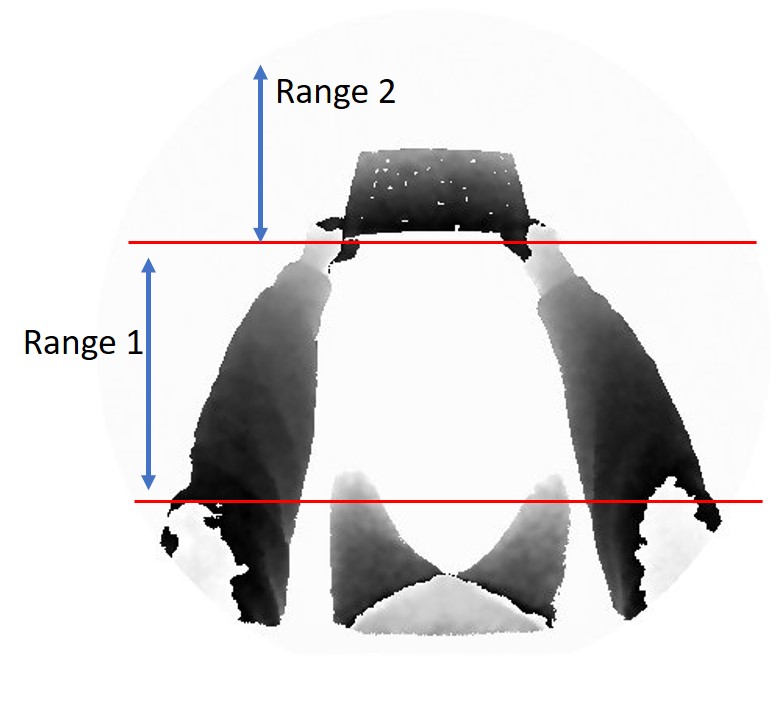

edit 1: I found that the intensity decreases as I move farther away, but beyond a certain distance the intensity shows a distinct drop. I attached an example at the bottom (gray lookup table, black to white). I separated the ranges (1 and 2) at that point. There is a clearly visible boundary (the start of range 2). It seems that the intensity restarts from a new value and increases again as the distance goes beyond the start of range 2. The intensity closer than range 1 does not seem right either. I watched the CVPR 2018 HoloLens research mode tutorial video, and short throw captures depth up to 1 meter. The boundary could be the 1 meter point, but it would be great to know what these intensities really stand for and how they are calculated. Thanks.
