Benchmark metrics #331

Closed

8 commits

a9eadfc high frequency examolke (javaThonc)

dc5dd0d format (javaThonc)

2e87411 360 example (javaThonc)

fdec411 tabnet fix bug and adding alph360 (javaThonc)

d499962 high freq demo (javaThonc)

029d4e0 update sfm and tabnet benchmark (javaThonc)

22a110b update tabnet metrics (javaThonc)

c9b68a5 Merge branch 'main' into benchmark_metrics (javaThonc)


81 changes: 81 additions & 0 deletions

examples/benchmarks/TabNet/workflow_config_TabNet_Alpha360.yaml

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,81 @@ | ||

| qlib_init: | ||

| provider_uri: "~/.qlib/qlib_data/cn_data" | ||

| region: cn | ||

| market: &market csi300 | ||

| benchmark: &benchmark SH000300 | ||

| data_handler_config: &data_handler_config | ||

| start_time: 2008-01-01 | ||

| end_time: 2020-08-01 | ||

| fit_start_time: 2008-01-01 | ||

| fit_end_time: 2014-12-31 | ||

| instruments: *market | ||

| infer_processors: | ||

| - class: RobustZScoreNorm | ||

| kwargs: | ||

| fields_group: feature | ||

| clip_outlier: true | ||

| - class: Fillna | ||

| kwargs: | ||

| fields_group: feature | ||

| learn_processors: | ||

| - class: DropnaLabel | ||

| - class: CSRankNorm | ||

| kwargs: | ||

| fields_group: label | ||

| label: ["Ref($close, -2) / Ref($close, -1) - 1"] | ||

| port_analysis_config: &port_analysis_config | ||

| strategy: | ||

| class: TopkDropoutStrategy | ||

| module_path: qlib.contrib.strategy.strategy | ||

| kwargs: | ||

| topk: 50 | ||

| n_drop: 5 | ||

| backtest: | ||

| verbose: False | ||

| limit_threshold: 0.095 | ||

| account: 100000000 | ||

| benchmark: *benchmark | ||

| deal_price: close | ||

| open_cost: 0.0005 | ||

| close_cost: 0.0015 | ||

| min_cost: 5 | ||

| task: | ||

| model: | ||

| class: TabnetModel | ||

| module_path: qlib.contrib.model.pytorch_tabnet | ||

| kwargs: | ||

| pretrain: True | ||

| d_feat: 360 | ||

| n_d: 8 | ||

| n_a: 8 | ||

| n_shared: 2 | ||

| n_ind: 2 | ||

| n_steps: 3 | ||

| GPU: "2" | ||

| dataset: | ||

| class: DatasetH | ||

| module_path: qlib.data.dataset | ||

| kwargs: | ||

| handler: | ||

| class: Alpha360 | ||

| module_path: qlib.contrib.data.handler | ||

| kwargs: *data_handler_config | ||

| segments: | ||

| pretrain: [2008-01-01, 2014-12-31] | ||

| pretrain_validation: [2015-01-01, 2020-08-01] | ||

| train: [2008-01-01, 2014-12-31] | ||

| valid: [2015-01-01, 2016-12-31] | ||

| test: [2017-01-01, 2020-08-01] | ||

| record: | ||

| - class: SignalRecord | ||

| module_path: qlib.workflow.record_temp | ||

| kwargs: {} | ||

| - class: SigAnaRecord | ||

| module_path: qlib.workflow.record_temp | ||

| kwargs: | ||

| ana_long_short: False | ||

| ann_scaler: 252 | ||

| - class: PortAnaRecord | ||

| module_path: qlib.workflow.record_temp | ||

| kwargs: | ||

| config: *port_analysis_config |
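The `label` expression in this config, `Ref($close, -2) / Ref($close, -1) - 1`, is easier to read with a concrete series. The sketch below assumes the usual Qlib convention that a negative offset in `Ref` looks forward in time, so the label for day `t` is the return from the close of day `t+1` to the close of day `t+2`. The helper name `forward_return_label` and the prices are made up for illustration and are not part of the PR.

```python
from typing import List, Optional


def forward_return_label(close: List[float]) -> List[Optional[float]]:
    """Label for day t: close[t+2] / close[t+1] - 1, or None when future prices are missing."""
    labels: List[Optional[float]] = []
    for t in range(len(close)):
        if t + 2 < len(close):
            labels.append(close[t + 2] / close[t + 1] - 1)
        else:
            labels.append(None)  # the last two days have no complete future window
    return labels


prices = [10.0, 11.0, 12.1, 12.1]  # made-up closing prices
print(forward_return_label(prices))
```

The last two entries have no label, which is presumably why the config lists `DropnaLabel` among the `learn_processors`.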

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,192 @@ | ||

| { | ||

Review comment: Why is this file necessary in this PR?

| "cells": [ | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "# Copyright (c) Microsoft Corporation.\n", | ||

| "# Licensed under the MIT License.\n", | ||

| "import qlib\n", | ||

| "import os\n", | ||

| "import random\n", | ||

| "import pandas as pd\n", | ||

| "import numpy as np\n", | ||

| "from multiprocessing import Pool\n", | ||

| "from qlib.config import REG_CN, HIGH_FREQ_CONFIG\n", | ||

| "from qlib.contrib.model.gbdt import LGBModel\n", | ||

| "from qlib.contrib.data.handler import Alpha158\n", | ||

| "from qlib.contrib.strategy.strategy import TopkDropoutStrategy\n", | ||

| "from qlib.contrib.evaluate import (\n", | ||

| " backtest as normal_backtest,\n", | ||

| " risk_analysis,\n", | ||

| ")\n", | ||

| "from qlib.utils import exists_qlib_data, init_instance_by_config\n", | ||

| "from qlib.workflow import R\n", | ||

| "from qlib.data import D\n", | ||

| "from qlib.data.filter import NameDFilter\n", | ||

| "from qlib.workflow.record_temp import SignalRecord, PortAnaRecord\n", | ||

| "from qlib.data.dataset.handler import DataHandlerLP\n", | ||

| "from qlib.utils import flatten_dict\n", | ||

| "from qlib.tests.data import GetData\n", | ||

| "import lightgbm as lgb" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "# Qlib configuration" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "QLIB_INIT_CONFIG = {**HIGH_FREQ_CONFIG}\n", | ||

| "qlib.init(**QLIB_INIT_CONFIG)\n", | ||

| "instruments = D.instruments(market='all')\n", | ||

| "random.seed(710)\n", | ||

| "instruments = D.list_instruments(instruments=instruments, freq = '1min', as_list=True)\n", | ||

| "# Randomly select instruments to boost the training efficiency\n", | ||

| "instruments = random.sample(instruments, 150)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "# train model configuration\n" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "MARKET = 'ALL'\n", | ||

| "BENCHMARK = \"SH000300\"\n", | ||

| "\n", | ||

| "start_time = \"2020-09-15 00:00:00\"\n", | ||

| "end_time = \"2021-01-18 16:00:00\"\n", | ||

| "train_end_time = \"2020-11-15 16:00:00\"\n", | ||

| "valid_start_time = \"2020-11-16 00:00:00\"\n", | ||

| "valid_end_time = \"2020-11-30 16:00:00\"\n", | ||

| "test_start_time = \"2020-12-01 00:00:00\"\n", | ||

| "\n", | ||

| "data_handler_config = {\n", | ||

| " \"start_time\": start_time,\n", | ||

| " \"end_time\": end_time,\n", | ||

| " \"fit_start_time\": start_time,\n", | ||

| " \"fit_end_time\": train_end_time,\n", | ||

| " \"freq\": \"1min\",\n", | ||

| " \"instruments\": instruments,\n", | ||

| " \"learn_processors\":[\n", | ||

| " {\"class\": \"DropnaLabel\"}\n", | ||

| " ],\n", | ||

| " \"infer_processors\": [ \n", | ||

| " {\"class\": \"RobustZScoreNorm\",\n", | ||

| " \"kwargs\": {\n", | ||

| " \"fields_group\": \"feature\",\n", | ||

| " \"clip_outlier\": True,\n", | ||

| " }},\n", | ||

| " {\"class\": \"Fillna\",\n", | ||

| " \"kwargs\": {\n", | ||

| " \"fields_group\": \"feature\",\n", | ||

| " }},],\n", | ||

| " \"label\": [\"Ref($close, -1) / $close - 1\"],\n", | ||

| "}\n", | ||

| "\n", | ||

| "\n", | ||

| "task = {\n", | ||

| " \"model\": {\n", | ||

| " \"class\": \"HF_LGBModel\",\n", | ||

| " \"module_path\": \"highfreq_gdbt_model.py\",\n", | ||

| " \"kwargs\": {\n", | ||

| " \"objective\": 'binary', \n", | ||

| " \"metric\": ['binary_logloss','auc'],\n", | ||

| " \"verbosity\": -1,\n", | ||

| " \"learning_rate\": 0.01,\n", | ||

| " \"max_depth\": 8,\n", | ||

| " \"num_leaves\": 150, \n", | ||

| " \"lambda_l1\": 1.5,\n", | ||

| " \"lambda_l2\": 1,\n", | ||

| " \"num_threads\": 20\n", | ||

| " },\n", | ||

| " },\n", | ||

| " \"dataset\": {\n", | ||

| " \"class\": \"DatasetH\",\n", | ||

| " \"module_path\": \"qlib.data.dataset\",\n", | ||

| " \"kwargs\": {\n", | ||

| " \"handler\": {\n", | ||

| " \"class\": \"Alpha158\",\n", | ||

| " \"module_path\": \"qlib.contrib.data.handler\",\n", | ||

| " \"kwargs\": data_handler_config,\n", | ||

| " },\n", | ||

| " \"segments\": {\n", | ||

| " \"train\": (start_time, train_end_time),\n", | ||

| " \"valid\": (valid_start_time, valid_end_time),\n", | ||

| " \"test\": (\n", | ||

| " test_start_time,\n", | ||

| " end_time,\n", | ||

| " ),\n", | ||

| " },\n", | ||

| " },\n", | ||

| " },\n", | ||

| "}\n", | ||

| "\n", | ||

| "provider_uri = QLIB_INIT_CONFIG.get(\"provider_uri\")\n", | ||

| "if not exists_qlib_data(provider_uri):\n", | ||

| " print(f\"Qlib data is not found in {provider_uri}\")\n", | ||

| " GetData().qlib_data(target_dir=provider_uri, interval=\"1min\", region=REG_CN)\n", | ||

| "\n", | ||

| "dataset = init_instance_by_config(task[\"dataset\"])\n", | ||

| "model = init_instance_by_config(task[\"model\"])" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "# train model and back test\n" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "# start exp to train model with signal test\n", | ||

| "with R.start(experiment_name=\"train_model\"):\n", | ||

| " R.log_params(**flatten_dict(task))\n", | ||

| " model.fit(dataset)\n", | ||

| " model.hf_signal_test(dataset, 0.1)" | ||

| ] | ||

| } | ||

| ], | ||

| "metadata": { | ||

| "kernelspec": { | ||

| "display_name": "Python [conda env:trade]", | ||

| "language": "python", | ||

| "name": "conda-env-trade-py" | ||

| }, | ||

| "language_info": { | ||

| "codemirror_mode": { | ||

| "name": "ipython", | ||

| "version": 3 | ||

| }, | ||

| "file_extension": ".py", | ||

| "mimetype": "text/x-python", | ||

| "name": "python", | ||

| "nbconvert_exporter": "python", | ||

| "pygments_lexer": "ipython3", | ||

| "version": "3.8.5" | ||

| } | ||

| }, | ||

| "nbformat": 4, | ||

| "nbformat_minor": 4 | ||

| } | ||
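The notebook splits the data into `train`, `valid`, and `test` segments by timestamp. A quick sanity check for such a split is to confirm that no two segments intersect. The sketch below is a standalone helper (the name `find_overlaps` is hypothetical) that assumes both endpoints of each segment are inclusive. That is an assumption about how the segments are sliced, not something this PR states.

```python
from datetime import datetime
from typing import Dict, List, Tuple

FMT = "%Y-%m-%d %H:%M:%S"


def find_overlaps(segments: Dict[str, Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return pairs of segment names whose inclusive [start, end] ranges intersect."""
    parsed = {
        name: (datetime.strptime(start, FMT), datetime.strptime(end, FMT))
        for name, (start, end) in segments.items()
    }
    names = list(parsed)
    overlaps = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            (a_start, a_end), (b_start, b_end) = parsed[a], parsed[b]
            # Two inclusive ranges intersect when each starts before the other ends.
            if a_start <= b_end and b_start <= a_end:
                overlaps.append((a, b))
    return overlaps


segments = {
    "train": ("2020-09-15 00:00:00", "2020-11-15 16:00:00"),
    "valid": ("2020-11-16 00:00:00", "2020-11-30 16:00:00"),
    "test": ("2020-12-01 00:00:00", "2021-01-18 16:00:00"),
}
print(find_overlaps(segments))
```

With the timestamps defined in the notebook, the three segments are disjoint. Two segments that share an endpoint would be reported as overlapping under the inclusive assumption.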

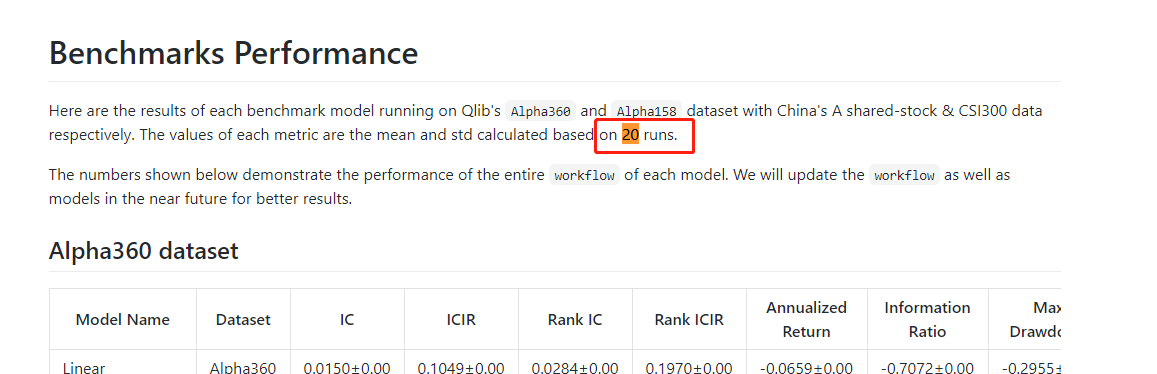

How many experiments were run before you got this result?

I ran it 5 times. I will run the model again and update it.
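One way to make a benchmark number from repeated runs easier to review is to report it as mean and sample standard deviation together with the run count. The sketch below shows that aggregation with the standard library only. The helper name `summarize_runs` and the metric values are hypothetical placeholders, not results from this PR.

```python
from statistics import mean, stdev
from typing import Sequence


def summarize_runs(values: Sequence[float]) -> str:
    """Format a metric collected over several runs as 'mean±std (n=runs)'."""
    if len(values) < 2:
        raise ValueError("need at least two runs to estimate a standard deviation")
    return f"{mean(values):.4f}±{stdev(values):.4f} (n={len(values)})"


# Hypothetical metric values from five runs with different seeds.
ic_per_run = [0.020, 0.025, 0.018, 0.022, 0.021]
print(summarize_runs(ic_per_run))
```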