Startup performance regression during June #101685

Comments


Well, we always did load One idea that circulated was to concatenate the scripts we need on startup into one before minification and thus only have one script tag instead of 4. This would mean we need a


Also adding @deepak1556 for more insights, in case he is aware of script loading being slow compared to node.js require.


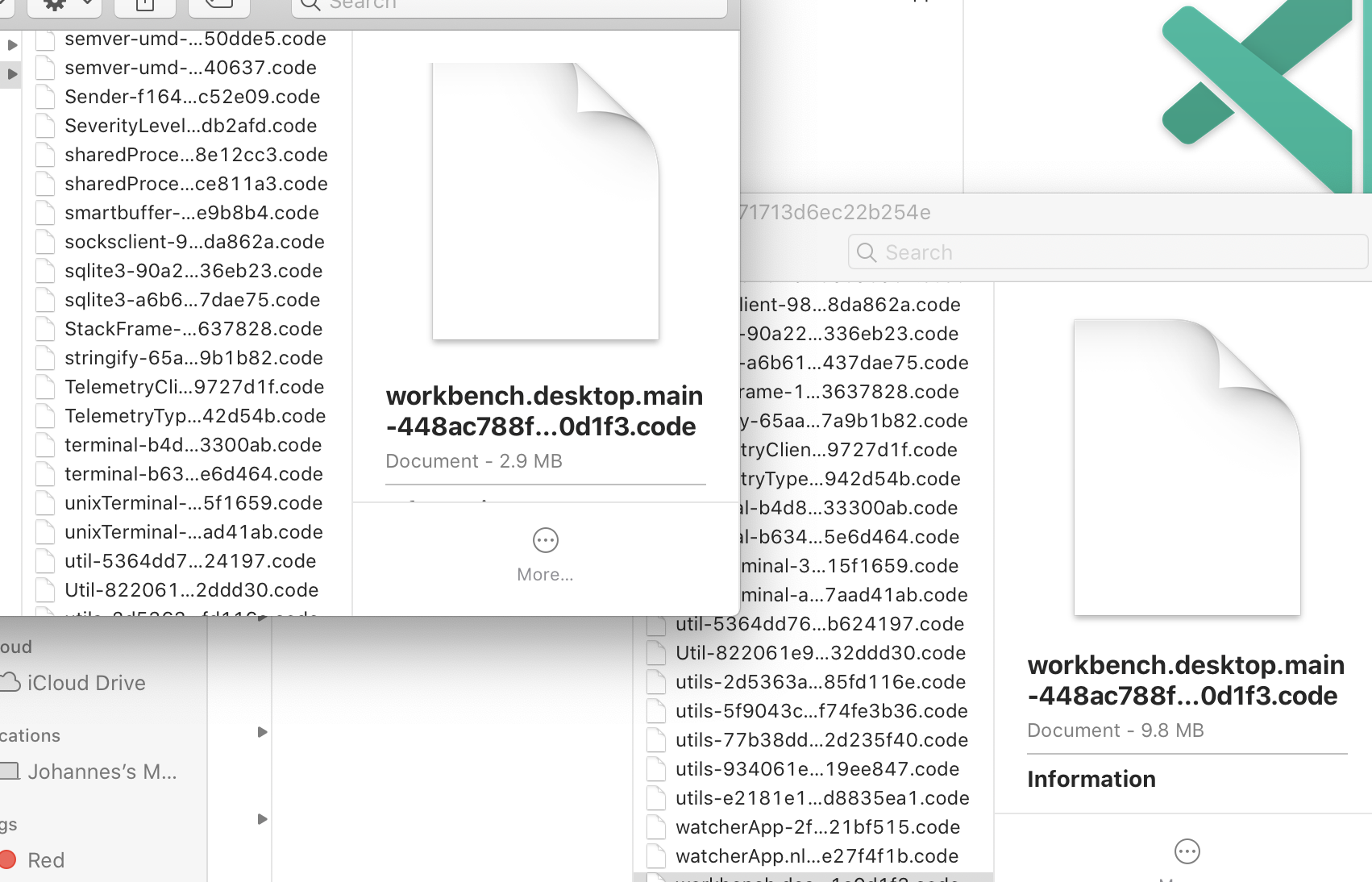

There seems to be a severe problem with cached data. We seem to generate a lot less with recent insiders, and that has a severe impact on code loading. With The theory is that we generate cached data in multiple rounds. The more of the code has been executed, the more cached data is created. This process stops once no increase in cached data is detected. Something there must have stopped working.
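To make the "multiple rounds" idea concrete, here is a minimal sketch of such a loop. It is not the actual implementation: `generateInRounds`, `ProduceCachedData`, and the `maxRounds` limit are hypothetical names, and the producer is simulated. In Node.js a real producer could, for example, return the buffer from `vm.Script`'s `createCachedData()`.

```typescript
// Hypothetical sketch: keep regenerating cached data until its size stops growing.
// `ProduceCachedData` stands in for whatever actually creates the cache.
type ProduceCachedData = () => Uint8Array;

interface RoundsResult {
  data: Uint8Array; // largest cached data seen
  rounds: number;   // how many times the producer was called
}

function generateInRounds(produce: ProduceCachedData, maxRounds = 10): RoundsResult {
  let best = produce();
  let rounds = 1;
  while (rounds < maxRounds) {
    const next = produce();
    rounds++;
    if (next.length <= best.length) {
      break; // no increase detected, so stop
    }
    best = next;
  }
  return { data: best, rounds };
}

// Simulated producer: the cache grows while more code gets executed, then plateaus.
const sizes = [100, 250, 400, 400, 400];
let call = 0;
const fakeProduce: ProduceCachedData = () =>
  new Uint8Array(sizes[Math.min(call++, sizes.length - 1)] ?? 0);

const result = generateInRounds(fakeProduce);
console.log(result.rounds, result.data.length);
```

If the producer stopped reporting growth too early (say, it returned the same size on the second call), a loop like this would settle on a much smaller cache, which would match the symptom described above.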


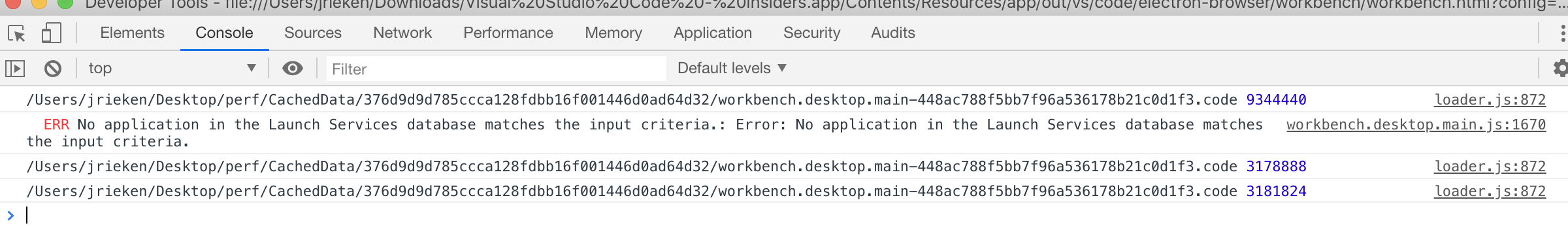

Ok, turns out that subsequent calls to However, this isn't a regression. I can see the same behaviour with the insider versions from above as well as with stable. That doesn't make this less severe tho...

The Windows perf bot shows a slight regression during the June iteration. For instance, version 376d9d9d785ccca128fdbb16f001446d0ad64d32 is at its fastest 1862ms, whereas version 8cd3fe080bee38d28ba01d0d71713d6ec22b254e is faster with 1666ms. There is surely some 10% fluctuation, but there might be more to it. I have measured both versions by hand and code loading shows a slight slowdown (~50ms), tho code size only increased a little (~50kb).

One theory is that we now load all "init scripts" via script tags and not via nodejs anymore, and the network tab shows some request stalling. We should investigate and understand that while we also drill into other potential causes.
