Deep learning for sign language recognition using the Sign Language MNIST dataset

This is my own exercise, based on Coursera's course Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization, which I have passed (https://www.coursera.org/account/accomplishments/records/KDWADX7V43SS).

I strongly believe that the best way to learn something is to put it into practice, so I did this exercise to solidify my knowledge.

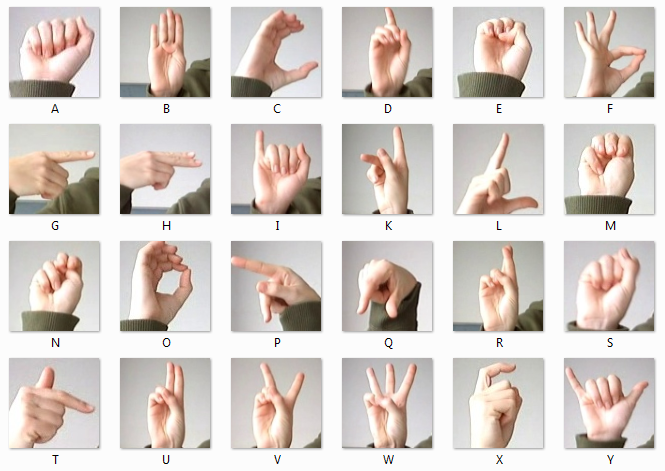

In the course, the programming assignment was to implement a deep neural network model to recognize the digits 0 to 5 in sign language. In this exercise, I adapted the model to recognize 24 classes of letters in American Sign Language: every letter except J and Z, which involve motion and therefore cannot be captured in a single static image.

The dataset is obtained from Kaggle (https://www.kaggle.com/datamunge/sign-language-mnist). The training set has 27,455 examples and the test set has 7,172 examples. Each example is a 28x28 grayscale image flattened into a 784-pixel vector, with pixel values between 0 and 255.
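Preparing the raw data for the network might look like the minimal NumPy sketch below. It assumes the Kaggle CSV layout (a `label` column followed by 784 pixel columns) and the Kaggle labelling in which 0-25 map to A-Z, with 9 (J) and 25 (Z) never appearing. It scales pixels to [0, 1], stores one example per column (the (features, examples) convention used in the course), and remaps the 24 remaining labels to contiguous class indices before one-hot encoding. The helper name `preprocess` and the label remapping are illustrative assumptions, not necessarily what this project does.

```python
import numpy as np

# Assumption: Kaggle labels use 0-25 for A-Z, and 9 (J) and 25 (Z) never occur.
KAGGLE_LABELS = [k for k in range(26) if k not in (9, 25)]  # the 24 static letters
LABEL_TO_CLASS = {label: i for i, label in enumerate(KAGGLE_LABELS)}


def preprocess(labels, pixels):
    """Hypothetical helper: convert raw rows into course-style column arrays.

    labels: sequence of m integer Kaggle labels
    pixels: array-like of shape (m, 784) with grayscale values 0-255
    Returns X of shape (784, m) scaled to [0, 1] and one-hot Y of shape (24, m).
    """
    X = np.asarray(pixels, dtype=np.float32).T / 255.0  # one example per column
    classes = np.array([LABEL_TO_CLASS[int(lab)] for lab in labels])
    Y = np.zeros((len(KAGGLE_LABELS), len(classes)), dtype=np.float32)
    Y[classes, np.arange(len(classes))] = 1.0  # set one row per column to 1
    return X, Y
```

With pandas, one could read a CSV via `df = pd.read_csv(path)` (where `path` is a placeholder) and call `preprocess(df["label"].values, df.drop(columns="label").values)`. Under this mapping, label 10 (K) becomes class 9 and label 24 (Y) becomes class 23.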

[Figure: an illustration of the American Sign Language letters (courtesy of Kaggle)]

[Figure: grayscale images with pixel values from 0 to 255]

[Figure: one example from the Sign Language MNIST dataset]

In this exercise, I keep the same network architecture as the one used in the course: LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SOFTMAX.

So there are two hidden layers and one output layer.

[Figure: the network architecture]
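To make the LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SOFTMAX chain concrete, here is a NumPy sketch of the forward pass (the project itself uses TensorFlow 1.x). The hidden layer sizes of 25 and 12 units are an assumption borrowed from the course assignment; the 784 inputs and 24 outputs follow from the dataset. `init_params` and `forward` are hypothetical names, and the Xavier-style scaling is an illustrative choice.

```python
import numpy as np

# Assumed layer sizes: 784 inputs, hidden layers of 25 and 12 units,
# and 24 softmax outputs (one per letter).
LAYER_SIZES = [784, 25, 12, 24]


def init_params(seed=1):
    """Random weights scaled by sqrt(1 / fan_in), zero biases."""
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(LAYER_SIZES)):
        n_in, n_out = LAYER_SIZES[l - 1], LAYER_SIZES[l]
        params[f"W{l}"] = rng.standard_normal((n_out, n_in)) * np.sqrt(1.0 / n_in)
        params[f"b{l}"] = np.zeros((n_out, 1))
    return params


def softmax(Z):
    """Column-wise softmax; subtracting the column max avoids overflow in exp."""
    Z = Z - Z.max(axis=0, keepdims=True)
    expZ = np.exp(Z)
    return expZ / expZ.sum(axis=0, keepdims=True)


def forward(X, params):
    """LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SOFTMAX on X of shape (784, m)."""
    A1 = np.maximum(0, params["W1"] @ X + params["b1"])   # (25, m)
    A2 = np.maximum(0, params["W2"] @ A1 + params["b2"])  # (12, m)
    Z3 = params["W3"] @ A2 + params["b3"]                 # (24, m) logits
    return softmax(Z3)  # each column is a probability distribution over 24 letters
```

In TensorFlow it is common to stop at the logits `Z3` and let `tf.nn.softmax_cross_entropy_with_logits` apply the softmax inside the cost, which is numerically more stable than computing the softmax and the log separately.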

The neural network is implemented in Python with TensorFlow 1.x.

I keep all the default hyperparameter values from the course (learning_rate = 0.0001, num_epochs = 1500, minibatch_size = 32, etc.).
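With minibatch_size = 32, each epoch shuffles the training set and walks through it in chunks of 32 examples. The sketch below shows that partitioning in NumPy; `make_minibatches` is a hypothetical helper written for illustration, not the course's own code. For the 27,455 training examples it yields 858 mini-batches per epoch (857 of size 32 and a final one of 31), so 1,500 epochs amount to 1,287,000 parameter updates.

```python
import math

import numpy as np


def make_minibatches(X, Y, minibatch_size=32, seed=0):
    """Shuffle the columns of X (n_x, m) and Y (n_y, m) together, then split them.

    Returns a list of (X_batch, Y_batch) pairs; the last batch holds the
    remainder when m is not a multiple of minibatch_size.
    """
    m = X.shape[1]
    perm = np.random.default_rng(seed).permutation(m)  # same order for X and Y
    X_shuf, Y_shuf = X[:, perm], Y[:, perm]
    batches = []
    for k in range(math.ceil(m / minibatch_size)):
        cols = slice(k * minibatch_size, (k + 1) * minibatch_size)
        batches.append((X_shuf[:, cols], Y_shuf[:, cols]))
    return batches
```

Reshuffling with a different `seed` each epoch is the usual choice, so the model does not see the same batches in the same order every time.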

This is the result:

Train Accuracy: 1.0

Test Accuracy: 0.61503065
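Accuracy here is presumably the fraction of examples whose highest-probability class matches the true label. A one-line NumPy version is sketched below, assuming predictions and one-hot labels are stored with one example per column; the function name `accuracy` is illustrative. By that measure, 0.61503065 on the 7,172 test images corresponds to about 4,411 correct predictions.

```python
import numpy as np


def accuracy(probs, Y):
    """Fraction of columns where argmax(probs) equals argmax of the one-hot label Y."""
    return float(np.mean(np.argmax(probs, axis=0) == np.argmax(Y, axis=0)))
```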

So you can see that the model is clearly overfitting: it fits the training set perfectly but classifies only about 61.5% of the test images correctly. Adding regularization methods such as L2 regularization or dropout can help reduce overfitting, but that's out of the scope of this exercise. I've also built another neural network for this task, this time a Convolutional Neural Network (CNN), which you can find here: https://github.com/minhthangdang/SignLanguageRecognitionCNN
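For reference, the two regularization methods mentioned above can be sketched in NumPy as follows; neither is used in this project. `l2_cost` adds the penalty (lambd / (2m)) * (sum of squared entries of every weight matrix) to the cross-entropy cost, leaving the biases out as is usual. `inverted_dropout` zeroes each activation with probability 1 - keep_prob during training and rescales the survivors by 1 / keep_prob so their expected value is unchanged. The function names and the `lambd`/`keep_prob` parameters are illustrative assumptions.

```python
import numpy as np


def l2_cost(cross_entropy, params, lambd, m):
    """Cross-entropy cost plus (lambd / (2m)) * sum of squared weights (biases excluded)."""
    l2 = sum(np.sum(np.square(W)) for name, W in params.items() if name.startswith("W"))
    return cross_entropy + lambd / (2 * m) * l2


def inverted_dropout(A, keep_prob, rng):
    """Randomly drop activations during training and rescale the kept ones."""
    mask = rng.random(A.shape) < keep_prob  # True with probability keep_prob
    return A * mask / keep_prob
```

Dropout is applied only during training; at test time the activations are used as-is, which is why the rescaling happens in the training pass.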

This is obviously a simple neural network, but it's a great introduction to TensorFlow. I've also learned many other things from the Coursera course, thanks to the brilliant teaching of Professor Andrew Ng.

Should you have any questions, please contact me via LinkedIn: https://www.linkedin.com/in/minh-thang-dang/