SWARM IP overlapping during a new container creation in overlay network #41576

Comments


Well, that's a fancy visualization for

Do you mean you created a swarm global service attached to all pre-created networks, to avoid IP duplication caused by the node LB not being cleaned up in some corner cases? I have an enhanced |

Yeah, I wrote it to monitor changes in bitseq painlessly. It's a bash script that prints the state based on the last log message: https://gist.github.com/IMMORTALxJO/22784991ad3011f6ac0fc0eb687faeb0

Yes, I created swarm global services with all networks attached as an easy workaround, both to avoid the "Network not found" issue I periodically hit during deployments and to make the IP overlap mentioned in #40989 impossible.

Nice improvements, btw, I've already checked node attachments in raft via |


@IMMORTALxJO

Do you mean the node LB IP in node attachments is shown as a released (unallocated) IP in libnetwork bitseq?

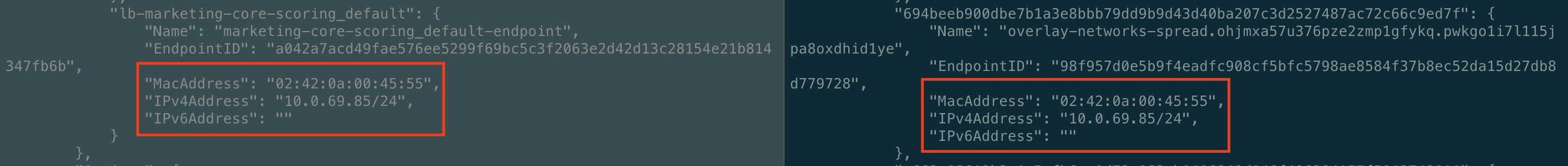

Yes, that's what I meant. For example:

|

I'm hitting a similar issue with overlapping IPs for a service and an LB. Can you share your script to fix and recreate the LB endpoints?

In your case, I guess if you run |

Description

Good day!

My Swarm cluster gets IP overlaps when allocating addresses for new containers. The worst case is when a container takes the address of an LB endpoint, which makes the container unreachable from other containers in the network.

I've investigated the issue for a while and found that my managers have a corrupted libnetwork bitseq. The cluster thinks that 1-2 addresses per network are unallocated, but they should be marked as allocated since they are attached to LB endpoints.
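To illustrate the failure mode described above, here is a minimal sketch of a toy allocation bitmap. It is not libnetwork's bitseq implementation; the class name `ToyBitmapAllocator`, the subnet `10.0.5.0/29`, and the way the corruption is simulated are all assumptions made for illustration.

```python
import ipaddress


class ToyBitmapAllocator:
    """Toy per-subnet allocation bitmap (hypothetical, not libnetwork's bitseq)."""

    def __init__(self, cidr):
        self.hosts = list(ipaddress.ip_network(cidr).hosts())
        self.used = [False] * len(self.hosts)

    def allocate(self):
        # Hand out the first address whose bit is clear.
        for i, taken in enumerate(self.used):
            if not taken:
                self.used[i] = True
                return self.hosts[i]
        raise RuntimeError("subnet exhausted")

    def release(self, ip):
        # Clear the bit for the given address.
        index = self.hosts.index(ipaddress.ip_address(str(ip)))
        self.used[index] = False


alloc = ToyBitmapAllocator("10.0.5.0/29")  # hypothetical overlay subnet
gateway = alloc.allocate()
lb_endpoint = alloc.allocate()

# Simulated corruption: the bit is cleared although the LB endpoint
# still holds the address.
alloc.release(lb_endpoint)

# The next allocation reuses the LB endpoint's address.
new_container = alloc.allocate()
print(lb_endpoint, new_container)
```

In this toy model the allocator trusts the bitmap alone, so once a bit is wrongly cleared nothing stops the address from being handed out a second time.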

What's the right and safe way to fix a corrupted bitseq? And how can the corruption be avoided in the future?

Describe the results you received:

Sometimes a new container receives an IP address that has already been allocated to another swarm component (usually an overlay LB endpoint). That makes the new container unreachable from other containers in the network.
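A rough sketch of how such overlaps could be spotted once the endpoint addresses of a network have been collected. The function name `find_overlaps`, the endpoint names, and the addresses are hypothetical; how the (owner, address) pairs are gathered is left open here, for example from the IPv4Address fields reported by docker network inspect on each node.

```python
from collections import defaultdict


def find_overlaps(endpoints):
    """Return {ip: [owners]} for every IP claimed by more than one owner.

    endpoints: iterable of (owner_name, ip) pairs; the ip may carry a
    prefix length such as "10.0.5.2/24", which is ignored.
    """
    owners_by_ip = defaultdict(list)
    for owner, ip in endpoints:
        owners_by_ip[ip.split("/")[0]].append(owner)
    return {ip: owners for ip, owners in owners_by_ip.items() if len(owners) > 1}


# Hypothetical sample data: one LB endpoint and two service tasks.
sample = [
    ("lb-mynet", "10.0.5.2/24"),
    ("web.1", "10.0.5.3/24"),
    ("web.2", "10.0.5.2/24"),
]
print(find_overlaps(sample))
```

This only reports symptoms (two owners on one address); it does not repair the allocator state on the managers.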

Describe the results you expected:

The cluster has correct information about allocated IP addresses, so IP overlap is not possible.

Output of docker version:

Output of docker info:

Additional environment details (AWS, VirtualBox, physical, etc.):

Physical (3 managers + 17 workers)
