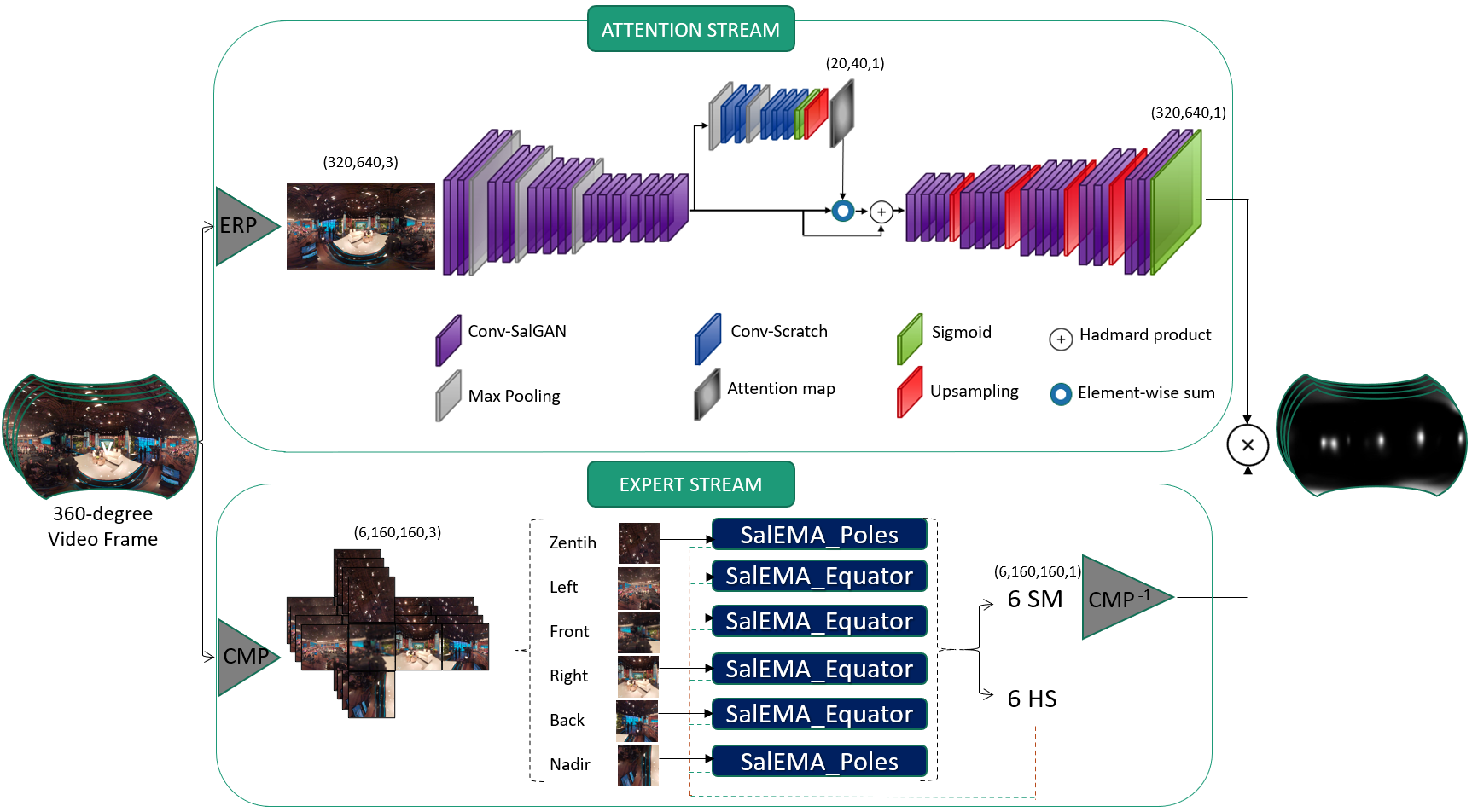

The spherical domain representation of 360° video/image presents many challenges related to the storage, processing, transmission and rendering of omnidirectional videos (ODV). Models of human visual attention can be used so that only a single viewport is rendered at a time, which is important when developing systems that allow users to explore ODV with head-mounted displays (HMD). Accordingly, researchers have proposed various saliency models for 360° video/images. This paper proposes ATSal, a novel attention-based (head-eye) saliency model for 360° videos. The attention mechanism explicitly encodes global static visual attention, allowing expert models to focus on learning the saliency on local patches throughout consecutive frames. We compare the proposed approach to other state-of-the-art saliency models on two datasets: Salient360! and VR-EyeTracking. Experimental results on over 80 ODV videos (75K+ frames) show that the proposed method outperforms the existing state-of-the-art.

Find the extended pre-print version of our work on arXiv.

Saliency prediction is the task of modeling the distribution of human gaze fixations on static and dynamic omnidirectional scenes. The predicted saliency map is a heatmap of probabilities, where each value indicates how likely the corresponding pixel is to attract human attention, so it can be used to prioritize information across space and time in videos. The task benefits a variety of computer vision applications, including image and video compression, image segmentation, and object recognition.
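To make the "heatmap of probabilities" idea concrete, here is a minimal sketch of turning a raw score map into a probability map that sums to one. The function name `to_probability_map`, the min-shift normalization, and the toy 4x8 map are illustrative assumptions and are not taken from the ATSal code.

```python
import numpy as np


def to_probability_map(raw, eps=1e-12):
    """Normalize a raw 2D score map so that its values sum to 1.

    Hypothetical helper for illustration only; not part of the ATSal code.
    """
    raw = np.asarray(raw, dtype=np.float64)
    shifted = raw - raw.min()      # make every value non-negative
    total = shifted.sum()
    if total < eps:                # flat map: fall back to a uniform distribution
        return np.full(raw.shape, 1.0 / raw.size)
    return shifted / total


# Toy stand-in for one equirectangular frame (height 4, width 8).
rng = np.random.default_rng(0)
raw_map = rng.random((4, 8))
prob_map = to_probability_map(raw_map)

# The most salient pixel is the one with the highest probability.
row, col = np.unravel_index(np.argmax(prob_map), prob_map.shape)
print(f"most salient pixel: row={row}, col={col}")
```

Each entry of `prob_map` can then be read as the relative likelihood that the corresponding pixel attracts attention.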

ATSal attention model initialization:

ATSal attention model trained on Salient360! and Sitzmann image dataset:

ATSal attention model trained on Salient360! and VR-EyeTracking video dataset:

ATSal expert models trained on Salient360! and VR-EyeTracking video dataset:

Saliency prediction studies on 360° images are still limited. The absence of common head and eye-gaze datasets for 360° content, and the difficulty of reproducing them compared with publicly available 2D stimuli datasets, may be among the reasons that have hindered progress in developing computational saliency models on this front. We therefore provide a reproduced version of the VR-EyeTracking dataset with 215 videos and an augmented version of the Sitzmann_TVCG_VR dataset with 440 images.

- Link to the augmented Salient360! and Sitzmann image datasets: augmented-static-dataset
- Link to the reproduced VR-EyeTracking dataset: VR-EYETRACKING

To test a pre-trained model on video data and produce saliency maps, execute the following command:

    cd test/weight
    weight.sh
    cd ..
    python test -'path to your video dataset' -'output path'

Here we compare the attention stream, the expert stream, and our final model, ATSal. The attention stream overestimates salient areas, since it predicts the static global attention. The expert models predict dynamic saliency on each viewport independently, based on its content and location, but still introduce artifacts at viewport boundaries and ignore the global attention statistics. The fusion of both streams (the ATSal model) better captures how salient information is distributed over space and time.
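The following sketch illustrates why fusing the two streams can suppress errors that each stream makes on its own. It assumes that both predictions are already available as maps of the same shape and that they are combined by element-wise multiplication followed by rescaling. This README does not state the fusion operator, so that choice, the function name `fuse_streams`, and the toy values are assumptions for illustration only.

```python
import numpy as np


def fuse_streams(attention_map, expert_map, eps=1e-12):
    """Combine a global attention map with a local expert map.

    Assumption: fusion is an element-wise product, rescaled so the peak is 1.
    Hypothetical helper; not the repository's actual implementation.
    """
    attention_map = np.asarray(attention_map, dtype=np.float64)
    expert_map = np.asarray(expert_map, dtype=np.float64)
    if attention_map.shape != expert_map.shape:
        raise ValueError("both maps must have the same shape")
    fused = attention_map * expert_map
    peak = fused.max()
    return fused / peak if peak > eps else fused


# Toy maps: the attention stream is broad (overestimates the salient area),
# while the expert stream is sharp but has a spurious response near a border.
attention = np.array([[0.2, 0.8, 0.8, 0.2],
                      [0.1, 0.9, 0.9, 0.1]])
expert = np.array([[0.0, 1.0, 0.0, 0.0],
                   [0.0, 0.5, 0.0, 0.6]])

fused = fuse_streams(attention, expert)
print(np.round(fused, 3))
```

In this toy case the spurious expert response in the last column is damped because the global attention there is low, while the broad attention region is narrowed to the locations the expert stream also supports.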

For questions, bug reports, and suggestions about this work, please create an issue in this repository or send an email to mtliba@inttic.dz.
