Code for the National Data Science Bowl at Kaggle. Ranked 10th/1049.

An ensemble of deep CNNs trained with real-time data augmentation.

| Preprocessing |

centering, convert to a square image with padding, convert to a negative.

|

||||||||||||||||

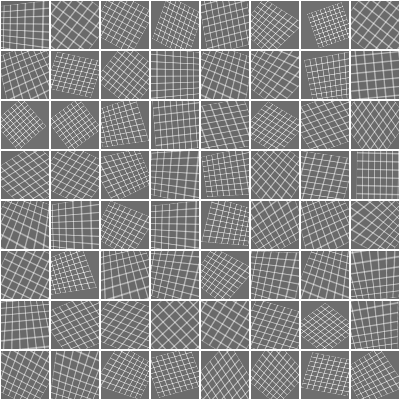

| Data augmentation |

real-time data agumentation (apply the random transformation each minibatchs).

transformation method includes translation, scaling, rotation, perspective cropping and contrast scaling.

|

||||||||||||||||

| Neural Network Architecture | Three CNN architectures for different rescaling inputs. cnn_96x96, cnn_72x72, cnn_48x48 | ||||||||||||||||

| Normalization | Global Contrast Normalization (GCN) | ||||||||||||||||

| Optimization method | minibatch-SGD with Nesterov momentum. | ||||||||||||||||

| Results |

|
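For reference, the textbook forms of the normalization and optimization methods named in the table are sketched below. The constants $s$, $\lambda$, $\epsilon$ and the hyperparameters $\mu$ (momentum) and $\eta$ (learning rate) are generic placeholders: this README does not give the values used, and the code may implement an algebraically equivalent variant.

```latex
% Global contrast normalization of one image x with N pixels
\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad
x'_i = s\,\frac{x_i - \bar{x}}{\max\!\left(\epsilon,\ \sqrt{\lambda + \frac{1}{N}\sum_{j=1}^{N}(x_j - \bar{x})^2}\right)}

% Minibatch SGD with Nesterov momentum: the gradient of the minibatch
% loss L is evaluated at the look-ahead point \theta_t + \mu v_t
v_{t+1} = \mu\, v_t - \eta\, \nabla L(\theta_t + \mu\, v_t), \qquad
\theta_{t+1} = \theta_t + v_{t+1}
```

With $s = 1$, $\lambda = 0$ and a tiny $\epsilon$, GCN reduces to per-image standardization, $(x_i - \bar{x}) / \sigma_x$.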

Requirements:

- Ubuntu 14.04

- 16GB RAM

- GPU & CUDA (I used an EC2 g2.2xlarge instance)

- Torch7

- NVIDIA cuDNN

- cudnn.torch

Install CUDA, Torch7, NVIDIA cuDNN, and cudnn.torch, then test the setup:

```
th cuda_test.lua
```

If this test fails, check your Torch7/CUDA environment.

Place the data files in a subfolder named ./data:

```
$ ls ./data
test train train.txt test.txt classess.txt
```
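A quick pre-flight check can catch a misplaced data folder before the conversion step. The sketch below is a hypothetical helper, not part of the repository; the function name `check_data_dir` is made up, and the expected entries are copied verbatim from the `ls ./data` listing above.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of the repository): report any entry
# from the `ls ./data` listing in this README that is missing from the data folder.
set -u

# Return 0 if every expected entry exists in DIR, 1 otherwise.
check_data_dir() {
  local dir="${1:-./data}" missing=0 entry
  # Names copied verbatim from the README's listing.
  for entry in test train train.txt test.txt classess.txt; do
    if [ ! -e "$dir/$entry" ]; then
      echo "missing: $dir/$entry" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Check ./data (or the folder given as the first argument).
if check_data_dir "${1:-./data}"; then
  echo "data folder looks complete; next step: th convert_data.lua"
else
  echo "fix the data folder before running th convert_data.lua" >&2
fi
```

The check only tests that each name exists; it does not validate file contents.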

Convert the data:

```
th convert_data.lua
```

Train and validate a single cnn_48x48 model, then check that the model file was written:

```
th train.lua -model 48 -seed 101
ls -la models/cnn*.t7
```

Make a submission file:

```
th predict.lua -model 48 -seed 101
ls -la models/submission*.txt
```

To use the cnn_72x72 model:

```
th train.lua -model 72 -seed 101
th predict.lua -model 72 -seed 101
```

To use the cnn_96x96 model:

```
th train.lua -model 96 -seed 101
th predict.lua -model 96 -seed 101
```
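The three single-model runs above differ only in the `-model` value, so they can be looped. A minimal sketch, assuming bash; the wrapper and its `DRY_RUN` and `SEED` environment variables are hypothetical, and by default it only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of the repository): for one seed, train and
# then predict with each of the three architectures, in the order shown above.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 executes them.
set -euo pipefail

SEED="${SEED:-101}"

# Print the command in dry-run mode, otherwise run it.
run_or_print() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

for model in 48 72 96; do
  run_or_print th train.lua -model "$model" -seed "$SEED"
  run_or_print th predict.lua -model "$model" -seed "$SEED"
done
```

For example, `DRY_RUN=0 SEED=102 bash run_single_seed.sh` (a made-up file name) would run all six steps for seed 102. Training runs before prediction for each architecture because `predict.lua` needs the trained model.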

Training the full ensemble (3 architectures × 8 seeds = 24 models) is very heavy. I used 20 g2.2xlarge instances for this task, and it took 4 days.

(Helper tools can be found in the ./appendix folder.)

```
th train.lua -model 48 -seed 101
th train.lua -model 48 -seed 102
th train.lua -model 48 -seed 103
th train.lua -model 48 -seed 104
th train.lua -model 48 -seed 105
th train.lua -model 48 -seed 106
th train.lua -model 48 -seed 107
th train.lua -model 48 -seed 108
th train.lua -model 72 -seed 101
th train.lua -model 72 -seed 102
th train.lua -model 72 -seed 103
th train.lua -model 72 -seed 104
th train.lua -model 72 -seed 105
th train.lua -model 72 -seed 106
th train.lua -model 72 -seed 107
th train.lua -model 72 -seed 108
th train.lua -model 96 -seed 101
th train.lua -model 96 -seed 102
th train.lua -model 96 -seed 103
th train.lua -model 96 -seed 104
th train.lua -model 96 -seed 105
th train.lua -model 96 -seed 106
th train.lua -model 96 -seed 107
th train.lua -model 96 -seed 108
```
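The repository's own helpers in ./appendix are not described in this README. As an independent, hypothetical sketch of how the 24 jobs could be spread over many machines, each job can be assigned round-robin by its index, so every machine selects its share from `WORKER_ID` and `NUM_WORKERS` (both made-up environment variables).

```shell
#!/usr/bin/env bash
# Hypothetical sketch, not the ./appendix helper: split the 24 ensemble jobs
# (3 architectures x 8 seeds) across NUM_WORKERS machines, round-robin.
# Each machine runs this with its own WORKER_ID in 0..NUM_WORKERS-1.
set -euo pipefail

SCRIPT="${SCRIPT:-train.lua}"      # or predict.lua for the prediction pass
WORKER_ID="${WORKER_ID:-0}"
NUM_WORKERS="${NUM_WORKERS:-20}"

# Print the commands for one script that belong to one worker.
jobs_for_worker() {
  local script="$1" worker="$2" workers="$3" index=0 model seed
  for model in 48 72 96; do
    for seed in $(seq 101 108); do
      if [ $((index % workers)) -eq "$worker" ]; then
        echo "th $script -model $model -seed $seed"
      fi
      index=$((index + 1))
    done
  done
}

# Dry run by default: only print this worker's share. DRY_RUN=0 executes it.
if [ "${DRY_RUN:-1}" = "1" ]; then
  jobs_for_worker "$SCRIPT" "$WORKER_ID" "$NUM_WORKERS"
else
  jobs_for_worker "$SCRIPT" "$WORKER_ID" "$NUM_WORKERS" | while read -r cmd; do
    $cmd < /dev/null   # keep th from reading the job list on stdin
  done
fi
```

Because the assignment depends only on the job index, running it again with `SCRIPT=predict.lua` and the same `WORKER_ID` and `NUM_WORKERS` on the same machine predicts with the models that machine trained. The prediction files still have to be collected on one machine before the ensemble step.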

Then run prediction with each trained model:

```
th predict.lua -model 48 -seed 101
th predict.lua -model 48 -seed 102
th predict.lua -model 48 -seed 103
th predict.lua -model 48 -seed 104
th predict.lua -model 48 -seed 105
th predict.lua -model 48 -seed 106
th predict.lua -model 48 -seed 107
th predict.lua -model 48 -seed 108
th predict.lua -model 72 -seed 101
th predict.lua -model 72 -seed 102
th predict.lua -model 72 -seed 103
th predict.lua -model 72 -seed 104
th predict.lua -model 72 -seed 105
th predict.lua -model 72 -seed 106
th predict.lua -model 72 -seed 107
th predict.lua -model 72 -seed 108
th predict.lua -model 96 -seed 101
th predict.lua -model 96 -seed 102
th predict.lua -model 96 -seed 103
th predict.lua -model 96 -seed 104
th predict.lua -model 96 -seed 105
th predict.lua -model 96 -seed 106
th predict.lua -model 96 -seed 107
th predict.lua -model 96 -seed 108
```

Finally, combine the predictions into a single submission file:

```
th ensemble.lua > submission.txt
```
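This README does not say how `ensemble.lua` merges the 24 prediction files. Purely as an assumption, one common scheme is to average the per-class probabilities of the $M$ models for each test image $x$ and class $c$:

```latex
\hat{p}(c \mid x) = \frac{1}{M}\sum_{m=1}^{M} p_m(c \mid x), \qquad M = 3 \times 8 = 24
```

Since each $p_m(\cdot \mid x)$ sums to 1 over the classes, the uniform average does too, so every row of the combined submission remains a valid probability distribution.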