1. Introduction

4. Results

5. Demo

6. References

- AutoML aims to enable developers with very little ML experience to make use of ML models and techniques.

- It aims to automate the end-to-end ML process in order to produce simple solutions and create them faster.

- In some cases, the automatically discovered models even outperform manually fine-tuned models.

- AutoML covers almost every stage of building a machine learning pipeline, from collecting the dataset to deploying the final model.

- Neural Architecture Search (NAS) is a subfield of AutoML that focuses on automatically designing powerful neural architectures for a given problem formulation, subject to one or more objectives or constraints (e.g., performance, latency) [1].

- Motivation:

  - Human-designed architectures are time-consuming to develop and require specialized expert knowledge.

  - Architectures found by NAS have achieved superior performance on various tasks.

  - Higher performance often comes with higher complexity.

- In this work, we use a multi-objective evolutionary algorithm (MOEA) to treat NAS as a multi-objective optimization problem and find an optimal trade-off front between performance and complexity.

- We also address the computational bottleneck of the architecture performance-estimation phase, using two objectives:

  - Validation accuracy at epoch 12 (early stopping, instead of epoch 200)

  - The number of floating-point operations (FLOPs)
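Treating NAS as a bi-objective problem means candidates are compared by Pareto dominance rather than by a single score. The minimal sketch below assumes each architecture is summarized by a hypothetical `(validation_accuracy, flops)` pair, with accuracy maximized and FLOPs minimized, and groups a population into Pareto fronts. It uses simple repeated peeling of the non-dominated set, not the faster bookkeeping of NSGA-II's fast non-dominated sort; the population values are made up.

```python
from typing import List, Sequence, Tuple

# A candidate architecture summarized as (validation_accuracy, flops).
# Accuracy is maximized, FLOPs are minimized. Values below are made up.
Objectives = Tuple[float, float]


def dominates(a: Objectives, b: Objectives) -> bool:
    """True if a is no worse than b on both objectives and strictly better on one."""
    acc_a, flops_a = a
    acc_b, flops_b = b
    no_worse = acc_a >= acc_b and flops_a <= flops_b
    strictly_better = acc_a > acc_b or flops_a < flops_b
    return no_worse and strictly_better


def non_dominated_sort(pop: Sequence[Objectives]) -> List[List[int]]:
    """Group indices into Pareto fronts (rank 0 first) by repeatedly peeling
    off the non-dominated set. Simpler, but slower, than NSGA-II's
    fast non-dominated sorting."""
    remaining = list(range(len(pop)))
    fronts: List[List[int]] = []
    while remaining:
        front = [i for i in remaining
                 if not any(dominates(pop[j], pop[i]) for j in remaining)]
        fronts.append(front)
        remaining = [i for i in remaining if i not in front]
    return fronts


population = [(93.0, 110.0), (91.5, 40.0), (90.0, 60.0), (88.0, 10.0)]
print(non_dominated_sort(population))  # → [[0, 1, 3], [2]]
```

Candidate 2 is dropped to the second front because candidate 1 is both more accurate and cheaper; the other three each win on at least one objective, so they form the trade-off front.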

- The experiment was repeated for 21 runs.

- NSGA-II [2] is applied with a population size of 20, 50 generations, two-point crossover, tournament selection, and polynomial mutation.
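The configuration above names three operators. The sketch below shows one textbook form of each, assuming a hypothetical real-valued genome whose genes lie in `[low, high]` and are later decoded into an architecture. The binary tournament size, `eta=20`, and `pm = 1/n` are assumed common defaults, not settings reported in these slides.

```python
import random
from typing import List, Sequence, Tuple

Genome = List[float]  # hypothetical real-valued architecture encoding


def binary_tournament(rank: Sequence[int], crowding: Sequence[float],
                      rng: random.Random) -> int:
    """NSGA-II crowded-comparison tournament: lower rank wins; ties are
    broken by larger crowding distance. Returns the winner's index."""
    i, j = rng.sample(range(len(rank)), 2)
    if rank[i] != rank[j]:
        return i if rank[i] < rank[j] else j
    return i if crowding[i] >= crowding[j] else j


def two_point_crossover(p1: Genome, p2: Genome,
                        rng: random.Random) -> Tuple[Genome, Genome]:
    """Swap the genes between two random cut points (needs >= 3 genes)."""
    i, j = sorted(rng.sample(range(1, len(p1)), 2))
    c1 = p1[:i] + p2[i:j] + p1[j:]
    c2 = p2[:i] + p1[i:j] + p2[j:]
    return c1, c2


def polynomial_mutation(x: Genome, low: float, high: float, eta: float,
                        pm: float, rng: random.Random) -> Genome:
    """Deb's basic polynomial mutation: each gene mutates with probability pm,
    shifted by delta * (high - low) with delta in [-1, 1]; larger eta keeps
    delta closer to 0. Results are clipped back into [low, high]."""
    child = list(x)
    for k, v in enumerate(child):
        if rng.random() < pm:
            u = rng.random()
            if u < 0.5:
                delta = (2.0 * u) ** (1.0 / (eta + 1.0)) - 1.0
            else:
                delta = 1.0 - (2.0 * (1.0 - u)) ** (1.0 / (eta + 1.0))
            child[k] = min(max(v + delta * (high - low), low), high)
    return child


rng = random.Random(0)
a, b = two_point_crossover([0.0] * 6, [1.0] * 6, rng)
mutant = polynomial_mutation(a, 0.0, 1.0, eta=20.0, pm=1.0 / 6, rng=rng)
winner = binary_tournament([0, 1], [0.5, 2.0], rng)  # rank 0 always wins → 0
```

In a full NSGA-II loop these operators produce 20 offspring per generation, which are merged with the parents and truncated back to 20 by rank and crowding distance.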

- We run the algorithm on two objectives: maximizing validation accuracy (measured at epoch 12 or at epoch 200) and minimizing FLOPs.

- Inverted Generational Distance (IGD) serves as the metric for measuring the performance of our MOEA.
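IGD compares the front returned by the MOEA, A, against a reference Pareto front P*: IGD(A, P*) = (1/|P*|) · Σ_{z ∈ P*} min_{a ∈ A} ‖z − a‖, so lower is better. The minimal sketch below assumes the reference front is known (for example, from a benchmark's exhaustive evaluations) and that both objectives are already normalized to comparable scales; neither detail is specified in these slides, and the example points are made up.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def igd(approx_front: Sequence[Point], reference_front: Sequence[Point]) -> float:
    """Inverted Generational Distance: mean Euclidean distance from each
    reference (true Pareto) point to its nearest point in the approximation.
    Lower is better; 0 means every reference point was found."""
    if not approx_front or not reference_front:
        raise ValueError("both fronts must be non-empty")
    total = sum(min(math.dist(z, a) for a in approx_front) for z in reference_front)
    return total / len(reference_front)


# Two normalized objectives, e.g. (validation error, FLOPs), both minimized.
reference = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
found = [(0.0, 1.0), (1.0, 0.0)]
print(round(igd(found, reference), 4))  # → 0.2357
```

Missing the middle reference point costs sqrt(0.5) ≈ 0.707, averaged over three reference points; this is why IGD rewards fronts that are both close to and spread along the true front.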

- Experimentally, early stopping still achieves an acceptable IGD.

- In terms of accuracy, using validation accuracy at epoch 12 instead of epoch 200 gives similar results; on CIFAR-10, the epoch-12 setting even yields higher accuracy.

- Optimizing multiple objectives (performance and complexity) lets us find a trade-off front containing diverse architectures.

- Early stopping significantly reduces computational cost, which could make NAS applicable to more diverse datasets.

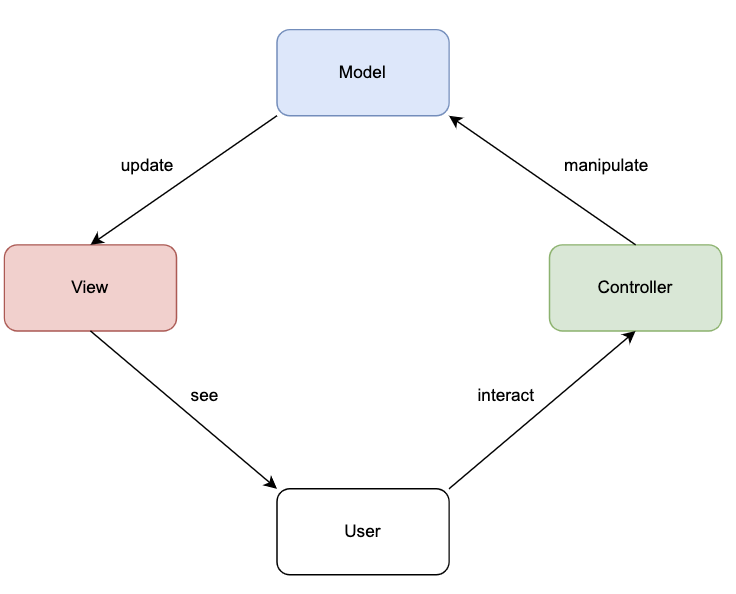

- To build a simple yet effective mobile application, we follow the Model-View-Controller (MVC) pattern:

  - The application takes as input an image containing the object to be classified and returns the predicted class together with its probability.

  - The View is built with React Native (JavaScript):

    - React Native is a JavaScript framework for writing real, natively rendering mobile applications for iOS and Android.

    - It is based on React, Facebook’s JavaScript library for building user interfaces, but instead of targeting the browser, as React originally did, it targets mobile platforms.

  - The Controller is built on the PyTorch framework to put the contribution described above into practice.

  - To connect the View and the Controller and serve as the middle layer of the final application, we use Flask, a lightweight Python-based micro web framework, chosen for its flexibility and beginner-friendliness.
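Whatever model the Controller runs, its last step is turning the network's raw scores into the class-plus-probability response the View displays. The framework-free sketch below shows that step; the CIFAR-10 label list, the `class`/`probability` field names, the hypothetical `build_prediction` helper, and the example logits are all assumptions for illustration. In the deployed app the logits would come from the PyTorch model behind a Flask route.

```python
import json
import math
from typing import Dict, List, Sequence

# Hypothetical label set (the ten CIFAR-10 classes), for illustration only.
LABELS = ["airplane", "automobile", "bird", "cat", "deer",
          "dog", "frog", "horse", "ship", "truck"]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def build_prediction(logits: Sequence[float],
                     labels: Sequence[str] = LABELS) -> Dict[str, object]:
    """Turn raw model scores into a {class, probability} payload for the View."""
    if len(logits) != len(labels):
        raise ValueError("expected one logit per label")
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda k: probs[k])
    return {"class": labels[best], "probability": round(probs[best], 4)}


logits = [0.1, 0.2, 0.0, 3.0, 0.5, 1.0, 0.0, 0.3, 0.2, 0.1]
print(json.dumps(build_prediction(logits)))
```

Keeping this step free of Flask and PyTorch makes it easy to test; a Flask route would only need to run the model on the uploaded image and return this dictionary with `flask.jsonify`.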

[1] Elsken, T., Metzen, J. H., & Hutter, F. (2019). Neural architecture search: A survey. The Journal of Machine Learning Research, 20(1), 1997-2017.

[2] Deb, K., Pratap, A., Agarwal, S., & Meyarivan, T. (2002). A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Transactions on Evolutionary Computation, 6(2), 182-197.

[3] Zoph, B., & Le, Q. V. (2016). Neural architecture search with reinforcement learning. arXiv preprint arXiv:1611.01578.

[4] Real, E., Aggarwal, A., Huang, Y., & Le, Q. V. (2019, July). Regularized evolution for image classifier architecture search. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 33, No. 01, pp. 4780-4789).

[5] Dong, X., Liu, L., Musial, K., & Gabrys, B. (2021). NATS-Bench: Benchmarking NAS algorithms for architecture topology and size. IEEE Transactions on Pattern Analysis and Machine Intelligence.

[6] Coello, C. A. C., & Sierra, M. R. (2004, April). A study of the parallelization of a coevolutionary multi-objective evolutionary algorithm. In Mexican International Conference on Artificial Intelligence (pp. 688-697). Springer, Berlin, Heidelberg.