-

Notifications

You must be signed in to change notification settings - Fork 96

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Possibility to Customize Conversion Based on Operator #451

Comments

|

It is doable by using the option mechanism. I added the option 'score_samples' for gaussian_mixture, another one can be added to tell the converter not to use these operators. 'combined_reducesum' True by default and set to False in your case. What do you think? |

|

@xadupre Wow that kind of great idea, So it will be reduced like this: Thank you very much for your help. I really appreciate it. |

|

Would you like to contribute? Otherwise, i'll try to do it next week or the week after. |

|

@xadupre I will happy to help too if I could, but I need to get used to with this sklearn-onnx. Is there basic approach that you could show to modify the converson? and here is attached onnx operator that currently supported by Baracuda: |

|

Looking at this list, I'm thinking about something else since we could end up with many options in many converters to prevent a converter from using a specific list of operators. I'll do something about it next week then. The converter code would have to be updated the same way but you would know at converting time if a converter fails using only operators in the list. |

|

@xadupre Okay, thank you for already put this in the to do list. I will try to find a way to have more knowledge about this conversion. I will tell you more if I find new information regarding this. Thank you very much, I think that more people will use onnx model because its capabilites to cross many platform. Please put any reply here if anything already changed. |

|

@xadupre I already forked the library and modified the library, It's worked. But my modified library maybe is not good for public use, since I just modified what I need. I think this will be a great feature to make this library support Barracuda Unity. Barracuda Unity also tries to add more operators in ONNX so they could support another operator too, but I think it will not happen soon. |

|

I just finished the PR. I reused part of your code but I did not create a new input, I just modified the conversion for the third one. |

|

@xadupre I forgot to say that argmax actually also not allowed in barracuda and I still not found the replacement for that using supported operator for Barracuda, so in my code I totally disabled argmax. But this is totally not a good approach because maybe others will need argmax for label while I don't need label for now. |

|

@xadupre and what Barracuda do is, they will read the whole model first, so although I didn't use the label and probabilities as long it still there, Barracuda will not load the model. |

|

I replaced argmax by something using ReduceMax. It is difficult to have a converter produce an incomplete model. I prefer to add a function which removes a specific node in a pipeline and all unnecessary nodes associated to it. |

|

Yeah, I think that's better solution for public uses. Thank you for help a lot to improve this library |

|

@xadupre How I could add blacklists to operators? now I used your PR and how to add my blacklist, it using option or something? Thank You |

|

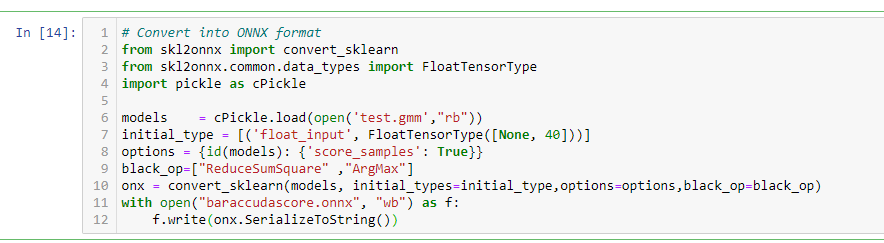

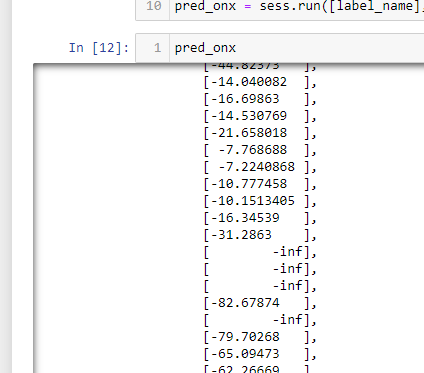

@xadupre Oh I already found how to use it, it is kind like this: and I started to realize my wrong approach in my codes because just realize that some of the result become -inf like this: |

|

I just realized it today, but if we don't use black_op, the result is not -inf |

|

I checked the model using black_op produce the same results as scikit-learn but there was no -inf in the output. Any dataset to recommend to add in a unit test? |

|

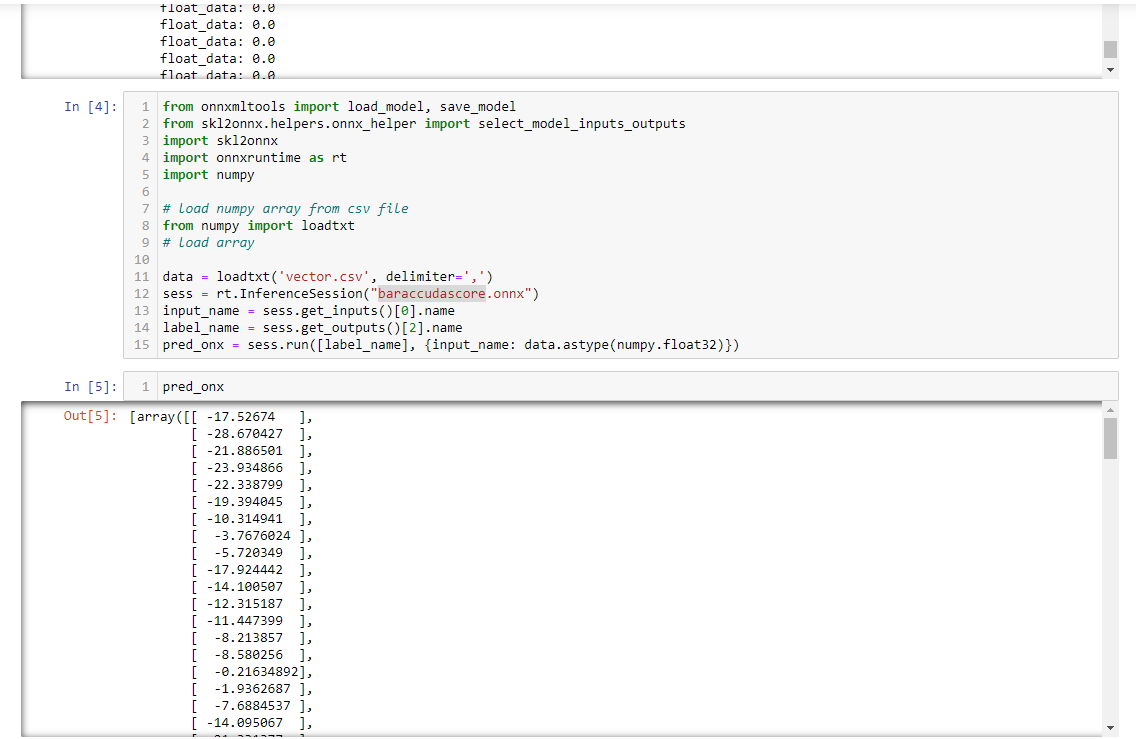

Here is the vector.csv Here is the model |

It did not happen if we don't use black_op in our model |

|

Here is the model without black_ops |

|

The logarithm fails. I need to do in onnx something similar to the implementation of ReduceLogSumExp. |

|

After trying to trace the equation, I think it will became -infinite if OnnxReduceSum(OnnxExp(weighted_log_prob)) resulting exactly 0.00000 so the OnnxLog can't make the equation and resulting infinity |

|

The problem should be fixed now. See https://github.com/xadupre/sklearn-onnx/blob/i451wb/skl2onnx/operator_converters/gaussian_mixture.py#L200. |

|

Thank you @xadupre It worked very well |

|

@xadupre Hi I just want to ask a question :

But my question why it resulting in the same score_samples without mean. It still the array of scores_sample. am I wrong to modify the code like that? I opened my model in Neutron but it already has a mean node but why the result is not from mean? I still get this kind of result: But my model already looked like this: Sorry for such a question after this issue already closed, because of this one also one of the method in GMM it called gmm.scores comes from literally just mean of the gmm.score_sample. |

|

sorry for the delay. I missed it. OnnxMean won't work that way: it computes the mean accross several inputs, not Inside the first input. That's why you get the same result. You have to use OnnxReduceMean in your case. |

|

@xadupre Thank you, it worked now for onnx runtime using OnnxReduceMean. But now Barracuda don't want to load my model. But I am already sure that Barracuda support OnnxReduceMean. This is the error in Barracuda: It is like wrong type or something, or maybe I still do wrong when convert sklearn to onnx model? This is my model now: I already report this problem to Barracuda github issues also but not sure this problem is caused by their library or I got wrong way to convert the model. Thank you. |

|

The error message seems to say that parameter axes must be specified for node ReduceMean. You should write something like OnnxReduceMean(..., axes=[1], ...) |

|

@xadupre Thank you, yeah it worked after add axes=[1] with reducedmean. By the way, you could add this features actually so in the GMM sklearn there is gmm.scores_sample and gmm.scores. gmm.scores is only literally the mean of gmm.score_samples. So maybe we could add new output node and have label,probabilities,score_samples, and score. |

|

Thank you for all the help. I really appreciate all the developers of skl2onnx library. |

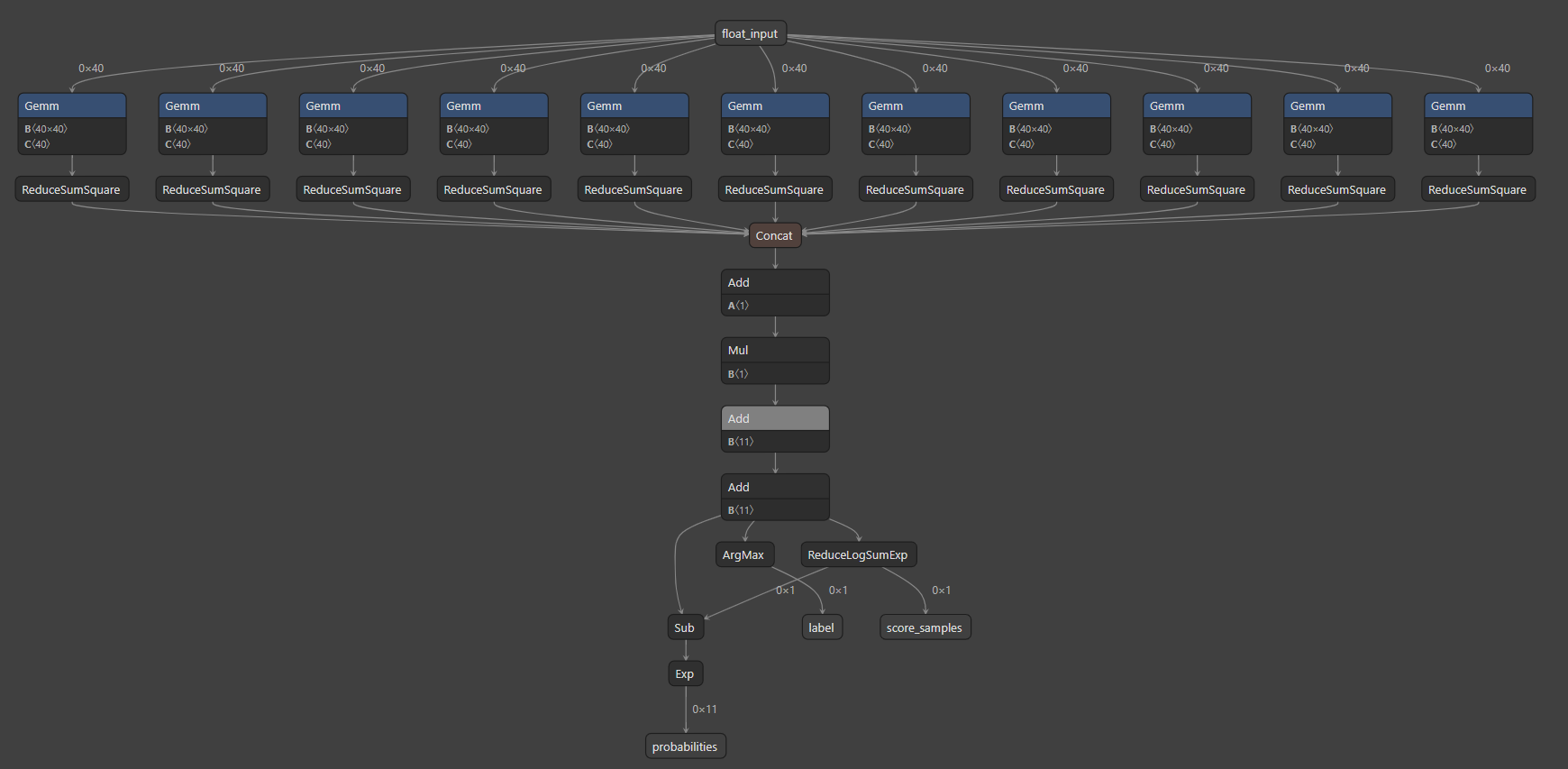

Hi, as you already add scoring method in GMM model for sklearn. The scoring method worked well when I used OnnxRuntime.

Now I got a new problem when I implemented my model to the new platform in this case Unity, where Unity could only load ONNX using Baraccuda with a limited type of operator.

Baraccuda in Unity doesn't contain operator of ReduceLogSumExp and ReduceSumSquare that appear in my model. For now, Baraccuda then suggest me to change this operator in onnx model to:

x -> y = exp(x) -> z = reduce_sum(y) -> log(z) for ReduceLogSumExp

and

x -> y = x^2 -> z = reduce_sum(y) for ReduceSumSquare

Could I customize the conversion so ReduceLogSumExp automatically just only used Exp and reduce_sum and reducesumsquare used square and reduce sum?

Thank You for your kind support.

And here I attached the visualization of my onnx model:

The text was updated successfully, but these errors were encountered: