AOT compilation #935

Comments

|

We are waiting on a rewrite to be done. See: #490 (comment) |

|

Nice! Do you have a rough estimation when it will be done? |

|

The rewrite will be done this month. There is some very basic AOT that we made for unit testing purposes right now, but efforts on a more complex one will be able to resume after that. |

|

And what will AoT compilation generate, a C/C++ API plus source/.so? |

|

Great news, is there some branch/PR we can track the progress of this? |

|

@ptillet I am very keen to have a go at using this feature, whatever state the code is currently in, even if it is only the unit test you mentioned previously (I have a time-sensitive project which could benefit from AOT functionality) |

|

We have a prototype that works with an old version of Triton. You might be able to hack it for your needs? |

For previous iterations we started with a C code that holds the kernels in source. |

|

@gaxler should there be a correlation between the triton |

You mean add grid size constraints at compile time? In general I avoided dealing with anything related to kernel launches in the draft PR; it's all just placeholders to make it run |

|

Great, thanks! I now have it working but have noticed the performance is much worse than the JIT Triton equivalent. In the profile trace I see large gaps between the Triton kernel and the preceding/successive kernels. I am aware you are not actively maintaining this, but was just wondering if this was expected or if you had any hints? I am not that familiar with PTX but understand it is JIT compiled, so I was wondering if it was not being cached correctly or something like that. |

|

Sorry that you have to bump into all those things. This is just a POC and in no way optimized. Probably the worst thing for the C code's performance is the PTX: it gets compiled to binary every time you call a kernel. This will be replaced by a cubin. Another overhead might be the dispatch for different input sizes; I'm not sure how significant it is for overall performance. Perhaps you can use several CUDA streams to work around those issues? |

|

If I know my target hardware a priori, are there any downsides/gotchas to me dumping the PTX code to a file, compiling it down to a cubin, and loading that instead? Could that potentially help with the overheads? |
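For readers following along, the offline step asked about here can be sketched roughly as below. This is only an illustration, not part of the prototype: the helper names `ptxas_command` and `compile_ptx_to_cubin`, the file names, and the default `sm_80` target are all assumptions. It relies on the `ptxas` tool from the CUDA toolkit and its `-arch` and `-o` options.

```python
import shutil
import subprocess
from pathlib import Path


def ptxas_command(ptx_path, cubin_path, arch="sm_80"):
    """Build the ptxas argv that compiles one PTX file to a cubin for a fixed GPU target.

    `arch` is an assumed example value; use the architecture of the actual target GPU.
    """
    return ["ptxas", f"-arch={arch}", "-o", str(cubin_path), str(ptx_path)]


def compile_ptx_to_cubin(ptx_path, arch="sm_80"):
    """Compile a dumped PTX file ahead of time.

    Returns the path of the cubin, or None when ptxas is not on PATH.
    (Hypothetical helper, named here for illustration only.)
    """
    ptx_path = Path(ptx_path)
    cubin_path = ptx_path.with_suffix(".cubin")
    if shutil.which("ptxas") is None:
        return None
    # check=True raises CalledProcessError if ptxas reports a compile error.
    subprocess.run(ptxas_command(ptx_path, cubin_path, arch), check=True)
    return cubin_path
```

The main gotcha with this approach is portability: a cubin is built for one specific architecture, whereas PTX can still be JIT compiled by the driver for newer GPUs.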

|

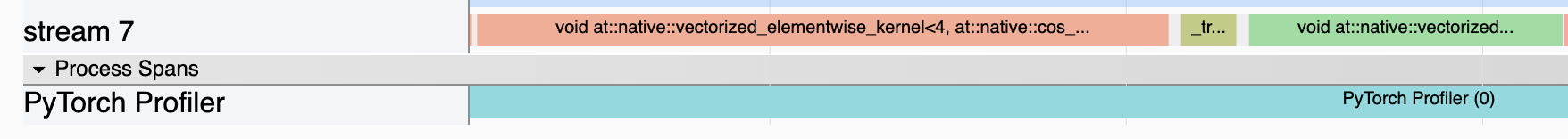

Converting to cubin has helped a lot! (In the trace, the Triton kernel is the one that sits between the orange and green.) While the overhead is now much smaller, there is still a gap in utilization before and after the AOT Triton kernel runs (perhaps some implicit synchronisation is happening). Regarding your suggestion about the dispatch time, I am guessing that could cause a delay on the host thread, but as long as the kernel is launched sufficiently before the device is ready to execute it (which we are pretty sure is the case here), that cost should be hidden? EDIT: I now think the overheads might be related to the module loading, need to confirm |
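If module loading does turn out to be the remaining cost, one common remedy is to load each kernel once and reuse the handle on later calls. The sketch below shows only that caching pattern; `KernelCache` and `fake_load` are hypothetical names, and `fake_load` stands in for whatever actually loads the cubin (in generated C code this would presumably be CUDA driver calls such as `cuModuleLoadData` followed by `cuModuleGetFunction`).

```python
class KernelCache:
    """Load each kernel at most once and hand back the cached handle afterwards."""

    def __init__(self, load_fn):
        # load_fn is the expensive step (e.g. loading a cubin and looking up the function).
        self._load_fn = load_fn
        self._loaded = {}

    def get(self, name):
        # Only the first request for a given name pays the loading cost.
        if name not in self._loaded:
            self._loaded[name] = self._load_fn(name)
        return self._loaded[name]


load_calls = []


def fake_load(name):
    """Stand-in loader that records how often it is invoked."""
    load_calls.append(name)
    return f"handle:{name}"


cache = KernelCache(fake_load)
for _ in range(3):
    handle = cache.get("matmul")  # the loader runs on the first iteration only
```

Warming the cache at start-up, before the first timed launch, would move the load out of the critical path entirely.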

|

Assuming the I think you are correct. Thanks for doing this, this will be helpful when thinking about optimizing the generated code! |

|

Got a new prototype together, maybe this can help in some way: #1056 |

|

Thanks, will check it out |

|

Do you know how close it is to being merged? (Just trying to gauge whether I should wait or work from the branch.) |

|

It's pretty close, but there are other things that have priority over merging it, so working from the branch will be better. I'm happy to help; it will be great to get user feedback |

|

@gaxler what is the relationship between this branch and |

Hi, I was just wondering if there had been any more thoughts on supporting AOT kernel compilation to allow execution outside of Python? Referencing #175
