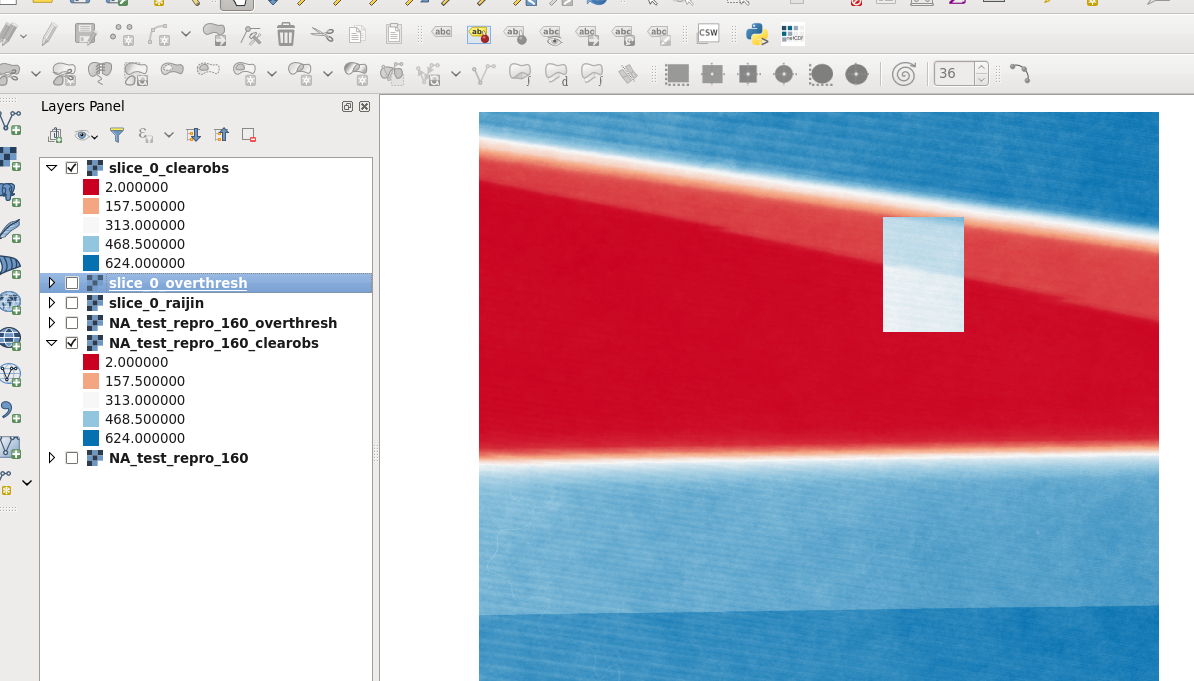

After some analysis, an error was identified in `Datacube.load` when the `dask_chunks` parameter is used: the custom fuser function (`fuse_func`) and `skip_broken_datasets` are not passed through in that case.

Without `fuse_func`, the default fuser is used, which gives wrong results for PQ (pixel quality) masks. This in turn leaves no data in the south part of the tile once masking is applied.

Bottom image should look like top-right, instead it's an exact copy of top-left.
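
To make the effect of the missing `fuse_func` concrete, here is a minimal NumPy-only sketch. It does not call datacube; the bit position, the nodata value, the sample pixel values and all function names are assumptions made for illustration. It compares a "first non-nodata value wins" fuser with a PQ-aware fuser on two overlapping scenes from the same day.

```python
import numpy as np

# Assumptions for illustration only: bit 8 flags a valid (contiguous)
# observation in the PQ band, and 0 is the nodata value.
VALID = 1 << 8
NODATA = 0


def default_style_fuser(dest, src):
    """Fill dest from src only where dest is still nodata (first value wins)."""
    np.copyto(dest, src, where=(dest == NODATA))


def pq_style_fuser(dest, src):
    """Prefer pixels with the valid bit set; AND the flags where both are valid."""
    dest_valid = (dest & VALID) != 0
    src_valid = (src & VALID) != 0
    merged = np.where(dest_valid & src_valid, dest & src,
                      np.where(dest_valid, dest, src))
    dest[...] = merged


def fuse_all(fuser, sources):
    """Start from an all-nodata array and fuse each source in turn."""
    dest = np.full_like(sources[0], NODATA)
    for src in sources:
        fuser(dest, src)
    return dest


def count_valid(pq):
    """Number of pixels that would survive a 'valid bit set' mask."""
    return int(np.count_nonzero(pq & VALID))


# Two hypothetical scenes covering the same four pixels. The northern scene
# carries non-zero flags without the valid bit over the southern pixels.
north = np.array([511, 511, 15, 15], dtype=np.uint16)
south = np.array([0, 15, 511, 447], dtype=np.uint16)

default_result = fuse_all(default_style_fuser, [north, south])
pq_result = fuse_all(pq_style_fuser, [north, south])

print(count_valid(default_result), count_valid(pq_result))
```

With the default-style fuser the southern pixels keep the non-zero but invalid flags from the first scene, so they are masked out afterwards. The PQ-aware fuser replaces them with the valid observation from the second scene. A function with this `(dest, src)` signature is the kind of callable that would be passed as `fuse_func`, which is why losing it on the `dask_chunks` path changes the result.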

Expected behaviour

I expect a similar number of scenes to be retrieved over a given area whether the `dc.load` call uses a big polygon or a small polygon.

Actual behaviour

If I plot the number of scenes retrieved, the big polygon shows a triangle-shaped area with only about 2 observations, whereas the small polygon returns 300-600 scenes for that same area (over a 30-year epoch, using ls5, ls7 and ls8).

Steps to reproduce the behaviour

https://github.com/rjdunn/GWnotebooks/blob/master/Dask_wetness_anomaly_debugger_040817-forDC.ipynb

The notebook above should run with either of the queries below. You may wish to change the paths for the output files.

queries used:

Big polygon

Little polygon

Environment information

Which `datacube --version` are you using? 1.3.2

The behaviour is the same on raijin and on VDI.