See our end-to-end implementation in https://github.com/JiawangBian/sc_depth_pl

This page provides the code, models, and datasets for the paper:

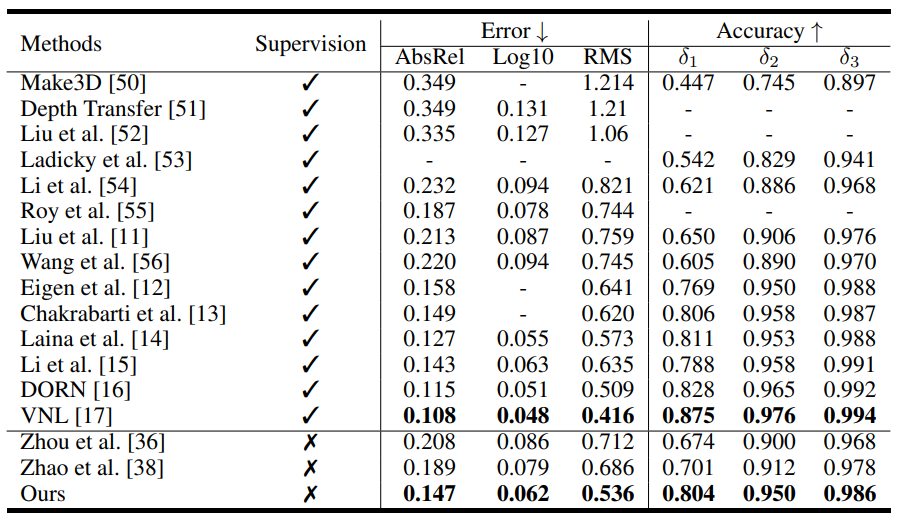

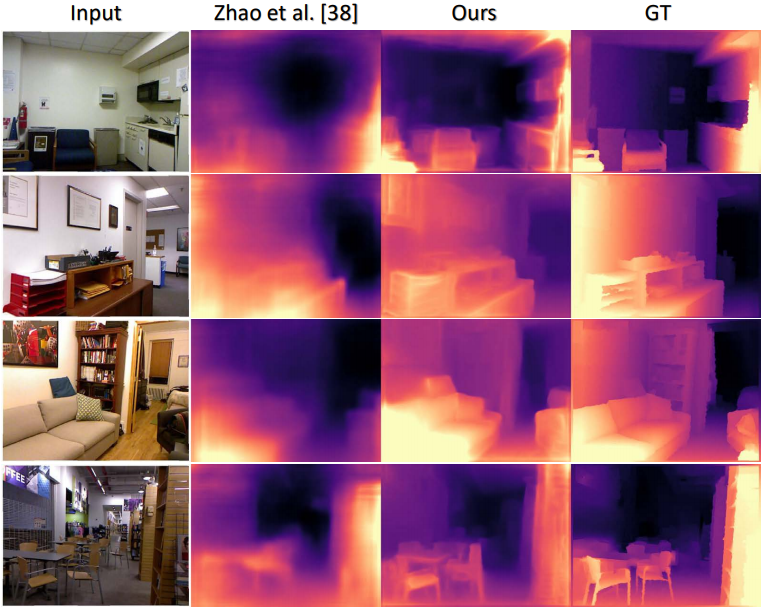

Unsupervised Depth Learning in Challenging Indoor Video: Weak Rectification to Rescue

Jia-Wang Bian, Huangying Zhan, Naiyan Wang, Tat-Jun Chin, Chunhua Shen, Ian Reid

```bibtex
@article{bian2021ijcv,
  title={Unsupervised Scale-consistent Depth Learning from Video},
  author={Bian, Jia-Wang and Zhan, Huangying and Wang, Naiyan and Li, Zhichao and Zhang, Le and Shen, Chunhua and Cheng, Ming-Ming and Reid, Ian},
  journal={International Journal of Computer Vision (IJCV)},
  year={2021}
}

@article{bian2020depth,
  title={Unsupervised Depth Learning in Challenging Indoor Video: Weak Rectification to Rescue},
  author={Bian, Jia-Wang and Zhan, Huangying and Wang, Naiyan and Chin, Tat-Jun and Shen, Chunhua and Reid, Ian},
  journal={arXiv preprint arXiv:2006.02708},
  year={2020}
}
```

- We analyze the effects of complicated camera motions on unsupervised depth learning.
- We release a rectified NYUv2 dataset for unsupervised learning of single-view depth CNNs.

Download our pre-processed datasets from the following links:

- rectified_nyu (for training)
- nyu_test (for evaluation)

- Download SC-SfMLearner-Release:

```bash
git clone https://github.com/JiawangBian/SC-SfMLearner-Release.git
```

- Run `scripts/train_nyu.sh`:

```bash
# path to your local copy of the rectified_nyu training set
TRAIN_SET=/media/bjw/Disk/Dataset/rectified_nyu/

# train a ResNet-18 DispNet on rectified NYUv2
python train.py $TRAIN_SET \
    --folder-type pair \
    --resnet-layers 18 \
    --num-scales 1 \
    -b16 -s0.1 -c0.5 --epoch-size 0 --epochs 50 \
    --with-ssim 1 \
    --with-mask 1 \
    --with-auto-mask 1 \
    --with-pretrain 1 \
    --log-output --with-gt \
    --dataset nyu \
    --name r18_rectified_nyu
```
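The `-s0.1 -c0.5` flags above appear to set the smoothness and geometry-consistency loss weights (exposed as `--smooth-loss-weight` and `--geometry-consistency-weight` in SC-SfMLearner's `train.py`; check its argument parser before relying on this). The sketch below illustrates, under simplifying assumptions, how those weights could combine with a photometric term, and how SC-SfMLearner's normalized depth difference yields both a geometry-consistency loss and a per-pixel weight mask (assumed here to be what `--with-mask` enables). All function names are hypothetical, depth maps are flat Python lists, and the photometric weight is assumed to be 1.0.

```python
"""Hypothetical sketch of combining SC-SfMLearner-style loss terms.

Assumptions (not taken from the released code): depth maps are flat lists of
positive floats, per-pixel photometric errors are precomputed, and the
photometric weight is 1.0. The default smoothness/geometry weights mirror the
-s0.1 and -c0.5 flags in scripts/train_nyu.sh.
"""
from typing import List, Tuple


def depth_inconsistency(warped: List[float], interpolated: List[float]) -> List[float]:
    """Per-pixel normalized depth difference |Da - Db| / (Da + Db), in [0, 1)."""
    return [abs(a - b) / (a + b) for a, b in zip(warped, interpolated)]


def geometry_consistency(warped: List[float],
                         interpolated: List[float]) -> Tuple[float, List[float]]:
    """Return the mean inconsistency (the loss) and a weight mask M = 1 - diff."""
    diff = depth_inconsistency(warped, interpolated)
    loss = sum(diff) / len(diff)
    mask = [1.0 - d for d in diff]
    return loss, mask


def masked_photometric(errors: List[float], mask: List[float]) -> float:
    """Average per-pixel photometric error, down-weighting inconsistent pixels."""
    return sum(e * m for e, m in zip(errors, mask)) / len(errors)


def total_loss(photo: float, smooth: float, geo: float,
               w_photo: float = 1.0, w_smooth: float = 0.1,
               w_geo: float = 0.5) -> float:
    """Weighted sum of the photometric, smoothness and geometry terms."""
    return w_photo * photo + w_smooth * smooth + w_geo * geo
```

For example, `geometry_consistency([2.0, 4.0], [2.0, 2.0])` gives a loss of 1/6 and a mask of `[1.0, 2/3]`: the second pixel, where the two depth estimates disagree, contributes less to the masked photometric term.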

- Download pretrained models.

- Run `scripts/test_nyu.sh`:

```bash
# pretrained DispNet checkpoint, test data, and output locations
DISPNET=checkpoints/rectified_nyu_r18/dispnet_model_best.pth.tar
DATA_ROOT=/media/bjw/Disk/Dataset/nyu_test
RESULTS_DIR=results/nyu_self/

# run inference on 256x320 images
python test_disp.py --resnet-layers 18 --img-height 256 --img-width 320 \
    --pretrained-dispnet $DISPNET --dataset-dir $DATA_ROOT/color \
    --output-dir $RESULTS_DIR

# evaluate the predictions against ground-truth depth
python eval_depth.py \
    --dataset nyu \
    --pred_depth=$RESULTS_DIR/predictions.npy \
    --gt_depth=$DATA_ROOT/depth.npy
```
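`eval_depth.py` compares the saved predictions with `depth.npy`. As a rough illustration of what such an evaluation usually involves for monocular (scale-ambiguous) predictions, the sketch below applies per-image median scaling and computes metrics commonly reported on NYUv2 (AbsRel, RMSE, log10, and the accuracies δ < 1.25^k). It is an assumption-labelled sketch, not the script's code: the function name `evaluate_depth` is hypothetical, inputs are flat lists of depths for a single image, and the real script may crop, cap depth ranges, or aggregate differently.

```python
"""Hypothetical sketch of median-scaled monocular depth metrics.

Assumptions: pred and gt are flat lists of depths for one image; pixels with
gt <= 0 (or non-positive predictions) are treated as invalid. eval_depth.py
may crop, cap depth, or aggregate differently.
"""
import math
from statistics import median
from typing import Dict, List


def evaluate_depth(pred: List[float], gt: List[float]) -> Dict[str, float]:
    # Keep only pixels with valid ground truth and a positive prediction.
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0 and p > 0]
    if not pairs:
        raise ValueError("no valid pixels")
    p_vals = [p for p, _ in pairs]
    g_vals = [g for _, g in pairs]

    # Monocular depth is only known up to scale: align the medians.
    scale = median(g_vals) / median(p_vals)
    p_vals = [p * scale for p in p_vals]
    n = len(p_vals)

    abs_rel = sum(abs(p - g) / g for p, g in zip(p_vals, g_vals)) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in zip(p_vals, g_vals)) / n)
    log10 = sum(abs(math.log10(p) - math.log10(g))
                for p, g in zip(p_vals, g_vals)) / n

    # Threshold accuracies: fraction of pixels with max(p/g, g/p) < 1.25**k.
    ratios = [max(p / g, g / p) for p, g in zip(p_vals, g_vals)]
    metrics = {"abs_rel": abs_rel, "rmse": rmse, "log10": log10}
    for k in (1, 2, 3):
        metrics[f"a{k}"] = sum(r < 1.25 ** k for r in ratios) / n
    return metrics
```

Per-image results would then typically be averaged over the test set. For instance, `evaluate_depth([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])` returns zero errors and `a1 == 1.0`, because median scaling absorbs the factor-of-two scale difference.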

Related projects:

- SC-SfMLearner (NeurIPS 2019, scale-consistent depth learning framework)
- Depth-VO-Feat (CVPR 2018, trained on stereo videos for depth and visual odometry)
- DF-VO (ICRA 2020, uses scale-consistent depth with optical flow for more accurate visual odometry)