
MySQL Projections skipping events (SingleStreamStrategy) #189

Comments

|

that's a serious problem ... We had a similar issue with our mongoDB v6 event-store. Same symptoms:

Some background: We have an event-sourced service that processes SAP changesets (IDOC XML files). A Java middleware sits between our service and the SAP ERP. When there is a mass update in SAP (during a data migration, for example), the Java middleware takes all updates and forwards them to us at once. This results in thousands of events within a couple of seconds. Under that load we experienced the same issue.

Back in the day we used MongoDB 3.6: no auto-increment fields and no transactions available. Our custom-made projections used the aggregate version + timestamp to remember stream positions, until we saw that events were being skipped when the write model was on fire. Replays worked just fine, which made the problem hard to debug. We did exactly the same to investigate the issue: log the processing of events in the projections and compare the log with the event streams. We identified the problem: events with higher versions became visible to the projection slightly faster.

To be honest, we (me and @sandrokeil) also discussed whether this could happen with prooph v7 projections, too. But since they rely on transactions and a stream sequence, we thought the problem was only related to our custom implementation for MongoDB .... Looks like we were wrong :(

How did we solve the problem? One option was to let projections detect "gaps" and, if a gap is detected, wait a moment and reread the stream. That's not a 100% guarantee but could work if the sleep time is high enough. Finally we went for a 100% safe solution. It looks like this:

First we thought that this would produce a lot of load on our MongoDB cluster, but Mongo can handle this just fine. The only real bottleneck for us is when we want to deploy a new projection. In this case we need to add a new flag to each existing event in the stream (several millions) and Mongo needs to update the index. But we don't deploy new projections that often ....

Our final solution can't be implemented in prooph v7 projections, but maybe the gap detection is an acceptable workaround. It could be activated with an additional projection option. I'm 99% sure that your working theory is correct @fritz-gerneth

@prolic What do you think? Not sure if this is a MySql issue or could happen with Postgres, too. I don't want to bet against it .... |

|

@fritz-gerneth Axon supports any projection, as long as you can write the event handler for it. With the default |

|

I read a little about auto increments in MySQL. The problem is that the auto-increment value is assigned on write, not on commit. For example: Select * from ... You see a gap. Commit terminal one. Select * from ... Gap is closed. Probably the same is true for Postgres as well. So in case there is a gap, we need to detect it, back off and read again. Really painful, but I don't see a better solution. |
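To make the mechanism described above concrete, here is a toy simulation. It is not MySQL and does not use any database driver; `ToyTable` and all its methods are made up for illustration. The only behaviour it models is the one stated in the comment: ids are handed out at insert time, while rows become visible to readers at commit time.

```python
# Toy simulation (not MySQL): ids are allocated at INSERT time, but a row
# only becomes visible to readers once its transaction commits.
import itertools


class ToyTable:
    def __init__(self):
        self._next_id = itertools.count(1)
        self.committed = {}  # id -> payload, i.e. what a reader can see

    def insert(self, pending, payload):
        """Allocate the id immediately; keep the row private to the writer."""
        row_id = next(self._next_id)
        pending[row_id] = payload
        return row_id

    def commit(self, pending):
        """Publish all rows of one transaction at once."""
        self.committed.update(pending)
        pending.clear()

    def read_after(self, position):
        """What a projection does: fetch visible ids greater than position."""
        return sorted(i for i in self.committed if i > position)


table = ToyTable()
tx_a, tx_b = {}, {}
table.insert(tx_a, "event from writer A")  # allocated id 1
table.insert(tx_b, "event from writer B")  # allocated id 2

table.commit(tx_b)                         # B commits first
first_read = table.read_after(0)           # the reader sees a gap: only id 2
position = max(first_read)                 # the projection remembers position 2

table.commit(tx_a)                         # id 1 becomes visible only now
second_read = table.read_after(position)   # nothing new: id 1 is never delivered
print(first_read, second_read)
```

A later full replay starting from position 0 would return both ids in order, which matches the observation in this thread that replays are fine while live projections skip events.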

|

@codeliner your approach with using metadata for this should also work, but I prefer to keep the event immutable. |

|

Appreciate all your input! :)

@mrook: from what I understand this solves a similar but different problem: making sure all selected events arrive in sequence (which they do for projections here). The issue here is more about making sure we don't skip events when querying the event bus. I suppose this guarantee is implemented quite differently in Axon. But then my knowledge about Axon is old and very basic :)

@prolic: Do you have any resource detailing this behavior (for personal education :))? I managed to reproduce this scenario in a slightly different setup as well: an AR has to append 5 events in one call, where the first event has a significantly larger payload than the others (e.g. a large complex JSON object vs two small string keys). All events were published in the same

Modifying events is something I neither want to nor can do. We are resetting / changing our projections quite rapidly, often for testing purposes only, and many streams are used by many projections concurrently.

The default one doing exactly the same as now. We then could add a second strategy supporting gap detection and some (configurable) delay to retry. If the retry fails we assume a gap. That way I'd be backwards compatible and you can configure performance vs. the safety that you won't miss an event. Am I thinking too naively here or missing any other vital parts or better approaches?

A more definitive approach might be to look at aggregate versions. But this has its own downsides as well:

|

|

@fritz-gerneth researching for this problem I found this article https://www.matthewbilyeu.com/blog/auto-incremented-values-can-appear-out-of-order-if-inserted-in-transactions/ - I hope it helps. About adding an eventLoadStrategy: I think we should not do that, as it further complicates the usage of the event-store (we have already lots of configurable options, persistence strategies, ...). It's better to have a useful default value for sleep timeout and give a hint in the docs on why it was added and what impact you have changing the default. Another possible solution is a complete different way of inserting rows, namely without any autoincrement. But I didn't work this out yet, so I don't know if this is possible. |

|

@fritz-gerneth I see, and yes it's implemented differently. Projections (event handlers) in Axon typically do not directly query the event bus (they can, but there's really no point), but instead are fed events through a processor, which either follows the live stream or replays from a certain position. |

I would really appreciate that. We only need the autoincrement value to sort the events and read them forward and backward. If we use a stream/watch mechanism like in MongoDB or Postgres, events occur in the order they are saved to the DB. As we can see, the autoincrement value does not really reflect the order of events. I have discussed this a little with @codeliner and we have no real solution for the sorting problem. Microseconds or nanoseconds are not enough. The question is: how can we preserve the order of events when they are saved at the same time, and how can that order be sorted ascending and descending? |

|

EventStore (http://eventstore.org/) also has incrementing event positions, starting from 0. So all its events are positioned as 0, 1, 2, 3, 4, ... and there are no gaps, never ever. I looked into this on how they solved this on their side (although they don't use SQL) and their solution (I don't know if that's the only reason why they do this, and I suppose that it's not the main reason) is to use a single writer thread only. All write operations will get sent to that writer queue so there is no possibility of any race conditions. As we don't have a server implementation here but merely a thin layer on top of a PDO connection, the closest we can do to get to the same behaviour is creating a lock for each write, so only one process can ever write and all others have to wait until it's finished. This will definitely reduce the write performance, but maybe that's even acceptable and definitely worth a try for the prooph/event-store v8 sql implementation. To reduce the time the lock is acquired, maybe the best way to do this is with a stored procedure, but this would be a BC break in v7. |

|

@mrook : I think Axon's processors are then pretty much the equivalent to projections here then (the TrackingEventProcessor to be precise). Think the Implementation of a TrackingToken that uses the global insertion sequence number of the event to determine tracking order and additionally stores a set of possible gaps that have been detected while tracking the event store.

Digging through the source code on GitHub my understanding on how Axon does this (with this token) is a more aggressive at-least-once approach:

Doing something similar on our end certainly would work as well, but would require a bit of a change in the event store API to allow multiple range queries. As a side note: I'm dismissing my former approach. Giving it a second thought made it clear that it does not reliably solve the issue; it only solves it for gaps at the start of my iteration but not for gaps in between. |
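The idea of a stream position that also remembers possible gaps can be sketched as follows. This is only an illustration of the description given in this thread, not Axon's actual implementation; the class name `GapAwarePosition` and its methods are hypothetical.

```python
class GapAwarePosition:
    """Highest event number seen so far, plus the numbers skipped on the way."""

    def __init__(self, index=0, gaps=()):
        self.index = index
        self.gaps = set(gaps)

    def advance(self, seq):
        """Record that event number seq was handled.

        Returns False if seq was already covered (a duplicate delivery).
        """
        if seq in self.gaps:
            self.gaps.discard(seq)   # a late event filled a known gap
            return True
        if seq <= self.index:
            return False             # already handled earlier
        # Everything between the old index and seq is a potential gap.
        self.gaps.update(range(self.index + 1, seq))
        self.index = seq
        return True

    def pending(self):
        """Numbers that must be re-queried in addition to 'everything > index'."""
        return sorted(self.gaps)


position = GapAwarePosition()
for seq in (1, 2, 5):
    position.advance(seq)
gaps_after_first_pass = position.pending()   # numbers 3 and 4 are still missing

position.advance(3)                          # event 3 shows up late
duplicate = position.advance(2)              # event 2 is delivered a second time
print(position.index, gaps_after_first_pass, position.pending(), duplicate)
```

Because event numbers can also be missing for good (the thread mentions `ConcurrencyExceptions` as one cause), a real implementation would additionally need a rule for dropping gaps after some time; that part is left out here.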

The performance impact of doing so would greatly depend on the stream strategy. Per aggregate there should not be any major impact, since aggregates already kind of enforce this through event versions. But for the others this would be a table lock affecting writes to either all aggregates of the same type or all aggregates on the system. Besides this I'd follow up on the gap-tracking stream positions. This might require a bit of work to actually track & store the gaps alongside the current position, but would mitigate this issue too (the longer we allow gaps to be tracked, the safer it becomes). There are two major challenges I'd see for this though:

(To be clear: I'd be willing to implement both changes, for me that's merely a matter on if this is fixed in here or with a custom projection in our code-base) |

|

@fritz-gerneth if you have high write throughput right now, maybe you can test using "GET_LOCK" for a few hours in production and see if the impact is really that huge? Maybe it's still acceptable. The main problem that I see is the additional roundtrip, which leads to higher locking times. That's why I think having stored procedures in place might be a better solution than sending multiple queries from PHP. |

|

Before testing this in production I wanted to see how this behaves and if it can actually solve the problem. I created a simple set of scripts to illustrate & test this issue:

The generator can be invoked like this: Arguments are:

Be sure to invoke the consumer before starting the generators though. Interestingly enough, this issue seems to decline as the number of parallel producers increases (no locking): So my next test was wrapping the insert in a Now those values are to be taken with a (big) grain of salt as the numbers have a great variance, but they give at least some indication. The good news is: this does take care of any gaps in the consumer. Two things to note:

Pushing such a number of events on a single table concurrently is certainly not daily operations business, so the performance impact in any daily situation is probably (much) less, if measurable at all. Summary: using |

|

As I thought already. Can you try the same with a stored procedure? So a) getlock, b) start transaction, c) write data, d) commit transaction and e) release lock is only one db roundtrip? |
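The a) to e) sequence above can be written down as a client-side sketch. Note that this sends several statements from the client, so it shows the statement order only and is not the single-roundtrip stored procedure being asked for. `GET_LOCK` and `RELEASE_LOCK` are MySQL functions; the table `event_stream`, its columns, the lock name and the `%s` placeholder style are assumptions, and `RecordingConnection` is a stand-in so the sketch runs without a database.

```python
def append_with_lock(conn, lock_name, rows, timeout=5):
    """Insert rows while holding a named MySQL lock (DB-API style connection)."""
    cur = conn.cursor()
    cur.execute("SELECT GET_LOCK(%s, %s)", (lock_name, timeout))   # a) get lock
    if cur.fetchone()[0] != 1:  # GET_LOCK returns 1 on success, 0 on timeout
        raise TimeoutError("could not acquire lock " + lock_name)
    try:
        cur.execute("START TRANSACTION")                           # b) begin
        cur.executemany(                                           # c) write data
            "INSERT INTO event_stream (event_name, payload) VALUES (%s, %s)",
            rows,
        )
        conn.commit()                                              # d) commit
    except Exception:
        conn.rollback()
        raise
    finally:
        cur.execute("SELECT RELEASE_LOCK(%s)", (lock_name,))       # e) release
        cur.fetchone()


class RecordingCursor:
    """Stand-in cursor that records SQL instead of talking to a server."""

    def __init__(self, log):
        self.log = log

    def execute(self, sql, params=None):
        self.log.append(sql)

    def executemany(self, sql, seq_of_params):
        self.log.append(sql)

    def fetchone(self):
        return (1,)  # pretend the lock call succeeded


class RecordingConnection:
    def __init__(self):
        self.log = []

    def cursor(self):
        return RecordingCursor(self.log)

    def commit(self):
        self.log.append("COMMIT")

    def rollback(self):
        self.log.append("ROLLBACK")


conn = RecordingConnection()
append_with_lock(conn, "event_stream_lock", [("IssueCreated", "{}")])
print("\n".join(conn.log))
```

The lock is released only after the commit, so a second writer cannot be handed an auto-increment value while an earlier transaction is still uncommitted.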

|

Since the number of rows we want to insert in one transaction varies, I'm not entirely sure what would be the best way to do this with stored procedures. The only solutions I can find rely on passing in the data as a string and doing some string splitting afterwards, which is a solution I would hardly even want to start with. But then I'm no expert with stored procedures and maybe there's a better solution? |

|

Using this stored procedure I did re-run the test-sequence again:

|

|

Thanks @fritz-gerneth.

…On Sun, Feb 3, 2019, 20:07 Fritz Gerneth ***@***.*** wrote:

Apparently it was too late yesterday, figured I did run the wrong scripts

to test the stored procedure :) Will re-run these on our staging servers

once we have the permissions set there to do so.

Meanwhile I ran the two other script versions there already (no locking,

locking) on a remote database server. Due to timings only for the two and

10 consumers:

Producers | Events Per Producer | Events/sec / producer | Events/sec total | Lock

---------------------------------------------------------------------------------

2 | 50005 | 224 | 448 | remote, no lock

2 | 50005 | 95 | 190 | remote, lock

10 | 50005 | 217 | 2170 | remote, no lock

10 | 50005 | 42 | 420 | remote, lock

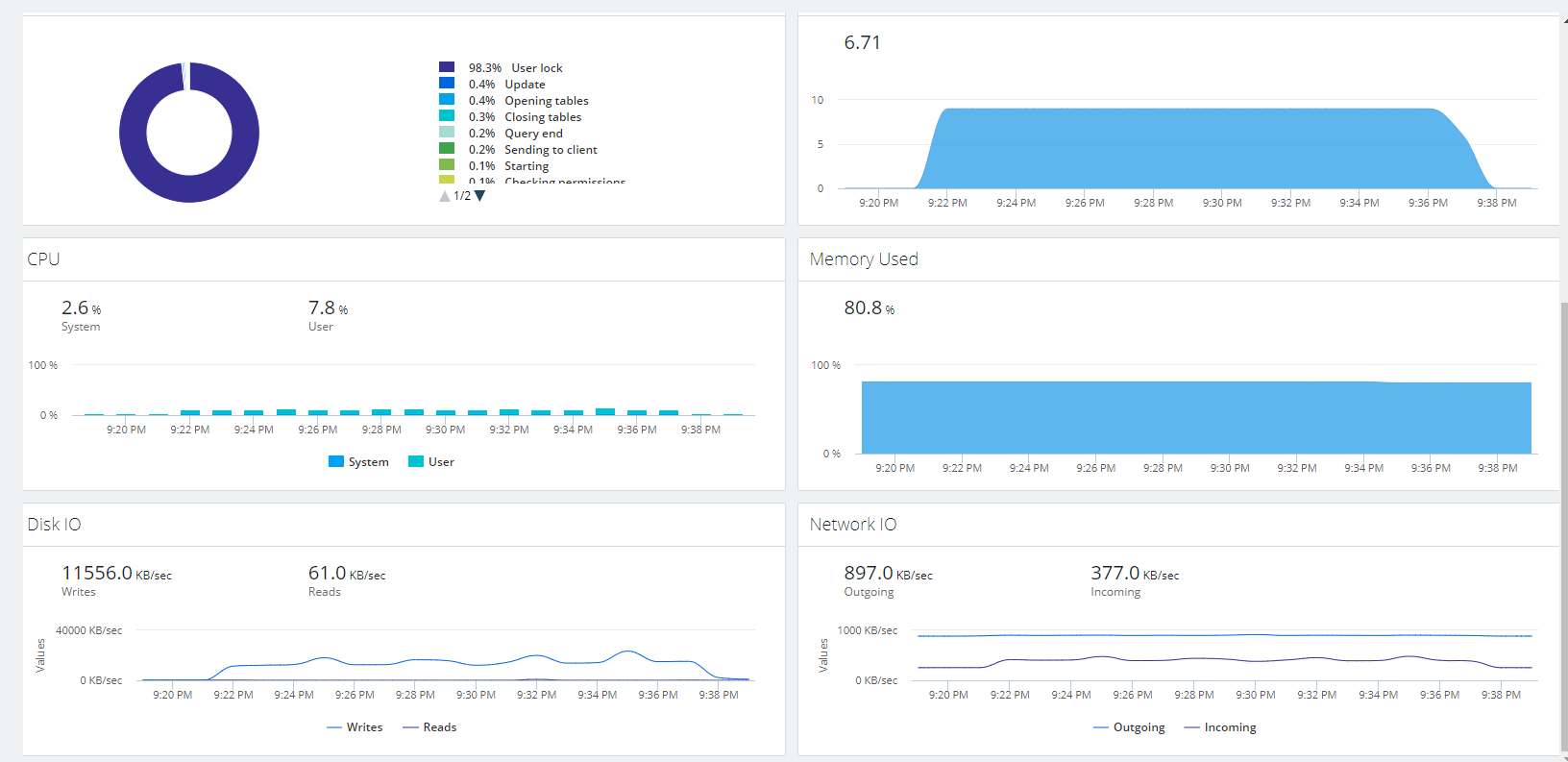

Looking at where query-time is spent most for no-locking :

[image: 10concurrent-no-lock]

<https://user-images.githubusercontent.com/1294731/52176525-524ec000-27b4-11e9-93e9-201952758aa0.PNG>

And with locking:

[image: 10concurrent-lock]

<https://user-images.githubusercontent.com/1294731/52176533-61357280-27b4-11e9-9970-8e3b2b88c71c.PNG>

Seeing most time is spent waiting for the lock, I would not expect much

difference with using a stored procedure.


|

|

At last, here's the data for using the stored procedure: |

|

@fritz-gerneth @codeliner My thoughts based on the investigation by @fritz-gerneth (Thanks again for this, awesome job!):

That said, I would go with the

Note: In PostgreSQL there is no |

|

If there are no objections, I'll write up a PR tomorrow to:

I'd skip Postgres for now, mostly because I have little experience there and we'd have to come up with a lock-name strategy. I think the issue is documented here and in the readme. Should someone be able to reproduce this issue on Postgres, the solution is here :) |

|

@fritz-gerneth sounds good to me, thank you very much! I'll try to look into postgres this weekend, I hope I find the time. |

How does this behave with respect to multiple concurrent sessions though?

gaps are to be expected (e.g. on concurrency-exceptions). the issue (hopefully?) to be solved is that events are read out of order. I have outlined the approach for actual gap detection or tracking on the consumer side.

what's your setup on this? what exactly are you seeing here? |

|

It seems that, even though isolation level appears to block other transactions in a multi-terminal setup, it doesn't affect the view of the data from a 3rd party, i.e. the event-consumer.php script. I still get gaps. I also get gaps with SELECT GET_LOCK. The only thing that stops pkey gaps is doing I'm testing with php 7.1 on mariadb 10.3 (fork of mysql 5.5.5) and mysql 5.7. I tried setting SERIALIZABLE in the my.cnf and also flushing innodb logs after every commit. Both had no effect. It seems that, when there is a gap found by event-consumer.php, that gap is not visible to either event-generator.php script; within their own transactions, they see a consistent, non-gap view of the data. |

Yes. that's the whole issue here. Even when looking at the database later on the gaps are not there yet.

I see you're using my test scripts too. Those are not compatible for MariaDB out of the box. I'm using a timeout of |

|

well, if the problem is in the consumer the producers don't need any changes. With the addition of serializable isolation level we can be certain that TXA commits and finishes before TXB, but the consumer only sees TXB in the results. Even changing settings like Adding Can anyone verify that |

Why should this ensure the correct sequential order of reads when reading? This only prevents issues with concurrent writes on the same row. But that's not happening here anyway.

which problem are you trying to solve? out of order reading of events (and thus skipping) or event number gaps? those are different things and require different solutions. this issue here only deals with the first one. do you have any sample data / output of what you are seeing on your side? why do you think it is the consumer that is having troubles? are you still seeing the error with the |

|

Btw this is still an issue. GET_LOCK is not doing anything to this, projections from pg event-store are still skipping from 5-10% of events, which is huge. I tried transaction isolation too but results are the same. Anybody with some solution to this? |

|

@Mattin Do you have some more details about your setup? 5-10% of events is really huge. We did not see such massive skips before. For example how many events per second do you have? Did you try to run the test scripts to verify your issue? #189 (comment) |

|

Providing an update on this from my side: I have seen events being skipped very rarely as well (but still orders of magnitude less likely than before). Unfortunately I have not yet had the time to look into this in more detail and only have a working theory: I only observed this in situations when I persist multiple events on the same AR. In that case a projection sometimes skips one of these for yet unknown reasons. Event numbers are all in sequence, so this should be fine. Replaying the projection fixes the issue. So I assume this is some nasty in-transition concurrency stuff in MySQL. I'm not a MySQL expert, and I don't know one who's into this on this level either. The current plan of mitigation would be to add some sliding-window event number gap-detection mechanism to the projections. The alternative might be some bug in the projections fetching incorrect stream positions in some situations. I'll have to add more logging to the projections to really look into this further. |
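A minimal sketch of the detect, back off and reread idea discussed throughout this thread, assuming the projection can ask for all visible event numbers after its current position. The function names, the `fetch` callback and the default values are hypothetical; this is not the prooph implementation.

```python
import time


def has_gap(position, numbers):
    """True if numbers does not continue position without holes."""
    expected = position + 1
    for number in numbers:
        if number != expected:
            return True
        expected += 1
    return False


def read_with_gap_detection(fetch, position, retries=3, delay=0.05,
                            sleep=time.sleep):
    """Reread the stream while a gap is visible, up to retries times.

    fetch(position) must return the sorted event numbers > position that
    are currently visible. After the last retry the gap is assumed to be
    real (for example a rolled-back insert) and the batch is returned as is.
    """
    numbers = fetch(position)
    for _ in range(retries):
        if not has_gap(position, numbers):
            break
        sleep(delay)              # back off and give pending commits time
        numbers = fetch(position)
    return numbers


# Dry run: event 2 becomes visible only on the second read.
snapshots = iter([[1, 3], [1, 2, 3]])
healed = read_with_gap_detection(lambda pos: next(snapshots), 0,
                                 sleep=lambda seconds: None)

# Dry run: the gap never closes, so the reader gives up after two retries.
calls = []


def always_gapped(pos):
    calls.append(pos)
    return [1, 3]


permanent = read_with_gap_detection(always_gapped, 0, retries=2,
                                    sleep=lambda seconds: None)
print(healed, permanent, len(calls))
```

As noted earlier in the thread, this is not a 100% guarantee: it trades read latency (`retries * delay` in the worst case) for a lower chance of skipping an event.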

|

Not sure, but maybe MySQL transaction levels help??

https://dev.mysql.com/doc/refman/5.7/en/innodb-transaction-isolation-levels.html#isolevel_read-committed


|

|

Can anyone try transaction isolation level |

|

I tried all these transaction levels: The only thing that fixes it for me with the supplied demo scripts (event-gen-bulk.sh etc) is adding Transactions on the writer don't do anything because it is only ensuring consistency for that one write operation which is only appending rows. The reader seems to be encountering a block of 20 rows, but 10 of them from AR1 are still waiting to finish writing the key index, while 10 rows from AR2 are flushed first (because MVCC has to pick one thing to finish first when getting concurrent writes). The rows are there (this is why replaying the projection works, the pkeys are all in order), but are not available to selects that allow dirty reads. (or phantom reads, not sure which) I also tried A consistent read is a non-locking, non-blocking read that takes a snapshot of the DB and doesn't see changes happening after the select's point in time.

A locking read is triggered with

The types of locks differ with each isolation level, but even the most basic REPEATABLE READ default isolation level will work:

|

I think you are starting the generators without the locking strategy. The locking is controlled by a third parameter. Might want to check the output of the generator scripts, it prints which ever strategy it is using. With locks enabled there should never be two writers active at the same time, therefore index updates would not be an issue. |

I'd really like to solve the problem differently, but looking at the history of this issue and the fact that other libraries in other languages solved the problem with a sliding-window gap detection, too (f.e. AxonFramework), makes me wonder if there is any other solution to this problem :( As I stated earlier in this issue, when we hit the problem in a project that makes heavy use of projections, we solved the problem by adding projection flags to event metadata. We use a custom MongoDB event store (prooph v6 originally, then modified to be compatible with the prooph v7 event-store interface). Each projection has its own flag, and when it has handled an event, it sets its flag to true. This way, we are 100% sure that every projection sees every single event no matter what happens on the write side. A sliding-window gap detection would work very similarly to that approach, with the benefit that events stay immutable and the consumer is responsible for gap detection. |
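The projection-flag approach described above can be illustrated with plain in-memory data. This is a sketch only: the real setup in the comment is a custom MongoDB event store, and the metadata key `handled_by`, the event layout and the function names are assumptions made for this example.

```python
def unhandled_events(stream, projection):
    """Events on which this projection has not set its flag yet."""
    return [
        event for event in stream
        if not event["metadata"].get("handled_by", {}).get(projection)
    ]


def run_projection(stream, projection, handler):
    """Handle every unflagged event, then mark it as handled."""
    for event in unhandled_events(stream, projection):
        handler(event)
        event["metadata"].setdefault("handled_by", {})[projection] = True


handled = []
stream = [
    {"no": 1, "metadata": {}},
    {"no": 3, "metadata": {}},   # event 2 is not visible yet
]
run_projection(stream, "issue_list", lambda e: handled.append(e["no"]))

# Event 2 becomes visible later, between two events that were already handled.
stream.insert(1, {"no": 2, "metadata": {}})
run_projection(stream, "issue_list", lambda e: handled.append(e["no"]))
print(handled)
```

The late event is still picked up because selection depends on the flag rather than on a remembered position. The cost, as mentioned in this thread, is that events are mutated and every new projection needs its flag added to all existing events.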

|

Reply from Allard Buijze (CTO AxonIQ) on twitter:

|

|

We have 2 different scenarios here: Multiple writers

I think this case seems to be solved by the Single writer

After reading some logs here, it seems to be happening even with |

As I've said multiple times, transactions on the writers have no effect on the problem. The writers do not need a consistent view of the table.

In theory, yes. But, index updates are already not the issue. Global table locks on the writers does not guarantee a consistent view of the data from the select's side of things. Non-blocking reads always have a chance to see the database in a state that it never existed - at least in mysql. |

|

I'm trying to understand the discussion and would like to ask a few questions to make sure that I got it right. Let's look at @danizord example with the Single Writer. Two events, one transaction. If I understand @markkimsal correctly, this assumption is not entirely true.

Hence, his suggestion to use @fritz-gerneth says on the other hand, that this solution would enforce single-read-write for a stream. I guess this is bad for read performance? At least it sounds a bit like that, but I'm not an expert.

Is this really the case? If I understand @markkimsal correctly, it's not. And his suggestion to use We should also make sure that we're not looking at the wrong end. A bug in the projections could also be the root of the problem. TBH I don't think this is the case, because the projection logic is simple enough to be considered robust and stable. But in Germany we say "man soll niemals nie sagen!" (Never say never!) |

|

I also reread the article linked by @prolic earlier in the thread:

I'm starting to believe that this issue not only affects concurrent inserts of different transactions, but also inserts of multiple events within the same transaction. It makes sense somehow, because the auto-increment value is increased at insert time, not at commit time. So this is true for a single transaction, too. If the consumer reads while the writer is in the transaction, the consumer might see newly inserted rows. |

|

It's been a while since the last update on this issue. Now we're also facing the issue with a Postgres Event Store and need a solution. So I've added a simple gap detection mechanism (WIP), see #221 Feedback is very much appreciated! |

|

We can confirm this issue with PostgreSQL for We will try the gap detection and then give further feedback. Thank you so far everybody! |

|

@webdevilopers I can confirm that GapDetection works as it should with Postgres. I have just overridden the projection command with GapDetection and it worked like a charm. No skipped event since March 2020 :). Here is the Command class for a quick try: https://gist.github.com/Mattin/d668fc62073649aa2d473cbda245dd5b |

Issue: under unknown circumstances the `PdoEventStoreReadModelProjector` skips applying events to registered handlers.

I have seen this issue happen only a few times over the past year. Yet since missing events in the projections cause quite a few problems, I have been trying to figure out the issue since then. Creating this issue for tracking and, by any chance, input from others.

Setup: Mysql EventStore & Projections with SingleStreamStrategy running in their own process. DB Server and process server running on different VM hosts.

Projection options:

Symptoms: take a simple set of `IssueCreated`, `IssueUpdated`, `IssueDeleted` events of any exemplary `Issue` AR. Events occur in the typical order of `IssueCreated` -> `IssueUpdated` (n times) -> `IssueDeleted`. The projection simply maintains a list of all (undeleted) issues and their latest values. Each event has a handler registered to either insert a row, update a row or delete the row respectively.

For 99.9% of the time this works perfectly fine as expected. Yet for unknown reasons, very rarely some event is not handled. The effect on the projection differs depending on the event (e.g. `IssueUpdated` -> update lost) but is particularly bad for `IssueCreated`, as rows are missing altogether (subsequent events might rely on their presence).

Resetting the projection solves this issue and all events are applied as expected. But this is pretty impractical to do in a production environment when it takes days each time.

Debugging:

To help me make this problem visible in the first place, I slightly modified the [PdoEventStoreReadModelProjector](https://github.com/prooph/pdo-event-store/blob/master/src/Projection/PdoEventStoreReadModelProjector.php#L575) to simply log a line about which event it is now dispatching:

In normal operations I get sequential messages in this format (`gcl_collection` is my stream's name):

Now there obviously might be gaps in the event no due to `ConcurrencyExceptions` when inserting many events in parallel. But this still gives me an idea if an event has been handled at all. Any `no` in the stream should also show up in my log as being dispatched at least once.

Having this deployed and running for a few weeks now, I finally could capture this event again (on our low-volume testing environment this time). My stream reported a `created` event which had been skipped in the projection:

Looking at the event-stream itself this event is clearly here though:

This is not limited to specific aggregate roots but can happen for any event, unrelated to the aggregate version or such. I have not seen this to happen during replays.

This only seems to happen when I insert events at a very high rate. In fact I think this has only happened so far if a single process rapidly creates many events (not necessarily on the same AR though). My current working theory is that, while ordered in that way, rows become visible to selects in a different order (e.g. the projection selects and gets event `115` while `114` becomes visible to the select a millisecond later or so). But then I'm not that deep into MySQL internals to know if this is possible.

Any pointers on how to continue investigating this issue are welcome.
