
Add area under the Precision-Recall curve (AUPRC): a useful metric for class imbalance. #806

Comments


@pycaret What is the default input argument to a metric defined through the add_metric feature in PyCaret 2.2?


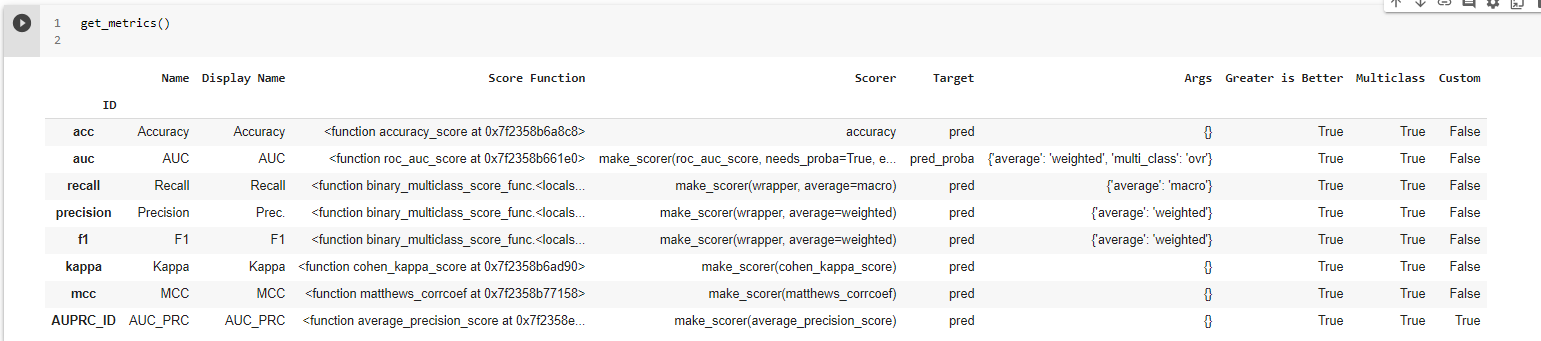

@gm039 You can actually access the metrics PyCaret is using by calling the


@pycaret Thanks! Below are the metrics used. The input arguments to the metric should be Target and Predicted Score, but it is taking Target and Predicted Label, as verified in the previous snapshot. Unfortunately, both calculated average precision values differ from the score shown on the precision-recall curve from evaluate_model(tuned_model_best) (see the PR curve in the previous snapshot).


@gm039 You can pass the Hope this helps?


@gm039 Please close the issue if this answers the question.


@pycaret Thank you so much. It worked.

The area under the Precision-Recall curve (AUPRC) is a very useful metric for class imbalance. Here is the source (https://scikit-learn.org/stable/modules/generated/sklearn.metrics.average_precision_score.html).

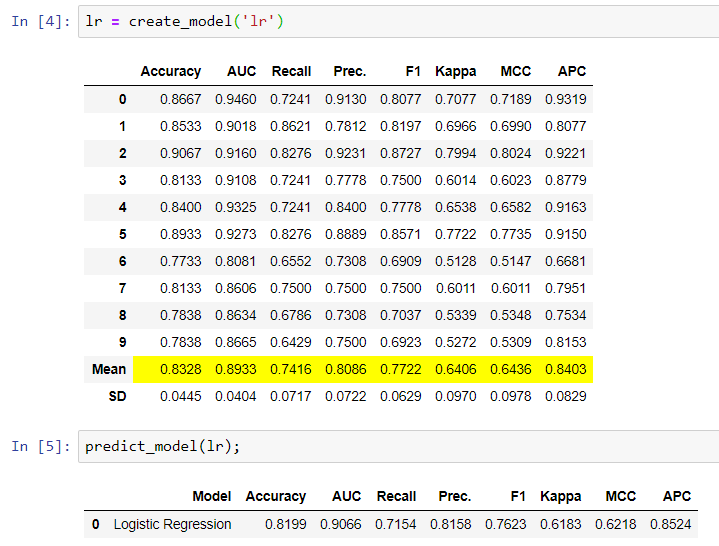

I tried using the add_metric feature in PyCaret 2.2 as follows.

from sklearn.metrics import average_precision_score

add_metric('AUPRC_ID', 'AUC_PRC', average_precision_score, greater_is_better=True)

However, the resulting scores differ from the score shown on the precision-recall curve from evaluate_model(tuned_model_best) (see the snapshot below).

Additionally, the AUC_PRC metric seems to take Target and Predicted Label as input arguments instead of Target and Predicted Score.
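To illustrate why feeding predicted labels instead of predicted scores changes the result, here is a minimal pure-Python sketch of the average precision formula, AP = sum over thresholds of (R_n - R_{n-1}) * P_n. The helper `average_precision` and the toy data are hypothetical and only meant to mirror what `average_precision_score` computes; they are not PyCaret's or scikit-learn's code. As far as I can tell from the PyCaret documentation, `add_metric` also accepts a `target` argument (default `'pred'`, with `'pred_proba'` as an alternative), which would control which of the two inputs the metric receives; treat that as an assumption to check against your installed version.

```python
def average_precision(y_true, y_score):
    """Hypothetical helper: AP = sum_n (R_n - R_{n-1}) * P_n,
    evaluated at each distinct score value used as a threshold."""
    total_pos = sum(y_true)
    ap, prev_recall = 0.0, 0.0
    for thr in sorted(set(y_score), reverse=True):
        # True labels of every sample predicted positive at this threshold
        hits = [t for t, s in zip(y_true, y_score) if s >= thr]
        tp = sum(hits)
        precision = tp / len(hits)
        recall = tp / total_pos
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Toy data (made up for illustration)
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]                     # predicted probabilities
y_label = [1 if s >= 0.5 else 0 for s in y_score]   # hard labels at 0.5

ap_from_scores = average_precision(y_true, y_score)  # about 0.833
ap_from_labels = average_precision(y_true, y_label)  # 0.75

print(f"AP from scores: {ap_from_scores:.3f}")
print(f"AP from labels: {ap_from_labels:.3f}")
```

With hard labels the ranking collapses to two levels, so the curve has a single interior point and the area generally differs from the one computed on the full score ranking. That would be consistent with the mismatch against the precision-recall plot described above.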
