
Very slow speed in complex_norm() function. #740

Comments

|

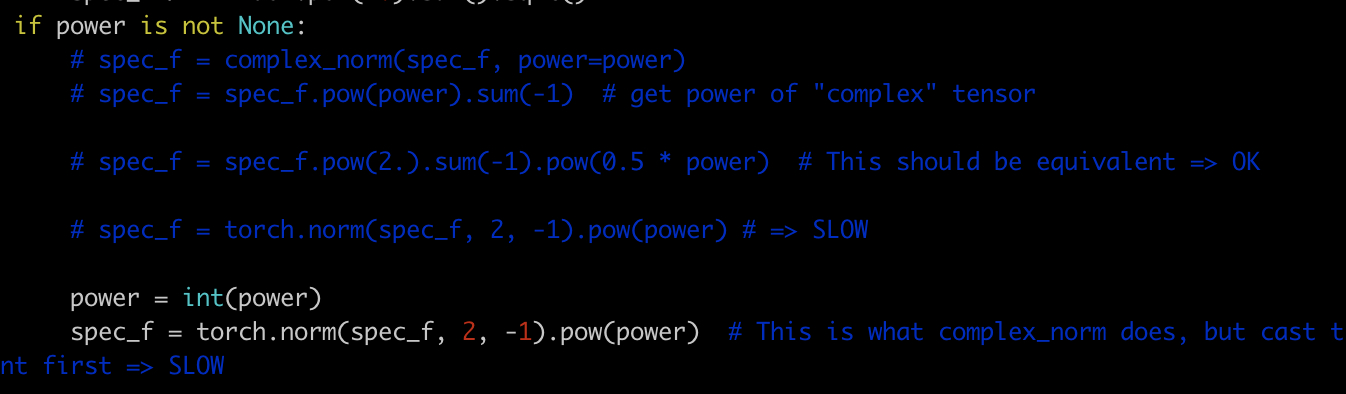

Thanks for helping debug this! Can you try with either of the following?

spec_f.pow(2.).sum(-1).pow(0.5 * power)  # This should be equivalent

torch.norm(spec_f, 2, -1).pow(power)  # This is what complex_norm does

power = int(power)
torch.norm(spec_f, 2, -1).pow(power)  # This is what complex_norm does, but cast to int first
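One way to compare the three variants above is a small timing harness. The following is a minimal sketch, not the script used in this thread: the tensor shape, the `time_it` helper, and the repeat count are assumptions chosen for illustration. Timings taken in the main process may not show the slowdown reported for DataLoader worker processes.

```python
import time
import torch

def time_it(fn, repeats=10):
    """Return the mean wall-clock seconds per call of fn() (hypothetical helper)."""
    fn()  # warm-up call, not timed
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats

# Assumed stand-in for an STFT output: (freq, time, 2) with real/imag in the last dim.
spec_f = torch.randn(201, 400, 2)
power = 2.0

variants = {
    "pow/sum/pow": lambda: spec_f.pow(2.).sum(-1).pow(0.5 * power),
    "torch.norm, float power": lambda: torch.norm(spec_f, 2, -1).pow(power),
    "torch.norm, int power": lambda: torch.norm(spec_f, 2, -1).pow(int(power)),
}

timings = {name: time_it(fn) for name, fn in variants.items()}
for name, seconds in timings.items():
    print(f"{name}: {seconds * 1e3:.3f} ms per call")
```

To approximate the reported setup, the same calls would have to run inside a worker process, for example from a dataset's `__getitem__` with `num_workers > 0`.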

=> OK.

=> SLOW

=> SLOW

Looks like

I think this is an issue I posted about in

Thanks to both of you for debugging :) This does look like the same problem. While this is being fixed, let's replace the torch.norm call with

# Replace by torch.norm once issue is fixed
# https://github.com/pytorch/pytorch/issues/34279
complex_tensor.pow(2.).sum(-1).pow(0.5 * power)

Do you want to open a pull request for this?
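A quick check that the proposed replacement gives the same values as the torch.norm version can be sketched as follows. The function name `complex_norm_workaround` and the test tensor are assumptions for illustration; this is not the code merged into torchaudio.

```python
import torch

def complex_norm_workaround(complex_tensor, power=1.0):
    """Norm of a (..., 2) real/imag tensor raised to `power`, without torch.norm.

    Hypothetical name; the body is the expression proposed in this thread.
    """
    return complex_tensor.pow(2.).sum(-1).pow(0.5 * power)

torch.manual_seed(0)
complex_tensor = torch.randn(4, 5, 2)  # assumed shape, last dim = (real, imag)

for power in (1.0, 2.0):
    expected = torch.norm(complex_tensor, 2, -1).pow(power)
    actual = complex_norm_workaround(complex_tensor, power)
    assert torch.allclose(actual, expected, atol=1e-6)
```

Note that the 0.4.0 expression `spec_f.pow(power).sum(-1)` matches this only for `power=2`; for other powers it sums the components raised to `power` rather than raising the norm to `power`.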

Looks like you checked the speed on CPU. When I tested my script I ran the script on CPU because

Closed by #747

Co-authored-by: holly1238 <77758406+holly1238@users.noreply.github.com>

🐛 Bug

When I call spectrogram() in torchaudio 0.5.0, my training script runs very slowly. I downgraded to PyTorch 1.4 and torchaudio 0.4.0, and the script ran fast again. So I compared 0.4.0 with 0.5.0 and found that complex_norm() is the root cause of the slowdown:

audio/torchaudio/functional.py

Line 171 in bc1df48

If I install torchaudio 0.5.0 and change line 171 to the 0.4.0 version, the speed is the same as with 0.4.0.

For example, my training script processes about 70 files/sec with torchaudio 0.5.0. With the 0.4.0 code it is about 5.7x faster.

I cannot share the script because the code belongs to my company. But I think the speed difference depends on the process that calls complex_norm(). For example, voxceleb_trainer calls spectrogram() in the main process and shows no slowdown, while my script calls spectrogram() in a process forked by PyTorch's DataLoader.

To Reproduce

Steps to reproduce the behavior:

Change spec_f = complex_norm(spec_f, power=power) to spec_f = spec_f.pow(power).sum(-1)

Expected behavior

Environment

torchaudio.__version__ print? (If applicable)

Please copy and paste the output from our environment collection script (or fill out the checklist below manually).

Collecting environment information...

PyTorch version: 1.5.0+cu101

Is debug build: No

CUDA used to build PyTorch: 10.1

OS: Ubuntu 18.04.3 LTS

GCC version: (Ubuntu 7.4.0-1ubuntu1~18.04.1) 7.4.0

CMake version: version 3.10.2

Python version: 3.6

Is CUDA available: Yes

CUDA runtime version: 10.1.243

GPU models and configuration:

GPU 0: Tesla P40

GPU 1: Tesla P40

GPU 2: Tesla P40

GPU 3: Tesla P40

GPU 4: Tesla P40

GPU 5: Tesla P40

GPU 6: Tesla P40

GPU 7: Tesla P40

Nvidia driver version: 418.87.00

cuDNN version: Could not collect

Versions of relevant libraries:

[pip3] numpy==1.18.4

[pip3] torch==1.5.0+cu101

[pip3] torchaudio==0.5.0

[pip3] torchvision==0.6.0+cu101

[conda] Could not collect
