Problem with Polytope Sampler #1225

Comments

Thanks for flagging, this is a great observation. It is indeed a problem that "small step" means different things in different directions; we really want this to be isotropic. I'm not quite sure I fully understand where things go wrong in the code, so I'll have to take a closer look to better understand that. But I think your suggestion sounds great, and we'd very much welcome a PR! I think we can go even a bit more low-level than build it just into the

One of the things we may want to think about a bit more is how we deal with cases in which we don't have bounds, and the polytope is defined purely via inequality constraints. I guess we could just normalize the coefficients in each column of

I will first file a PR with the simpler solution in I hope to have a first PR by the end of the week ;)

I approved #1341, but I'm going to leave this open since there are a few minor improvements we can make:

Summary:

## Motivation

As discussed in issue #1225, the polytope sampler runs into problems if the variables live in different dimensions. This PR fixes the issue using the approach mentioned in the issue above.

### Have you read the [Contributing Guidelines on pull requests](https://github.com/pytorch/botorch/blob/main/CONTRIBUTING.md#pull-requests)?

Yes

Pull Request resolved: #1341

Test Plan: Unit tests

Reviewed By: esantorella

Differential Revision: D38485035

Pulled By: Balandat

fbshipit-source-id: 8e6ed1c1b81f636d108becb1ab5cf2ca13294420

Summary:

## Motivation

This fixes a very minor issue, and I've probably already spent too much time on it. Unless anyone feels really strongly about this, I'd prefer to have this quickly either accepted or rejected rather than spend a while iterating on revisions.

In the past it was possible to use indexers with dtypes that torch does not accept as indexers via `equality_constraints` and `inequality_constraints`. This was never really intended behavior and stopped being supported in #1341 (discussed in #1225). This PR makes errors more informative if someone does try to use the wrong dtypes, since the existing error message did not make clear where the error came from. I also refactored a test in test_initalizers.py.

### Have you read the [Contributing Guidelines on pull requests](https://github.com/pytorch/botorch/blob/main/CONTRIBUTING.md#pull-requests)?

Yes

Pull Request resolved: #1345

Test Plan: Unit tests for errors raised

## Related PRs

#1341

Reviewed By: Balandat

Differential Revision: D38627695

Pulled By: esantorella

fbshipit-source-id: e9e4e917b79f81a36d74b79ff3b0f710667283cb

* adds an `n_thinning` argument to `sample_polytope` and `HitAndRunPolytopeSampler`; changes the defaults for `HitAndRunPolytopeSampler` args to `n_burnin=200` and `n_thinning=20`
* normalizes the (inequality and equality) constraints in `HitAndRunPolytopeSampler` to avoid the same issue as pytorch#1225
* introduces `normalize_dense_linear_constraints` to normalize constraints given in dense format to the unit cube
* deprecates `normalize_linear_constraint`; `normalize_sparse_linear_constraints` is to be used instead

Note: This change is in preparation for fixing facebook/Ax#2373

Summary:

This set of changes does the following:

* adds an `n_thinning` argument to `sample_polytope` and `HitAndRunPolytopeSampler`; changes the defaults for `HitAndRunPolytopeSampler` args to `n_burnin=200` and `n_thinning=20`
* changes `HitAndRunPolytopeSampler` to take the `seed` arg in its constructor, and removes the arg from the `draw()` method (the method on the base class is adjusted accordingly). The resulting behavior is that if a `HitAndRunPolytopeSampler` is instantiated with the same args and seed, then the sequence of `draw()`s will be deterministic. `DelaunayPolytopeSampler` is stateless, and so retains its existing behavior.
* normalizes the (inequality and equality) constraints in `HitAndRunPolytopeSampler` to avoid the same issue as #1225. If `bounds` are not provided, emits a warning that this cannot be performed (doing this would require vertex enumeration of the constraint polytope, which is NP-hard and too costly).
* introduces `normalize_dense_linear_constraints` to normalize constraints given in dense format to the unit cube
* removes `normalize_linear_constraint`; `normalize_sparse_linear_constraints` is to be used instead
* simplifies some of the testing code

Note: This change is in preparation for fixing facebook/Ax#2373

Pull Request resolved: #2358

Test Plan: Ran a stress test to make sure this doesn't cause flaky tests: https://www.internalfb.com/intern/testinfra/testconsole/testrun/3940649908470083/

Reviewed By: saitcakmak

Differential Revision: D58068753

Pulled By: Balandat

fbshipit-source-id: 9a75c547a3493e393cd7e724edd984318b76e1f4
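To make the burn-in, thinning, and seeding behavior described in this commit message more concrete, here is a toy sketch in plain Python. It is not BoTorch's `HitAndRunPolytopeSampler`: the class name `ToyHitAndRunBoxSampler` is hypothetical, it samples from a simple box rather than a general polytope, and only the argument names `n_burnin`, `n_thinning`, and `seed` and their defaults are taken from the text above.

```python
import math
import random


class ToyHitAndRunBoxSampler:
    """Toy hit-and-run sampler on a box (hypothetical, for illustration only)."""

    def __init__(self, lower, upper, n_burnin=200, n_thinning=20, seed=None):
        self.lower, self.upper = list(lower), list(upper)
        self.n_thinning = n_thinning
        # The generator is seeded once, in the constructor, so the whole
        # sequence of draw() calls is reproducible for a given seed.
        self.rng = random.Random(seed)
        # Start at the box center and discard the burn-in steps.
        self.x = [(lo + hi) / 2 for lo, hi in zip(self.lower, self.upper)]
        for _ in range(n_burnin):
            self._step()

    def _step(self):
        # Pick a direction uniformly on the unit sphere.
        d = [self.rng.gauss(0.0, 1.0) for _ in self.x]
        norm = math.sqrt(sum(di * di for di in d))
        d = [di / norm for di in d]
        # Find the interval of t for which x + t * d stays inside the box.
        t_min, t_max = -math.inf, math.inf
        for xi, di, lo, hi in zip(self.x, d, self.lower, self.upper):
            if di > 0:
                t_min, t_max = max(t_min, (lo - xi) / di), min(t_max, (hi - xi) / di)
            elif di < 0:
                t_min, t_max = max(t_min, (hi - xi) / di), min(t_max, (lo - xi) / di)
        # Move to a uniformly chosen point on that chord; clamp to guard
        # against floating-point rounding at the boundary.
        t = self.rng.uniform(t_min, t_max)
        self.x = [
            min(max(xi + t * di, lo), hi)
            for xi, di, lo, hi in zip(self.x, d, self.lower, self.upper)
        ]

    def draw(self, n=1):
        # Keep only every n_thinning-th state of the chain.
        samples = []
        for _ in range(n):
            for _ in range(self.n_thinning):
                self._step()
            samples.append(list(self.x))
        return samples


sampler_a = ToyHitAndRunBoxSampler([0.0, 0.0], [1.0, 1.0], seed=0)
sampler_b = ToyHitAndRunBoxSampler([0.0, 0.0], [1.0, 1.0], seed=0)
first, second = sampler_a.draw(2), sampler_a.draw(2)
reference = sampler_b.draw(4)
print(first + second == reference)
```

Because the chain state and the random generator live on the instance, two samplers built with the same arguments and seed walk through the same states, regardless of how the draws are split across calls.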

Hi,

I encountered a problem with `get_polytope_samples` when the individual variables live on very different scales. Here is a small didactic example:

The bounds are as follows:

In addition an equality constraint is applied on the first three variables with all coefficients equal to 1 and an RHS of 1.

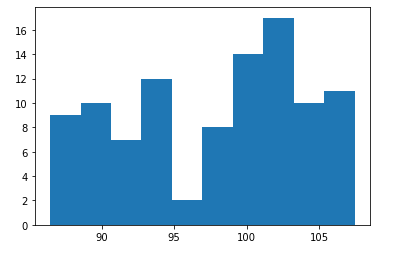

Drawing 100 samples leads to the following histogram for the fourth variable (bounds: 30-700). The distribution is not uniform at all, and the values stay in a very narrow interval.

In the next step, I scaled all variables into the 0-1 range, including the equality constraint, by using the formula below:

where

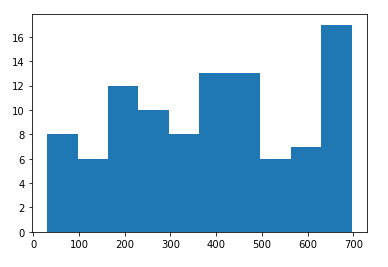

`xi*` is the scaled variable and `s_i` is the difference between the upper and lower bound of the variable. The code below generates 100 samples for this case, including scaling back into the original bounds:

Now the fourth variable is sampled in a much more uniform way:

I think the reason for this behavior is that the Hit and Run sampler needs only a small step to reach the boundary of the polytope (defined by the equality constraint), and that this step is too small to guarantee proper sampling of the fourth variable.
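The effect described above can be illustrated with a small plain-Python sketch. It computes how far a single hit-and-run step can move the fourth variable along one fixed direction, first in the original space and then in the unit cube. Only the 30-700 bounds of the fourth variable come from this post; the 0-1 bounds of the other three variables, the starting point, the direction, and the helper names are assumptions made for illustration, and the equality constraint is left out for simplicity.

```python
import math


def chord_interval(x, d, lower, upper):
    """Return (t_min, t_max) such that lower <= x + t * d <= upper elementwise."""
    t_min, t_max = -math.inf, math.inf
    for xi, di, lo, hi in zip(x, d, lower, upper):
        if di > 0:
            t_min = max(t_min, (lo - xi) / di)
            t_max = min(t_max, (hi - xi) / di)
        elif di < 0:
            t_min = max(t_min, (hi - xi) / di)
            t_max = min(t_max, (lo - xi) / di)
    return t_min, t_max


def reachable_fraction(x, d, lower, upper, index):
    """Fraction of variable `index`'s range that one step along d can cover."""
    t_min, t_max = chord_interval(x, d, lower, upper)
    travel = abs(d[index]) * (t_max - t_min)
    return travel / (upper[index] - lower[index])


# Assumed bounds: only the fourth variable's 30-700 range is from the post.
lower = [0.0, 0.0, 0.0, 30.0]
upper = [1.0, 1.0, 1.0, 700.0]
x = [0.5, 0.5, 0.5, 365.0]   # box center
d = [0.5, 0.5, 0.5, 0.5]     # a unit-length direction

raw = reachable_fraction(x, d, lower, upper, index=3)
scaled = reachable_fraction([0.5] * 4, d, [0.0] * 4, [1.0] * 4, index=3)
print(f"unscaled: {raw:.4f}, scaled: {scaled:.4f}")
```

In the unscaled space the chord is cut off by the narrow variables after a step of length 1, so the fourth variable can cover only 1/670 of its range in that step, whereas in the unit cube the same direction spans its full range.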

I would recommend building this scaling directly into the `get_polytope_samples` method (I could provide a PR), as it is also used by `gen_batch_initial_conditions` when linear constraints are present. If people are not running the optimization in the scaled variable space but are using a `Normalize` `InputTransform` instead, this behavior can lead to problems in the optimization process.

What do you think?
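As a rough sketch of the scaling proposed here, the following plain-Python helpers rewrite a linear equality constraint in terms of the scaled variables and map scaled samples back. They assume min-max scaling, `xi* = (x_i - l_i) / s_i`, with `l_i` the lower bound (a symbol not used in the post). The function names and the example bounds are hypothetical, and this is not how BoTorch implements it.

```python
def scale_linear_constraint(coeffs, rhs, lower, upper):
    """Rewrite sum_i a_i * x_i = rhs in terms of x_i* = (x_i - l_i) / s_i.

    Substituting x_i = l_i + s_i * x_i* gives
    sum_i (a_i * s_i) * x_i* = rhs - sum_i a_i * l_i.
    """
    spans = [hi - lo for lo, hi in zip(lower, upper)]
    scaled_coeffs = [a * s for a, s in zip(coeffs, spans)]
    scaled_rhs = rhs - sum(a * lo for a, lo in zip(coeffs, lower))
    return scaled_coeffs, scaled_rhs


def unscale(x_scaled, lower, upper):
    """Map a point from the unit cube back into the original bounds."""
    return [lo + (hi - lo) * xs for xs, lo, hi in zip(x_scaled, lower, upper)]


# Hypothetical bounds; only the 30-700 range is taken from the post.
lower = [0.25, 0.0, 0.0, 30.0]
upper = [0.75, 1.0, 1.0, 700.0]
coeffs, rhs = [1.0, 1.0, 1.0, 0.0], 1.0  # x1 + x2 + x3 = 1

scaled_coeffs, scaled_rhs = scale_linear_constraint(coeffs, rhs, lower, upper)

# A point that satisfies the scaled constraint ...
x_scaled = [0.5, 0.25, 0.25, 0.5]
lhs_scaled = sum(a * xs for a, xs in zip(scaled_coeffs, x_scaled))

# ... maps back to a point that satisfies the original one.
x_orig = unscale(x_scaled, lower, upper)
lhs_orig = sum(a * xo for a, xo in zip(coeffs, x_orig))
print(scaled_coeffs, scaled_rhs, x_orig)
```

Sampling would then happen in the unit cube with the scaled constraint, and `unscale` would be applied to the samples afterwards.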

Best,

Johannes