[Reshape] Allow the shape to be computed from constants #3827

Have you tried -const-fold-ops? It tries to constant fold nodes during loading.

Without the flag I get one error:

With the -const-fold-ops flag I get multiple errors:

Maybe I misunderstood the issue. Are you saying the output of Conv is constant, as in it can be deduced when the graph is compiled?

The tensor size (shape) is constant, not the tensor values. The Reshape operator has two operands: a tensor and a shape. The problem is about the shape operand.
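
To make the distinction concrete: a Reshape's output dims depend only on the input tensor's dims and the values of the shape operand, never on the input's element values. The sketch below assumes ONNX Reshape semantics with `allowzero = 0` (a `0` copies the input dim at that position, a single `-1` is inferred from the element count); `reshapeOutputDims` is a hypothetical helper, not a Glow API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical helper (not a Glow API): output dims of an ONNX-style Reshape
// with allowzero = 0. A 0 in `shape` copies the input dim at that position and
// a single -1 is inferred from the total element count. Only the input *dims*
// are read; the input tensor's element values never matter.
std::vector<int64_t> reshapeOutputDims(const std::vector<int64_t> &inDims,
                                       const std::vector<int64_t> &shape) {
  int64_t total = 1;
  for (int64_t d : inDims)
    total *= d;

  std::vector<int64_t> out(shape.size());
  int64_t known = 1;           // product of all explicitly given dims
  std::ptrdiff_t inferAt = -1; // position of the -1 entry, if any
  for (std::size_t i = 0; i < shape.size(); ++i) {
    if (shape[i] == -1) {
      if (inferAt != -1)
        throw std::invalid_argument("at most one -1 is allowed");
      inferAt = static_cast<std::ptrdiff_t>(i);
      continue;
    }
    if (shape[i] == 0) {
      if (i >= inDims.size())
        throw std::invalid_argument("0 refers to a missing input dim");
      out[i] = inDims[i];
    } else if (shape[i] > 0) {
      out[i] = shape[i];
    } else {
      throw std::invalid_argument("negative dim other than -1");
    }
    known *= out[i];
  }

  if (inferAt != -1) {
    if (known == 0 || total % known != 0)
      throw std::invalid_argument("cannot infer the -1 dim");
    out[static_cast<std::size_t>(inferAt)] = total / known;
  } else if (known != total) {
    throw std::invalid_argument("element count mismatch");
  }
  return out;
}
```

For example, with an NHWC input of {1, 19, 19, 24}, a shape operand of {0, -1, 4} resolves to {1, 2166, 4} purely from static dims, so nothing about the tensor's runtime data is needed.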

Sorry, derp moment. Are you sure the problem is not elsewhere in the graph? I just double-checked on ONNX SSD, which has a similar pattern, and it worked. Although, granted, that's on an internal branch...

Not sure 🙂 I don't think there's any real downside to enabling it by default.

Good question. Are you sure it's an issue with Shape? It should always be loaded as a Constant, so that's weird. Otherwise, I'm wondering how the indices are represented for the Gather, and the data for the Unsqueeze. Can you try adding in some

The problem above is with the "shape" input operand of the Reshape node (and NOT the Shape node).

@mciprian13 Right, but the debug messages you're showing say that there are multiple places where a Constant is not found. The const fold ops flag works by trying to constant fold each op as it's loaded. Compilation might fail at the shape input for Reshape, but the problem could come from an earlier node that flows into Reshape's shape input and was not correctly constant folded. Can you share something that reproduces it, even just the part of the proto that you showed in the issue?
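
A toy model of the per-op folding described above, under heavy simplifying assumptions: `ToyLoader`, `loadShape`, `loadOp` and `reshapeTarget` are hypothetical names, values are flat `int64_t` vectors, and the `constFoldOps` field only stands in for `-const-fold-ops`. It illustrates why the error surfaces at Reshape even when the real culprit is an upstream node that was not folded.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <optional>
#include <stdexcept>
#include <string>
#include <vector>

using Values = std::vector<int64_t>;

// Hypothetical, heavily simplified loader. For each output name it records
// either a folded constant or std::nullopt (a value only known at run time).
struct ToyLoader {
  bool constFoldOps = true; // stand-in for the -const-fold-ops flag
  std::map<std::string, std::optional<Values>> values;

  // Shape only needs its input's static dims, so it always yields a constant.
  void loadShape(const std::string &out, const Values &staticDims) {
    values[out] = staticDims;
  }

  // Any other op folds only if folding is on and every input is a constant.
  void loadOp(const std::string &out, const std::vector<std::string> &inputs,
              const std::function<Values(const std::vector<Values> &)> &fn) {
    if (!constFoldOps) {
      values[out] = std::nullopt; // folding disabled: stays a runtime value
      return;
    }
    std::vector<Values> args;
    for (const auto &in : inputs) {
      auto it = values.find(in);
      if (it == values.end() || !it->second) {
        values[out] = std::nullopt; // a non-constant input blocks folding
        return;
      }
      args.push_back(*it->second);
    }
    values[out] = fn(args); // evaluate the op now, at load time
  }

  // Reshape insists that its shape input is already a constant.
  Values reshapeTarget(const std::string &shapeInput) const {
    auto it = values.find(shapeInput);
    if (it == values.end() || !it->second)
      throw std::runtime_error("Reshape: shape input '" + shapeInput +
                               "' is not a Constant");
    return *it->second;
  }
};
```

With folding enabled, a Shape → Gather(index 0) → Concat chain yields a constant {1, -1, 4} for Reshape; with it disabled, the Gather output stays a runtime value and the failure is only reported at Reshape.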

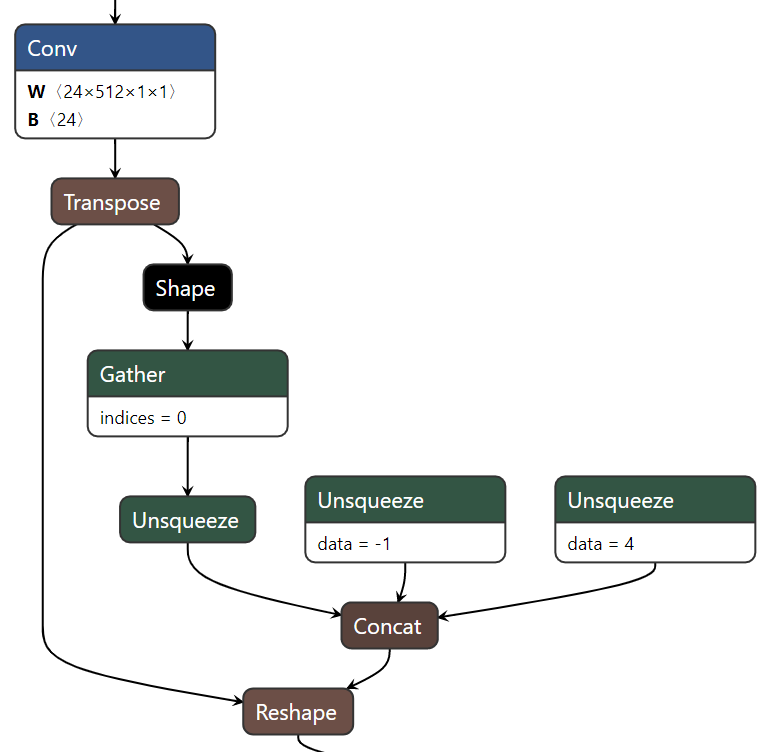

The model with the trouble (the picture above is from this model) is this: I think the option

OK, figured it out. The code is using mod->getConstantByName rather than the loader's own name lookup. The former iterates through the Module's constant vector and checks names against node names, except those names have been modified to be unique, so no match happens. The latter uses the hash map in ProtobufLoader, which has the original names. So that mystery is solved. :)
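
The lookup mismatch can be reproduced in miniature. Everything here is hypothetical and does not reproduce Glow's actual renaming rules: `ToyModule` stands in for the module (it rewrites requested names to be unique and, as an extra assumption for the demo, replaces characters outside `[A-Za-z0-9_]`), and `ToyLoader` keeps its own map from original model names, playing the role of the hash map in ProtobufLoader.

```cpp
#include <cassert>
#include <cctype>
#include <map>
#include <memory>
#include <string>
#include <vector>

struct ToyConstant {
  std::string name; // the (possibly rewritten) name the module stores
};

// Hypothetical module: rewrites each requested name to contain only
// [A-Za-z0-9_] and to be unique, then stores constants in a vector.
class ToyModule {
public:
  ToyConstant *createConstant(const std::string &requested) {
    std::string base;
    for (char c : requested)
      base += (std::isalnum(static_cast<unsigned char>(c)) ? c : '_');
    std::string name = base;
    for (int n = 1; getConstantByName(name) != nullptr; ++n)
      name = base + "__" + std::to_string(n); // suffix on collision
    constants_.push_back(std::make_unique<ToyConstant>(ToyConstant{name}));
    return constants_.back().get();
  }

  // Linear scan comparing against the *stored* (rewritten) names.
  ToyConstant *getConstantByName(const std::string &name) const {
    for (const auto &c : constants_)
      if (c->name == name)
        return c.get();
    return nullptr;
  }

private:
  std::vector<std::unique_ptr<ToyConstant>> constants_;
};

// Hypothetical loader: remembers which constant each *original* name maps to.
class ToyLoader {
public:
  explicit ToyLoader(ToyModule &mod) : mod_(mod) {}

  ToyConstant *loadConstant(const std::string &originalName) {
    ToyConstant *c = mod_.createConstant(originalName);
    byOriginalName_[originalName] = c;
    return c;
  }

  // Buggy lookup: asks the module using the original model name.
  ToyConstant *lookupViaModule(const std::string &originalName) const {
    return mod_.getConstantByName(originalName);
  }

  // Fixed lookup: uses the loader's own map keyed by original names.
  ToyConstant *lookupViaLoader(const std::string &originalName) const {
    auto it = byOriginalName_.find(originalName);
    return it == byOriginalName_.end() ? nullptr : it->second;
  }

private:
  ToyModule &mod_;
  std::map<std::string, ToyConstant *> byOriginalName_;
};
```

In this toy, looking up "Reshape/shape:0" through the module returns nullptr because the stored name is "Reshape_shape_0". Worse, a second constant requested as "Reshape_shape_0" is stored as "Reshape_shape_0__1", so a module lookup by that original name silently returns the first constant. The loader's own map avoids both problems.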

Nice find, @ayermolo! It seems a simple one-line fix is all that's needed. @mciprian13, can you try this to make sure it fixes the problem for you? I believe it's simply changing: glow/include/glow/Importer/ProtobufLoader.h Line 230 in 69124a0

to

Thanks for the solution. It works!

Summary:

**Summary**
- Fix for pytorch#3827.
- Enable the constant folding by default.

**Documentation**
None

**Test Plan**
None

Pull Request resolved: pytorch#4027
Differential Revision: D19449778
Pulled By: jfix71
fbshipit-source-id: 0de441ef22efd759d65eff97f8d2d00269b1e270

Should be fixed as of 8557756.

Summary:

**Summary**
- Fix for pytorch#3827.
- Enable the constant folding by default.

**Documentation**
None

**Test Plan**
None

Pull Request resolved: pytorch#4027
Reviewed By: opti-mix
Differential Revision: D19449778
Pulled By: jfix71
fbshipit-source-id: 031313315ad9d51df7a7d298ee9ffbf0b6baa9b8

Assume we have the following case:

Assume also that, even though the graph has this form, all the tensor sizes are statically known (that is, the sizes of the Conv and Transpose outputs are known). Right now this model does not work because the Reshape node complains that its "shape" operand is not explicitly a Constant, even though conceptually the shape is a constant.

Can we do something to support this case?

Maybe something like moving the requirement that the "shape" operand be a Constant to after all the graph optimizations have taken place.
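
A sketch of that ordering, assuming a much-simplified IR: while loading, a Reshape's shape operand may be any node; a fold step replaces it with a Constant when it can be evaluated from static dims; and only a final verifier insists on a Constant. `Node`, `tryFold`, `foldReshapeShape` and `verifyReshape` are hypothetical, not Glow's classes or passes, and Unsqueeze is omitted because it does not change the folded values in this flat representation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical mini-IR, not Glow's. Shape carries the *static* dims of the
// tensor it measures; Runtime stands for a value only known at run time.
struct Node {
  enum Kind { Constant, Shape, Gather, Concat, Runtime };
  Kind kind = Runtime;
  std::vector<int64_t> values; // Constant payload or Shape's static dims
  std::vector<std::shared_ptr<Node>> inputs;
  int64_t index = 0; // Gather position
};
using NodePtr = std::shared_ptr<Node>;

NodePtr makeNode(Node::Kind kind, std::vector<int64_t> values = {},
                 std::vector<NodePtr> inputs = {}, int64_t index = 0) {
  auto n = std::make_shared<Node>();
  n->kind = kind;
  n->values = std::move(values);
  n->inputs = std::move(inputs);
  n->index = index;
  return n;
}

// Try to evaluate a node at compile time; std::nullopt means "not foldable".
std::optional<std::vector<int64_t>> tryFold(const NodePtr &n) {
  switch (n->kind) {
  case Node::Constant:
  case Node::Shape: // static dims suffice; the measured data is irrelevant
    return n->values;
  case Node::Gather: {
    auto in = tryFold(n->inputs[0]);
    if (!in || n->index < 0 || n->index >= static_cast<int64_t>(in->size()))
      return std::nullopt;
    return std::vector<int64_t>{(*in)[static_cast<std::size_t>(n->index)]};
  }
  case Node::Concat: {
    std::vector<int64_t> out;
    for (const auto &input : n->inputs) {
      auto v = tryFold(input);
      if (!v)
        return std::nullopt;
      out.insert(out.end(), v->begin(), v->end());
    }
    return out;
  }
  case Node::Runtime:
    break;
  }
  return std::nullopt;
}

struct Reshape {
  NodePtr shape; // the "shape" operand; any node kind while loading
};

// Optimization step: replace a foldable shape operand with a Constant node.
void foldReshapeShape(Reshape &r) {
  if (r.shape->kind == Node::Constant)
    return;
  if (auto v = tryFold(r.shape))
    r.shape = makeNode(Node::Constant, *v);
}

// Deferred check, meant to run only after optimizations like the one above.
bool verifyReshape(const Reshape &r) { return r.shape->kind == Node::Constant; }
```

Here a Shape → Gather → Concat operand fails the check if it is applied at load time, passes once folded into the constant {1, -1, 4}, and an operand that truly depends on runtime data still fails after folding.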
