
test_lstm in quantization.bc.test_backward_compatibility.TestSerialization fails on Intel Cascade Lake machines

#59098

Comments

|

When #43209 was reported, this issue was irreproducible at your end with AVX2 vectorization. In #56992, I used If you'd like to debug this issue, you can trigger CI checks in #56992 & SSH to the CI machine running Maybe Intel folks, such as @mingfeima, can also help out. Thank you! |

Title changed from "test_lstm in quantization.bc.test_backward_compatibility.TestSerialization fails on CI for AVX512 ATen kernels, but passes locally" to "test_lstm in quantization.bc.test_backward_compatibility.TestSerialization fails on xlarge resource-class for AVX512 ATen kernels".

Title changed from "test_lstm in quantization.bc.test_backward_compatibility.TestSerialization fails on xlarge resource-class for AVX512 ATen kernels" to "test_lstm in quantization.bc.test_backward_compatibility.TestSerialization fails on xlarge resource-class".

|

Hello @supriyar, @jerryzh168 & @mingfeima, on the current master branch's source-code, this issue can be reproduced with an

Hence it's been established that this issue is hardware-dependent. cc @ezyang @malfet, as this test might have to be skipped on CI when AVX512 support is added to ATen. A more pressing concern, though, is the possibility of silent failures not currently covered by tests, which could lead to incorrect results on these machines. Thank you! |

Title changed from "test_lstm in quantization.bc.test_backward_compatibility.TestSerialization fails on xlarge resource-class" to "test_lstm in quantization.bc.test_backward_compatibility.TestSerialization fails on Intel Cascade Lake machines".

|

@jerryzh168 @supriyar @mingfeima @CaoZhongZ The machine is definitely a Cascade Lake one as it has Thanks! |


|

Hey @imaginary-person, two Qs for you. First, what do you need on your end to unblock? Skipping the test as known broken on Cascade Lake seems sufficient to shut up CI and let you continue to test on xlarge. Second, any chance we can isolate this to a simple C program or assembly that gets misrun on Cascade Lake? I'd go about this by minimizing the test case, first tracing it down to a single operator from the test, and then spelunking into the operator implementation to get out the specific code that is responsible for doing the computation that is turning out incorrectly. |
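The minimization approach suggested above could be sketched roughly as follows. This is only an illustration in plain Python, not PyTorch code: `first_divergence`, the stage lists and the toy lambdas are all hypothetical names, and in practice each stage would wrap one operator from the test, run once on the suspect machine and once on a known-good one.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

# A stage is a (name, function) pair; the function maps a list of floats
# to a list of floats. Real stages would wrap individual operators.
Stage = Tuple[str, Callable[[Sequence[float]], Sequence[float]]]


def first_divergence(
    stages_under_test: List[Stage],
    stages_reference: List[Stage],
    inputs: Sequence[float],
    rel_tol: float = 1e-5,
    abs_tol: float = 1e-8,
) -> Optional[str]:
    """Return the name of the first stage whose output differs, else None.

    Every stage is fed the *reference* output of the previous stage, so an
    early error cannot hide behind (or be blamed on) a later stage.
    """
    current = list(inputs)
    for (name, under_test), (_, reference) in zip(stages_under_test, stages_reference):
        got = list(under_test(current))
        expected = list(reference(current))
        same = len(got) == len(expected) and all(
            math.isclose(g, e, rel_tol=rel_tol, abs_tol=abs_tol)
            for g, e in zip(got, expected)
        )
        if not same:
            return name
        current = expected
    return None


# Toy pipelines: the "shift" stage of the suspect pipeline is deliberately wrong.
reference = [
    ("scale", lambda xs: [2.0 * x for x in xs]),
    ("shift", lambda xs: [x + 1.0 for x in xs]),
]
suspect = [
    ("scale", lambda xs: [2.0 * x for x in xs]),
    ("shift", lambda xs: [x + 1.5 for x in xs]),
]

print(first_divergence(suspect, reference, [1.0, 2.0]))
```

Once a single stage is identified, the same comparison can be repeated inside that operator's implementation to narrow things down further.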

|

Hello @ezyang,

|

|

FBGEMM leverages the |

|

From the discussion in pytorch/FBGEMM#125, it seems that |

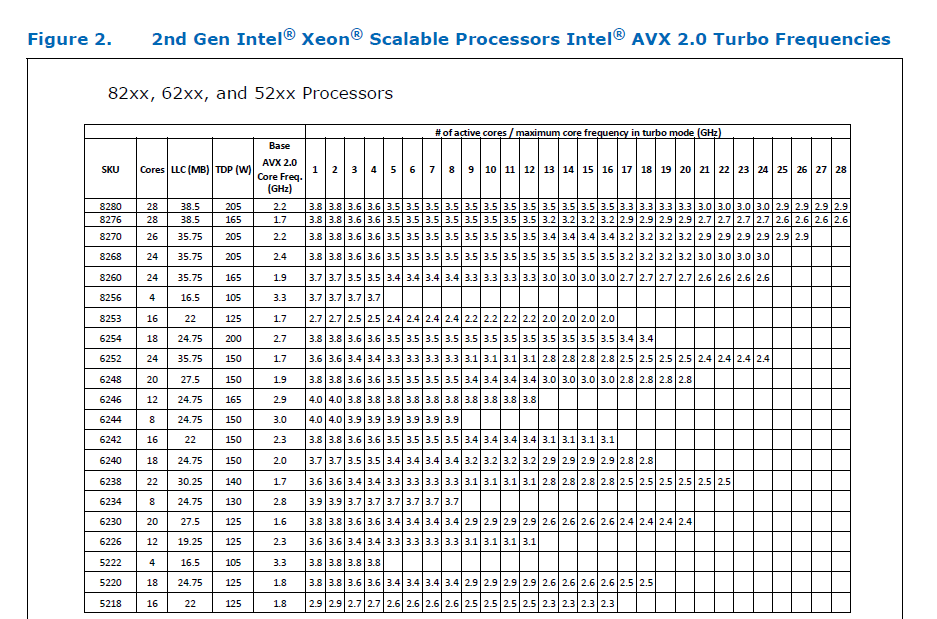

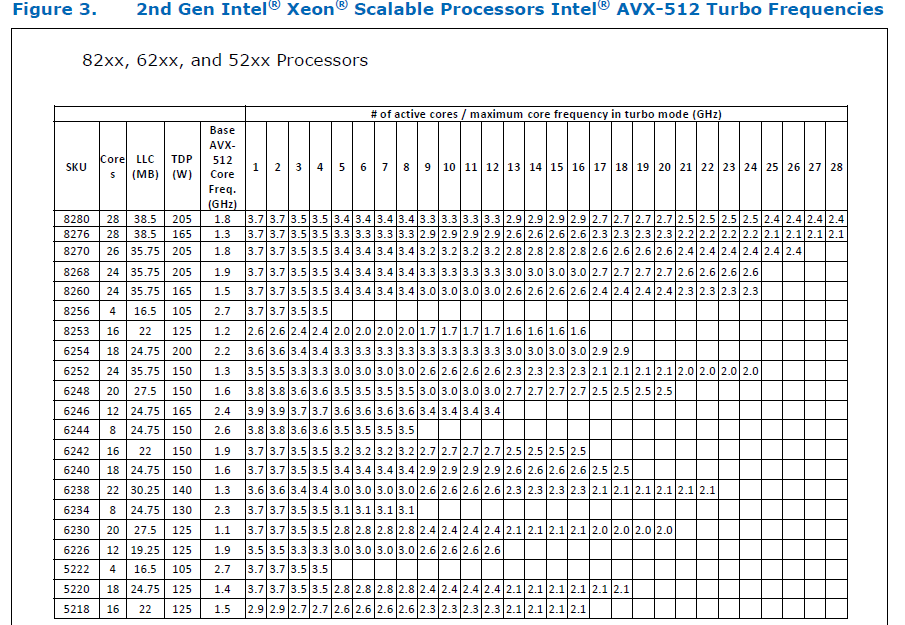

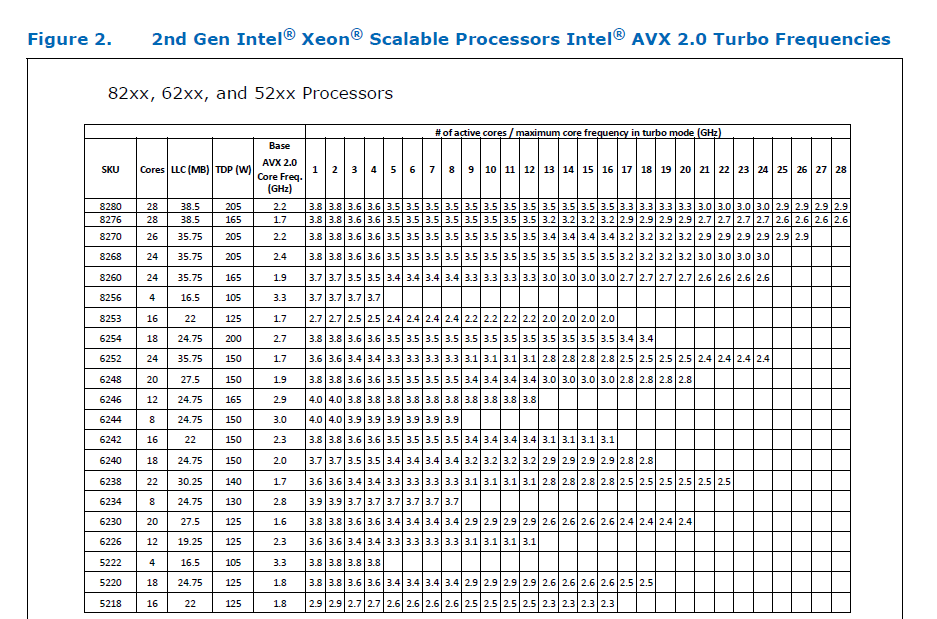

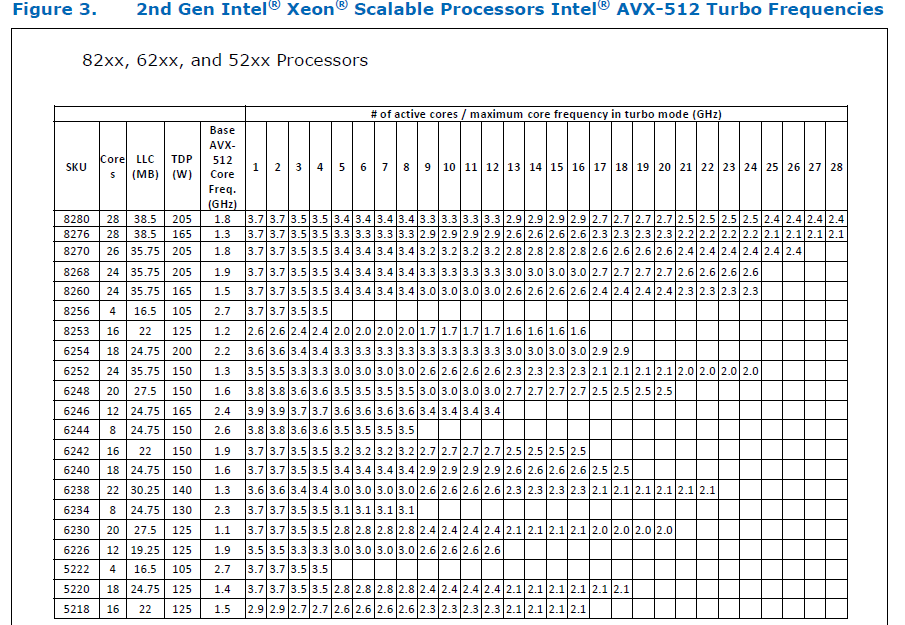

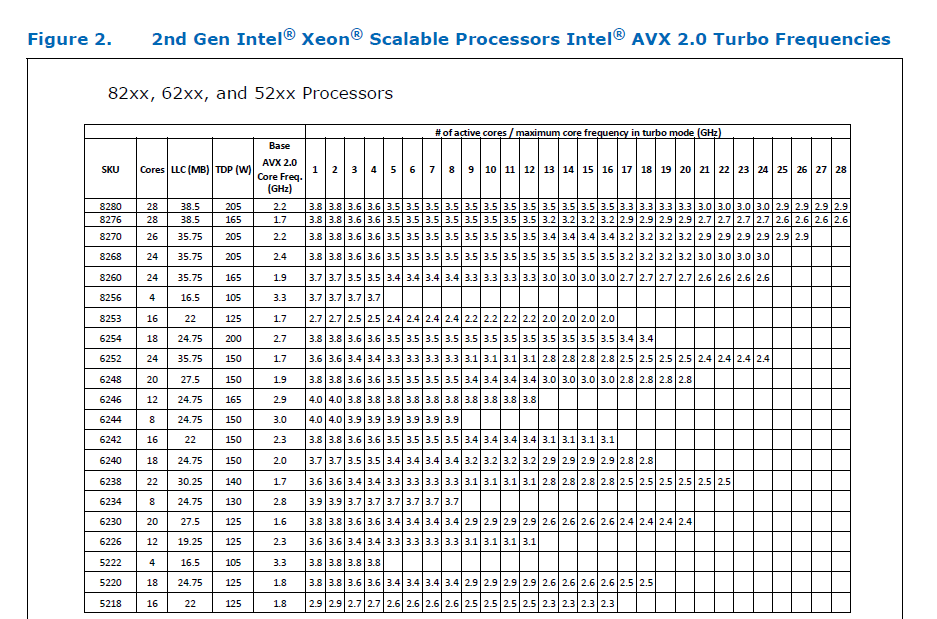

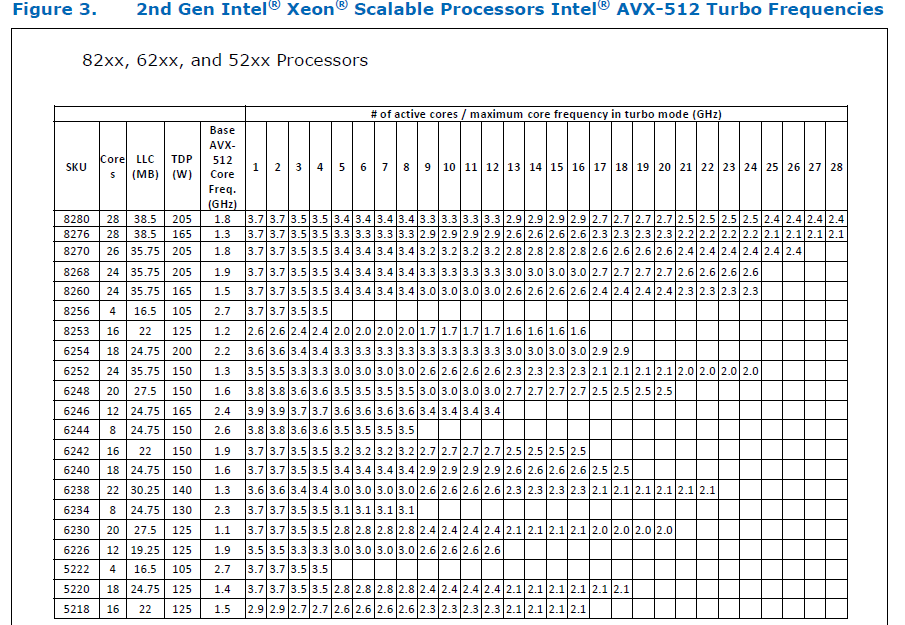

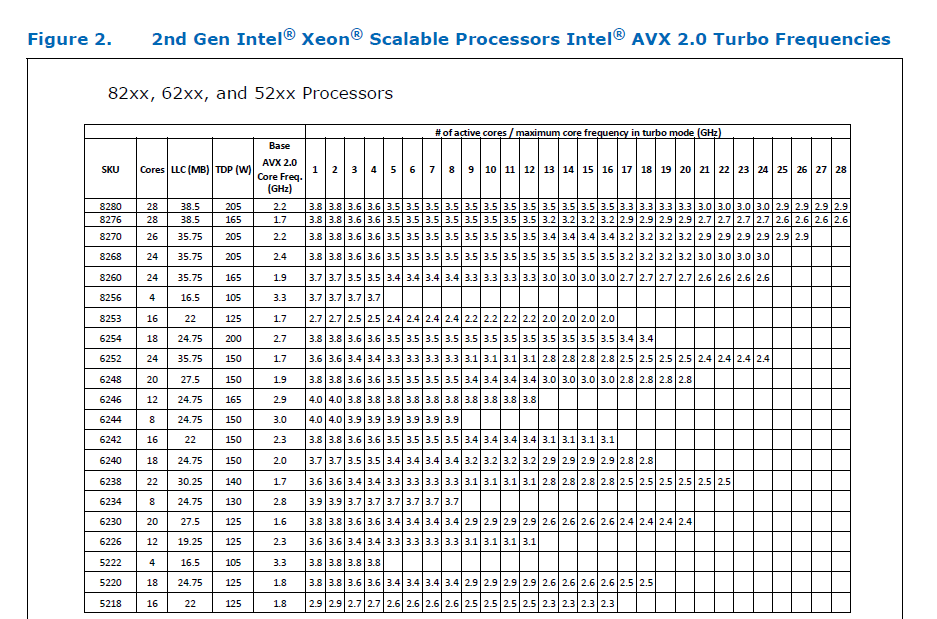

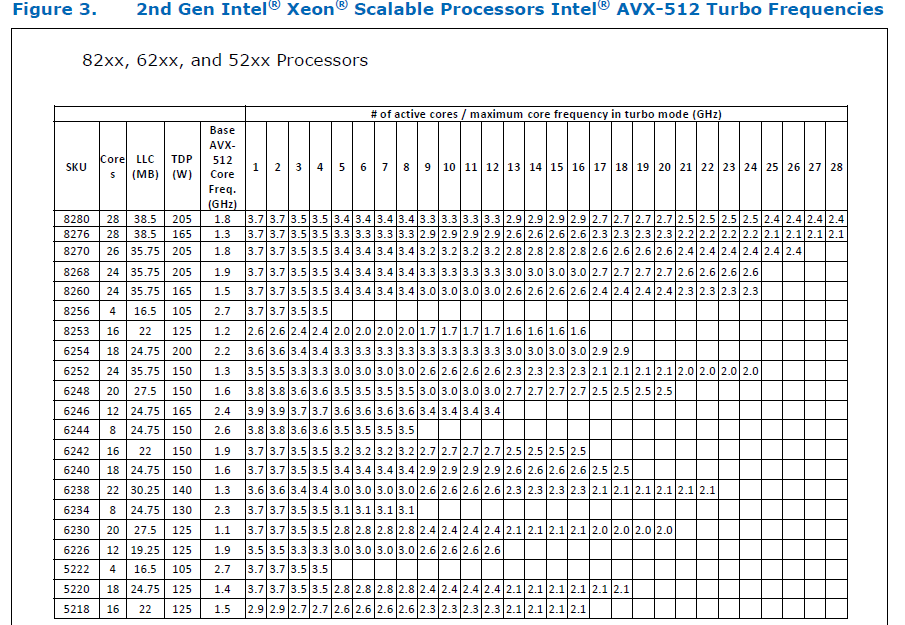

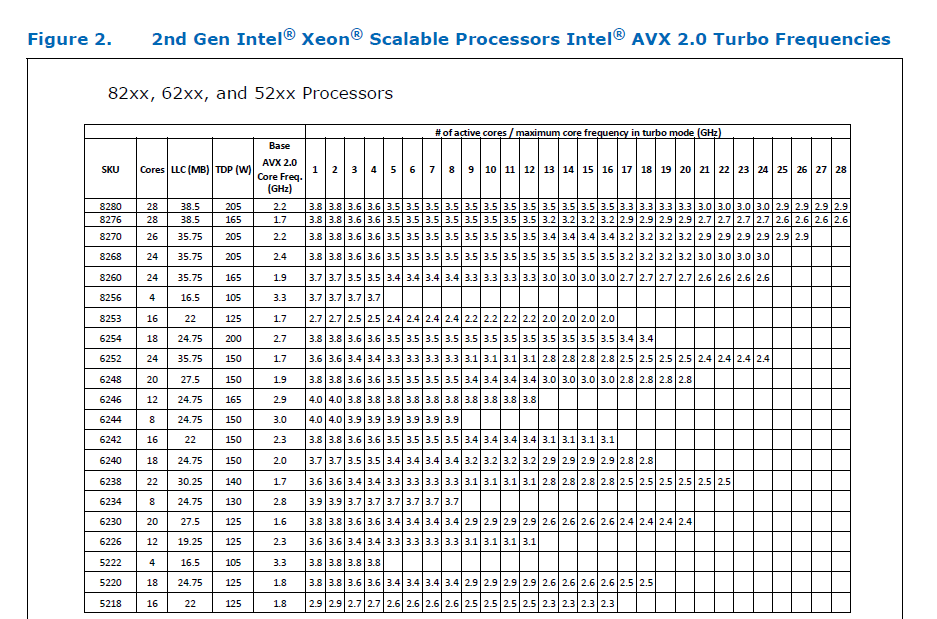

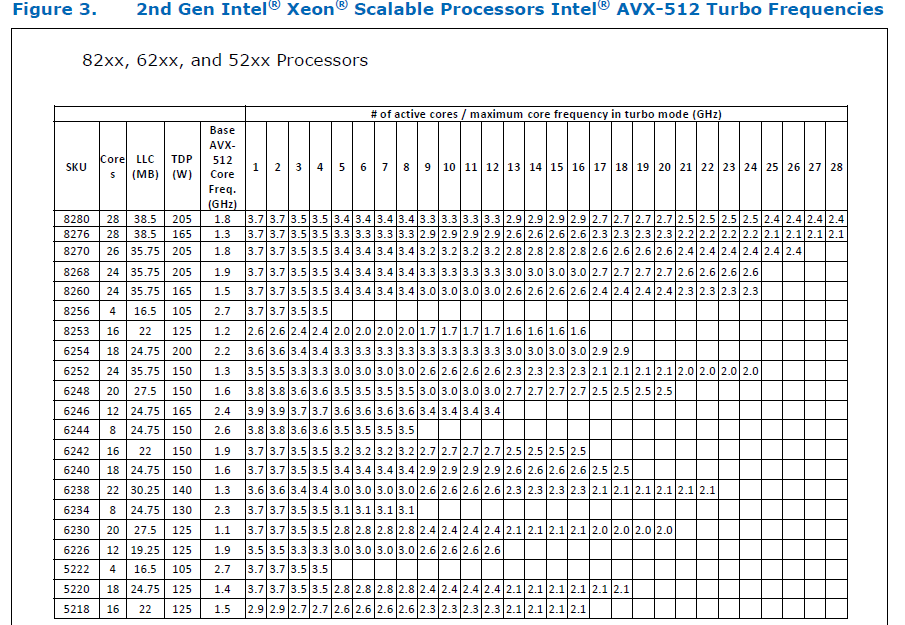

### Remaining Tasks

- [ ] Collate results of benchmarks on two Intel Xeon machines (with & without CUDA, to check if CPU throttling causes issues with GPUs) and make graphs, including Roofline model plots (Intel Advisor can't make them with libgomp, only with Intel OpenMP).

### Summary

1. This draft PR produces binaries with 3 types of ATen kernels: default, AVX2 and AVX512. Using the environment variable `ATEN_AVX512_256=TRUE` also results in 3 types of kernels, but the compiler can use 32 ymm registers for AVX2, instead of the default 16. ATen kernels for `CPU_CAPABILITY_AVX` have been removed.
2. `nansum` is not using the AVX512 kernel right now, as it has poorer accuracy for Float16 than AVX2 or DEFAULT, whose respective accuracies aren't very good either (#59415). It was more convenient to disable AVX512 dispatch for all dtypes of `nansum` for now.
3. On Windows, ATen Quantized AVX512 kernels are not being used, as quantization tests are flaky. If `--continue-through-failure` is used, then `test_compare_model_outputs_functional_static` fails. But if this test is skipped, `test_compare_model_outputs_conv_static` fails. If both these tests are skipped, then a third one fails. These are hard to debug right now due to not having access to a Windows machine with AVX512 support, so it was more convenient to disable AVX512 dispatch of all ATen Quantized kernels on Windows for now.
4. One test is currently being skipped: [`test_lstm` in `quantization.bc`](#59098). It fails only on Cascade Lake machines, irrespective of the `ATEN_CPU_CAPABILITY` used, because FBGEMM uses `AVX512_VNNI` on machines that support it. The value of `reduce_range` should be `False` on such machines.

The list of the changes is at https://gist.github.com/imaginary-person/4b4fda660534f0493bf9573d511a878d.

Credits to @ezyang for proposing `AVX512_256`: these kernels use AVX2 intrinsics but benefit from 32 registers, instead of the 16 ymm registers that AVX2 uses.
Credits to @limo1996 for the initial proposal, and for optimizing `hsub_pd` & `hadd_pd`, which didn't have direct AVX512 equivalents, and are being used in some kernels. He also refactored `vec/functional.h` to remove duplicated code.
Credits to @quickwritereader for helping fix 4 failing complex multiplication & division tests.

### Testing

1. `vec_test_all_types` was modified to test basic AVX512 support, as tests already existed for AVX2. Only one test had to be modified, as it was hardcoded for AVX2.
2. `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` & `pytorch_linux_bionic_py3_8_gcc9_coverage_test2` are now using `linux.2xlarge` instances, as they support AVX512. They were used for testing AVX512 kernels, as AVX512 kernels are being used by default in both of the CI checks. Windows CI checks had already been using machines with AVX512 support.

### Would the downclocking caused by AVX512 pose an issue?

I think it's important to note that AVX2 causes downclocking as well, and the additional downclocking caused by AVX512 may not hamper performance on some Skylake machines & beyond, because the vector size is doubled. I think that [this post with verifiable references is a must-read](https://community.intel.com/t5/Software-Tuning-Performance/Unexpected-power-vs-cores-profile-for-MKL-kernels-on-modern-Xeon/m-p/1133869/highlight/true#M6450). Also, AVX512 would _probably not_ hurt performance on a high-end machine, [but measurements are recommended](https://lemire.me/blog/2018/09/07/avx-512-when-and-how-to-use-these-new-instructions/). In case it does, `ATEN_AVX512_256=TRUE` can be used for building PyTorch, as AVX2 can then use 32 ymm registers instead of the default 16. [FBGEMM uses `AVX512_256` only on Xeon D processors](pytorch/FBGEMM#209), which are said to have poor AVX512 performance.

This [official data](https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf) is for the Intel Skylake family, and the first link helps understand its significance. Cascade Lake & Ice Lake SP Xeon processors are said to be even better when it comes to AVX512 performance.

Here is the corresponding data for [Cascade Lake](https://cdrdv2.intel.com/v1/dl/getContent/338848).

The corresponding data isn't publicly available for Intel Xeon SP 3rd gen (Ice Lake SP), but [Intel mentioned that the 3rd gen has frequency improvements pertaining to AVX512](https://newsroom.intel.com/wp-content/uploads/sites/11/2021/04/3rd-Gen-Intel-Xeon-Scalable-Platform-Press-Presentation-281884.pdf). Ice Lake SP machines also have 48 KB L1D caches, so that's another reason for AVX512 performance to be better on them.

### Is PyTorch always faster with AVX512?

No, but then PyTorch is not always faster with AVX2 either. Please refer to #60202. The benefit from vectorization is apparent with small tensors that fit in caches, or in kernels that are more compute heavy. For instance, AVX512 or AVX2 would yield no benefit for adding two 64 MB tensors, but adding two 1 MB tensors would do well with AVX2, and even more so with AVX512.

It seems that memory-bound computations, such as adding two 64 MB tensors, can be slow with vectorization (depending upon the number of threads used), as the effects of downclocking can then be observed.

Original pull request: #56992

Differential Revision: [D29266289](https://our.internmc.facebook.com/intern/diff/D29266289/)

**NOTE FOR REVIEWERS**: This PR has internal Facebook specific changes or comments, please review them on [Phabricator](https://our.internmc.facebook.com/intern/diff/D29266289/)!

[ghstack-poisoned]
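The remark in the summary that `reduce_range` should be `False` on `AVX512_VNNI` machines can be illustrated with plain integer arithmetic. The sketch below rests on assumptions that are not stated in this thread: that the non-VNNI int8 path sums pairs of unsigned-8-bit × signed-8-bit products into a saturating 16-bit intermediate (which is what the x86 `vpmaddubsw` instruction does), and that `reduce_range=True` restricts activations to 7 bits (0 to 127). The helper names are hypothetical, and this models the arithmetic only, not FBGEMM itself.

```python
INT16_MIN, INT16_MAX = -(2 ** 15), 2 ** 15 - 1


def saturate_int16(value: int) -> int:
    """Clamp an exact integer result to the signed 16-bit range."""
    return max(INT16_MIN, min(INT16_MAX, value))


def pairwise_u8s8_sum(a0: int, b0: int, a1: int, b1: int, saturating: bool = True) -> int:
    """Sum of two (unsigned 8-bit activation) x (signed 8-bit weight) products.

    With saturating=True the sum is clamped to int16, modelling a 16-bit
    intermediate; with saturating=False the exact value is returned,
    modelling accumulation straight into a wider register.
    """
    exact = a0 * b0 + a1 * b1
    return saturate_int16(exact) if saturating else exact


# Full 8-bit activations (0..255): the exact sum does not fit into int16.
print("full range, 16-bit intermediate:", pairwise_u8s8_sum(255, 127, 255, 127))
print("full range, wide accumulation:  ", pairwise_u8s8_sum(255, 127, 255, 127, saturating=False))

# 7-bit activations (0..127): the worst cases stay inside int16.
print("reduced range, largest sum:     ", pairwise_u8s8_sum(127, 127, 127, 127))
print("reduced range, smallest sum:    ", pairwise_u8s8_sum(127, -128, 127, -128))
```

Under these assumptions, a model quantized with the reduced range and one quantized with the full range can produce different numbers depending on which instruction path the hardware takes, which is one plausible way a serialized reference output could stop matching on a different CPU.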


ghstack-source-id: 97ce82d770c53ee43143945bcf123ad6f6f0de6d

Pull Request resolved: #61903

Reviewed By: soulitzer

Differential Revision: D29266289

Pulled By: ezyang

fbshipit-source-id: 2d5e8d1c2307252f22423bbc14f136c67c3e6184

|

@imaginary-person Looks like it's been 2 years. Is this still an issue with the latest master? If not can we close this? |

|

Closing issue. Thanks! |

TLDR

This issue has been established to be hardware-dependent, as it can be reproduced with the current master branch's source-code as well. Please refer to #59100. Debugging this issue can potentially uncover some latent issues with the Intel Cascade Lake series (for example, gcc 9.3 or the hypervisor not supporting it well, as per Occam's razor?).

🐛 Bug

Hello, in #56992, I'm trying to add AVX512 support to ATen.

`test_lstm` in `quantization.bc.test_backward_compatibility.TestSerialization` is failing on CI (please read Additional Context) for AVX512 kernels in the CI check `pytorch_linux_bionic_py3_8_gcc9_coverage_test1`.

However, if I clone the repo locally and build, I don't encounter this failure on machines with AVX512 support.

Even if I install the CI build artifact locally, I still don't encounter this failure. Please help me figure out the source of this error on CI. Thanks!

Reproduction steps (require a machine with AVX512 support)

1. `wget https://13766736-65600975-gh.circle-artifacts.com/0/home/circleci/project/dist/torch-1.9.0a0%2Bgitd895ec3-cp38-cp38-linux_x86_64.whl`
2. `pip install torch-1.9.0a0+gitd895ec3-cp38-cp38-linux_x86_64.whl`

OR

Clone the repo of the PR locally,

1. `git clone --recursive https://github.com/imaginary-person/pytorch-1 -b only_vec .`
2. `USE_MKL=1 USE_MKLDNN=1 USE_LLVM=/usr/lib/llvm-10 python setup.py develop`
3. `python test/test_quantization.py -v -k test_lstm`

Two tests will run with this keyword.

The test failing on CI is `test_lstm` of `quantization.bc.test_backward_compatibility.TestSerialization`.

The test fails with error,

Expected behavior

The test should pass on a machine with AVX512 support, as it does locally.

Failing Environment

This issue has been established to be hardware-dependent, as it can be reproduced with the current master branch's source-code as well, which doesn't yet have AVX512-vectorized ATen kernels. Please refer to #59100.
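Since the failure depends on the hardware, it may help to record which AVX512-related feature flags a given machine reports. The following is a minimal sketch, assuming a Linux host that exposes `/proc/cpuinfo` with a `flags` line and the usual Linux flag names `avx2`, `avx512f` and `avx512_vnni`. The function names are hypothetical.

```python
from pathlib import Path
from typing import Dict, Set


def parse_cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Return the feature flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def avx512_summary(flags: Set[str]) -> Dict[str, bool]:
    """Report whether the flags of interest for this issue are present."""
    return {name: name in flags for name in ("avx2", "avx512f", "avx512_vnni")}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    # Fall back to an empty string on systems without /proc/cpuinfo.
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    print(avx512_summary(parse_cpu_flags(text)))
```

Comparing this summary between a machine where the test passes and one where it fails would show whether `avx512_vnni` is the distinguishing flag.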

Environments in which the test passes (locally, on a cloud platform with full-metal hardware)

I tried to reproduce the issue on two different machines with Ubuntu 18.04 & Ubuntu 20.04, respectively.

Before testing, I uninstalled previous PyTorch versions.

While installing the CI build artifact, the installation took a few seconds (which is normal, as it suggests that the build didn't have to take place again).

After installing the CI `pytorch_linux_bionic_py3_8_gcc9_coverage_build` artifact,

OS: Ubuntu 18.04.1 LTS (x86_64)

GCC version: (Ubuntu 9.3.0-11ubuntu0~18.04.1) 9.3.0

CMake version: version 3.10.2

Libc version: glibc-2.27

Python version: 3.8 (64-bit runtime)

Python platform: Linux-4.15.0-137-generic-x86_64-with-glibc2.27

Versions of relevant libraries:

[pip3] numpy==1.20.3

[pip3] torch==1.9.0a0+gitd895ec3

Python version: 3.8 (64-bit runtime)

Python platform: Linux-5.4.0-67-generic-x86_64-with-glibc2.29

Additional context

Currently, Linux CI checks that run tests use `large` `resource_class` VMs, which, in turn, run on machines without AVX512 support. For `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` & `pytorch_linux_bionic_py3_8_gcc9_coverage_test2`, I temporarily modified the CircleCI config in that PR to use `xlarge` VMs, as they run on machines with AVX512 support, so AVX512 kernels can be tested. This can be verified from the fact that `ATEN_CPU_CAPABILITY` for these modified CI checks was `avx512` in the CI logs.

cc @jerryzh168 @jianyuh @raghuramank100 @jamesr66a @vkuzo @jgong5 @Xia-Weiwen @leslie-fang-intel
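To check whether the failure depends on the ATen dispatch level, the reproduction command from above could be run once per capability. This is a rough sketch, assuming that the build honours `ATEN_CPU_CAPABILITY` as a runtime override and accepts the values `default`, `avx2` and `avx512`. The helper names are hypothetical, and `run_all` has to be called from the root of a PyTorch checkout.

```python
import os
import subprocess
import sys
from typing import Dict, List, Mapping, Tuple

# Assumed capability names; adjust them to whatever the build accepts.
CAPABILITIES = ("default", "avx2", "avx512")

# The test invocation from the reproduction steps in this issue.
TEST_COMMAND = [sys.executable, "test/test_quantization.py", "-v", "-k", "test_lstm"]


def build_runs(base_env: Mapping[str, str]) -> List[Tuple[str, Dict[str, str]]]:
    """Return one (capability, environment) pair per capability level."""
    runs = []
    for capability in CAPABILITIES:
        env = dict(base_env)  # copy, so the caller's environment is untouched
        env["ATEN_CPU_CAPABILITY"] = capability
        runs.append((capability, env))
    return runs


def run_all(cwd: str = ".") -> Dict[str, int]:
    """Run the test once per capability and collect the exit codes."""
    results = {}
    for capability, env in build_runs(os.environ):
        completed = subprocess.run(TEST_COMMAND, env=env, cwd=cwd)
        results[capability] = completed.returncode
    return results
```

If the exit codes are the same for every capability on a given machine, the ATen kernels are unlikely to be the cause, which would be consistent with the failure following the hardware rather than the dispatch level.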
