
Sparse voxel for pointset alongside features #182

Comments


Given that you know which points are inside your voxels (e.g., based on a given voxel index for each point), you can use `scatter_mean(x, index, dim=0, dim_size=num_voxels)`. Hope this helps!
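To make the idea concrete, here is a small sketch with a hand-written `index`, where each entry is the voxel a point falls into. It computes the same per-voxel mean that `scatter_mean(x, index, dim=0, dim_size=num_voxels)` should return. It uses plain PyTorch `index_add_` instead of `torch_scatter` so it runs standalone, and the helper name `voxel_mean` is hypothetical.

```python
import torch

def voxel_mean(x: torch.Tensor, index: torch.Tensor, num_voxels: int) -> torch.Tensor:
    """Average the rows of x that share the same voxel index.

    x:     (N, F) per-point features
    index: (N,) voxel ID of each point, in [0, num_voxels)
    Returns (num_voxels, F); voxels that contain no point stay zero.
    """
    sums = torch.zeros(num_voxels, x.size(1), dtype=x.dtype, device=x.device)
    sums.index_add_(0, index, x)  # sum the features of all points in each voxel
    counts = torch.zeros(num_voxels, dtype=x.dtype, device=x.device)
    counts.index_add_(0, index, torch.ones_like(index, dtype=x.dtype))  # points per voxel
    return sums / counts.clamp(min=1).unsqueeze(1)  # clamp avoids 0 / 0 for empty voxels

x = torch.tensor([[1.0], [3.0], [10.0], [4.0]])  # 4 points, 1 feature each
index = torch.tensor([0, 0, 2, 0])               # points 0, 1 and 3 share voxel 0
out = voxel_mean(x, index, num_voxels=3)
print(out)
```

Voxel 0 ends up holding (1 + 3 + 4) / 3, voxel 1 stays zero because no point maps to it, and voxel 2 holds 10.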


Hello @rusty1s, thanks for your very prompt response. Sorry, I didn't follow what you refer to as `index` in your example. Is this the following?
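In this setting, `index` is just the voxel ID of each point. One common way to obtain it, assumed here rather than taken from the reply above, is to quantize the coordinates by a voxel size and flatten the resulting (i, j, k) cell into a single integer. The names `voxel_index` and `voxel_size` are hypothetical.

```python
import torch

def voxel_index(pos: torch.Tensor, voxel_size: float):
    """Map each 3D point to the flat ID of the voxel that contains it.

    pos: (N, 3) point coordinates.
    Returns (index, grid_shape): index is (N,) int64 in [0, nx * ny * nz).
    """
    origin = pos.min(dim=0).values                          # lower corner of the grid
    cell = torch.floor((pos - origin) / voxel_size).long()  # (N, 3) integer cell coords
    grid_shape = cell.max(dim=0).values + 1                 # number of cells per axis
    nx, ny, nz = grid_shape.tolist()
    # Row-major flattening: id = (i * ny + j) * nz + k
    index = (cell[:, 0] * ny + cell[:, 1]) * nz + cell[:, 2]
    return index, (nx, ny, nz)

pos = torch.tensor([[0.0, 0.0, 0.0],
                    [0.4, 0.1, 0.2],
                    [1.2, 0.0, 0.0]])
index, shape = voxel_index(pos, voxel_size=0.5)
print(index, shape)
```

The first two points fall into the same 0.5-sized cell and share ID 0, while the third lands two cells further along x. The resulting `index` (with `num_voxels = nx * ny * nz`) is what `scatter_mean` expects.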


@rusty1s I think I have a decent understanding of your point, but I'm facing an error when using … The traceback: … I want the scattering (as a mean) to take place over a 3D voxel whose indices are in …


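The issue is that your current …

```python
import torch
from torch_scatter import scatter_mean
from torch_cluster import grid_cluster

sample = torch.randn(100, 3)  # 100 random points in 3D

# Assign each point the ID of the 0.5 x 0.5 x 0.5 voxel it falls into.
cluster = grid_cluster(sample, size=torch.tensor([0.5, 0.5, 0.5]))

# Average the positions of all points that share a voxel. The IDs index the
# full grid, so rows belonging to empty voxels are left as zeros.
clustered_pos = scatter_mean(sample, cluster, dim=0)
```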
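Because `grid_cluster` numbers voxels over the whole grid, the IDs are generally not consecutive, and `clustered_pos` then contains zero rows for voxels without points. A possible refinement, sketched below under that assumption, relabels the occupied voxels to 0..K-1 with `torch.unique(..., return_inverse=True)` and averages the point features alongside the positions. A hand-written `cluster` tensor stands in for the `grid_cluster` output, plain `index_add_` replaces `scatter_mean` so the sketch runs standalone, and `compact_voxel_means` is a hypothetical name.

```python
import torch

def compact_voxel_means(pos, feat, cluster):
    """Relabel voxel IDs to 0..K-1 and average positions and features per voxel."""
    # voxel_ids: the K occupied voxel IDs (sorted); inv: (N,) slot of each point's voxel
    voxel_ids, inv = torch.unique(cluster, return_inverse=True)
    k = voxel_ids.numel()
    counts = torch.bincount(inv, minlength=k).to(pos.dtype).unsqueeze(1)  # (K, 1), all >= 1
    mean_pos = torch.zeros(k, pos.size(1), dtype=pos.dtype).index_add_(0, inv, pos) / counts
    mean_feat = torch.zeros(k, feat.size(1), dtype=feat.dtype).index_add_(0, inv, feat) / counts
    return voxel_ids, mean_pos, mean_feat

pos = torch.tensor([[0.1, 0.1, 0.1], [0.2, 0.2, 0.2], [2.0, 2.0, 2.0]])
feat = torch.tensor([[1.0], [3.0], [5.0]])
cluster = torch.tensor([7, 7, 42])  # stand-in for grid_cluster(pos, size) output
voxel_ids, mean_pos, mean_feat = compact_voxel_means(pos, feat, cluster)
print(voxel_ids, mean_feat)
```

Only the two occupied voxels (IDs 7 and 42) appear in the output, and `voxel_ids` keeps the mapping back to the original grid IDs.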


@rusty1s Thank you very much! I have a follow-up question. In addition to … In this example, …


Mh, one way I can think of is to use a hierarchical version based on larger grid sizes, and then only replace the coarse voxel information with more fine-grained information where there are points in the finer voxels.
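One reading of this suggestion, sketched below, builds per-voxel means at a coarse and a fine resolution and, at query time, takes the finest level whose voxel actually contains points, falling back to the coarser level otherwise. Dense grids are used for simplicity, which may be too memory-hungry at very fine resolutions. The functions `grid_means` and `query`, the shared `origin`, and the grid shapes are all hypothetical choices, not an API from the thread.

```python
import torch

def grid_means(pos, feat, origin, voxel_size, shape):
    """Dense (X, Y, Z, F) grid of per-voxel feature means, plus an occupancy mask."""
    cell = torch.floor((pos - origin) / voxel_size).long()
    cell = torch.minimum(cell.clamp(min=0), torch.tensor(shape) - 1)  # clip points to the grid
    flat = (cell[:, 0] * shape[1] + cell[:, 1]) * shape[2] + cell[:, 2]
    n = shape[0] * shape[1] * shape[2]
    sums = torch.zeros(n, feat.size(1), dtype=feat.dtype).index_add_(0, flat, feat)
    counts = torch.bincount(flat, minlength=n)
    means = sums / counts.clamp(min=1).unsqueeze(1).to(feat.dtype)
    return means.view(*shape, -1), (counts > 0).view(*shape)

def query(q, origin, levels):
    """levels: list of (voxel_size, means, occupied), ordered coarse to fine.
    Returns, for each query point, the mean of the finest occupied voxel containing it."""
    out = None
    for voxel_size, means, occupied in levels:
        shape = torch.tensor(occupied.shape)
        cell = torch.floor((q - origin) / voxel_size).long()
        cell = torch.minimum(cell.clamp(min=0), shape - 1)
        i, j, k = cell.unbind(dim=1)
        vals, occ = means[i, j, k], occupied[i, j, k]  # (M, F), (M,)
        # The coarsest level fills every query; finer levels override only where occupied.
        out = vals if out is None else torch.where(occ.unsqueeze(1), vals, out)
    return out

origin = torch.zeros(3)
pos = torch.tensor([[0.1, 0.1, 0.1], [0.2, 0.2, 0.2], [0.9, 0.9, 0.9]])
feat = torch.tensor([[1.0], [3.0], [8.0]])
levels = [(s, *grid_means(pos, feat, origin, s, shp))
          for s, shp in [(1.0, (2, 2, 2)), (0.5, (4, 4, 4))]]
q = torch.tensor([[0.15, 0.15, 0.15], [0.6, 0.6, 0.6], [0.7, 0.1, 0.1]])
result = query(q, origin, levels)
print(result)
```

The first two queries hit occupied fine voxels and get their local means; the third falls into an empty fine voxel, so it keeps the coarse voxel's mean of all three points.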


This issue had no activity for 6 months. It will be closed in 2 weeks unless there is some new activity. Is this issue already resolved?

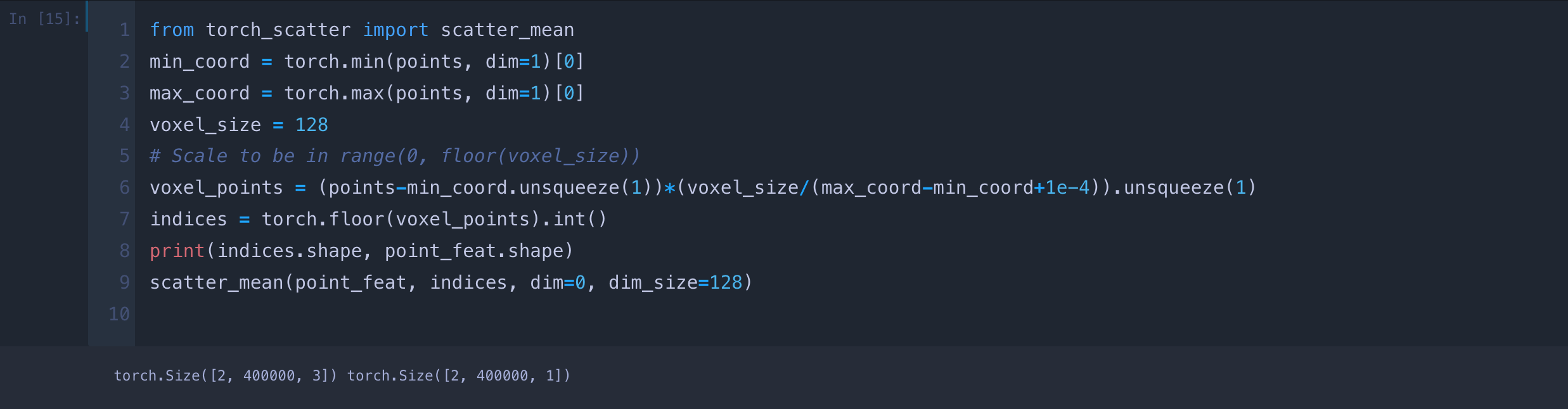

Hello.

I have (B, N, 3) point sets and (B, N, 1) pointwise features that I wish to voxelize. The goal of this voxelization is to be able to query an (approximate) feature at any given point from the voxels with minimal computation time. For example, given a new point whose feature I wish to compute, a voxel representation lets me interpolate from the grid nodes neighbouring that point, which is easy to compute knowing the edge information. Alternatively, I can use KNN, but when N is large (typically 1 million), this operation is quite slow.
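Once features sit on a regular grid, the interpolation step described above is a fixed-cost lookup of the 8 surrounding nodes, independent of N. The sketch below is a generic trilinear interpolation, assuming features are stored at node positions `origin + (i, j, k) * voxel_size` in a dense (X, Y, Z, F) tensor with at least 2 nodes per axis. How the grid gets filled is a separate question, and `trilinear_query` is a hypothetical name.

```python
import torch

def trilinear_query(grid, origin, voxel_size, q):
    """Trilinearly interpolate per-node features at arbitrary query points.

    grid: (X, Y, Z, F) features stored at nodes origin + (i, j, k) * voxel_size
    q:    (M, 3) query coordinates
    Returns (M, F).
    """
    shape = torch.tensor(grid.shape[:3])
    u = (q - origin) / voxel_size                                      # continuous grid coords
    i0 = torch.minimum(torch.floor(u).long().clamp(min=0), shape - 2)  # lower corner, in range
    t = (u - i0).clamp(0.0, 1.0)                                       # fractional offsets
    out = torch.zeros(q.size(0), grid.size(3), dtype=grid.dtype)
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                # Corner weight = product over axes of t (upper node) or 1 - t (lower node).
                w = ((t[:, 0] if dx else 1 - t[:, 0])
                     * (t[:, 1] if dy else 1 - t[:, 1])
                     * (t[:, 2] if dz else 1 - t[:, 2]))
                out += w.unsqueeze(1) * grid[i0[:, 0] + dx, i0[:, 1] + dy, i0[:, 2] + dz]
    return out

# Nodes of a 3 x 3 x 3 grid with spacing 0.5 storing f(x, y, z) = x + 2y + 3z.
idx = torch.arange(3, dtype=torch.float32)
ii, jj, kk = torch.meshgrid(idx, idx, idx, indexing="ij")
grid = (0.5 * (ii + 2 * jj + 3 * kk)).unsqueeze(-1)
q = torch.tensor([[0.3, 0.7, 0.2]])
val = trilinear_query(grid, torch.zeros(3), 0.5, q)
print(val)
```

Trilinear interpolation reproduces linear functions exactly, so the query should return 0.3 + 2(0.7) + 3(0.2) = 2.3. For batched (B, ...) data, `torch.nn.functional.grid_sample` on a 5-D input with `mode="bilinear"` performs the same trilinear interpolation in one call, but it expects coordinates normalized to [-1, 1] in (x, y, z) to (W, H, D) order, and `align_corners=True` to match a node-centred grid like this one.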

I can construct my voxels from the point set using `torch.histogram`, but what is a better choice for filling those voxels with my features? I tried to use `torch_sparse.coalesce`, but when I query a known location, the features do not match. Can someone please help me out? Thank you.
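One plausible cause of the mismatch: coalescing merges entries that share a voxel by reducing their values, and for `torch.sparse_coo_tensor(...).coalesce()` that reduction is a sum. `torch_sparse.coalesce` likewise takes an `op` argument (default `"add"`), so a voxel holding several points would return their sum rather than their mean. The sketch below, which assumes integer voxel coordinates are already known for each point, coalesces the features and a per-point count separately and divides them to get a sparse per-voxel mean. `sparse_voxel_mean` is a hypothetical name; for the (B, N, ...) case, one option is to prepend the batch index as an extra sparse dimension.

```python
import torch

def sparse_voxel_mean(cell, feat, grid_shape):
    """Sparse (X, Y, Z, F) tensor holding the mean feature of each occupied voxel.

    cell: (N, 3) int64 voxel coordinates of each point, feat: (N, F) features.
    """
    indices = cell.t()  # (3, N) COO indices
    # coalesce() merges duplicate indices by *summing* their values.
    sums = torch.sparse_coo_tensor(indices, feat, (*grid_shape, feat.size(1))).coalesce()
    counts = torch.sparse_coo_tensor(indices, torch.ones(feat.size(0)), grid_shape).coalesce()
    # Both tensors now list the same occupied voxels in the same sorted order.
    mean_vals = sums.values() / counts.values().unsqueeze(1)
    return torch.sparse_coo_tensor(sums.indices(), mean_vals, sums.shape).coalesce()

cell = torch.tensor([[0, 0, 0], [0, 0, 0], [1, 2, 0]])  # first two points share a voxel
feat = torch.tensor([[1.0], [3.0], [5.0]])
voxels = sparse_voxel_mean(cell, feat, (2, 3, 1))
dense = voxels.to_dense()  # only sensible for small grids
print(dense[0, 0, 0], dense[1, 2, 0])
```

Querying the shared voxel now returns the mean of its two points (2.0) instead of their sum (4.0).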
