This code is the testing implementation of the following work:

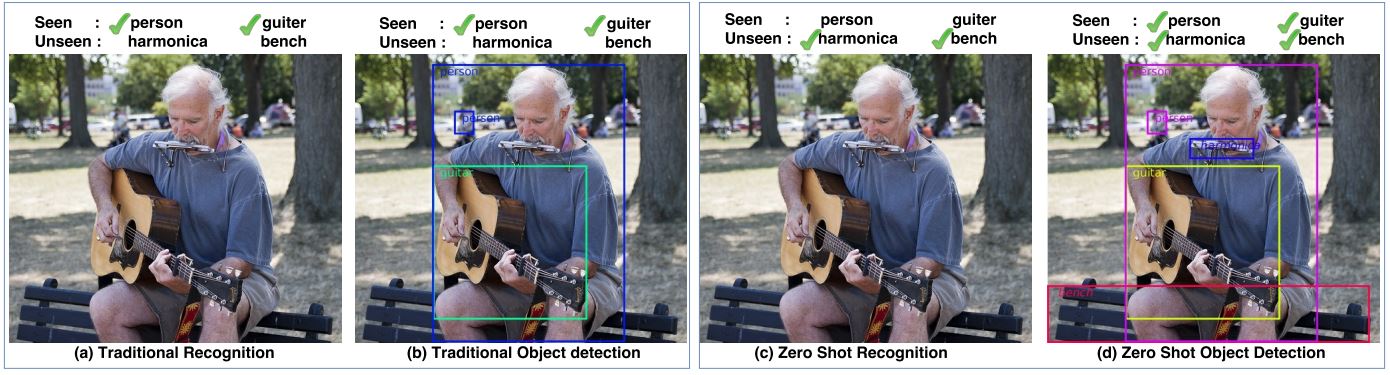

Shafin Rahman, Salman Khan, and Fatih Porikli. "Zero-Shot Object Detection: Learning to Simultaneously Recognize and Localize Novel Concepts." arXiv preprint arXiv:1803.06049 (2018). (Project Page)

- Download the pre-trained model available on the link below and place it inside the "Model" directory (Link to pre-trained model (h5 format))

- Other requirements:

- Python 2.7

- Keras 2.1.4

- OpenCV 2.4.13

- Tensorflow 1.3.0

This code has also been tested with Python 3.6, Keras 2.0.8, OpenCV 3.4.0 and on Ubuntu and Windows.

sample_input.txt: a sample input file containing test image paths

detect.py: to perform zero-shot detection task using sample_input.txt

keras_frcnn: directory containing supporting code of the model

Dataset: directory containing sample input and output

Model: directory containing pre-trained model and configuration file

ImageNet2017

cls_names.txt: list of 200 class names of ImageNet detection dataset.seen.txt: list of seen class names used in the paperunseen.txt: list of unseen class names used in the papertrain_seen_all.zip: it is a zipped version of text filetrain_seen_all.txtwhich contain training image paths and annotation used in the paper. Each line contains training image path, a bounding box co-ordinate and the ground truth class name of that bounding box. For example, Filepath,x1,y1,x2,y2,class_nameunseen_test.txt: all the image paths used for testing in the papers. Images are from training and validation set from ImageNet 2017 detection challenge. Every image contains at least one instance of unseen object.word_w2v.txt: word2vec word vectors of 200 classes + bg used in the paper. The ith column represents the 500-dimensional word vectors of the class name of ith row of cls_names.txt.word_glo.txt: GloVe word vectors of 200 classes + background (bg) used in the paper. The ith column represents the 300-dimensional word vectors of the class name of ith row ofcls_names.txt.

To run zero-shot detection on sample input kept in Dataset/Sampleinput, simply run detect.py after installing all dependencies like Keras, Tensorflow, OpenCV and placing the pre-trained model in the Model directory. This code will generate the output files for each input image to Dataset/Sampleoutput.

The resources required to reproduce results of ImageNet related experiments are kept in the directory ImageNet2017. All the images are from ILSVRC2017_DET.tar.gz which can be obtained from ImageNet detection challenge 2017 website. For both training and testing of this paper, we have used images from /ILSVRC/Data/DET/train and /ILSVRC/Data/DET/val of the zipped arxiv ILSVRC2017_DET.tar.gz.

-

If you get the

CUDA_ERROR_OUT_OF_MEMORYin Tensorflow, place the following snippet after library loadings (the top section) indetect.pyimport tensorflow as tf config = tf.ConfigProto() config.gpu_options.allow_growth = True config.gpu_options.per_process_gpu_memory_fraction = 0.8 #Change it to suit your GPU load K.set_session(tf.Session(config=config))

-

If you want to run the sample code on CPU only, place the following snippet after library loadings (the top section) in

detect.pyimport tensorflow as tf num_cores = 2 # 2,4, or 8 config = tf.ConfigProto(intra_op_parallelism_threads=num_cores,\ inter_op_parallelism_threads=num_cores, allow_soft_placement=True,\ device_count = {'CPU' : 1, 'GPU' : 0}) K.set_session(tf.Session(config=config))

If you use this code and model for your research, please consider citing:

@article{rahman2018zeroshot,

title={Zero-Shot Object Detection: Learning to Simultaneously Recognize and Localize Novel Concepts},

author={Rahman, Shafin and Khan, Salman and Porikli, Fatih},

journal={Asian Conference on Computer Vision},

year={2018}

}

We thank Yann Henon for the following implementation of Faster-RCNN: keras-frcnn