
[MRG] Export notebook to gallery #180


I assume that in this PR you will also be fixing #141

Glad to see this going into documentation soon 💃

And this fixes #153

Regarding the dataset, should I go for the faces dataset for instance? (Probably the supervised version since we use

I just ran another

Yes. Digits could also be an option. Note that you will have to use dimensionality reduction (say t-SNE) to visualize things in 2D. Otherwise we could work with a 2D or 3D dataset, but there are not many nice things to show in such cases.
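To make the t-SNE suggestion concrete, here is a minimal sketch, assuming scikit-learn's `TSNE`, of projecting the digits data to 2D for plotting. The helper name `tsne_2d` and the 300-sample subset are illustrative assumptions, not the example's actual code.

```python
from sklearn.datasets import load_digits
from sklearn.manifold import TSNE


def tsne_2d(X, random_state=0):
    """Hypothetical helper: embed X in 2D with t-SNE for a scatter plot."""
    # init="pca" usually gives a more stable layout than a random start
    tsne = TSNE(n_components=2, init="pca", random_state=random_state)
    return tsne.fit_transform(X)


X, y = load_digits(return_X_y=True)
# Subsample to keep the sketch fast; t-SNE gets slow on larger inputs
X, y = X[:300], y[:300]
X_2d = tsne_2d(X)
print(X_2d.shape)  # (300, 2)
```

The same embedding can be computed before and after a metric learner's `transform` to compare the two pictures.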

All right, I tried to launch the example with digits, but there is a conditioning problem with SDML, so I think we'll need to wait for #162 to be merged to finish this one.

Here is a first result of plotting with the faces dataset (with the digits dataset, points are already well separated by t-SNE on the raw data): RCA failed (I still need to understand why). The improvement is not very clear, except maybe for LMNN; for LFDA, for instance, it's worse... Though it could work with appropriate tuning.

I tried the balance dataset, and reducing the dimension gives somewhat weird results (I think balance defines a grid of regularly spaced points, which makes it look like an artificial dataset). In any case, running the default version of the algorithms didn't work well either.

An option would be to add some noise dimensions (this is a bit artificial but will definitely work). Otherwise you have to look for harder datasets: if the t-SNE visualization is already very good on the original representation, it is hard to obtain clear visual improvements.

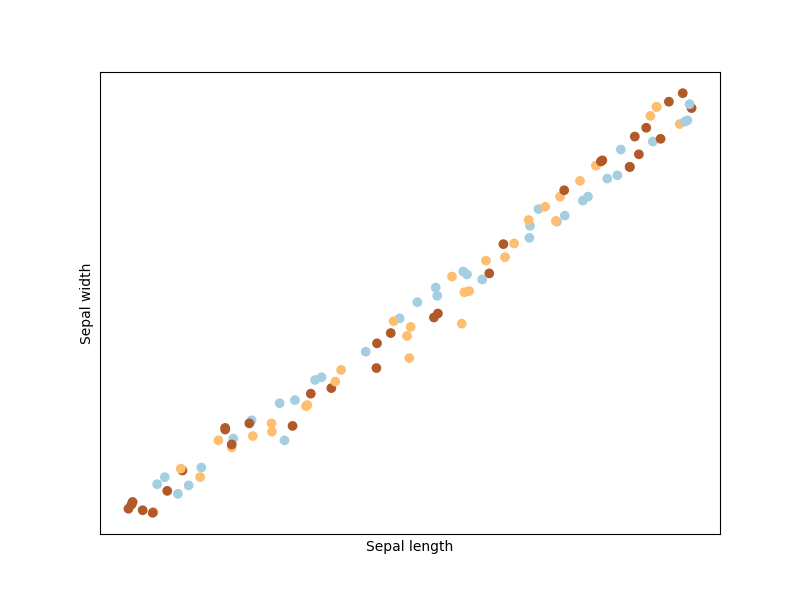

In the end I used iris with 5 columns of noise added. The last commit contains a version of the example that I think is mergeable; let me know what you think.
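A minimal sketch of the iris-plus-noise setup, assuming NumPy and scikit-learn's `load_iris`. The noise scale (the global standard deviation of the iris features) and the seed are assumptions, since the comment only says that 5 noise columns were added.

```python
import numpy as np
from sklearn.datasets import load_iris

rng = np.random.RandomState(42)
X, y = load_iris(return_X_y=True)

# Append 5 columns of Gaussian noise: they carry no class information
# but still contribute to Euclidean distances between points.
noise = rng.randn(X.shape[0], 5) * X.std()
X_noisy = np.hstack([X, noise])
print(X_noisy.shape)  # (150, 9)
```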

Note that there is still

I haven't read all the text yet, but I agree this is a good approach and a reasonable example to use.

I think it looks pretty good. As discussed, it would be great to use make_classification from sklearn. It natively supports noisy dimensions, but also several clusters per class (which could be nice to illustrate the difference between methods based on local constraints like LMNN and those based on global ones like ITML), and many other options (class separation, label noise, etc.).
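A sketch of the kind of `make_classification` call suggested here; the parameter values are illustrative assumptions, not the ones used in the final example. `make_classification` requires `n_classes * n_clusters_per_class <= 2 ** n_informative`, so 3 classes with 2 clusters each need at least 3 informative features.

```python
import numpy as np
from sklearn.datasets import make_classification

X, y = make_classification(
    n_samples=300,
    n_features=10,
    n_informative=3,         # only 3 directions carry class information
    n_redundant=0,
    n_repeated=0,            # so the other 7 features are pure noise
    n_classes=3,
    n_clusters_per_class=2,  # each class is made of 2 Gaussian blobs
    class_sep=4.0,           # distance between clusters (assumed value)
    flip_y=0.0,              # no label noise
    random_state=0,
)
print(X.shape, np.unique(y))
```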

I just pushed an example that uses @bellet I also wrote something about the fact that some algorithms don't try to cluster all points from the same class into a single cluster, while others implicitly do, which can be seen quite clearly in these examples (since every class has a distribution with 2 clusters: If I understood correctly, the algorithms that don't cluster similar points into a single cluster are
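The local/global distinction can be made concrete with LMNN's target neighbours: each point is only pulled towards its k nearest same-class neighbours in the input space, so a class made of two separate blobs can stay two blobs. A minimal NumPy sketch; `target_neighbors` is a hypothetical helper, not metric-learn's internal function.

```python
import numpy as np


def target_neighbors(X, y, k=3):
    """Indices of the k nearest same-class neighbours of each point under
    the Euclidean distance. Assumes every class has more than k points."""
    # Pairwise squared Euclidean distances, shape (n, n)
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)           # a point is not its own neighbour
    d2[y[:, None] != y[None, :]] = np.inf  # ignore points of other classes
    return np.argsort(d2, axis=1)[:, :k]


# One class made of two far-apart blobs: {0, 0.1} and {10, 10.1}
X = np.array([[0.0], [0.1], [10.0], [10.1]])
y = np.array([0, 0, 0, 0])
print(target_neighbors(X, y, k=1).ravel())  # [1 0 3 2]
```

Each point is only tied to a neighbour in its own blob, so nothing forces the two blobs together, unlike a global constraint requiring all same-class pairs to be close.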

If these changes are fine I think we are good to merge.

I just had a quick look and it looks good! I have a few nitpick suggestions on the wording and presentation; I will write a review for that asap.

Some improvements.

Maybe we should add the missing algorithms? (e.g. MMC, MLKR)

examples/plot_metric_examples.py

    visualisation which can help understand which algorithm might be best
    suited for you.

    Of course, depending on the data set and the constraints your results

I would remove this paragraph


Agreed, done

examples/plot_metric_examples.py

    ~~~~~~~~~~~~~~~~~~~~~~

    This is a small walkthrough which illustrates all the Metric Learning
    algorithms implemented in metric-learn, and also does a quick

maybe rather "with some visualizations to provide intuitions into what they are designed to achieve".


Agreed, done

examples/plot_metric_examples.py

    ~~~~~~~~~~~~~~~~~~~~~~

    This is a small walkthrough which illustrates all the Metric Learning
    algorithms implemented in metric-learn, and also does a quick

also I would mention that this is done on synthetic data


Agreed, done

examples/plot_metric_examples.py

    """

    # License: BSD 3 clause
    # Authors: Bhargav Srinivasa Desikan <bhargavvader@gmail.com>

you should add yourself as an author


Agreed, done

examples/plot_metric_examples.py

    # Loading our data-set and setting up plotting
    # ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    #
    # We will be using the IRIS data-set to illustrate the plotting. You can

this is not iris anymore. update and link to make_classification documentation


Thanks, I forgot to update this indeed


done

examples/plot_metric_examples.py

    Algorithms walkthrough
    ~~~~~~~~~~~~~~~~~~~~~~

    This is a small walkthrough which illustrates all the Metric Learning

not all actually

Agreed, I will add MMC, but I'm not sure about MLKR. I think I'll just mention it when describing NCA, since the cost function is very similar, except that MLKR uses soft nearest neighbors for regression, as I understand it.


true, MLKR is for regression. it is a good idea to mention it there as proposed

maybe change "all the metric learning algorithms" to "most metric learning algorithms"

In the end, I wrote "most metric learning algorithms" because we don't talk about Covariance (maybe we could?). And I added MLKR with a make_regression task because I found the results pretty cool too! Even if it breaks the outline a bit, since it sits between the supervised algorithms and the "constraints" section... What do you think?
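For reference, the Covariance baseline mentioned here is simple enough to sketch: as far as I know, metric-learn's `Covariance` learner sets M to the inverse of the data covariance matrix, which amounts to whitening the data. A NumPy sketch on synthetic correlated data (an assumption for illustration); `scipy.linalg.pinvh` would be safer than `np.linalg.inv` if the covariance could be singular.

```python
import numpy as np

rng = np.random.RandomState(0)
# Synthetic correlated 2D data
X = rng.randn(500, 2) @ np.array([[2.0, 0.0], [1.5, 0.5]])

# Covariance-style metric: M is the inverse of the empirical covariance
M = np.linalg.inv(np.cov(X, rowvar=False))

# Factor M = L^T L: Cholesky gives M = C C^T, so take L = C^T
L = np.linalg.cholesky(M).T
X_white = (X - X.mean(axis=0)) @ L.T  # apply x -> L x to every row

# In the transformed space the data has identity covariance
print(np.round(np.cov(X_white, rowvar=False), 6))
```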

examples/plot_metric_examples.py

    #
    # Implements an efficient sparse metric learning algorithm in high
    # dimensional space via an :math:`l_1`-penalised log-determinant
    # regularization. Compare to the most existing distance metric learning

compared


Indeed, done
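The l1-penalised log-determinant term is what makes the learned matrix sparse. As an illustration only (this is not metric-learn's SDML API), scikit-learn's `GraphicalLasso` solves a problem of the same form, maximising log det(P) - tr(S P) - alpha * ||P||_1 over precision matrices P, with the l1 norm taken over off-diagonal entries. With independent features and a large enough `alpha`, the off-diagonal entries are driven to zero, while the plain inverse of the empirical covariance stays dense. The data and `alpha=0.3` are assumptions.

```python
import numpy as np
from sklearn.covariance import GraphicalLasso

rng = np.random.RandomState(0)
X = rng.randn(500, 5)  # 5 independent features (synthetic)

# Unpenalised estimate: inverse of the empirical covariance (dense)
emp_prec = np.linalg.inv(np.cov(X, rowvar=False))

# l1-penalised log-determinant estimate (sparse)
sparse_prec = GraphicalLasso(alpha=0.3).fit(X).precision_

off_diag = ~np.eye(5, dtype=bool)
n_dense = np.count_nonzero(np.abs(emp_prec[off_diag]) > 1e-6)
n_sparse = np.count_nonzero(np.abs(sparse_prec[off_diag]) > 1e-6)
print(n_dense, n_sparse)
```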

examples/plot_metric_examples.py

    # Neighborhood Components Analysis
    # ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    #
    # NCA is an extrememly popular metric-learning algorithm, and one of the

extremely

maybe remove last part of the sentence

Thanks, done for extremely.

For the last part, is it because it could make people who don't know the algorithm think that, if it's one of the first few, it might not be cutting edge, or even outdated?

Done


just that it is maybe not such a relevant thing to mention here. you could also argue this for LMNN, and others like MMC


That's right, they are also one of the first ones indeed
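To make the NCA description concrete: NCA maximises the expected number of points correctly classified by a stochastic nearest-neighbour rule in the transformed space x -> Lx, where point i picks neighbour j with probability p_ij proportional to exp(-||Lx_i - Lx_j||^2). The NumPy sketch below only evaluates that objective for two hand-picked matrices L on synthetic data; `nca_score` is a hypothetical helper, and NCA itself would optimise L by gradient ascent.

```python
import numpy as np


def nca_score(L, X, y):
    """Expected number of correctly classified points under NCA's
    stochastic 1-NN rule in the space x -> L x."""
    Z = X @ L.T
    d2 = ((Z[:, None, :] - Z[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)                  # p_ii = 0
    logits = -d2
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    P = np.exp(logits)
    P /= P.sum(axis=1, keepdims=True)             # row-wise softmax: p_ij
    same_class = y[:, None] == y[None, :]
    return (P * same_class).sum()


rng = np.random.RandomState(0)
y = np.repeat([0, 1], 50)
informative = 3.0 * y[:, None] + 0.5 * rng.randn(100, 1)  # 1 useful axis
noise = 2.0 * rng.randn(100, 10)                          # 10 noisy axes
X = np.hstack([informative, noise])

s_raw = nca_score(np.eye(11), X, y)       # plain Euclidean distance
s_info = nca_score(np.eye(11)[:1], X, y)  # keep only the informative axis
print(s_raw, s_info)
```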

examples/plot_metric_examples.py

    ######################################################################
    # Manual Constraints

We do not say explicitly what is meant by "constraint" before so I think this part is quite confusing

Also I think we want to insist on the fact that many metric learning algorithms only need the weak supervision given by constraints, not labels. In many applications, this is easier to obtain.


I agree, I'll reformulate this paragraph, let me know what you think
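Since the section needs to define constraints before using them: pair learners in metric-learn, such as ITML, take the constraints themselves as input, as far as I know as an array of shape (n_pairs, 2, n_features) with a label of +1 (similar) or -1 (dissimilar) per pair, so no class labels are required. The sketch below uses that format with a hypothetical helper, `constraint_satisfaction`, and a single distance threshold chosen as an assumption (ITML, for instance, uses separate upper and lower bounds, and this is not its objective).

```python
import numpy as np


def constraint_satisfaction(pairs, y_pairs, M, threshold):
    """Fraction of pair constraints satisfied by the distance
    d_M(a, b) = sqrt((a - b)^T M (a - b)): similar pairs (+1) must be
    closer than `threshold`, dissimilar pairs (-1) farther."""
    diff = pairs[:, 0, :] - pairs[:, 1, :]
    d = np.sqrt(np.einsum("ij,jk,ik->i", diff, M, diff))
    ok = np.where(y_pairs == 1, d < threshold, d > threshold)
    return ok.mean()


pairs = np.array([
    [[0.0, 0.0], [0.1, 5.0]],   # similar, but far apart along axis 1
    [[1.0, 2.0], [1.1, -3.0]],  # similar, far apart along axis 1
    [[0.0, 0.0], [3.0, 0.0]],   # dissimilar, apart along axis 0
])
y_pairs = np.array([1, 1, -1])

# Euclidean: only the dissimilar pair is satisfied
frac_euclid = constraint_satisfaction(pairs, y_pairs, np.eye(2), 2.0)
# Ignoring axis 1 satisfies all three constraints
frac_axis0 = constraint_satisfaction(pairs, y_pairs, np.diag([1.0, 0.0]), 2.0)
print(frac_euclid, frac_axis0)
```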

I think we can also delete the num_constraints=200 in all *_Supervised algorithms. What do you think?


i guess so, unless it increases the computation time too much

That's right, I tried and it took almost the same time, so I removed them.

examples/plot_metric_examples.py

    # it's worth one's while to poke around in the constraints.py file to see
    # how exactly this is going on.
    #
    # This brings us to the end of this tutorial! Have fun Metric Learning :)

maybe also add that metric-learn is compatible with sklearn and so we can easily do model selection, cross validation, scoring etc and refer to the doc for more details.


I agree, done
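A sketch of the kind of model selection the added paragraph refers to. metric-learn's supervised learners follow scikit-learn's fit/transform interface, so one could take the first slot of the pipeline; to avoid guessing metric-learn's exact signatures here, scikit-learn's own `NeighborhoodComponentsAnalysis` (available since scikit-learn 0.21) is used as a stand-in. Dataset and parameters are assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier, NeighborhoodComponentsAnalysis
from sklearn.pipeline import make_pipeline

X, y = load_iris(return_X_y=True)

pipe = make_pipeline(
    NeighborhoodComponentsAnalysis(random_state=0),  # learns a linear map L
    KNeighborsClassifier(n_neighbors=3),             # k-NN in the learned space
)
# The metric is re-learned on each training fold, so the score is not
# biased by the held-out data
scores = cross_val_score(pipe, X, y, cv=5)
print(scores.mean())
```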

@bellet Thanks for your review, I addressed your comments.

Some additional small comments for improvement.

Very nice example!!! Looking forward to merging it.

examples/plot_metric_examples.py

    # distance in the input space, in which the contribution of the noisy
    # features is high. So even if points from the same class are close to
    # each other in some subspace of the input space, this is not the case in the
    # total input space.

maybe "this is not the case when considering all dimensions of the input space"


Agreed, done
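The point about noisy features can be checked numerically. On synthetic data with 2 informative and 20 noisy dimensions (sizes, scales and the helper `loo_1nn_accuracy` are assumptions), leave-one-out 1-NN accuracy is close to chance when all dimensions of the input space are used, and high when only the informative subspace is kept. A metric learner is meant to find such a reweighting from the data.

```python
import numpy as np


def loo_1nn_accuracy(X, y):
    """Leave-one-out accuracy of a 1-nearest-neighbour classifier."""
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)  # exclude the query point itself
    return (y[d2.argmin(axis=1)] == y).mean()


rng = np.random.RandomState(0)
n = 200
y = np.repeat([0, 1], n // 2)
# 2 informative dimensions: class means at (-2, -2) and (2, 2)
signal = rng.randn(n, 2) + np.where(y[:, None] == 1, 2.0, -2.0)
noise = 10.0 * rng.randn(n, 20)  # 20 high-variance noisy dimensions
X = np.hstack([signal, noise])

acc_all = loo_1nn_accuracy(X, y)            # all dimensions
acc_signal = loo_1nn_accuracy(X[:, :2], y)  # informative subspace only
print(acc_all, acc_signal)
```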

examples/plot_metric_examples.py

    # good literature review of Metric Learning.
    #
    # We will briefly explain the metric-learning algorithms implemented by
    # metric-learn, before providing some examples for it's usage, and also

its


Thanks, done

examples/plot_metric_examples.py

    #
    # Basically, we learn this distance:
    # :math:`D(x,y)=\sqrt{(x-y)\,M^{-1}(x-y)}`. And we learn this distance by
    # learning a Matrix :math:`M`, based on certain constraints.

- there is repetition here "we learn this distance"

- add very quick explanation of what is meant by "constraint" (can be inspired from What is metric learning? page). For instance something like, "we learn the parameters :math:`M` of this distance to satisfy certain constraints on the distance between points, for example requiring that points of the same class are close together and points of different class are far away."


Agreed, done
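A quick sanity check of the distance being described. In the form used in metric-learn's documentation, as far as I know, the learned distance is sqrt((x - x')^T M (x - x')), with M itself rather than its inverse, and M = L^T L, which makes it the Euclidean distance after the linear map x -> Lx. NumPy sketch with a random L standing in for a learned one (an assumption):

```python
import numpy as np

rng = np.random.RandomState(0)
L = rng.randn(2, 3)  # hypothetical "learned" linear map from R^3 to R^2
M = L.T @ L          # corresponding positive semi-definite matrix

x, x_prime = rng.randn(3), rng.randn(3)
diff = x - x_prime

d_M = np.sqrt(diff @ M @ diff)             # sqrt((x - x')^T M (x - x'))
d_L = np.linalg.norm(L @ x - L @ x_prime)  # Euclidean distance after x -> L x
print(np.isclose(d_M, d_L))  # True
```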

examples/plot_metric_examples.py

    #
    # We will briefly explain the metric-learning algorithms implemented by
    # metric-learn, before providing some examples for it's usage, and also
    # discuss how to go about doing manual constraints.

remove manual constraint and replace by something like "discuss how to perform metric learning with weaker supervision than class labels"


Agreed, done

examples/plot_metric_examples.py

    plot_tsne(X_rca, Y)

    ######################################################################
    # Metric Learning for Kernel Regression

Regression example: Metric Learning for Kernel Regression


done
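As noted earlier in the thread, MLKR's cost is close to NCA's but targets regression: each y_i is predicted by Gaussian-kernel regression over the other points in the space x -> Ax, and A is learned to minimise the leave-one-out squared error. The NumPy sketch below only evaluates that loss for two hand-picked matrices A on synthetic data; `mlkr_loss` is a hypothetical helper, not metric-learn's API.

```python
import numpy as np


def mlkr_loss(A, X, y):
    """Leave-one-out squared error of Gaussian kernel regression in the
    space x -> A x (the quantity MLKR minimises over A)."""
    Z = X @ A.T
    d2 = ((Z[:, None, :] - Z[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)          # y_i must not predict itself
    d2 -= d2.min(axis=1, keepdims=True)   # rescales each row; avoids underflow
    K = np.exp(-d2)
    y_hat = K @ y / K.sum(axis=1)         # soft nearest-neighbour prediction
    return ((y - y_hat) ** 2).sum()


rng = np.random.RandomState(0)
X = rng.randn(200, 5)
y = 3.0 * X[:, 0]  # the target depends on the first feature only

A_id = np.eye(5)           # plain Euclidean kernel on all features
A_proj = np.zeros((1, 5))
A_proj[0, 0] = 3.0         # keep only the relevant feature
loss_id, loss_proj = mlkr_loss(A_id, X, y), mlkr_loss(A_proj, X, y)
print(loss_id, loss_proj)
```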

examples/plot_metric_examples.py

    # going to go ahead and assume that two points labelled the same will be
    # closer than two points in different labels.
    #
    # Do keep in mind that we are doing this method because we know the labels

move this above, right after the sentence saying that we are going to create constraints from the labels


done
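Deriving constraints from labels, as described here, can be sketched as sampling a fixed number of same-label pairs (+1) and different-label pairs (-1); this is also the quantity controlled by `num_constraints` in the `*_Supervised` classes discussed earlier. The helper `sample_pairs_from_labels` and its uniform sampling scheme are assumptions for illustration, not metric-learn's implementation.

```python
import numpy as np


def sample_pairs_from_labels(X, y, num_constraints, random_state=0):
    """Hypothetical helper: draw `num_constraints` similar pairs (same label,
    tagged +1) and as many dissimilar pairs (different labels, tagged -1)."""
    _, counts = np.unique(y, return_counts=True)
    if len(counts) < 2 or counts.max() < 2:
        raise ValueError("need 2+ classes and a class with 2+ points")
    rng = np.random.RandomState(random_state)
    pos, neg = [], []
    while len(pos) < num_constraints or len(neg) < num_constraints:
        i, j = rng.randint(0, len(y), size=2)
        if i == j:
            continue
        if y[i] == y[j] and len(pos) < num_constraints:
            pos.append((i, j))
        elif y[i] != y[j] and len(neg) < num_constraints:
            neg.append((i, j))
    idx = np.array(pos + neg)  # shape (2 * num_constraints, 2)
    pairs = X[idx]             # shape (2 * num_constraints, 2, n_features)
    y_pairs = np.array([1] * len(pos) + [-1] * len(neg))
    return pairs, y_pairs


X = np.arange(12, dtype=float).reshape(6, 2)
y = np.array([0, 0, 0, 1, 1, 1])
pairs, y_pairs = sample_pairs_from_labels(X, y, num_constraints=4)
print(pairs.shape)  # (8, 2, 2)
```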

examples/plot_metric_examples.py

    ######################################################################
    # Using our constraints, let's now train ITML again. We should keep in

not sure the last sentence is needed (already said that before)

examples/plot_metric_examples.py

    ######################################################################
    # And that's the result of ITML after being trained on our manual
    # constraints! A bit different from our old result but not too different.
    # We can also notice that it might be better to rely on the randomised

this last sentence is not very clear. i would remove it


Agreed, done

examples/plot_metric_examples.py

    # also compatible with scikit-learn, since their input dataset format described
    # above allows to be sliced along the first dimension when doing
    # cross-validations (see also this :ref:`section <sklearn_compat_ws>`). See
    # also some :ref:`use cases <use_cases>` where you could use scikit-learn

where you could combine metric learning with scikit-learn estimators

examples/plot_metric_examples.py

    # pipeline or cross-validation procedure. And weakly-supervised estimators are
    # also compatible with scikit-learn, since their input dataset format described
    # above allows to be sliced along the first dimension when doing
    # cross-validations (see also this :ref:`section <sklearn_compat_ws>`). See

avoid repetition of "see"
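The slicing argument can be illustrated directly: scikit-learn's `KFold` only looks at the first axis of its input, so a (n_pairs, 2, n_features) array of pairs is split pair by pair, and each pair stays attached to its label. A sketch with random data (an assumption):

```python
import numpy as np
from sklearn.model_selection import KFold

rng = np.random.RandomState(0)
pairs = rng.randn(10, 2, 3)  # 10 pairs of 3-dimensional points
y_pairs = np.array([1, -1] * 5)

n_folds = 0
for train_idx, test_idx in KFold(n_splits=5).split(pairs):
    # Indexing along axis 0 keeps each pair, and its label, intact
    pairs_train, y_train = pairs[train_idx], y_pairs[train_idx]
    pairs_test, y_test = pairs[test_idx], y_pairs[test_idx]
    assert pairs_train.shape == (8, 2, 3) and pairs_test.shape == (2, 2, 3)
    n_folds += 1
print(n_folds)  # 5
```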

Thanks for the review @bellet, I addressed all your comments.

Thanks! I have pushed a few small updates myself to avoid another review cycle. I think we can merge once CI passes.

Fixes #141 #153

Hi, I've just converted @bhargavvader's notebook from #27 into a sphinx-gallery file (with this snippet: https://gist.github.com/chsasank/7218ca16f8d022e02a9c0deb94a310fe). This way, it will appear nicely in the documentation, and it also lets us check that every algorithm works fine.
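In a sphinx-gallery script, the module docstring becomes the page header, and comment blocks introduced by a line of `#` characters become reStructuredText text between code cells, as in the excerpts quoted in the review. Wiring the gallery into the docs is a small `conf.py` change; the paths below are assumptions, not taken from this PR. As far as I know, sphinx-gallery also expects a `README.txt` in the examples directory, and by default only executes scripts whose file name starts with `plot`.

```python
# Sketch of a docs/conf.py fragment; directory names are hypothetical
extensions = [
    "sphinx.ext.autodoc",
    "sphinx_gallery.gen_gallery",
]

sphinx_gallery_conf = {
    # Directory holding the example scripts, e.g. plot_metric_examples.py
    "examples_dirs": "../examples",
    # Where the generated .rst pages, notebooks and images are written
    "gallery_dirs": "auto_examples",
}
```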

There are a few things to change to make the PR mergeable (to compile the doc, you need sphinx-gallery):