New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[MRG] Merge IterativeImputer into master branch #11977

Conversation

|

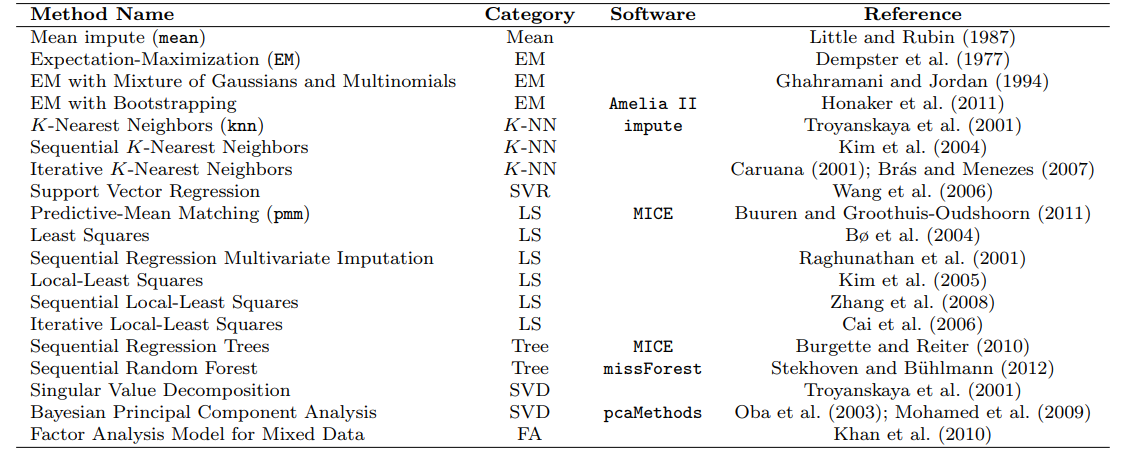

Here's a good recent paper about imputation methods: http://www.jmlr.org/papers/volume18/17-073/17-073.pdf And a useful table from it: As discussed, An example could perhaps compare RidgeCV, KNN, CART, RandomForest as model inputs? |

|

Here we are considering all the "sequential" approaches as being roughly

the same thing. Although we could consider multiple classes in fact, if we

think differences are substantial

|

|

I don't have any reasons to think differences are substantial, but I also don't have enough experience using various sequential imputers. |

|

what's to do here? Does this need reviews? |

|

@amueller not yet. We have to merge a few more examples and modifications in here before it's ready for more reviews. |

|

@jnothman looks like the most recent merge caused some branch conflicts, but I don't have access to resolve them. |

|

PR to the IterativeImputer branch is welcome, but for simple merge

conflicts it's fine if I resolve them.

|

|

Great, thanks. |

|

Test failures on some (but not the latest) version of scipy, around the usage of truncnorm. |

|

Some thoughts of things we might want here to improve usability:

|

|

I still don't think Also, looks like you're having the same weird issue with the doctest that I'm having in the other PR. |

I am not proposing to apply it with sample_posterior=True. Without sample_posterior it would be good to have a way to identify how well it converged. I can only get 26 in the doctest locally (haven't tried installing different dependencies), including with repeated |

|

OK, that makes sense to me. I'll make a PR with the changes you suggested once work dies down a little and once we get the missforest example PR in. Do you still want to wait on the amputer and MICE example before merging this? We may not get anyone to finish those up... |

|

I don't think they're essential. The multiple imputation example looks good

but a bit complicated, and needs an API update. Amputation needs to happen

separately.

|

|

I agree. It would be good to get this into the hands of users (i.e. merged before next major release) as I assume there will be plenty of other things we'll learn from them - and that will inform further how to make the examples better. |

|

I'm not sure what you mean by improving training examples. Do you mean improving examples to use real-world missing data or data removed not completely at random? When you say we should go beyond mean and std of predictions, do you mean mean and std of scores? Why should we go beyond it in this case? |

|

Yes I mean only example, training seems a typo. My issue with the current example is that we show that the mean is slightly better with huge standard deviation. So this is actually difficult to conclude something. Having thing like wilcoxon test to check how many time the iterative imputer is better than other might bring more insight.

Sent from my phone - sorry to be brief and potential misspell.

|

|

Btw I'm now again not sure about your statement about stacking a missing

indicator, @sergeyf. The feature indicating missingness for the column

being imputed will be all 0 in training, since those samples where it is

missing are deleted, aren't they?

|

|

Yea, they are 0 at training and 1 at predict time. Could this ever become a

problem for any passed in estimator?

…On Thu, Feb 14, 2019, 12:08 AM Joel Nothman ***@***.*** wrote:

Btw I'm now again not sure about your statement about stacking a missing

indicator, @sergeyf. The feature indicating missingness for the column

being imputed will be all 0 in training, since those samples where it is

missing are deleted, aren't they?

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

<#11977 (comment)>,

or mute the thread

<https://github.com/notifications/unsubscribe-auth/ABya7BwOist1K-AFaGfJHoF6FTFv5RtQks5vNRlrgaJpZM4WW65f>

.

|

|

Something like K nearest neighbours might be affected detrimentally by

that... Most estimators should not.

|

|

Actually even K neighbours will just offset the distances where the query

has a 1 but it should make no difference to the neighbours. Radius

neighbours might be a problem

|

|

My instinct is to avoid the feature entirely if some estimators could have

a problem. Or explicitly remove the associated missing column.

…On Thu, Feb 14, 2019, 2:11 PM Joel Nothman ***@***.*** wrote:

Actually even K neighbours will just offset the distances where the query

has a 1 but it should make no difference to the neighbours. Radius

neighbours might be a problem

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

<#11977 (comment)>,

or mute the thread

<https://github.com/notifications/unsubscribe-auth/ABya7GWQQm7t3syzliEoEattOUDy-3Wpks5vNd8bgaJpZM4WW65f>

.

|

|

Yes, if we implement it as a feature within this estimator, removing that

column makes sense. But if we just recommend stacking the input, I don't

think it's a big risk

|

|

I'm going to merge this and get it road tested. Can you set the base for the multiple imputation pr to master? Thanks and congratulations! |

|

Unfortunately GitHub has recorded me as the author of that commit :( I should have done the merge manually |

|

Oh man I am very happy!

Thanks to you and @glemaitre for your patience and partnership on this

project. I learned a lot from you both, and I'm a better writer of software

for it.

And don't worry about the authorship of the PR. I don't care that much -

it's more important to me that people find this work useful.

…On Thu, Feb 14, 2019, 5:04 PM Joel Nothman ***@***.*** wrote:

I'm going to merge this and get it road tested. Can you set the base for

the multiple imputation pr to master?

Thanks and congratulations!

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

<#11977 (comment)>,

or mute the thread

<https://github.com/notifications/unsubscribe-auth/ABya7M5Z2v951VNv8_A7tmlzuLFTWfq4ks5vNge2gaJpZM4WW65f>

.

|

|

AWESOME! Amazing work y'all! |

|

@sergeyf Thanks for the hard work. |

|

Great work! In reading the documentation, it seems there is no variance adjustment for the so-imputed values for estimators. Is something that is out of scope? |

|

@reckoner Do you have a pointer to what variance adjustment means in this case? What purpose does it serve? |

|

@sergeyf In Chapter 5 ("Estimation of imputation uncertainty") of

The issue is that the estimators that are generated from processing the dataset that have been filled out via imputation have to be adjusted for the variance in the imputed values. For example, the mean based on the so-imputed data has a different estimator variance (e.g., confidence interval) than that of the non-imputed data. Imputing multiple times means that the uncertainties (i.e., variance) of the multiple imputation is used to estimate this variance and flow this down to the ultimate estimator. I don't know, outside of I hope that helps. |

|

Ah ok. Well, Please take a look and comment there if you think it's appropriate. We're also happy to take contributions to that PR because the original person (who knows MICE-related topics better than I do) was unable to continue working on it. |

|

You are correct. Seems like this issue was raised here by @jnothman Let me study this carefully. |

|

Generally we don't report variance of coefficients in scikit-learn. Where

we give predictive intervals we do not currently have a way to adjust

these. So yes , that is diy.

|

By Sergey Feldman

This reverts commit 518b183.

This reverts commit 518b183.

By Sergey Feldman

This is a placeholder to track work on a new IterativeImputer (formerly MICE, ChainedImputer) that should accommodate the best features of R's MICE and missForest, especially as applicable to the settings of predictive modelling, clustering and decomposition.

Closes #11259

TODO:

n_iter->max_iterwith checks for convergence atmax(abs(X - Xprev)) < tol([MRG] IterativeImputer: n_iter->max_iter #13061)predictortoestimator([MRG] IterativeImputer: n_iter->max_iter #13061)Ping @sergeyf, @RianneSchouten