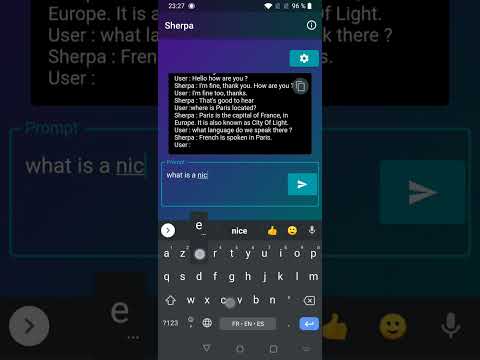

This app is a demo of llama.cpp that recreates an offline chatbot similar to OpenAI's ChatGPT. The source code for this app is available on GitHub.

You can use the latest models in the app.

Available for Windows, macOS, and Android; see the Releases page.

The app was developed using Flutter and integrates ggerganov/llama.cpp, recompiled to run on mobile devices. Please note that the LLaMA models are officially distributed by Meta and will not be provided by the app developers.

To run this app, you need to download the 7B LLaMA model from Meta for research purposes. You can then select the target model file (a `.bin` file) from within the app.

Additionally, you can refine the output with preprompts to improve the quality of the responses.
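A preprompt is text placed before the conversation to steer how the model answers. The sketch below only illustrates that idea; the app's actual prompt format is not documented here, so the `User:`/`Assistant:` layout and the `build_prompt` helper are hypothetical.

```python
from typing import List, Tuple


def build_prompt(preprompt: str, history: List[Tuple[str, str]], user_message: str) -> str:
    """Hypothetical helper: prepend a preprompt to the chat history and new message."""
    lines = [preprompt.strip()]           # the preprompt always comes first
    for speaker, text in history:         # earlier turns, in order
        lines.append(f"{speaker}: {text}")
    lines.append(f"User: {user_message}")
    lines.append("Assistant:")            # the model continues from here
    return "\n".join(lines)


prompt = build_prompt(
    "You are a helpful assistant. Answer briefly.",
    [("User", "Hi"), ("Assistant", "Hello!")],
    "What is llama.cpp?",
)
print(prompt)
```

Changing only the preprompt string changes the instructions the model sees on every turn, without touching the model weights.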

To use this app, follow these steps:

- Download the model file (`ggml-model.bin`) from Meta for research purposes.
- Rename the downloaded file to `ggml-model.bin`.
- Place the file in your device's download folder.
- Run the app on your mobile device.
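The rename and placement steps can be sketched as a small script, for example when preparing the file on a desktop before copying it to a phone, or for the Windows/macOS builds. This is a minimal sketch assuming the app looks for a file named exactly `ggml-model.bin` in the downloads folder; the `install_model` helper and the example source filename are hypothetical.

```python
import shutil
from pathlib import Path

# Assumption: the app expects exactly this filename in the downloads folder.
EXPECTED_NAME = "ggml-model.bin"


def install_model(downloaded: Path, downloads_dir: Path) -> Path:
    """Hypothetical helper: move a downloaded .bin model into place under the expected name."""
    if downloaded.suffix != ".bin":
        raise ValueError(f"expected a .bin model file, got {downloaded.name}")
    downloads_dir.mkdir(parents=True, exist_ok=True)
    target = downloads_dir / EXPECTED_NAME
    # Moving both renames the file and places it in the downloads folder.
    if downloaded.resolve() != target.resolve():
        shutil.move(str(downloaded), str(target))
    return target


if __name__ == "__main__":
    downloads = Path.home() / "Downloads"
    # "llama-7b.bin" is a placeholder for whatever name the downloaded file has.
    source = downloads / "llama-7b.bin"
    if source.exists():
        print(install_model(source, downloads))
```

The suffix check mirrors the requirement that the selected model be a `.bin` file, so an incomplete or wrongly named download is caught before the app tries to load it.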

Please note that the LLaMA models are owned and officially distributed by Meta. This app only serves as a demo of the models' capabilities and functionality. The developers of this app do not provide the LLaMA models and are not responsible for any issues related to their usage.