Active sensing with adaptive depth sensors is a nascent field with potential in areas such as advanced driver-assistance systems (ADAS). Such sensors, however, require dynamically driving a laser or light source to a specific location to capture information; one such class of sensor is the Triangulation Light Curtain (LC). In this work, we introduce a novel approach that exploits prior depth distributions from RGB cameras to drive a Light Curtain's laser line to regions of uncertainty and obtain new measurements. These measurements are used to reduce depth uncertainty and correct errors recursively. We show real-world experiments that validate our approach in outdoor and driving settings, and demonstrate qualitative and quantitative improvements in depth RMSE when RGB cameras are used in tandem with a Light Curtain. This repository contains the official PyTorch implementation for this paper.

Monocular RGB Depth Estimation

Monocular RGB Depth Estimation + Light Curtain Fusion

YouTube Video

Paper

https://github.com/soulslicer/adap-fusion/blob/master/pics/paper.pdf

Triangulation Light Curtains were introduced in 2019 and developed by the Illumination and Imaging (ILIM) Lab at CMU. The sensor, which comprises a rolling-shutter NIR camera and a galvomirror with a laser, uses a unique imaging strategy: the user specifies the depth to be sampled, and the sensor returns the intensity measured at that location. The user provides a 2D top-down profile, which generates a curtain in 3D. Any surface that lies within the thickness of this curtain returns a signal that we can capture in the NIR image.
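The sampling principle described above can be illustrated with a minimal sketch. It is not the simulator shipped in this repository: the function name `curtain_response`, the binary return, and the default thickness are all assumptions made for illustration (the real sensor returns a continuous NIR intensity).

```python
import numpy as np

def curtain_response(surface_depth, curtain_depth, thickness=0.2):
    """Toy light curtain model (hypothetical, binary return).

    surface_depth: depth in metres of the first surface seen by each camera column.
    curtain_depth: depth in metres at which the curtain is placed for each column,
                   i.e. the user-provided top-down profile.
    Returns 1 where the surface lies within the curtain thickness, else 0.
    """
    surface_depth = np.asarray(surface_depth, dtype=float)
    curtain_depth = np.asarray(curtain_depth, dtype=float)
    inside = np.abs(surface_depth - curtain_depth) <= thickness / 2
    return inside.astype(np.uint8)

# Hypothetical scene: five camera columns looking at surfaces at these depths.
scene = np.array([5.0, 5.0, 9.0, 12.0, 12.0])

# A planar curtain at 5 m only intersects the first two columns.
flat_profile = np.full(5, 5.0)
flat_hits = curtain_response(scene, flat_profile)

# A curved profile can follow the scene and intersect more of it.
curved_profile = np.array([5.0, 7.0, 9.05, 11.0, 12.0])
curved_hits = curtain_response(scene, curved_profile)

print(flat_hits, curved_hits)
```

The point of the sketch is that a curtain only reports surfaces at the depth it was asked to sample, which is why a good depth prior matters for placing it.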

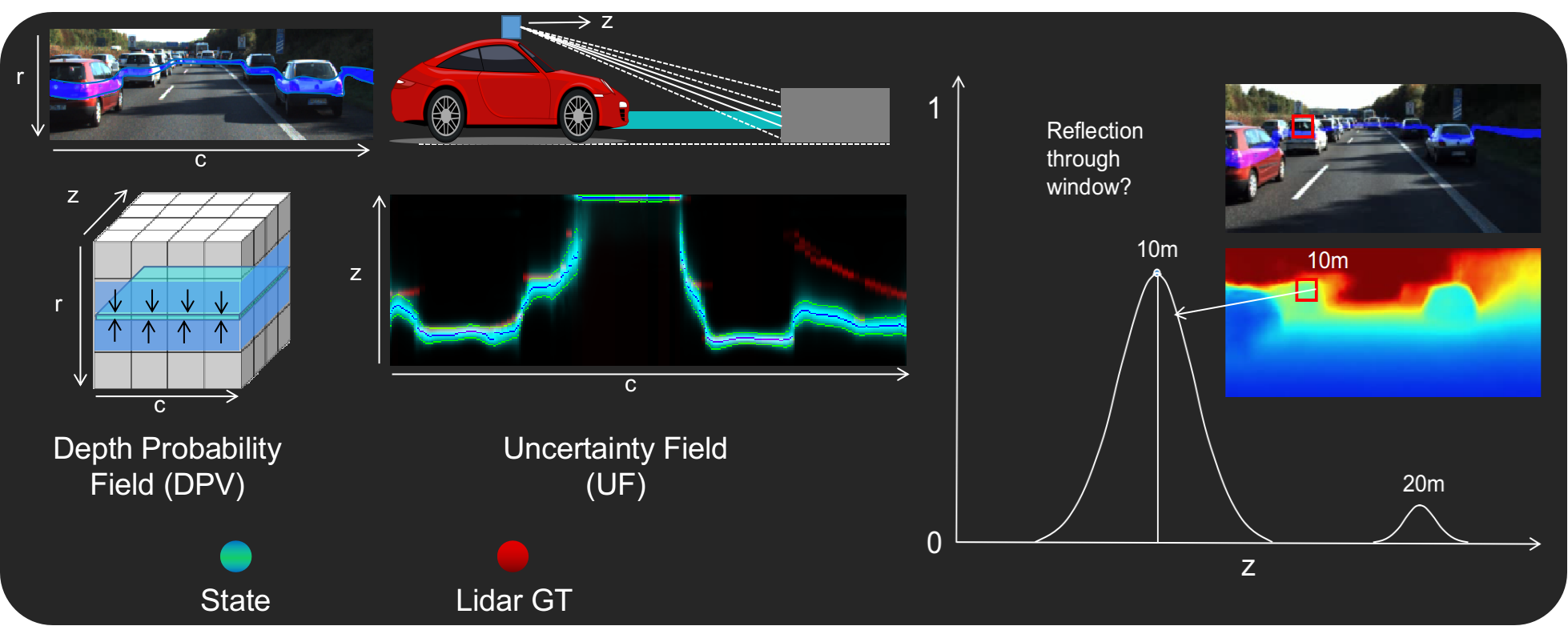

Neural RGBD introduced Depth Probability Volumes (DPV): instead of predicting a single depth per pixel, we predict a depth distribution per pixel. To help visualize the uncertainty, we collapse the distribution along the surface of the road to form the Uncertainty Field (UF). You can read more details here. As the UF shows, monocular RGB depth estimation has significant uncertainty.
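To make the per-pixel distribution concrete, here is a small NumPy sketch of how an expected depth, a standard deviation, and a top-down slice could be derived from a DPV. The array layout `(D, H, W)`, the function names, and the row-averaging used for the collapse are assumptions for illustration; the collapse used in the paper (along the road surface) may differ.

```python
import numpy as np

def dpv_mean_std(dpv, depths):
    """Expected depth and standard deviation per pixel.

    dpv:    (D, H, W) array, assumed to sum to 1 over the depth axis.
    depths: (D,) array with the depth in metres of each bin.
    """
    d = depths[:, None, None]
    mean = (dpv * d).sum(axis=0)
    var = (dpv * (d - mean[None]) ** 2).sum(axis=0)
    return mean, np.sqrt(var)

def uncertainty_field(dpv):
    """Collapse the image rows to get a (D, W) top-down slice.

    Assumption: a plain mean over rows stands in for the road-surface collapse.
    """
    return dpv.mean(axis=1)

depths = np.linspace(3.0, 18.0, 64)

# Two hypothetical pixels: one fully confident, one with a uniform distribution.
dpv = np.zeros((64, 1, 2))
dpv[20, 0, 0] = 1.0
dpv[:, 0, 1] = 1.0 / 64

mean, std = dpv_mean_std(dpv, depths)
field = uncertainty_field(dpv)
print(mean, std, field.shape)
```

The confident pixel has zero spread, while the uniform pixel has a spread of several metres, which is the kind of uncertainty the light curtain is meant to resolve.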

We show how errors in Monocular Depth Estimation are corrected when used in tandem with an Adaptive Sensor such as a Triangulation Light Curtain (Yellow Points and Red lines are Ground Truth). (b) We predict a per-pixel Depth Probability Volume from Monocular RGB and observe large per-pixel uncertainties (σ=3m), as seen in the Bird's Eye View / Top-Down Uncertainty Field slice. (c) We actively drive the Light Curtain sensor's laser to sense multiple regions along a curve that maximizes the information gained. (d) We feed these measurements back recursively to get a refined depth estimate, along with a reduction in uncertainty.

Our Light Curtain (LC) Fusion Network takes RGB input from a single monocular image, multiple temporally consistent monocular images, or a stereo camera pair and generates a Depth Probability Volume (DPV) prior. We then recursively drive our Triangulation Light Curtain's laser line to plan and place curtains on uncertain regions and refine them. The result is fed back at the next timestep to obtain a much more refined DPV estimate.
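The plan, place, and update loop can be sketched for a single camera ray as a toy Bayesian filter. This is not the fusion network from the paper: the placement policy (put the curtain at the currently most likely depth), the binary hit model, and the likelihood value `p_hit` are assumptions chosen to keep the example small and deterministic.

```python
import numpy as np

def refine_ray(prior, depths, true_depth, thickness=0.5, steps=30, p_hit=0.9):
    """Recursively refine a depth distribution for one ray (toy model).

    prior:      (D,) unnormalised prior over depth bins.
    depths:     (D,) depth in metres of each bin.
    true_depth: depth of the actual surface, used only to simulate the sensor.
    """
    p = prior / prior.sum()
    for _ in range(steps):
        # Plan: place the curtain at the most likely depth (assumed policy).
        curtain_depth = depths[np.argmax(p)]
        inside = np.abs(depths - curtain_depth) <= thickness / 2
        # Sense: the simulated curtain fires only if the surface is inside it.
        hit = abs(true_depth - curtain_depth) <= thickness / 2
        # Update: Bayes rule with a simple two-valued likelihood.
        if hit:
            likelihood = np.where(inside, p_hit, 1.0 - p_hit)
        else:
            likelihood = np.where(inside, 1.0 - p_hit, p_hit)
        p = p * likelihood
        p /= p.sum()
    return p

def mean_std(p, depths):
    mean = float((p * depths).sum())
    std = float(np.sqrt((p * (depths - mean) ** 2).sum()))
    return mean, std

depths = np.linspace(3.0, 18.0, 151)
# Hypothetical monocular prior: centred 2 m away from the true surface, sigma 3 m.
prior = np.exp(-0.5 * ((depths - 12.0) / 3.0) ** 2)
posterior = refine_ray(prior, depths, true_depth=10.0)

prior_mean, prior_std = mean_std(prior / prior.sum(), depths)
post_mean, post_std = mean_std(posterior, depths)
print(prior_mean, prior_std, post_mean, post_std)
```

Each miss suppresses the depths that were just sampled, so later curtains move to untested depths until one intersects the surface; repeated hits then concentrate the distribution around it.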

- Download KITTI

# Download from http://www.cvlibs.net/datasets/kitti/raw_data.php

# Use the raw_kitti_downloader script

# Link kitti to a folder in this project folder

ln -s {absolute_kitti_path} kitti

# Alternatively download from http://megatron.sp.cs.cmu.edu/raaj/data3/Public/raaj/kitti.zip

- Install Python Deps

torch, matplotlib, numpy, cv2, pykitti, tensorboardX

- Compile external deps

cd external

# Select the correct sh file depending on your python version

# Modify it so that you select the correct libEGL version

# Eg. /usr/lib/x86_64-linux-gnu/libEGL.so.1.1.0

sudo sh compile_3.X.sh

# Ensure everything compiled correctly with no errors

- Download pre-trained models

sh download_models.sh
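Before running anything, it can help to confirm that the Python dependencies listed above are importable. The following is a small optional check, not part of the repository; the helper name `missing_modules` is made up for this example. Note that `cv2` is the import name of the package distributed on PyPI as `opencv-python`.

```python
import importlib.util

# Import names taken from the dependency list above.
REQUIRED = ["torch", "matplotlib", "numpy", "cv2", "pykitti", "tensorboardX"]

def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]

if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```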

In this code, we demonstrate how we use the KITTI dataset and the Triangulation Light Curtain Simulator to simulate the response of light curtains and feed the information back to the network.

# Eval KITTI

- Monocular RGB Depth Estimation

python3 train.py --config configs/default_exp7_lc.json --eval --viz

- Monocular RGB Depth Estimation + LC Fusion

python3 train.py --config configs/default_exp7_lc.json --lc --eval --viz

- Monocular RGB Depth Estimation + LC Fusion (Debug)

python3 train.py --config configs/default_exp7_lc.json --lc --eval --viz --lc_debug
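The headline metric mentioned above is depth RMSE. As a reference for what that number means, here is a minimal sketch of RMSE over valid ground-truth pixels. It is an assumption that invalid LIDAR pixels are marked with 0; the evaluation code in `train.py` may mask or clip depths differently.

```python
import numpy as np

def depth_rmse(pred, gt):
    """Root-mean-square depth error in metres over pixels with ground truth.

    Assumption: ground-truth pixels without a LIDAR return are stored as 0.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    valid = gt > 0
    if not valid.any():
        return float("nan")
    return float(np.sqrt(np.mean((pred[valid] - gt[valid]) ** 2)))

# Hypothetical 2x2 depth maps; the bottom-left pixel has no ground truth.
pred = np.array([[5.0, 10.0], [7.0, 3.0]])
gt = np.array([[5.0, 12.0], [0.0, 4.0]])
rmse = depth_rmse(pred, gt)
print(rmse)
```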

# Training KITTI

- Training only works with Python 3 as it uses distributed training.

- Simply remove the `--eval` and `--viz` flags. Use the `batch_size` flag to change the batch size; it is automatically split among the available GPUs.

- Run `pkill -f -multi` to clear memory if training crashes.

# ILIM

- To train with the ILIM dataset, download http://megatron.sp.cs.cmu.edu/raaj/data3/Public/raaj/ilim.zip

- Train with configs/default_exp7_lc_ilim.json instead

You most likely do not have a light curtain on hand, so we have devised a way for you to run the actual light curtain device from the comfort of your own home. We captured over 600 static scenes in which we swept a planar light curtain at 0.1m intervals from 3m to 18m, complete with stereo and LIDAR data. The code below demonstrates how our planning, placement and update algorithm works when used with the real curtain.

This step is optional and is for training the model with the ILIM dataset.

- Download the ILIM dataset

http://megatron.sp.cs.cmu.edu/raaj/data3/Public/raaj/ilim.zip

- Train on KITTI from 3m to 18m

python3 train.py --config configs/default_318.json

- Train on ILIM from 3m to 18m

python3 train.py --config configs/default_318_lc_ilim.json --init_model (PATH TO PRETRAINED KITTI MODEL HERE) --lc

- Download the light curtain sweep dataset from http://megatron.sp.cs.cmu.edu/raaj/data3/Public/raaj/sweep.zip

- Run the notebooks (namely lc_error.ipynb)

lc_model.ipynb to understand the light curtain model

lc_loading.ipy to show how to load the sweep dataset

lc_correct.ipynb to demonstrate algorithm
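Independently of the notebooks, the idea of replaying a real curtain from the planar sweep (0.1m steps from 3m to 18m) can be sketched as a lookup: for each camera column, pick the swept plane nearest to the planned curtain depth and copy that column from it. The array layout `(planes, height, width)`, the function name `sample_sweep`, and the nearest-plane rule are assumptions for illustration; the actual dataset format is shown in the notebooks.

```python
import numpy as np

SWEEP_START_M = 3.0   # first swept plane, from the description above
SWEEP_STEP_M = 0.1    # spacing between swept planes

def sample_sweep(sweep_stack, planned_depth):
    """Compose a curtain image for an arbitrary profile from planar sweeps.

    sweep_stack:   (N, H, W) NIR images, one per swept plane (assumed layout).
    planned_depth: (W,) planned curtain depth in metres for each image column.
    Returns the composed (H, W) image and the plane index used per column.
    """
    n_planes, height, width = sweep_stack.shape
    depth = np.asarray(planned_depth, dtype=float)
    idx = np.rint((depth - SWEEP_START_M) / SWEEP_STEP_M).astype(int)
    idx = np.clip(idx, 0, n_planes - 1)  # depths outside the sweep snap to its ends
    cols = np.arange(width)
    # Advanced indexing yields (W, H); transpose back to (H, W).
    image = sweep_stack[idx, :, cols].T
    return image, idx

# Hypothetical stack in which every pixel stores the index of its plane.
stack = np.broadcast_to(np.arange(151.0)[:, None, None], (151, 2, 4)).copy()
image, idx = sample_sweep(stack, [3.0, 10.0, 18.0, 25.0])
print(idx, image.shape)
```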