This is my attempt at reproducing the NeurIPS 2017 paper *Generating Steganographic Images via Adversarial Training* in modern PyTorch. I have not verified every experimental result reported in the paper, but all of the basic functionality should be available here.

PyTorch-related dependencies are defined in `environment.yml`:

```bash
conda env create -f environment.yml -n advsteg
```

Then activate the environment:

```bash
conda activate advsteg
```

Mamba (a drop-in replacement for conda) is also supported and is what I use myself.

Other dependencies are defined and installed with Poetry:

```bash
# Inside the virtual environment
poetry install
```

Weights & Biases is used for logging and visualization. You can either create an account and log in with `wandb login`, or set the environment variable `WANDB_MODE=disabled` to disable logging.

To start training, download the CelebA (Align&Cropped Images) dataset and extract it to `data/celeba`. Then run:

```bash
python train.py --cuda --batch-size=128 --epochs=100 --fraction=0.1
```

This loads 10% of the CelebA dataset, roughly 20,000 images. With default parameters, 100 epochs take a little over 30 minutes on a single RTX 3090 (about 20 seconds per epoch).
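A rough sketch of what `--fraction=0.1` amounts to. How `train.py` actually subsamples CelebA isn't documented here, so `fraction_indices` is a hypothetical helper that draws a reproducible random subset (its indices could be passed to `torch.utils.data.Subset`). It also reproduces the size and timing arithmetic above, using CelebA's 202,599 images and assuming the last partial batch is kept.

```python
import math
import random

CELEBA_SIZE = 202_599  # images in CelebA (Align&Cropped)


def fraction_indices(n, fraction, seed=0):
    """Hypothetical helper: sorted, reproducible random subset of floor(n * fraction) indices."""
    k = int(n * fraction)
    rng = random.Random(seed)
    return sorted(rng.sample(range(n), k))


idx = fraction_indices(CELEBA_SIZE, 0.1)  # --fraction=0.1
steps_per_epoch = math.ceil(len(idx) / 128)  # --batch-size=128, last partial batch kept
total_minutes = 100 * 20 / 60  # --epochs=100 at ~20 s per epoch
print(len(idx), steps_per_epoch, round(total_minutes, 1))  # 20259 159 33.3
```

Sampling indices rather than copying files keeps the subset reproducible across runs for a fixed `seed`.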

To train on all CelebA images for 500 epochs (as in the paper):

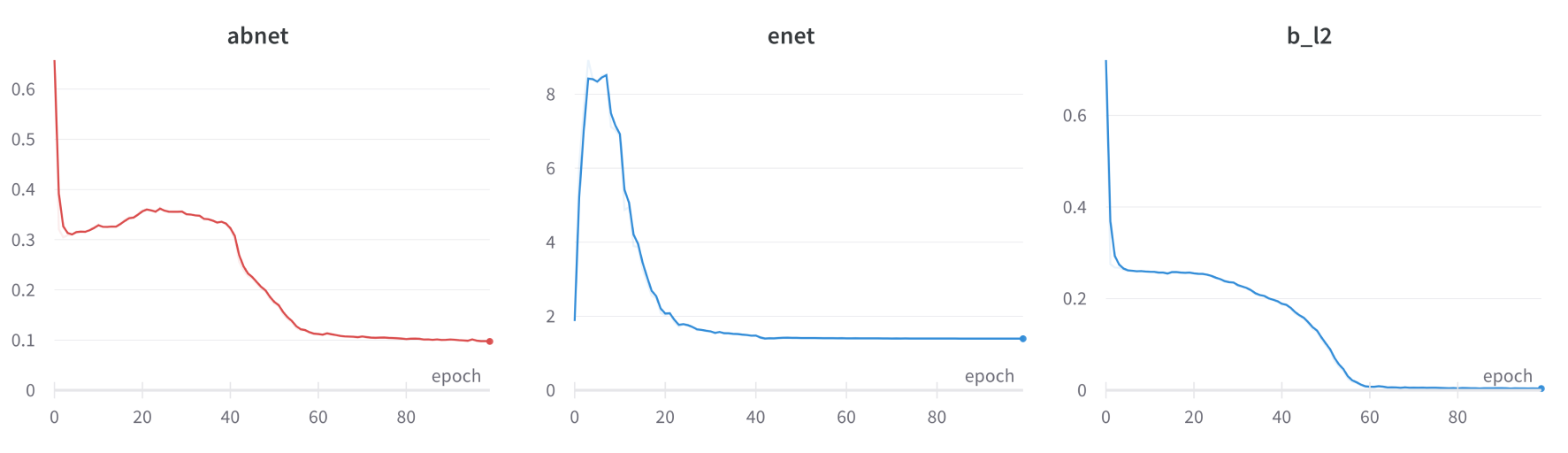

python train.py --cuda --batch-size=256 --epochs=500Loss curves on the first 100 epochs trained with 10% of the dataset:

Generated steganographic images:

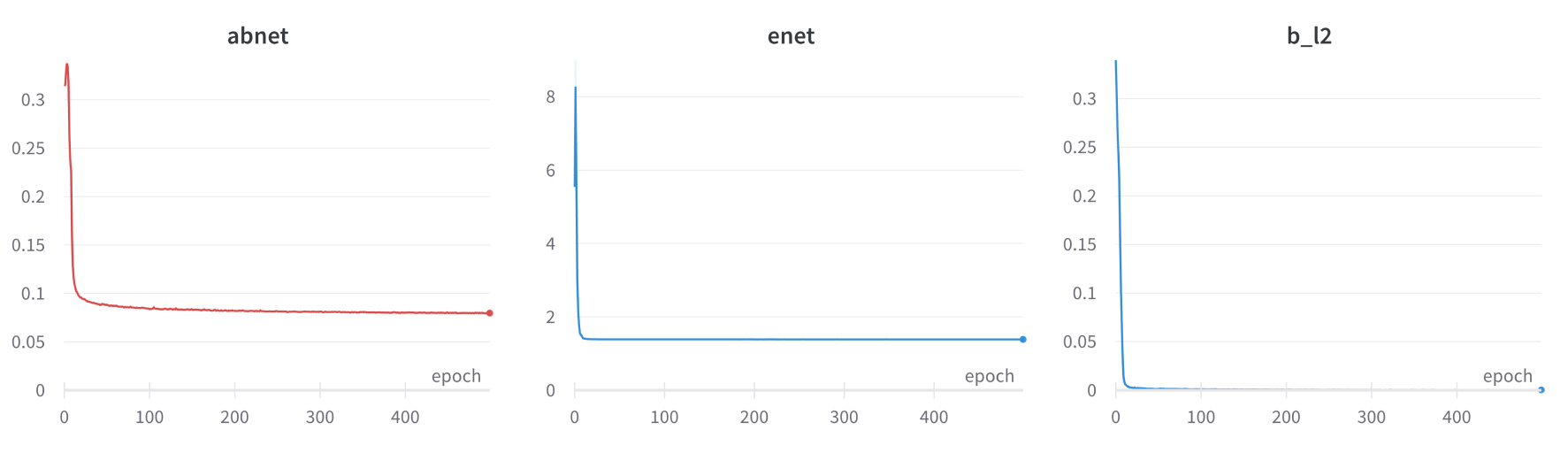

Loss curves on the entire CelebA dataset for 500 epochs:

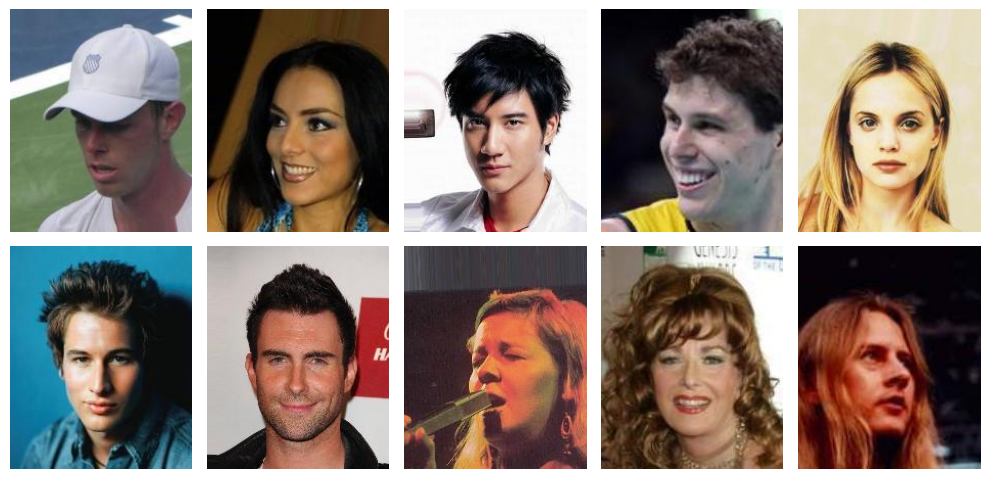

Generated steganographic images:

Cover images:

See the notebooks in `./notebooks/` for more details.

The model proposed in the paper closely resembles the DCGAN architecture, with a few differences:

| Model | Based on DCGAN's ... | What changes? | Why | Role |
|---|---|---|---|---|
| Alice | Generator | First layer replaced with a Linear layer. | So that the secret message can be embedded into the flattened cover image. | Encoder |
| Bob | Discriminator | Final layer replaced with a Linear layer whose output has the same length as the secret message. | So that Bob can decode the secret message embedded by Alice. | Decoder |
| Eve | Discriminator | Final layer replaced with a Linear layer with a single output. | So that Eve can distinguish cover images from stego images. | Steganalyzer |
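A minimal PyTorch sketch of the three networks in the table above, assuming a 64×64 RGB output (the `output_size` used here) and a hypothetical 100-bit message (`MSG_LEN`). The base width `nf`, the BatchNorm/activation placement and returning raw logits follow common DCGAN practice and are my assumptions, not necessarily what this repository or the paper uses. Bob and Eve share the same discriminator trunk and differ only in the size of the final Linear layer.

```python
import torch
import torch.nn as nn

NC = 3          # RGB channels
MSG_LEN = 100   # hypothetical secret-message length
NF = 64         # hypothetical base feature width (DCGAN's ngf/ndf)


class Alice(nn.Module):
    """Encoder: DCGAN generator whose first layer is a Linear layer."""

    def __init__(self, msg_len=MSG_LEN, nf=NF):
        super().__init__()
        self.nf = nf
        # Linear layer on [flattened 64x64 cover image, message] -> 4x4 feature map
        self.fc = nn.Sequential(
            nn.Linear(NC * 64 * 64 + msg_len, nf * 8 * 4 * 4),
            nn.BatchNorm1d(nf * 8 * 4 * 4),
            nn.ReLU(inplace=True),
        )
        # Remaining DCGAN generator blocks: 4 -> 8 -> 16 -> 32 -> 64
        self.deconv = nn.Sequential(
            nn.ConvTranspose2d(nf * 8, nf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(nf * 4), nn.ReLU(inplace=True),
            nn.ConvTranspose2d(nf * 4, nf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(nf * 2), nn.ReLU(inplace=True),
            nn.ConvTranspose2d(nf * 2, nf, 4, 2, 1, bias=False),
            nn.BatchNorm2d(nf), nn.ReLU(inplace=True),
            nn.ConvTranspose2d(nf, NC, 4, 2, 1, bias=False),
            nn.Tanh(),  # stego image in [-1, 1]
        )

    def forward(self, cover, msg):
        x = self.fc(torch.cat([cover.flatten(1), msg], dim=1))
        return self.deconv(x.view(-1, self.nf * 8, 4, 4))


class Discriminator(nn.Module):
    """DCGAN discriminator whose final layer is a Linear layer (Bob or Eve)."""

    def __init__(self, out_features, nf=NF):
        super().__init__()
        # DCGAN discriminator convolutions: 64 -> 32 -> 16 -> 8 -> 4
        self.trunk = nn.Sequential(
            nn.Conv2d(NC, nf, 4, 2, 1, bias=False), nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(nf, nf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(nf * 2), nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(nf * 2, nf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(nf * 4), nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(nf * 4, nf * 8, 4, 2, 1, bias=False),
            nn.BatchNorm2d(nf * 8), nn.LeakyReLU(0.2, inplace=True),
            nn.Flatten(),
        )
        # Replaces DCGAN's final Conv2d: MSG_LEN outputs for Bob, 1 for Eve
        self.fc = nn.Linear(nf * 8 * 4 * 4, out_features)

    def forward(self, img):
        return self.fc(self.trunk(img))  # raw logits


alice = Alice(nf=8)                  # small width just for a quick shape check
bob = Discriminator(MSG_LEN, nf=8)   # decoder
eve = Discriminator(1, nf=8)         # steganalyzer
cover = torch.rand(2, NC, 64, 64) * 2 - 1
msg = torch.randint(0, 2, (2, MSG_LEN)).float()
stego = alice(cover, msg)
```

Sharing one `Discriminator` class for Bob and Eve mirrors the table: both start from DCGAN's discriminator and only the final Linear layer's output size differs.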

Changes I had to make to the training procedure to get the model to train:

- Learning rate is set to 1e-4 instead of 2e-4.
- Input `image_size` is changed to 109 and `output_size` is changed to 64.
- Eve is optimized with SGD instead of Adam, because with Adam Eve was getting too good (her discriminator loss dropped to 0 very quickly).
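The changes listed above can be sketched roughly as follows. `build_optimizers` and `preprocess` are hypothetical helpers, not this repository's code. The Adam betas `(0.5, 0.999)`, the SGD learning rate, and the interpretation of `image_size`/`output_size` as "center-crop to 109, then resize to 64" are my assumptions based on common DCGAN practice.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

LR = 1e-4  # lowered from DCGAN's usual 2e-4


def build_optimizers(alice, bob, eve, lr=LR):
    """Hypothetical helper: Adam for Alice and Bob, plain SGD for Eve."""
    # betas=(0.5, 0.999) is the usual DCGAN setting (assumed)
    opt_alice = torch.optim.Adam(alice.parameters(), lr=lr, betas=(0.5, 0.999))
    opt_bob = torch.optim.Adam(bob.parameters(), lr=lr, betas=(0.5, 0.999))
    # With Adam, Eve's loss dropped to 0 very quickly, so SGD is used instead
    opt_eve = torch.optim.SGD(eve.parameters(), lr=lr)
    return opt_alice, opt_bob, opt_eve


def preprocess(img, image_size=109, output_size=64):
    """Hypothetical helper: center-crop to image_size, resize to output_size, scale to [-1, 1].

    img: float tensor of shape (N, C, H, W) with values in [0, 1].
    """
    _, _, h, w = img.shape
    top, left = (h - image_size) // 2, (w - image_size) // 2
    img = img[:, :, top:top + image_size, left:left + image_size]
    img = F.interpolate(img, size=(output_size, output_size),
                        mode="bilinear", align_corners=False)
    return img * 2 - 1  # match the [-1, 1] range of a Tanh generator


# Stand-in modules; in practice these would be Alice, Bob and Eve
alice, bob, eve = nn.Linear(4, 4), nn.Linear(4, 4), nn.Linear(4, 1)
opt_alice, opt_bob, opt_eve = build_optimizers(alice, bob, eve)
batch = preprocess(torch.rand(2, 3, 218, 178))  # aligned CelebA images are 178x218
print(type(opt_eve).__name__, tuple(batch.shape))  # SGD (2, 3, 64, 64)
```

Keeping separate optimizers per network makes it easy to swap Eve's optimizer without touching Alice and Bob.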

The paper trained for 500 epochs, but I found that the losses had already started to converge after 100 epochs.

The authors' original TensorFlow implementation is available at jhayes14/advsteg.

```bibtex
@inproceedings{NIPS2017_fe2d0103,
  author = {Hayes, Jamie and Danezis, George},
  booktitle = {Advances in Neural Information Processing Systems},
  title = {Generating steganographic images via adversarial training},
  url = {https://proceedings.neurips.cc/paper/2017/file/fe2d010308a6b3799a3d9c728ee74244-Paper.pdf},
  volume = {30},
  year = {2017}
}
```