support more than one simultaneous GPU/worker #38

This was a result of setting the number of background workers to a value greater than the number of GPUs I had available, which caused multiple pipelines to run simultaneously and compete for resources. Reducing the number of workers to 1 fixes the crashes, but does not allow more than 1 GPU to be used.
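
One way to keep extra workers from competing for the same GPU is to make each job check out a device before it runs. The following is only a minimal sketch of that idea, not the project's actual worker code: `DevicePool` and `acquire` are hypothetical names, and it assumes one job per device at a time.

```python
import queue
from contextlib import contextmanager


class DevicePool:
    """Hypothetical helper: hands out device IDs, one job per device."""

    def __init__(self, device_ids):
        self._free = queue.Queue()
        for device_id in device_ids:
            self._free.put(device_id)

    @contextmanager
    def acquire(self, timeout=None):
        # Block until a device is free; raises queue.Empty on timeout.
        device_id = self._free.get(timeout=timeout)
        try:
            yield device_id
        finally:
            # Always return the device, even if the job failed.
            self._free.put(device_id)
```

With a pool like this, a worker count above the GPU count only means that the extra workers wait in `acquire` rather than starting a second pipeline on a busy device.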

Would this require multiple GPUs? I might be able to help with that on one of my servers as long as the pipeline doesn't require full PCIe bandwidth for each GPU.

@elliotcourant I want to test both cases: 2 different GPUs with different device IDs, and 1 GPU with enough memory for 2 models and 2 simultaneous workers. I don't think PCIe bandwidth matters as much for this as it does for gaming, since the model is usually loaded once and run many times, but I expect it will have some impact on those load times at least.
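
To make the two test cases concrete, here is a small sketch of how workers could be mapped to device IDs. The function name `assign_workers` and the `workers_per_device` parameter are made up for illustration and are not part of the project.

```python
def assign_workers(device_ids, workers_per_device=1):
    """Return one device ID per worker, in worker order (hypothetical helper)."""
    if workers_per_device < 1:
        raise ValueError("workers_per_device must be at least 1")
    # Repeat each device ID once for every worker that should share it.
    return [
        device_id
        for device_id in device_ids
        for _ in range(workers_per_device)
    ]


# Case 1: two GPUs, one worker each.
two_gpus = assign_workers([0, 1])
# Case 2: one GPU with enough memory for two workers.
shared_gpu = assign_workers([0], workers_per_device=2)
```

The first call gives each worker its own device ID, while the second puts both workers on device 0, which is the case that depends on the GPU having enough memory for two models.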

This is mostly implemented, although I need to make sure all of the pipeline code is using |

Passing session options requires the SessionOptions type.

Trying to run a second image causes the first job to crash with an error about an unwanted list of tensors.
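
On the session options point, the following sketch shows one way a `SessionOptions` object and a device ID could be passed when creating a session with `onnxruntime` directly. It assumes the CUDA execution provider and that sessions are built through `onnxruntime.InferenceSession`; the helper names `build_providers` and `make_session` are hypothetical, and the pipeline code in this project may create its sessions differently.

```python
def build_providers(device_id):
    """Provider list that selects a CUDA device, with a CPU fallback."""
    return [
        ("CUDAExecutionProvider", {"device_id": device_id}),
        "CPUExecutionProvider",
    ]


def make_session(model_path, device_id):
    # Imported here so the helper above can be used without onnxruntime.
    import onnxruntime as ort

    # Options must be a SessionOptions instance, not a plain dict.
    options = ort.SessionOptions()
    return ort.InferenceSession(
        model_path,
        sess_options=options,
        providers=build_providers(device_id),
    )
```

Each worker would call `make_session` with its own device ID, so that two sessions for the same model file can live on different GPUs.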

The LPW pipeline from #27 is not aware of the |

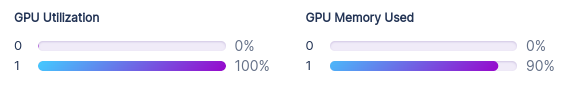

There are still a few issues that can arise around reusing the same model on multiple workers. I'm not sure if the process worker pool would resolve those, or if I need to keep track of the device in the model cache. However, this is mostly working, aside from some possible cache errors.
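
If the device does need to be tracked in the model cache, one option is to key each entry by both the model name and the device ID, so that a model loaded on one GPU is never handed to a worker on another. This is a sketch under that assumption only: `ModelCache` and the `loader` callback are hypothetical, not the project's existing cache.

```python
import threading


class ModelCache:
    """Hypothetical cache keyed by (model name, device ID)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._models = {}

    def get(self, name, device_id, loader):
        key = (name, device_id)
        # The lock is held while loading, so concurrent loads are serialized.
        with self._lock:
            if key not in self._models:
                self._models[key] = loader(name, device_id)
            return self._models[key]
```

Holding the lock during the load is the simplest choice and avoids loading the same model twice, at the cost of making other workers wait. It only helps with threads in one process; a process worker pool would need a separate cache per process.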

Even with the thread limit from #15, making multiple simultaneous requests to generate images, even with the same pipeline and model, will likely crash the API.
