New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Multiple cameras support not working for mapping #477

Comments

|

OK. For an unknown reason, if I compute each zed camera on its own thread, parallele mapping works .... a little. Here my code : ///////////////////////////////////////////////////////////////////////////

//

// Copyright (c) 2022, STEREOLABS.

//

// All rights reserved.

//

// THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

// "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

// LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

// A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT

// OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,

// SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT

// LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

// DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY

// THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

// (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

// OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

//

///////////////////////////////////////////////////////////////////////////

/**********************************************************************************

** This sample demonstrates how to capture a live 3D reconstruction of a scene **

** as a fused point cloud and display the result in an OpenGL window. **

**********************************************************************************/

#include <sl/Camera.hpp>

#include <opencv2/opencv.hpp>

#include "GLViewer.hpp"

bool _enableMapping = true;

bool _running = true;

void run(int index, std::mutex &mutex, GLViewer &viewer, sl::FusedPointCloud &map, sl::Transform tf) {

sl::Camera zed;

// Set configuration parameters for the ZED

sl::InitParameters init_parameters;

init_parameters.depth_mode = sl::DEPTH_MODE::PERFORMANCE;

init_parameters.coordinate_system = sl::COORDINATE_SYSTEM::RIGHT_HANDED_Y_UP; // OpenGL's coordinate system is right_handed

init_parameters.coordinate_units = sl::UNIT::MILLIMETER;

init_parameters.camera_resolution = sl::RESOLUTION::HD720;

//init_parameters.camera_fps = 60;

init_parameters.depth_minimum_distance = 200;

init_parameters.sdk_verbose = true;

// Open the camera

init_parameters.input.setFromCameraID(index);

zed.open(init_parameters);

// Setup and start positional tracking

sl::PositionalTrackingParameters positional_tracking_parameters;

positional_tracking_parameters.enable_area_memory = true;

positional_tracking_parameters.enable_imu_fusion = true;

positional_tracking_parameters.initial_world_transform = tf;

zed.enablePositionalTracking(positional_tracking_parameters);

if (_enableMapping) {

// Set spatial mapping parameters

sl::SpatialMappingParameters spatial_mapping_parameters;

// Request a Point Cloud

spatial_mapping_parameters.map_type = sl::SpatialMappingParameters::SPATIAL_MAP_TYPE::FUSED_POINT_CLOUD;

// Set mapping range, it will set the resolution accordingly (a higher range, a lower resolution)

spatial_mapping_parameters.set(sl::SpatialMappingParameters::MAPPING_RANGE::LONG);

spatial_mapping_parameters.set(sl::SpatialMappingParameters::MAPPING_RESOLUTION::HIGH);

// Request partial updates only (only the latest updated chunks need to be re-draw)

spatial_mapping_parameters.use_chunk_only = true;

// Start the spatial mapping

zed.enableSpatialMapping(spatial_mapping_parameters);

}

std::chrono::high_resolution_clock::time_point ts_last;

// Setup runtime parameters

sl::RuntimeParameters runtime_parameters;

runtime_parameters.confidence_threshold = 50;

auto camera_infos = zed.getCameraInformation();

auto resolution = camera_infos.camera_configuration.resolution;

// Define display resolution and check that it fit at least the image resolution

sl::Resolution display_resolution(std::min((int)resolution.width, 720), std::min((int)resolution.height, 404));

// Create a Mat to contain the left image and its opencv ref

sl::Mat image_zed(display_resolution, sl::MAT_TYPE::U8_C4);

cv::Mat image_zed_ocv(image_zed.getHeight(), image_zed.getWidth(), CV_8UC4, image_zed.getPtr<sl::uchar1>(sl::MEM::CPU));

std::cout << "Camera " << index << " Type:" << camera_infos.camera_model << " SN:" << camera_infos.serial_number << std::endl;

while (_running) {

if (zed.grab(runtime_parameters) == sl::ERROR_CODE::SUCCESS) {

// Retrieve the left image

zed.retrieveImage(image_zed, sl::VIEW::LEFT, sl::MEM::CPU, display_resolution);

// Retrieve the camera pose data

sl::Pose pose;

auto tracking_state = zed.getPosition(pose);

viewer.updatePose(index, pose, tracking_state);

if (_enableMapping && tracking_state == sl::POSITIONAL_TRACKING_STATE::OK) {

auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(std::chrono::high_resolution_clock::now() - ts_last).count();

/*

mutex.lock();

zed.extractWholeSpatialMap(map);

viewer.updateChunks();

mutex.unlock();

*/

// Ask for a fused point cloud update if 100ms have elapsed since last request

if ((duration > 0) && viewer.chunksUpdated()) {

// Ask for a point cloud refresh

zed.requestSpatialMapAsync();

ts_last = std::chrono::high_resolution_clock::now();

}

// If the point cloud is ready to be retrieved

if (zed.getSpatialMapRequestStatusAsync() == sl::ERROR_CODE::SUCCESS && viewer.chunksUpdated() && mutex.try_lock()) {

zed.retrieveSpatialMapAsync(map);

viewer.updateChunks();

mutex.unlock();

}

}

cv::imshow("ZED View : " + std::to_string(index) + " : " + std::to_string(camera_infos.serial_number), image_zed_ocv);

}

cv::waitKey(10);

}

image_zed.free();

zed.close();

}

int main(int argc, char **argv)

{

// Initialize point cloud viewer

sl::FusedPointCloud map;

// Point cloud viewer

GLViewer viewer;

viewer.init(argc, argv, &map, sl::MODEL::ZED2i);

std::mutex zedmutex;

std::thread zed1thread(run, 0, std::ref(zedmutex), std::ref(viewer), std::ref(map), sl::Transform(sl::Rotation(), sl::Translation(0, 0, -75)));

std::thread zed2thread(run, 1, std::ref(zedmutex), std::ref(viewer), std::ref(map), sl::Transform(sl::Rotation(M_PI, sl::Translation(0, 1, 0)), sl::Translation(0, 0, 75)));

while (viewer.isAvailable())

sl::sleep_ms(1);

_running = false;

if (zed1thread.joinable()) zed1thread.join();

if (zed2thread.joinable()) zed2thread.join();

// Save generated point cloud

//map.save("MyFusedPointCloud");

return 0;

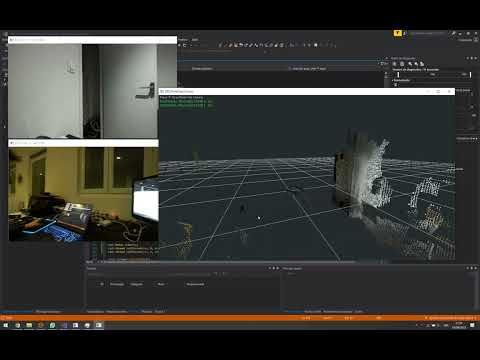

}It seems the sl::FusedPointCloud does not support very well multiple retrieveSpatialMapAsync() (or extractWholeSpatialMap()) from different cameras because the updated chunks are corrupted as you can see on the following video : |

|

Hi, thank you for reporting this issue, we are working to fix it. |

|

Hi all,

I tested with the two versions of the sample (non threaded and threaded). Is there something else wrong ? |

|

This issue is stale because it has been open 30 days with no activity. Remove stale label or comment otherwise it will be automatically closed in 5 days |

|

This issue is stale because it has been open 30 days with no activity. Remove stale label or comment otherwise it will be automatically closed in 5 days |

|

This issue is stale because it has been open 30 days with no activity. Remove stale label or comment otherwise it will be automatically closed in 5 days |

|

hi ! did you try spatial mapping multi option that uploaded 2 month ago. If you have tried already can you please share your configuration file |

|

@celikemir did you succeed to run the multi camera spatial mapping without issues? I am also interested for this configuration. |

Preliminary Checks

Description

I have two ZED2i cameras and I try to use them together (in a rigid body) in a real time mapping process.

Steps to Reproduce

I updated the realtime mapping sample to handle two cameras :

Expected Result

See in OpenGLWindow the realtime mapping with the two cameras and two openCV windows with the left image of each camera

Actual Result

Mapping with only the second camera.

See the openCV window of the second camera

Get errors in loop on the console :

ZED Camera model

ZED2i

Environment

Anything else?

No response

The text was updated successfully, but these errors were encountered: