Data Manager is a one-stop-shop data lake management platform based on Apache Spark. It covers the needs of data teams that use Apache Spark for batch processing. However, data visualization and notebooks (such as JupyterHub) are not included in Data Manager. For more information, please read the Data Manager wiki pages.

Data Manager can be used in any of the following setups:

- You set up your own Apache Spark cluster.

- You use the PySpark package, which is fully compatible with other Spark platforms and lets you test your pipeline on a single computer.

- You host your Spark cluster in Databricks.

- You host your Spark cluster in Amazon AWS EMR.

- You host your Spark cluster in Google Cloud.

- You host your Spark cluster in Microsoft Azure HDInsight.

- You host your Spark cluster in Oracle Cloud Infrastructure, Data Flow Service.

- You host your Spark cluster in IBM Cloud.

Data Manager supports many Apache Spark providers, including AWS EMR, Microsoft Azure HDInsight, and others. For the complete list, see readme.md.

- Pipelines and data applications sit on top of an abstraction layer that hides the cloud provider details.

- Moving your Data Manager based data lake from one cloud provider to another requires only a configuration change; no code change is needed.

We achieve this by introducing a “job deployer” and a “job submitter” via the Python package spark-etl.

- By using `spark_etl.vendors.oracle.DataflowDeployer` and `spark_etl.vendors.oracle.DataflowJobSubmitter`, you can run Data Manager in OCI Data Flow; here is an example.

- By using `spark_etl.vendors.local.LocalDeployer` and `spark_etl.vendors.local.PySparkJobSubmitter`, you can run Data Manager with PySpark on your laptop; here is an example.

- By using `spark_etl.deployers.HDFSDeployer` and `spark_etl.job_submitters.livy_job_submitter.LivyJobSubmitter`, you can run Data Manager with your on-premises Spark cluster; here is an example.

Even if you use a public cloud for your production data lake, you can test your pipelines and data applications with PySpark on your laptop, using scaled-down sample data and exactly the same code.
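The job deployer / job submitter split can be illustrated with a small, self-contained sketch. It does not use spark-etl itself: the `Deployer` and `JobSubmitter` base classes, the `PROVIDERS` registry, the `"local"`/`"cloud"` keys and the config keys are all hypothetical names chosen for illustration. What it shows is the design idea: pipeline code only calls `run_application`, and the configuration alone decides which deployer/submitter pair does the work.

```python
from abc import ABC, abstractmethod


class Deployer(ABC):
    """Hypothetical: copies a built application to where the cluster can read it."""

    @abstractmethod
    def deploy(self, app_name: str, destination: str) -> str:
        """Return the location the application was deployed to."""


class JobSubmitter(ABC):
    """Hypothetical: launches a deployed application on a Spark cluster."""

    @abstractmethod
    def run(self, deployed_location: str, args: dict) -> str:
        """Return a description of the submitted run."""


class LocalDeployer(Deployer):
    def deploy(self, app_name, destination):
        # A laptop setup might simply copy files into a local directory.
        return f"{destination}/{app_name}"


class LocalJobSubmitter(JobSubmitter):
    def run(self, deployed_location, args):
        # A real submitter would start PySpark locally; here we only describe the call.
        return f"local pyspark run of {deployed_location} with {args}"


class CloudDeployer(Deployer):
    def deploy(self, app_name, destination):
        # Stand-in for uploading the application to a cloud provider's storage.
        return f"{destination}/{app_name}"


class CloudJobSubmitter(JobSubmitter):
    def run(self, deployed_location, args):
        # Stand-in for calling a managed Spark service.
        return f"cloud run of {deployed_location} with {args}"


# Hypothetical registry: provider key -> (deployer class, submitter class).
PROVIDERS = {
    "local": (LocalDeployer, LocalJobSubmitter),
    "cloud": (CloudDeployer, CloudJobSubmitter),
}


def run_application(config: dict, app_name: str, args: dict) -> str:
    """Pipeline-side code: identical for every provider."""
    deployer_cls, submitter_cls = PROVIDERS[config["provider"]]
    location = deployer_cls().deploy(app_name, config["deploy_to"])
    return submitter_cls().run(location, args)
```

Calling `run_application(config, "daily_trades", {"dt": "2020-01-01"})` with a laptop config such as `{"provider": "local", "deploy_to": "/tmp/apps"}` or a cloud config such as `{"provider": "cloud", "deploy_to": "bucket/apps"}` switches providers without touching the calling code.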

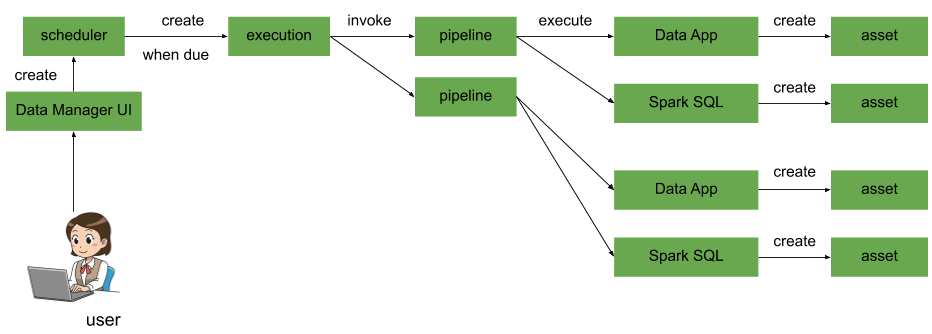

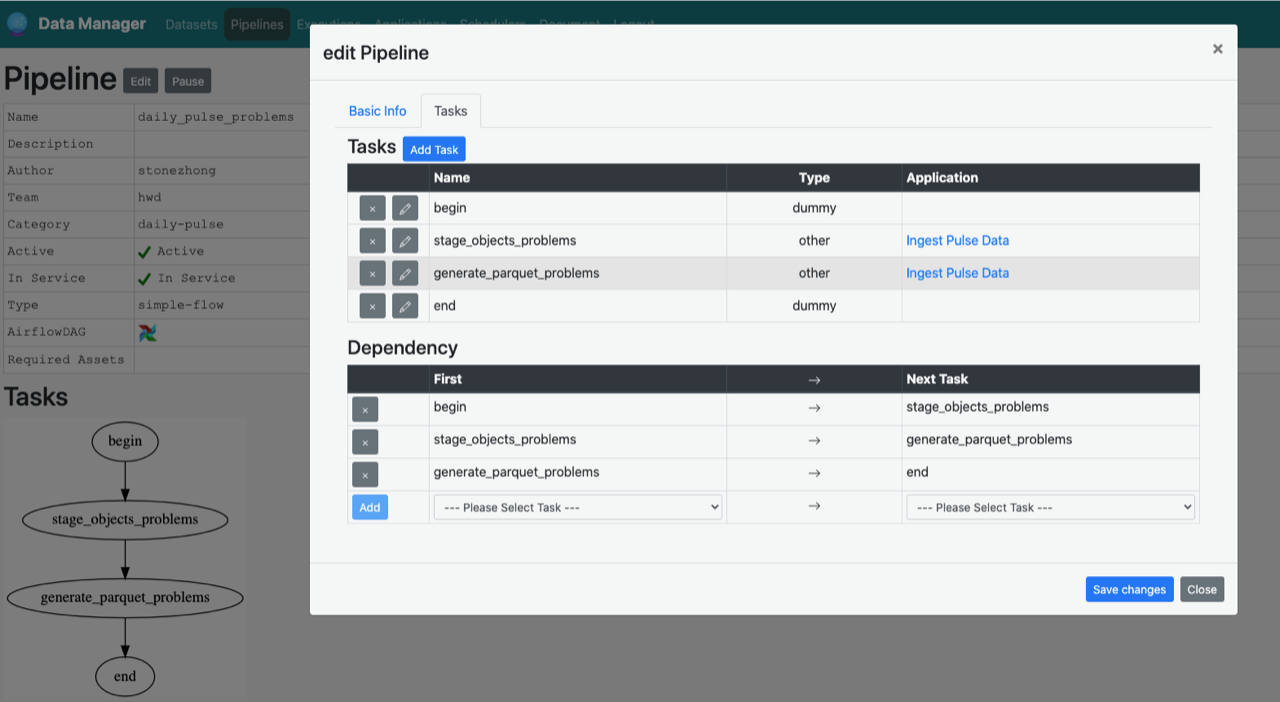

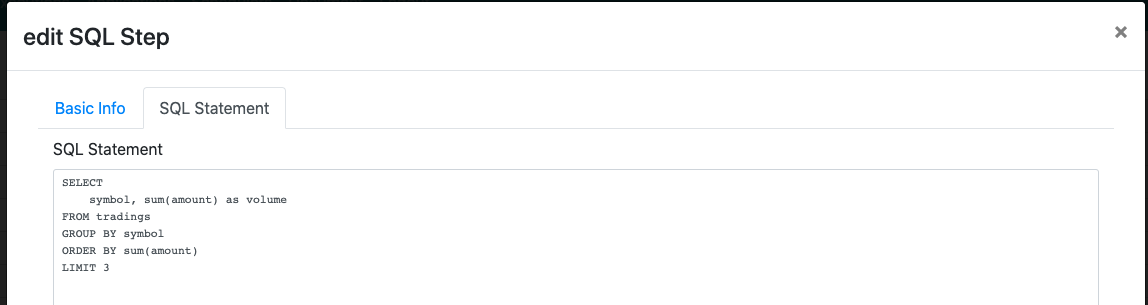

Data Manager allows non-programmers to create pipelines via the UI. A pipeline can have multiple “tasks”; each task either executes a series of Spark-SQL statements or launches a data application.
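The pipeline structure can be sketched as plain data. The class names `SQLTask`, `AppTask`, `Pipeline` and the `plan` function are hypothetical and are not Data Manager's internal types; the sketch also assumes that tasks run in list order, which is a simplification.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class SQLTask:
    """Hypothetical task type: a series of Spark-SQL statements run in order."""
    name: str
    statements: List[str]


@dataclass
class AppTask:
    """Hypothetical task type: launch a data application with arguments."""
    name: str
    application: str
    args: dict = field(default_factory=dict)


Task = Union[SQLTask, AppTask]


@dataclass
class Pipeline:
    name: str
    tasks: List[Task]


def plan(pipeline: Pipeline) -> List[str]:
    """Turn a pipeline into the ordered list of actions an executor would perform."""
    actions = []
    for task in pipeline.tasks:
        if isinstance(task, SQLTask):
            # Each statement in a Spark-SQL task is executed in turn.
            for stmt in task.statements:
                actions.append(f"spark.sql: {stmt}")
        elif isinstance(task, AppTask):
            # A data application task is a single launch with its arguments.
            actions.append(f"launch {task.application} {task.args}")
        else:
            raise TypeError(f"unknown task type: {type(task).__name__}")
    return actions
```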

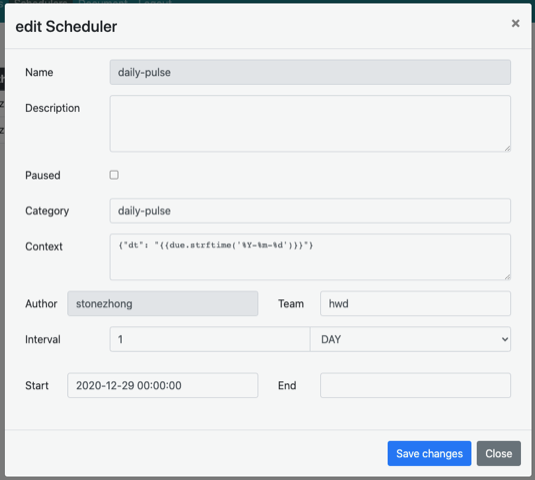

Users can schedule pipeline execution through the UI. A scheduler executes all the pipelines that belong to its category; when these pipelines are invoked, they use the shared context defined in that scheduler.
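The relationship between a scheduler, its category and its shared context can be sketched in a few lines. `PipelineDef`, `Scheduler` and `invocations` are hypothetical names, and the example category and context values are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class PipelineDef:
    name: str
    category: str


@dataclass
class Scheduler:
    """Hypothetical scheduler: a category plus a shared rendering context."""
    category: str
    context: Dict[str, str] = field(default_factory=dict)

    def invocations(self, pipelines: List[PipelineDef]) -> List[Tuple[str, Dict[str, str]]]:
        """Pair every pipeline in this scheduler's category with the shared context."""
        return [
            (p.name, dict(self.context))  # each invocation gets its own copy
            for p in pipelines
            if p.category == self.category
        ]
```

A scheduler with category `"daily-trading"` and context `{"dt": "2020-01-01"}` would invoke only the pipelines tagged `"daily-trading"`, each receiving that same `dt`.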

Data Manager provides a data catalog that tracks all datasets and assets. The data catalog has a REST API that clients can use to query and register datasets and assets.
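A client for the catalog's REST API might look roughly like the following. The URL layout (`/datasets/{name}/assets`), the `path` query parameter and the JSON fields are hypothetical placeholders, not Data Manager's documented endpoints; consult the wiki for the real API. The sketch only builds `urllib.request.Request` objects, so it runs without a server; passing one to `urllib.request.urlopen` would actually send it.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import Request


class CatalogClient:
    """Builds (but does not send) requests to a data catalog REST API.

    Paths and JSON fields are hypothetical placeholders.
    """

    def __init__(self, base_url: str):
        self.base_url = base_url.rstrip("/")

    def _assets_url(self, dataset: str) -> str:
        # Percent-encode the dataset name so characters like ':' are safe in the path.
        return f"{self.base_url}/datasets/{quote(dataset, safe='')}/assets"

    def get_asset(self, dataset: str, asset_path: str) -> Request:
        """Query one asset of a dataset."""
        query = urlencode({"path": asset_path})
        return Request(f"{self._assets_url(dataset)}?{query}", method="GET")

    def register_asset(self, dataset: str, asset_path: str, location: str) -> Request:
        """Register a new asset and where its data is stored."""
        body = json.dumps({"path": asset_path, "location": location}).encode("utf-8")
        return Request(
            self._assets_url(dataset),
            data=body,
            headers={"Content-Type": "application/json"},
            method="POST",
        )
```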

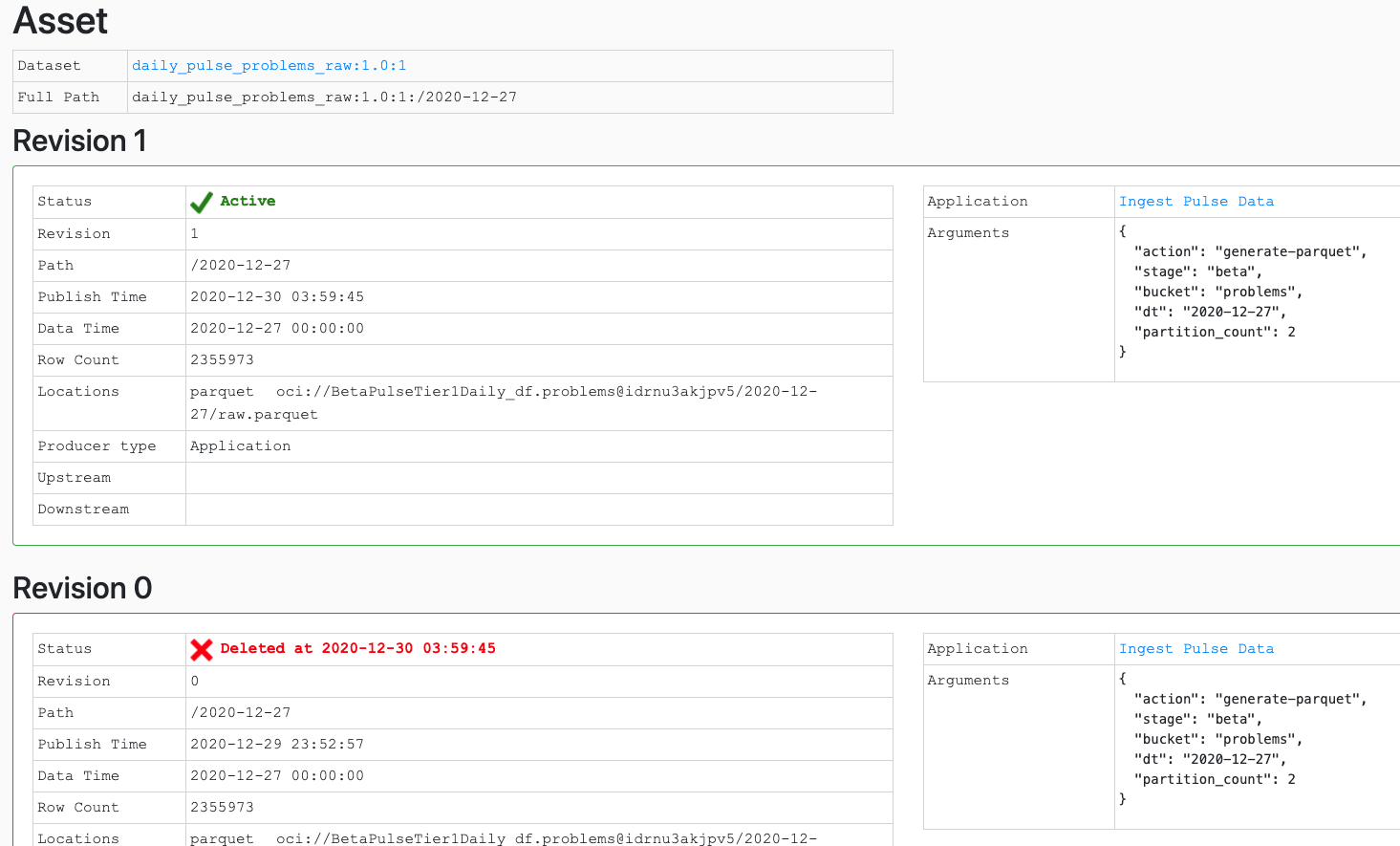

Data Manager keeps track of data lineage. When you view an asset, you will see:

- The actual SQL statement that produced this asset, if the asset was produced by a Spark-SQL task

- The application name and arguments, if the asset was produced by a data application

- Upstream assets: the assets that were used to produce this asset

- Downstream assets: the assets that required this asset when they were produced

With this information, you can understand where data comes from, where it goes, and how it is produced.
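Upstream and downstream lists are two views of the same lineage records. A minimal sketch, assuming lineage is stored as a hypothetical mapping from each asset to the assets it was produced from: the downstream lists then fall out by inverting that mapping. The `all_upstream` walk goes beyond the direct lists the asset page shows and is included only to illustrate tracing data back to its origin; both function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set


def downstream_index(upstream: Dict[str, List[str]]) -> Dict[str, Set[str]]:
    """Invert 'asset -> assets it was produced from' into 'asset -> assets produced from it'."""
    downstream: Dict[str, Set[str]] = defaultdict(set)
    for asset, sources in upstream.items():
        for src in sources:
            downstream[src].add(asset)
    return dict(downstream)


def all_upstream(asset: str, upstream: Dict[str, List[str]]) -> Set[str]:
    """Transitively collect every asset that `asset` was derived from."""
    seen: Set[str] = set()
    stack = list(upstream.get(asset, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(upstream.get(current, []))
    return seen
```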

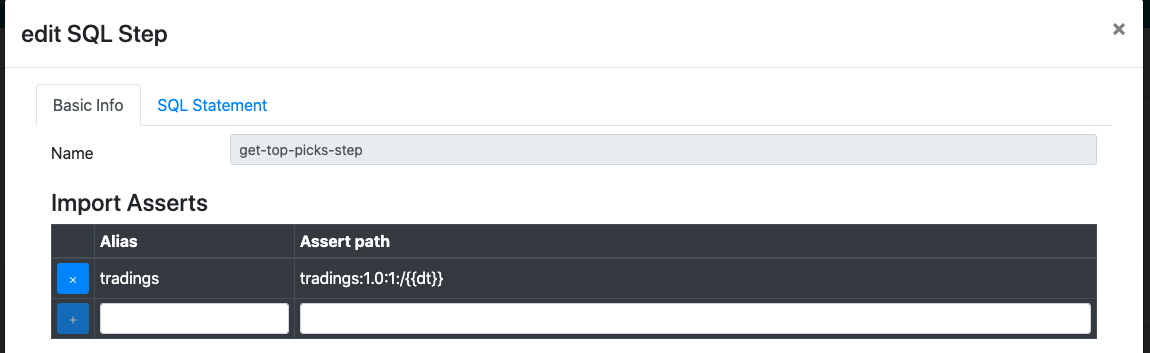

You can specify a list of assets under “require” for a pipeline. The scheduler invokes the pipeline only when all the required assets have shown up.

Note that the required asset in this example is `tradings:1.0:1:/{{dt}}`, which is actually a Jinja template; `dt` is part of the rendering context that belongs to the scheduler.
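The gating rule can be sketched as follows: render each required-asset template with the scheduler's context, then invoke the pipeline only if every rendered name is already in the catalog. The `render` function is a deliberately tiny stand-in for Jinja that understands only `{{ name }}` placeholders, and `ready_to_run` and the `available` set are hypothetical. With the jinja2 package installed, `jinja2.Template(template).render(**context)` would do the real rendering; note that Jinja renders an undefined variable as an empty string by default, whereas this stand-in raises `KeyError`.

```python
import re
from typing import Dict, Iterable, Set

# Matches {{dt}} or {{ dt }} and captures the variable name.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, context: Dict[str, str]) -> str:
    """Minimal stand-in for Jinja: substitutes {{ name }} placeholders only."""
    return _PLACEHOLDER.sub(lambda m: context[m.group(1)], template)


def ready_to_run(required: Iterable[str], context: Dict[str, str], available: Set[str]) -> bool:
    """True only when every rendered required asset already exists in the catalog."""
    return all(render(t, context) in available for t in required)
```

With context `{"dt": "2020-01-01"}`, the template `tradings:1.0:1:/{{dt}}` renders to `tradings:1.0:1:/2020-01-01`, and the pipeline waits until that exact asset is registered.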

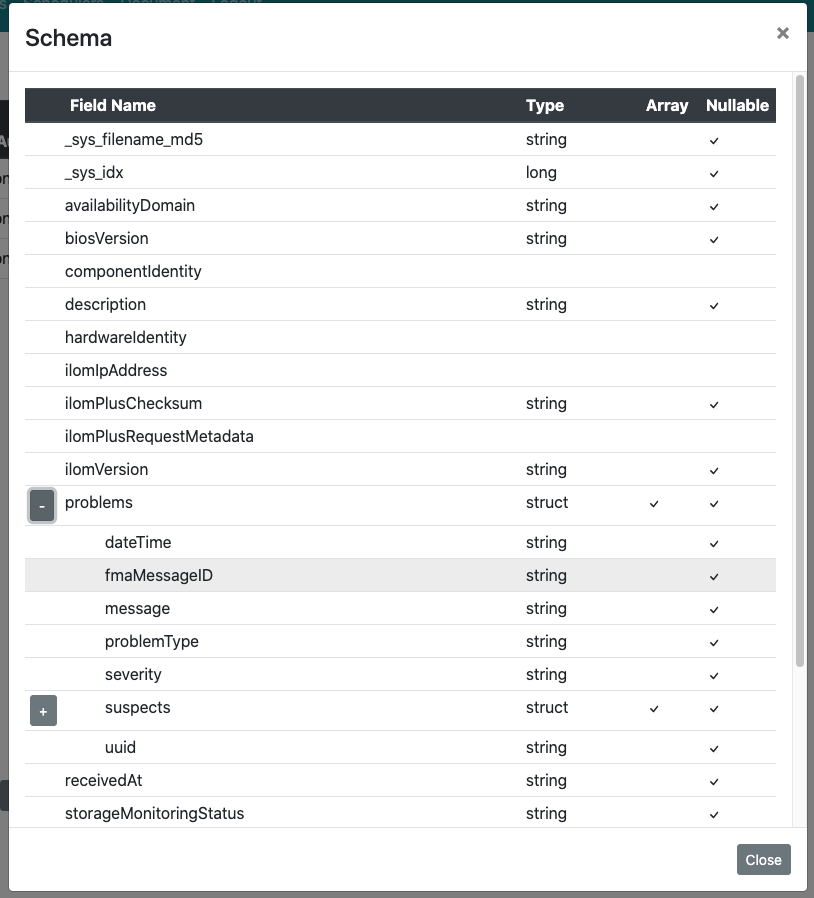

In the Dataset Browser UI, you can see the schema for any dataset. Here is an example:

Assets are immutable. If you republish an asset (for example, because the earlier one had a data bug), its revision is bumped. If you cache the asset, or data derived from it, you can check the asset revision to decide whether your cache needs updating.

For each asset, only the latest revision may be active; the metadata tracks all revisions.

This page also shows the revision history, so you can see whether and when the asset has been corrected.
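The revision rules can be modeled with a small sketch. `AssetRevision`, `AssetHistory` and `cache_is_stale` are hypothetical names; the sketch assumes revisions are numbered from 1 and that the active revision is simply the most recent one.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class AssetRevision:
    """One immutable published version of an asset."""
    revision: int
    location: str


@dataclass
class AssetHistory:
    """Hypothetical metadata record: every revision is kept, only the latest is active."""
    name: str
    revisions: List[AssetRevision] = field(default_factory=list)

    def publish(self, location: str) -> AssetRevision:
        """Publishing never overwrites; it appends a new, higher revision."""
        rev = AssetRevision(len(self.revisions) + 1, location)
        self.revisions.append(rev)
        return rev

    @property
    def active(self) -> AssetRevision:
        return self.revisions[-1]


def cache_is_stale(cache: Dict[str, int], history: AssetHistory) -> bool:
    """A cached copy is stale when it was built from a revision other than the active one."""
    return cache.get(history.name) != history.active.revision
```

A consumer that records the revision it cached can compare it with the active revision after each republish and refresh only when they differ.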

Below is a task inside a pipeline: it imports the asset `tradings:1.0:1/{{dt}}`, calls it “tradings”, and then runs a SQL statement. Users do not even need to know where the asset is stored; Data Manager automatically resolves the asset name to its storage location and loads the asset from there.

This also makes it easy to migrate a data lake between cloud providers, since your pipelines are not tied to the storage locations of assets.
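Name-based imports can be sketched as a lookup step that runs before any data is read. `AssetResolver` and `resolve_imports` are hypothetical, a plain dict stands in for the data catalog, the storage location is made up, and the asset name is assumed to have already been rendered (so `{{dt}}` is replaced by a concrete date).

```python
from typing import Dict


class AssetResolver:
    """Hypothetical lookup from logical asset name to physical storage location."""

    def __init__(self, locations: Dict[str, str]):
        # In Data Manager this information lives in the data catalog; here it is a dict.
        self._locations = dict(locations)

    def resolve(self, asset_name: str) -> str:
        try:
            return self._locations[asset_name]
        except KeyError:
            raise LookupError(f"asset not registered: {asset_name}") from None


def resolve_imports(imports: Dict[str, str], resolver: AssetResolver) -> Dict[str, str]:
    """Map each SQL alias (e.g. 'tradings') to the location a loader would read from."""
    return {alias: resolver.resolve(name) for alias, name in imports.items()}
```

A PySpark loader could then make each alias visible to SQL with `spark.read.parquet(location).createOrReplaceTempView(alias)`, assuming the asset is stored as Parquet; the actual storage format is not stated here. Moving the lake only changes the catalog entries, not the task.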

The asset page shows the storage location of the asset, for example: