- Overview

- Documentation

- System Requirements

- Installation Guide

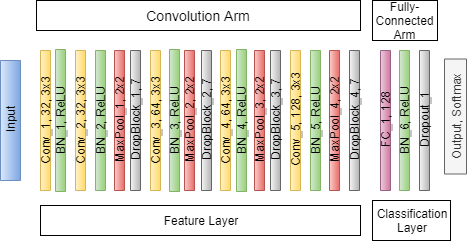

- DCNN DropBlock Algorithm flow

- Dataset

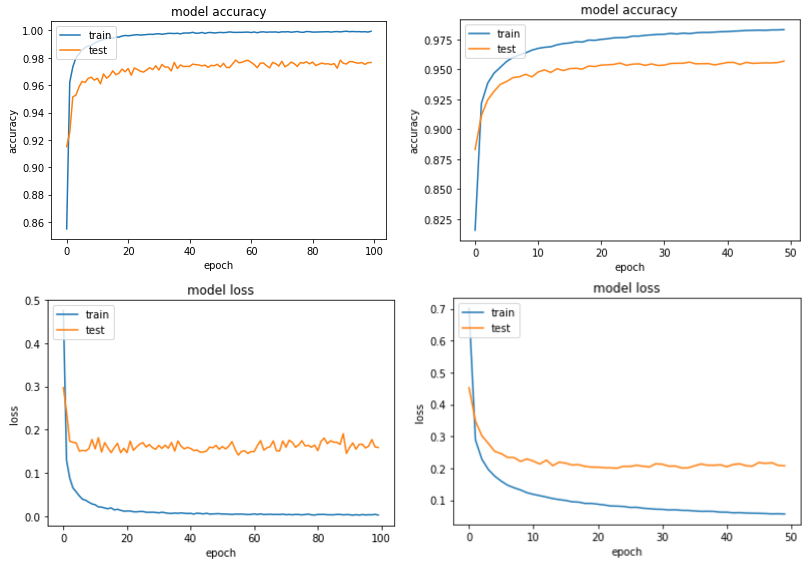

- Results

- Visualization

- Credits

- Citation

- License

Kuzushiji-DropBlock is a project on recognizing and classifying images of historical Japanese characters.

📝 Read our research paper, "Japanese Historical Character Recognition using Deep Convolutional Neural Network (DCNN) with DropBlock Regularization", available at http://dx.doi.org/10.35940/ijrte.b2923.078219

🚀 The Kuzushiji-DropBlock package requires only a standard computer with enough RAM to support in-memory operations.

The code runs on Windows, macOS, and Linux, and has been tested on the following systems:

- Windows: 10 Professional

- macOS: Mojave (10.14.1)

- Linux: Ubuntu 16.04

Kuzushiji-DropBlock mainly depends on the Python scientific stack.

- Keras

- numpy

- scipy

- pandas

- matplotlib

- scikit-learn

- PyTorch

- TensorFlow

- DropBlock

Install DropBlock from Bash:

pip install DropBlock2D

or from a Google Colab cell:

!pip install DropBlock2D

Then clone the repository and enter it:

git clone https://github.com/sujatasaini/Kuzushiji-DropBlock

cd Kuzushiji-DropBlock

📁 Kuzushiji-MNIST is a drop-in replacement for the MNIST dataset (28x28 grayscale, 70,000 images), provided in the original MNIST format as well as a NumPy format.

Kuzushiji-MNIST contains 70,000 28x28 grayscale images spanning 10 classes (one from each column of hiragana), and is perfectly balanced like the original MNIST dataset (6k/1k train/test for each class).

| File | Examples | Download (MNIST format) | Download (NumPy format) |
|---|---|---|---|
| Training images | 60,000 | train-images-idx3-ubyte.gz (18MB) | kmnist-train-imgs.npz (18MB) |
| Training labels | 60,000 | train-labels-idx1-ubyte.gz (30KB) | kmnist-train-labels.npz (30KB) |
| Testing images | 10,000 | t10k-images-idx3-ubyte.gz (3MB) | kmnist-test-imgs.npz (3MB) |
| Testing labels | 10,000 | t10k-labels-idx1-ubyte.gz (5KB) | kmnist-test-labels.npz (5KB) |

Mapping from class indices to characters: kmnist_classmap.csv (1KB)

We recommend downloading the NumPy format, which can be loaded into an array as easily as `arr = np.load(filename)['arr_0']`.
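As a minimal sketch of the loading step above, the snippet below reads an `.npz` archive and shapes the images for a convolutional network. To stay runnable without a download, it first writes a small synthetic archive. The file name `demo-imgs.npz`, the helper `load_npz_array`, and the scaling to [0, 1] with an added channel axis are illustrative assumptions, not part of this repository.

```python
import os
import tempfile

import numpy as np


def load_npz_array(path):
    """Return the array stored under the default key 'arr_0' of an .npz file."""
    with np.load(path) as data:
        return data["arr_0"]


# Synthetic stand-in for a real file such as kmnist-train-imgs.npz
# (assumption: 5 random 28x28 uint8 images, saved under the default key).
rng = np.random.default_rng(0)
fake_imgs = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)
imgs_path = os.path.join(tempfile.mkdtemp(), "demo-imgs.npz")
np.savez_compressed(imgs_path, fake_imgs)  # positional arrays are stored as 'arr_0'

imgs = load_npz_array(imgs_path)

# Illustrative preprocessing: scale to [0, 1] and add a channel axis (N, 1, H, W).
x = imgs.astype(np.float32) / 255.0
x = x[:, None, :, :]
```

With a real download, pass the path of the `.npz` file to `load_npz_array` instead of the synthetic one.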

Kuzushiji-49 (28x28 grayscale, 270,912 images) is a much larger but imbalanced dataset with 49 classes: 48 Hiragana characters and one Hiragana iteration mark.

It is an extension of the Kuzushiji-MNIST dataset.

| File | Examples | Download (NumPy format) |
|---|---|---|
| Training images | 232,365 | k49-train-imgs.npz (63MB) |
| Training labels | 232,365 | k49-train-labels.npz (200KB) |
| Testing images | 38,547 | k49-test-imgs.npz (11MB) |
| Testing labels | 38,547 | k49-test-labels.npz (50KB) |

Mapping from class indices to characters: k49_classmap.csv (1KB)

Kuzushiji-Kanji is an imbalanced dataset of 3,832 Kanji characters (64x64 grayscale, 140,426 images of both common and rare characters), with class sizes ranging from 1,766 examples down to a single example.

The full dataset is available for download here (310MB).

💾 Run `python download_data.py` to interactively select and download any of these datasets!

You can also download the data from Kaggle and participate in the Kaggle Kuzushiji Recognition Competition.

📈 Results of our DCNN model with different regularization methods (DropBlock, Dropout, SpatialDropout) on MNIST, Fashion-MNIST, Kuzushiji-MNIST, and Kuzushiji-49, trained on Google Colab and averaged over 3 runs.

| Model | MNIST | Fashion-MNIST | Kuzushiji-MNIST | Kuzushiji-49 |
|---|---|---|---|---|
| DCNN-DropBlock | 99.47% | 93.40% | 97.66% | 95.67% |
| DCNN-Dropout | 97.99% | 85.47% | 86.43% | 95.34% |
| DCNN-Spatial-Dropout | 97.17% | 84.44% | 81.08% | 58.18% |

Have more results to add to the table? Feel free to submit an issue or pull request!

| Model | MNIST | Fashion-MNIST | Kuzushiji-MNIST | Kuzushiji-49 |
|---|---|---|---|---|
| 4-Nearest Neighbor Baseline | 97.14% | 85.97% | 91.59% | 86.00% |
| Naive-Bayes | 98.06% | 86.60% | 92.17% | 88.44% |
| AlexNet | 98.19% | 87.47% | 91.82% | 81.01% |
| Simple CNN | 99.08% | 92.54% | 95.02% | 90.42% |
| Transfer Learning with CNN | 99.34% | 97.46% | 97.06% | 83.96% |
| LeNet-5 | 99.13% | 91.33% | 94.66% | 89.64% |
| MobileNet | 99.20% | 93.04% | 95.09% | 91.06% |
| DCNN-DropBlock | 99.47% | 93.40% | 97.66% | 95.67% |

📊 *DropBlock was applied with `block_size=7` and `keep_prob=0.8` over 100 iterations.
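To make the roles of `block_size` and `keep_prob` concrete, here is a minimal NumPy sketch of the DropBlock idea as described by Ghiasi et al. (2018): sample block seeds at a rate gamma derived from `keep_prob`, zero out a `block_size` x `block_size` square per seed, and rescale the surviving activations. This is an illustration only, not the code used for the results above. The function name `drop_block_2d`, the (N, C, H, W) layout, and the single global rescaling factor are assumptions.

```python
import numpy as np


def drop_block_2d(x, block_size=7, keep_prob=0.8, rng=None):
    """Apply a DropBlock-style mask to feature maps of shape (N, C, H, W)."""
    rng = np.random.default_rng() if rng is None else rng
    n, c, h, w = x.shape
    if h < block_size or w < block_size:
        raise ValueError("block_size must not exceed the feature map size")

    # Number of positions where a full block fits inside the feature map.
    vh, vw = h - block_size + 1, w - block_size + 1

    # Seed rate gamma, chosen so that roughly (1 - keep_prob) of units are dropped.
    gamma = ((1.0 - keep_prob) / block_size**2) * (h * w) / (vh * vw)

    # Each seed marks the top-left corner of one block to drop.
    seeds = rng.random((n, c, vh, vw)) < gamma

    # Expand every seed into a block_size x block_size dropped region.
    drop = np.zeros((n, c, h, w), dtype=bool)
    for di in range(block_size):
        for dj in range(block_size):
            drop[:, :, di:di + vh, dj:dj + vw] |= seeds

    mask = ~drop
    kept = int(mask.sum())

    # Rescale so the overall activation magnitude is preserved.
    scale = float(mask.size) / float(max(kept, 1))
    return x * mask * scale


# Usage on dummy 14x14 feature maps (training time only; skip at inference).
x = np.ones((2, 3, 14, 14))
out = drop_block_2d(x, block_size=7, keep_prob=0.8, rng=np.random.default_rng(0))
```

Because whole contiguous regions are removed rather than isolated units, the network cannot recover a dropped feature from its immediate neighbours, which is the motivation for using DropBlock on convolutional layers.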

Example available here

Please cite Kuzushiji-DropBlock in your publications if it helps your research 💯:

@article{2019,

doi = {10.35940/ijrte.b2923.078219},

url = {https://doi.org/10.35940%2Fijrte.b2923.078219},

year = 2019,

month = {jul},

publisher = {Blue Eyes Intelligence Engineering and Sciences Publication - {BEIESP}},

volume = {8},

number = {2},

pages = {3510--3515},

title = {Japanese Historical Character Recognition using Deep Convolutional Neural Network ({DCNN}) with Drop Block Regularization},

journal = {International Journal of Recent Technology and Engineering}

}