bug with frame_step in tf.contrib.signal overlap_and_add inverse_stft #16465

Comments

|

/CC @rryan, can you take a look? |

|

Thanks very much for the detailed bug report @memo! I'll take a look, though I probably won't have time until 2/9. |

|

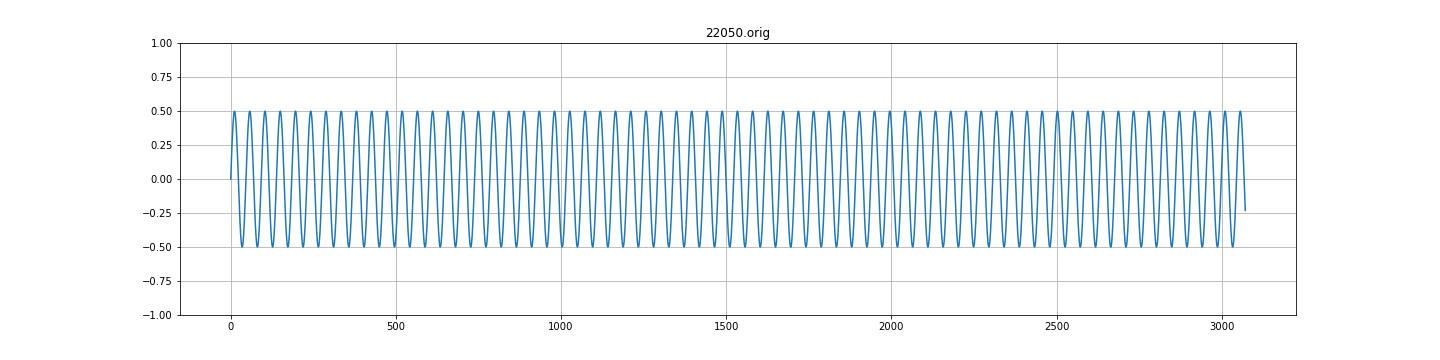

I am having a (probably) related problem when I try to use the istft to reconstruct a signal.

    import functools

    import tensorflow as tf
    from tensorflow.contrib.signal.python.ops import window_ops

    sampling_rate = 44000
    freq = 440
    countOfCycles = 4

    _time = tf.range(0, 1024 / sampling_rate, 1 / sampling_rate, dtype=tf.float32)
    firstSignal = tf.sin(2 * 3.14159 * freq * _time)

    with tf.name_scope('Energy_Spectogram'):
        fft_frame_length = 128
        fft_frame_step = 32
        window_fn = functools.partial(window_ops.hann_window, periodic=True)
        stft = tf.contrib.signal.stft(signals=firstSignal, frame_length=fft_frame_length, frame_step=fft_frame_step,
                                      window_fn=window_fn)
        istft = tf.contrib.signal.inverse_stft(stfts=stft, frame_length=fft_frame_length, frame_step=fft_frame_step,
                                               window_fn=tf.contrib.signal.inverse_stft_window_fn(fft_frame_step,
                                                                                                  forward_window_fn=window_fn))

    with tf.Session() as sess:
        original, reconstructed = sess.run([firstSignal, istft])

    import matplotlib.pyplot as plt

    plt.plot(original)
    plt.plot(reconstructed)
    plt.show()

Note that the problem is worse when you don't pass the window explicitly (in which case the default one is used): you then get amplitude modulation all across the signal. |

|

For anyone else still having problems even when manually passing in the inverse window function to I still had problems reconstructing the original signal, but I managed to fix the issue by manually zero-padding the signal by |

|

I've also noticed that this is mostly a border effect. Zero padding fixes it to some extent, but it's not optimal for my application. |
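One way to see why this is mostly a border effect: without padding, the first and last frame_length - frame_step samples are covered by fewer frames than the rest of the signal, so a window correction derived for the fully overlapped region cannot hold there. A minimal sketch in plain Python, using the frame_length=128 and frame_step=32 from the repro above (frames_covering is a hypothetical helper for illustration, not a TensorFlow function):

```python
def frames_covering(num_samples, frame_length, frame_step):
    """Count how many full frames cover each sample when no padding is applied."""
    counts = [0] * num_samples
    start = 0
    # Only frames that fit entirely inside the signal are used (like pad_end=False).
    while start + frame_length <= num_samples:
        for n in range(start, start + frame_length):
            counts[n] += 1
        start += frame_step
    return counts


counts = frames_covering(num_samples=1024, frame_length=128, frame_step=32)
print(counts[:4])       # first samples: covered by a single frame
print(counts[96:100])   # interior samples: covered by frame_length // frame_step frames
print(counts[-4:])      # last samples: covered by a single frame again
```

Padding the signal by frame_length - frame_step on each side moves these under-covered regions outside the part of the signal that is kept after trimming.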

|

@rryan How is this going? |

|

Thanks a ton @nuchi, @memo, and @andimarafioti for your patience and helpful repro code. As you've summarized nicely, this is caused by at least two issues:

Here is a replacement for

    import tensorflow as tf


    def reconstruct_from_stft(x, frame_length, frame_step):
        name = 'stft'
        center = True
        if center:
            # librosa pads by frame_length, which almost works perfectly here, except for with frame_step 256.
            pad_amount = 2 * (frame_length - frame_step)
            x = tf.pad(x, [[pad_amount // 2, pad_amount // 2]], 'REFLECT')

        # Frame the (padded) signal, apply a periodic Hann window and take the FFT of each frame.
        f = tf.contrib.signal.frame(x, frame_length, frame_step, pad_end=False)
        w = tf.contrib.signal.hann_window(frame_length, periodic=True)
        spectrograms_T = tf.spectral.rfft(f * w, fft_length=[frame_length])

        # Invert with the window that matches frame_step.
        output_T = tf.contrib.signal.inverse_stft(
            spectrograms_T, frame_length, frame_step,
            window_fn=tf.contrib.signal.inverse_stft_window_fn(frame_step))

        # Trim the padding that was added before framing.
        if center and pad_amount > 0:
            output_T = output_T[pad_amount // 2:-pad_amount // 2]
        return name, output_T

Here is a Colab notebook demonstrating. |
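For readers wondering why passing inverse_stft_window_fn(frame_step) removes the gain error: the idea, as far as the TensorFlow documentation describes it, is a synthesis window equal to the forward window with a pointwise amplitude correction. The sketch below assumes that correction is a division by the summed squared forward window over all overlapping frames; inverse_window and steady_state_gain are hypothetical helper names in plain Python, not TensorFlow functions, and the result only applies away from the signal borders and for frame_step smaller than frame_length.

```python
import math


def periodic_hann(frame_length):
    """Periodic Hann window: w[n] = 0.5 - 0.5 * cos(2*pi*n / frame_length)."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * n / frame_length)
            for n in range(frame_length)]


def inverse_window(forward_window, frame_step):
    """Synthesis window w[n] / sum_k w[n + k*frame_step]**2 (illustrative helper)."""
    # Energy of all window copies that land on each position within one hop.
    denom = [0.0] * frame_step
    for n, w in enumerate(forward_window):
        denom[n % frame_step] += w * w
    return [w / denom[n % frame_step] for n, w in enumerate(forward_window)]


def steady_state_gain(analysis_window, synthesis_window, frame_step):
    """Overlap-add gain at each position within one hop, away from the borders."""
    gain = [0.0] * frame_step
    for n, (a, s) in enumerate(zip(analysis_window, synthesis_window)):
        gain[n % frame_step] += a * s
    return gain


forward = periodic_hann(1024)
for step in (128, 256, 300, 512):
    gain = steady_state_gain(forward, inverse_window(forward, step), step)
    print(step, min(gain), max(gain))
```

Under these assumptions the gain stays at 1 (up to rounding) for every frame_step tried, including one that does not divide frame_length, which is consistent with the corrected output reported in this thread.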

|

@rryan How can I use my own wave file? When I use my own wave file, like... instead of input_data = generate_data(duration_secs=1, sample_rate=sample_rate, num_sin=1, rnd_seed=2, max_val=0.5), files like tensorflow.16000.stft.1024.wav, tensorflow.16000.stft.256.wav, tensorflow.16000.stft.768.wav and tensorflow.16000.stft.512.wav have significant noise. How can I apply the reconstruct_from_stft function to my own wave file? I want to try to train an end-to-end noise reduction model like below. Since I'm new to DSP, I'm probably missing something basic. My wave file has a sample rate of 16000 and is 10 seconds long. Thanks in advance. |

|

@kouohhashi, what shape and type is |

|

@rryan input_data was like: [ 0. 0. 0. ... -5206. -4761. -3248.]. BTW, I noticed one thing. Original file: New file: Is "Bits per sample change" the cause of problem? Thanks, |

Thank you @rryan, that worked well. Just wanted to say that what gave me the same results as librosa is: Used:

|

|

@sushreebarsa yes, this issue is from 4 years ago! :) I'm not sure if it is still relevant. |

|

@memo Thank you for your response! |

System information

Describe the problem

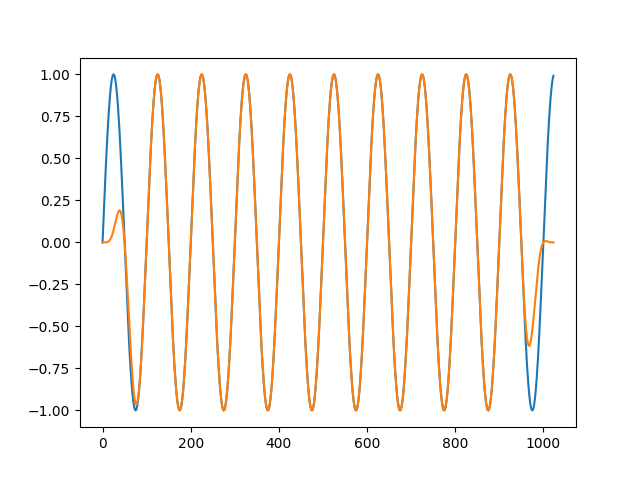

A.) When I create frames from a signal with frame_length=1024 and frame_step=256 (i.e. 25% hop size, 75% overlap) using a hann window (also tried hamming), and then I reconstruct with overlap_and_add, I'd expect the signal to be reconstructed correctly (because of COLA etc). But instead it comes out exactly double the amplitude. I need to divide the resulting signal by two for it to be correct.

B.) If I use STFT to create a series of overlapping spectrograms, and then reconstruct with inverse STFT, again with frame_length=1024 and frame_step=256, the signal is again reconstructed at double amplitude.

I realise why these might be the case (unity gain at 50% overlap for hann, so 75% overlap will double the signal). But is it not normal for the reconstruction function to take this into account? E.g. librosa istft does return signal with correct amplitude while tensorflow returns double.
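The "unity gain at 50% overlap, double at 75% overlap" observation can be checked numerically. The sketch below is plain Python, not TensorFlow code: periodic_hann and overlap_add_gain are hypothetical helpers, and the sum is taken in the steady state (away from the signal borders), assuming frame_step divides frame_length.

```python
import math


def periodic_hann(frame_length):
    """Periodic Hann window: w[n] = 0.5 - 0.5 * cos(2*pi*n / frame_length)."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * n / frame_length)
            for n in range(frame_length)]


def overlap_add_gain(frame_length, frame_step):
    """Sum of all shifted window copies at each position within one hop."""
    window = periodic_hann(frame_length)
    overlaps = frame_length // frame_step
    return [sum(window[r + k * frame_step] for k in range(overlaps))
            for r in range(frame_step)]


gain_50 = overlap_add_gain(1024, 512)  # 50% overlap
gain_75 = overlap_add_gain(1024, 256)  # 75% overlap
print(min(gain_50), max(gain_50))
print(min(gain_75), max(gain_75))
```

The printed values are constant across the hop: close to 1.0 for frame_step=512 and close to 2.0 for frame_step=256, which matches the factor of two described in A.) for a plain overlap-and-add of Hann-windowed frames.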

C.)

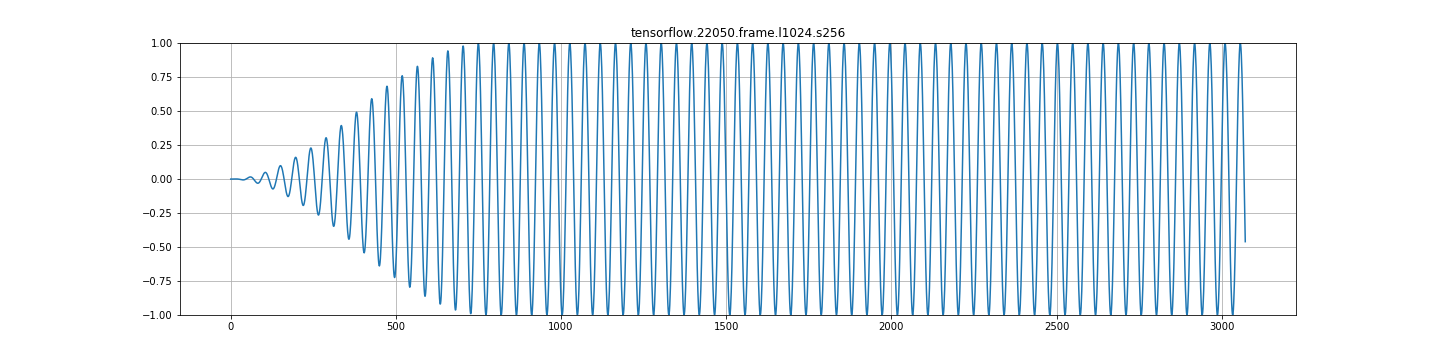

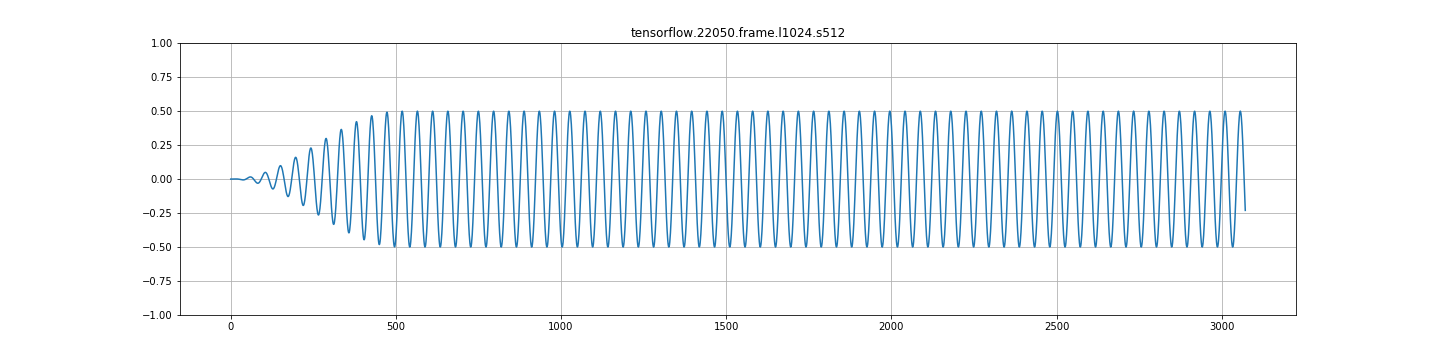

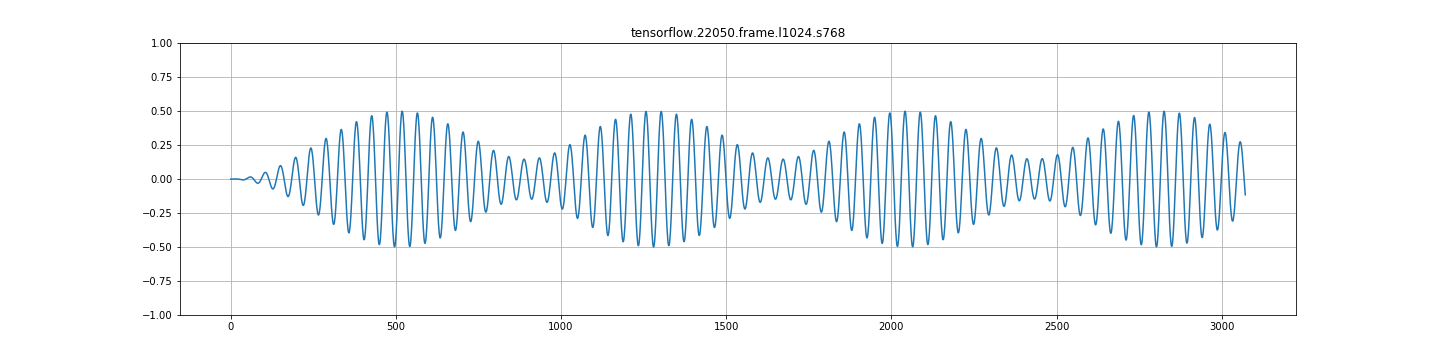

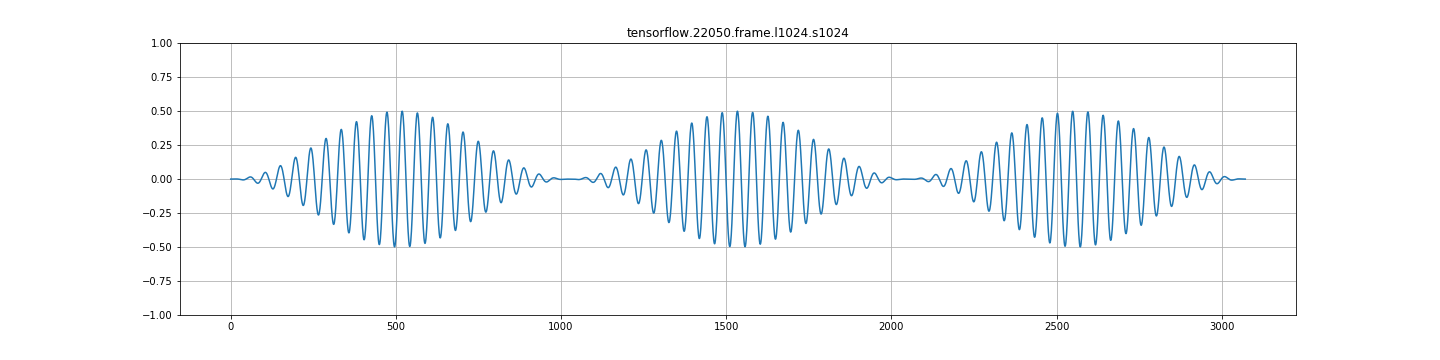

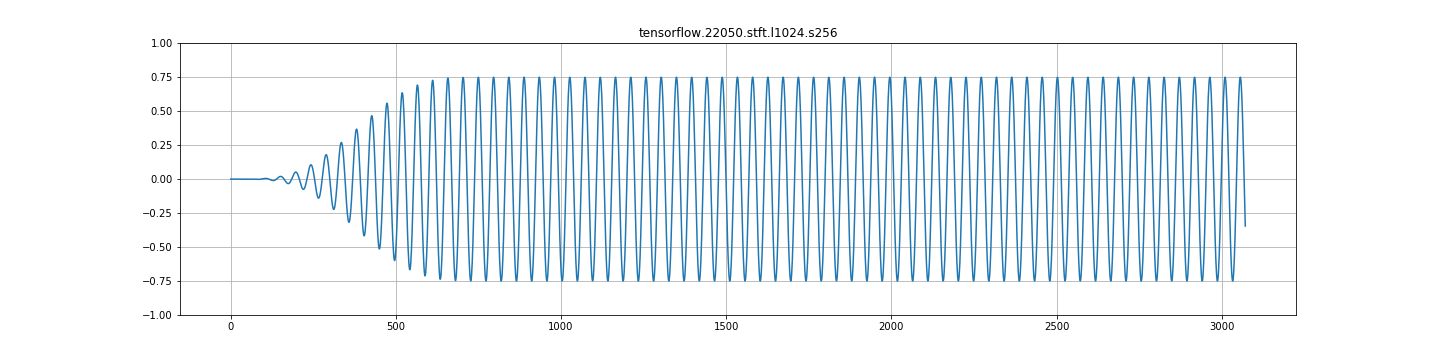

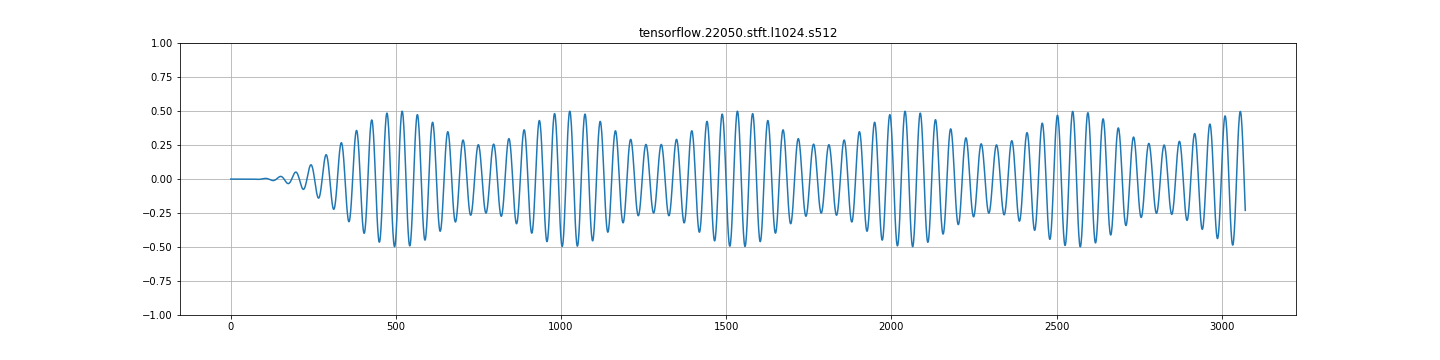

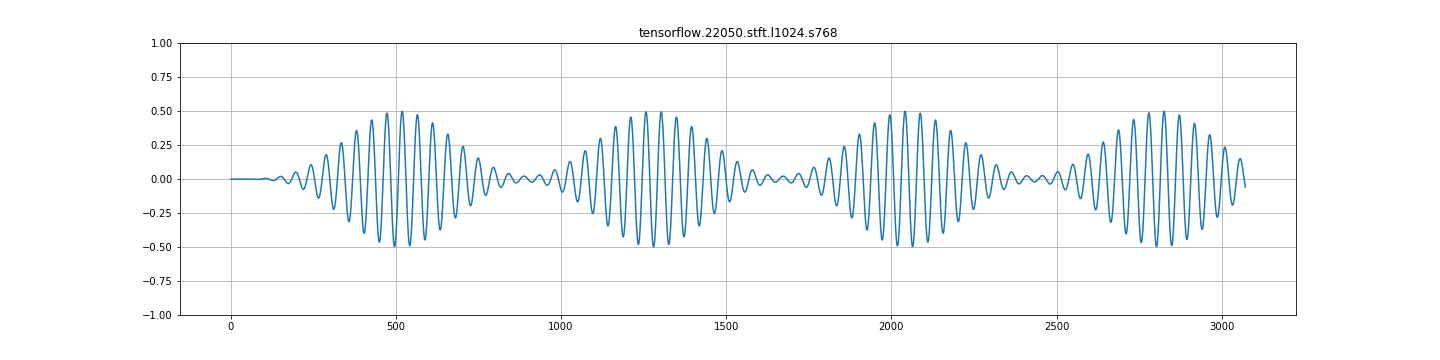

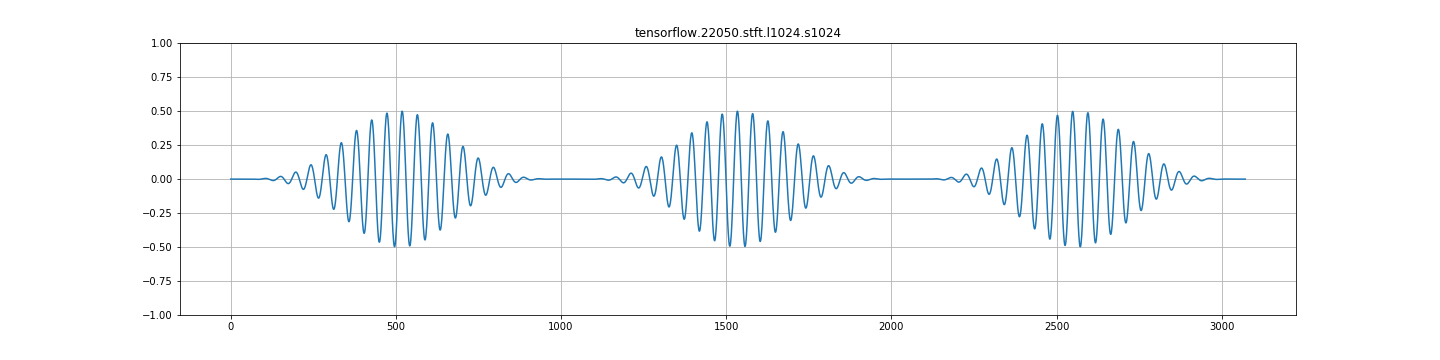

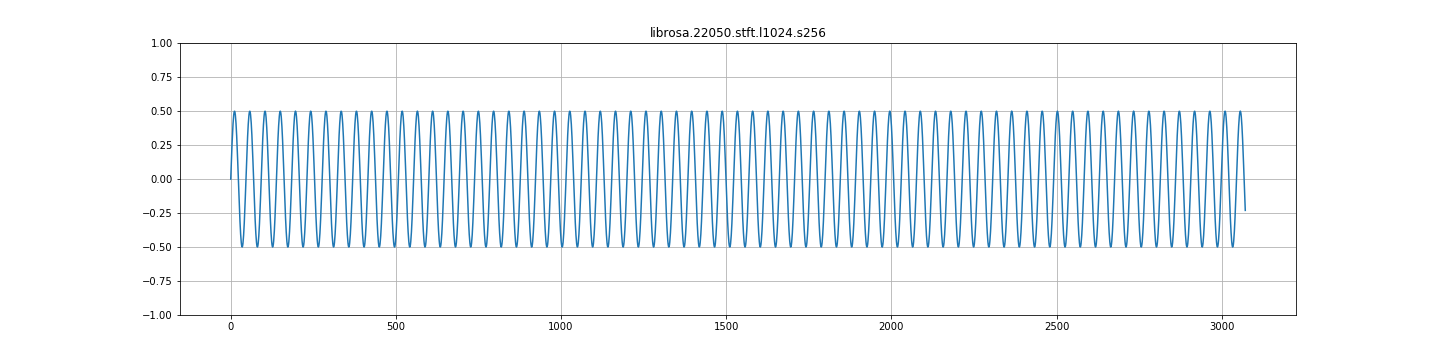

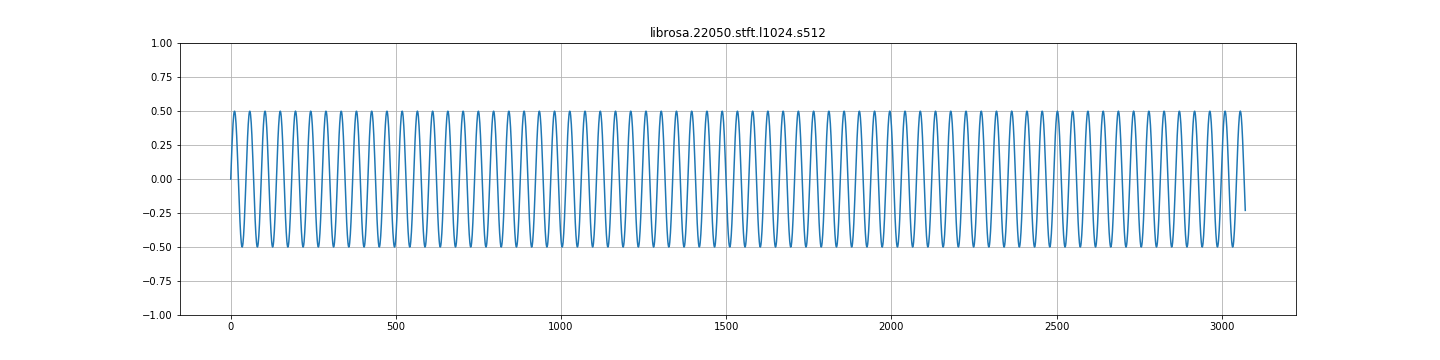

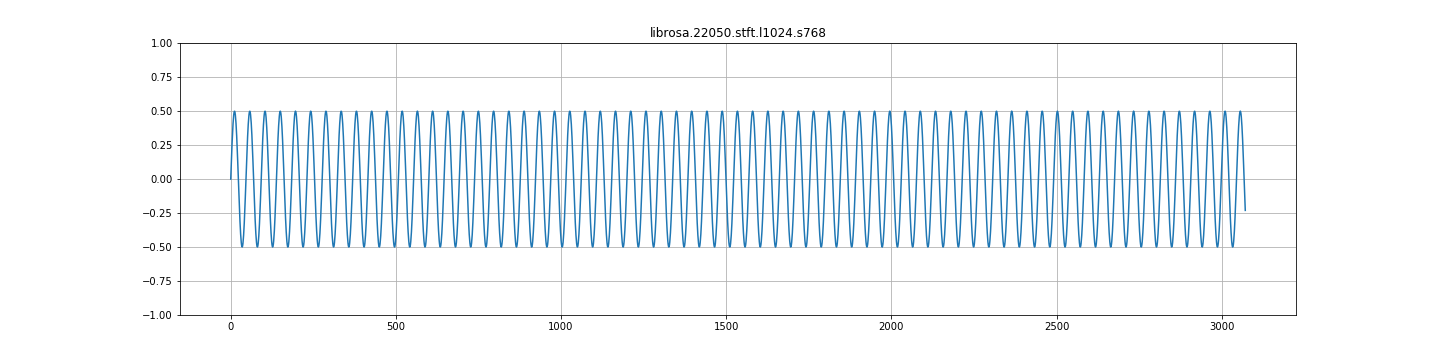

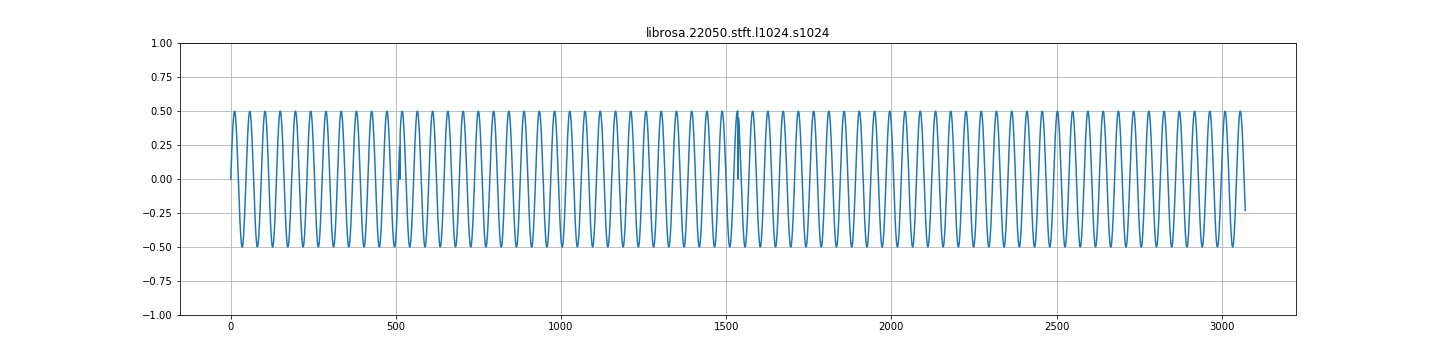

At any other frame_step there is severe amplitude modulation going on. See images below. This doesn't seem right at all.

UPDATE: If I explicitly set window_fn=tf.contrib.signal.inverse_stft_window_fn(frame_step) in inverse_stft the output is correct. So it seems the frame_step parameter in inverse_stft is not being passed into the window function (which is also what the results hint at).

Source code / logs

original data:

tensorflow output from frames + overlap_and_add:

tensorflow output from stft+istft:

librosa output from stft+istft:

tensorflow code:

librosa code:
