
Error converting the model to TF Lite #17684

Comments

|

For example, these commands work successfully, and all the models work on Android using TensorFlowInferenceInterface. |

|

@andrehentz Do you have some insight into this? |

|

frozen_graph.zip |

|

@Neargye I get the same problem when I try to convert mobilenet_v1 to a .tflite model. The mobilenet_v1 I used is from https://github.com/tensorflow/models/tree/master/research/slim/nets. |

|

I have a very similar issue here. The graph froze successfully, but I am getting the same error: I0413 09:45:28.332509 34490 graph_transformations.cc:39] Before Removing unused ops: 282 operators, 471 arrays (0 quantized) |

|

You should not use graph.pbtxt (produced during training) to freeze the graph. You should use an eval.pbtxt to produce the frozen graph instead, just like the zipped files (each of which contains an eval.pbtxt) at https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet_v1.md |
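One rough way to check whether a .pbtxt came from the training graph is to look for optimizer ops and gradient nodes in it. This is only a heuristic sketch: the function name `looks_like_training_graph` and the list of op types are assumptions made for illustration, not part of TensorFlow, and the list is not exhaustive.

```python
import re

# Op types that typically appear only in training graphs.
# Illustrative and NOT exhaustive; extend it for the optimizer you use.
TRAINING_OP_TYPES = {
    "ApplyGradientDescent",
    "ApplyMomentum",
    "ApplyAdam",
    "ApplyRMSProp",
}

# Match the `op: "..."` and `name: "..."` fields of a text-format GraphDef.
_OP_RE = re.compile(r'^\s*op:\s*"([^"]+)"', re.MULTILINE)
_NAME_RE = re.compile(r'^\s*name:\s*"([^"]+)"', re.MULTILINE)


def looks_like_training_graph(pbtxt_text):
    """Return True if the text-format graph contains training-only nodes."""
    ops = set(_OP_RE.findall(pbtxt_text))
    names = _NAME_RE.findall(pbtxt_text)
    has_optimizer_op = bool(ops & TRAINING_OP_TYPES)
    # TF 1.x places gradient nodes under the "gradients/" scope by default.
    has_gradient_node = any(n.startswith("gradients/") for n in names)
    return has_optimizer_op or has_gradient_node
```

If this returns True for the file you pass to freeze_graph, you are probably freezing the training graph rather than an eval graph.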

|

I think this might be the cause of the issue. Unfortunately, the issue template was never filled out, so the issue is closed. I just started working with TensorFlow this week, so I might be wrong. The issue describes an error related to ResolveBatchNormalization input dimensions. I'm getting the same error as above: |

|

I removed the training nodes and got the following error. @GarryLau Can I not use this version of TF Lite because the necessary operations have not been implemented yet? |

|

@GarryLau How can I generate eval.pbtxt? I tried running mobilenet_v1_eval with the latest checkpoint, but it is not generating anything. |

|

@smitshilu @Neargye The method to generate eval.pbtxt is as follows:

    import tensorflow as tf  # TF 1.x API (tf.placeholder, tf.Session, tf.contrib)
    from nets import mobilenet_v1  # from tensorflow/models, research/slim

    slim = tf.contrib.slim

    # NUM_CLASSES must be defined to match your checkpoint.

    def export_eval_pbtxt():
        """Export eval.pbtxt."""
        with tf.Graph().as_default() as g:
            images = tf.placeholder(dtype=tf.float32, shape=[None, 224, 224, 3])
            # Use one of the following methods to create the graph, depending on your setup.
            # _, _ = mobilenet_v1.mobilenet_v1(inputs=images, num_classes=NUM_CLASSES, is_training=False)
            with slim.arg_scope(mobilenet_v1.mobilenet_v1_arg_scope(is_training=False, regularize_depthwise=True)):
                _, _ = mobilenet_v1.mobilenet_v1(inputs=images, is_training=False, depth_multiplier=1.0, num_classes=NUM_CLASSES)
            eval_graph_file = '/home/garylau/Desktop/mobilenet_v1/mobilenet_v1_eval.pbtxt'
            with tf.Session() as sess:
                with open(eval_graph_file, 'w') as f:
                    # Write the inference-mode graph in text format.
                    f.write(str(g.as_graph_def()))

Then call the function to generate eval.pbtxt. |

|

Nagging Assignee @andrehentz: It has been 14 days with no activity and this issue has an assignee. Please update the label and/or status accordingly. |

|

I'm facing the same problem. Can batch normalization currently not be used? |

|

Maybe this applies only to me, but I got it solved. I defined the graph using Keras. The problem was that I called K.set_learning_phase(0) after defining the graph, which led to the above error. Calling K.set_learning_phase(0) before defining the model works :) |
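The ordering described above can be sketched as follows. This is a minimal illustration, assuming TensorFlow 1.x with `tf.keras`, where the backend call is `set_learning_phase`; the function name `build_inference_model` and the tiny network are hypothetical and stand in for the real model.

```python
def build_inference_model():
    """Build a small Keras model with the learning phase fixed to inference."""
    # Imported lazily so the sketch can be read without TensorFlow installed.
    # Assumes TensorFlow 1.x, where tf.keras.backend.set_learning_phase exists.
    import tensorflow as tf

    K = tf.keras.backend

    # Fix the learning phase to 0 (inference) BEFORE any layer is created,
    # so batch normalization is built in inference mode from the start.
    K.set_learning_phase(0)

    # Hypothetical toy network; replace it with the actual model definition.
    model = tf.keras.Sequential([
        tf.keras.layers.Conv2D(8, 3, input_shape=(224, 224, 3)),
        tf.keras.layers.BatchNormalization(),
        tf.keras.layers.GlobalAveragePooling2D(),
        tf.keras.layers.Dense(10, activation="softmax"),
    ])
    return model
```

Setting the phase after the layers exist leaves training-mode batch normalization nodes in the graph, which is likely what the converter then fails on.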

|

Nagging Assignee @andrehentz: It has been 14 days with no activity and this issue has an assignee. Please update the label and/or status accordingly. |

|

I tried the conversion with the provided frozen_graph.zip and it seems to work now. Please reopen if you still encounter issues. |

|

@GarryLau I am able to use the code you provided to create the eval.pbtxt. |

|

@ychen404 |

|

@ychen404 toco(float): toco(QUANTIZED_UINT8): |
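For readers comparing the two modes: a float and a QUANTIZED_UINT8 toco invocation typically differ only in a few flags. The sketch below assembles both argument lists. The helper name `toco_args`, the file names and the mean/std values of 128 are placeholders chosen for illustration; the array names are the usual ones for the slim MobileNet v1 graph. Flag spellings changed between TensorFlow releases (singular and plural forms both existed), so check them against `toco --help` for your version.

```python
def toco_args(input_file, output_file, quantized=False):
    """Return an argv list for a toco conversion of a frozen MobileNet v1 graph."""
    args = [
        "toco",
        "--input_file=" + input_file,
        "--output_file=" + output_file,
        "--input_format=TENSORFLOW_GRAPHDEF",
        "--output_format=TFLITE",
        # Array names below assume the slim MobileNet v1 graph.
        "--input_arrays=input",
        "--output_arrays=MobilenetV1/Predictions/Reshape_1",
        "--input_shapes=1,224,224,3",
    ]
    if quantized:
        # Quantized conversion also needs input statistics; 128/128 are
        # placeholder values and must match your preprocessing.
        args += [
            "--inference_type=QUANTIZED_UINT8",
            "--mean_values=128",
            "--std_values=128",
        ]
    else:
        args.append("--inference_type=FLOAT")
    return args


if __name__ == "__main__":
    print(" ".join(toco_args("frozen_graph.pb", "model_float.tflite")))
    print(" ".join(toco_args("frozen_graph.pb", "model_quant.tflite", quantized=True)))
```

Note that the quantized variant generally expects a graph that already carries quantization ranges, for example one trained with fake-quantization nodes.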

|

Hi GarryLau, It's working now! Thank you very much! |

|

Hey Arun, I think the issue is with the TensorFlow version you are using; there might be a problem with toco_protos if you install TensorFlow from alternate sources. I have tried it myself using 1.15. The simplest way to try it yourself is to use Google Colab and generate an 8-bit quantized tflite file. Hope this answers your question; feel free to reach out to me. Best, |

|

Dear Naveen, in parallel I am setting up quantization-aware training from scratch, to avoid the post-training quantization problems. Kind regards |

|

Hello Arun, thanks for checking back. Could you share details of your input and output, or what you have been using for converter.representative_dataset? I can try to perform 8-bit quantization on my end and will be happy to share my Colab code with you. It is not very different from a system installation of TensorFlow, but Colab gives us a common environment, so we can make sure we are using the official version, and it is easier for a third person like me to troubleshoot than trying to understand your local environment. Trying out quantization-aware training could be a great option, but for some models post-training quantization could be easier (transfer learning or using a pre-trained model). I will be happy to answer if you have more questions. Best, |
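For context, post-training 8-bit quantization with a representative dataset usually looks roughly like the sketch below. It assumes TensorFlow 1.15 or 2.x and a SavedModel on disk; the function names, the input shape and the random calibration data are placeholders for illustration, and real preprocessed samples should be used for calibration.

```python
def representative_dataset(num_samples=100, input_shape=(1, 224, 224, 3)):
    """Yield calibration inputs, one list of arrays per sample."""
    import numpy as np  # imported lazily; required only when calibrating

    for _ in range(num_samples):
        # Random data is a placeholder; use real preprocessed inputs instead.
        yield [np.random.rand(*input_shape).astype(np.float32)]


def convert_to_int8_tflite(saved_model_dir, output_path):
    """Convert a SavedModel to a fully 8-bit quantized .tflite file."""
    import tensorflow as tf  # assumes TF 1.15 or 2.x

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    # The converter calls this with no arguments to collect activation ranges.
    converter.representative_dataset = representative_dataset
    # Restrict the model to integer-only builtin ops.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.uint8
    converter.inference_output_type = tf.uint8

    with open(output_path, "wb") as f:
        f.write(converter.convert())
```

Conversion fails if the model contains ops without an integer implementation, which is one reason to share the exact model and converter settings when asking for help.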

System information

Describe the problem

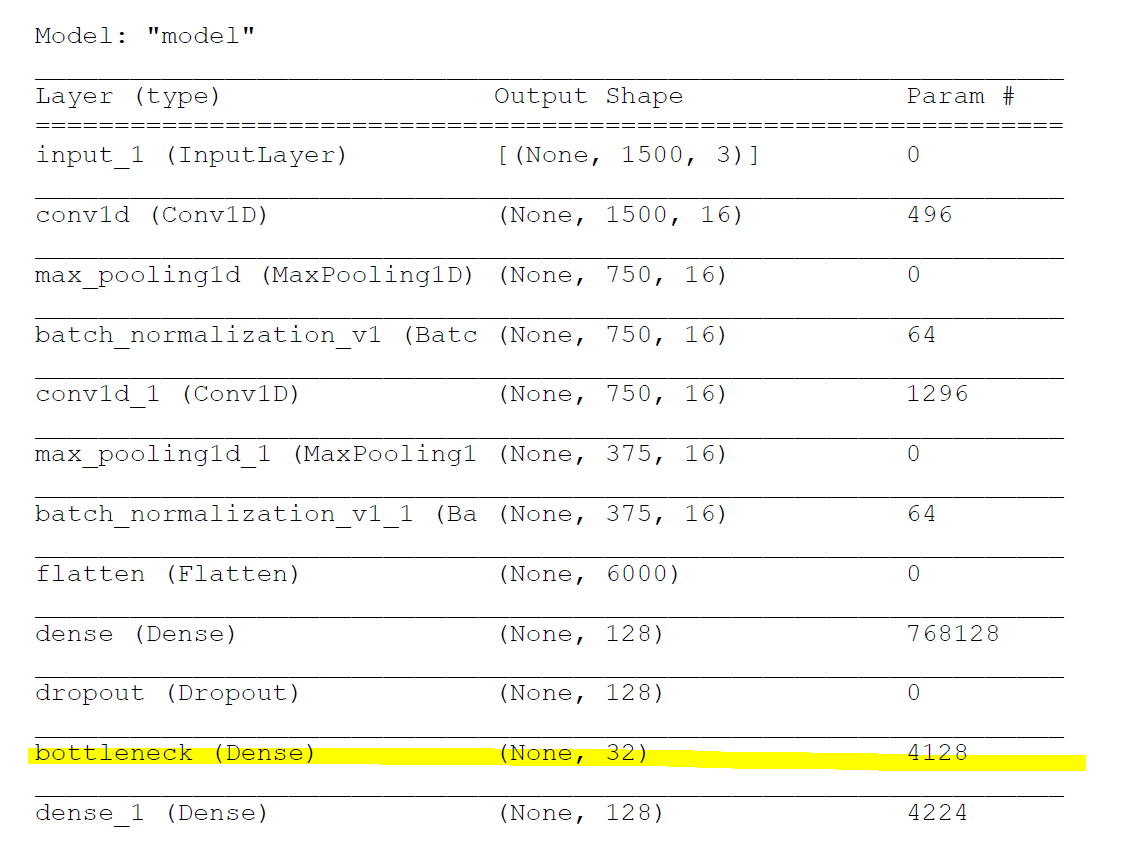

I trained a model and froze it successfully; it works on TensorFlow Android using TensorFlowInferenceInterface.

When I try to convert it to the TF Lite format, I get an error.

Source code / logs
