Can't allocate memory for the interpreter in tflite #19982

Comments

|

Hey Sayan, thanks for following up on this. Could you possibly update the build.gradle file to 'org.tensorflow:tensorflow-lite:0.0.0-nightly' and see if it fixes your issue? It contains a much more recent version of the TFLite library (built every day). Let me know if it does. I have a fix coming up that makes that the default. |

|

Hey Alan, thanks for taking up the issue! |

|

I'll try to reproduce it locally - it could have something to do with updating the Gradle file. #7470 seems relevant |

|

I tried compiling and running the app again today with the nightly build and I get the following error message now: |

|

Hey Sayan! The problem seems to be in the model conversion process (e.g. TOCO) rather than in the demo app. As the error message indicates, one of your conv operators is given an input tensor with no dimensions.

Some background for debugging: suppose that the failing conv op in your model is op B. For some reason, op A has not resized tensor C, so the conv op sees an input tensor with no dims.

Unfortunately, I don't currently have cycles to help debug a model-specific issue. If you're OK sharing your frozen graph file, that'd be great for when someone gets cycles to look into it. |
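To make the op A / tensor C / op B description concrete, here is a toy sketch in plain Python. It is not TFLite code: the `Tensor` class, `prepare_conv`, and `run_prepare` are hypothetical names invented for illustration, and the only thing borrowed from the thread is the idea that a conv op's prepare step rejects an input that does not have 4 dims.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Tensor:
    """Hypothetical stand-in for a tensor; an empty dims list means 'never resized'."""
    name: str
    dims: List[int] = field(default_factory=list)


def prepare_conv(input_tensor: Tensor) -> str:
    """Toy version of the rank check a conv op performs before it can run."""
    if len(input_tensor.dims) != 4:
        raise ValueError(
            f"{input_tensor.name}: dims size != 4 ({len(input_tensor.dims)} != 4)"
        )
    return "ok"


def run_prepare(op_a_resizes_output: bool) -> str:
    """Simulate op A producing tensor C, then op B (conv) preparing on it."""
    c = Tensor("C")
    if op_a_resizes_output:
        # Op A sets the shape of its output, e.g. a batch of one 224x224 RGB image.
        c.dims = [1, 224, 224, 3]
    try:
        return prepare_conv(c)
    except ValueError as err:
        return f"failed to prepare: {err}"


print(run_prepare(True))
print(run_prepare(False))
```

In this sketch the failure surfaces in op B even though the missing step is in op A, which is why the frozen graph and the conversion step are more useful for debugging than the app code.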

|

Nagging Assignee @alanchiao: It has been 14 days with no activity and this issue has an assignee. Please update the label and/or status accordingly. |

|

I'm also having the issue |

|

with the same problem. |

|

@karlzheng: could you provide more details?

If it's a separate error message, please create a separate issue. Thanks! |

|

I had the same issue (Nr. 3). It's probably because you retrained your model with a modified retrain.py script. To complete the tutorial I had to give a variable called input_layer another value (to solve a different issue). |

|

Thank you @bayan100 for sharing how you resolved Nr. 3! Closing this issue since the original reporter hasn't responded for a while. @WhiteSEEKER and @karlzheng, feel free to create a separate issue and link to this one for context. If what Bayan said is true (you'd modified retrain.py), it'd be great if you mentioned it here so others know that that's the cause. |

|

java.lang.NullPointerException: Internal error: Cannot allocate memory for the interpreter: tensorflow/contrib/lite/kernels/conv.cc:191 input->dims->size != 4 (1 != 4) Node 0 failed to prepare. How can I solve this? |
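Since several reports in this thread share the same headline but differ in the kernel file and the numbers in parentheses, a small parser can help when triaging. The sketch below is plain Python with hypothetical names (`CHECK_RE`, `parse_prepare_error`). It assumes the message follows the "file:line check (actual != expected)" layout seen in the error above, and reading the two numbers as "actual" and "expected" is an interpretation of that layout rather than something the message states.

```python
import re

# Matches "<path>.cc:<line> <check> (<actual> != <expected>)" inside a longer message.
CHECK_RE = re.compile(
    r"(?P<file>[\w/]+\.cc):(?P<line>\d+)\s+(?P<check>.+?)\s+"
    r"\((?P<actual>\d+) != (?P<expected>\d+)\)"
)


def parse_prepare_error(message):
    """Return the kernel file, line, failed check and both values, or None."""
    match = CHECK_RE.search(message)
    if match is None:
        return None
    return {
        "kernel": match.group("file").rsplit("/", 1)[-1],
        "line": int(match.group("line")),
        "check": match.group("check"),
        "actual": int(match.group("actual")),
        "expected": int(match.group("expected")),
    }


msg = (
    "Internal error: Cannot allocate memory for the interpreter: "
    "tensorflow/contrib/lite/kernels/conv.cc:191 "
    "input->dims->size != 4 (1 != 4)Node 0 failed to prepare."
)
info = parse_prepare_error(msg)
print(info)
```

For the message above, the parsed fields say that the check in conv.cc saw an input with 1 dimension where 4 were required, which points at the model's input shape rather than at memory.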

|

@luolugithub: there isn't sufficient information from just that error. Does bayan100's comment apply to you? We'd need the .tflite model file at a minimum (or something that reproduces the error), and if it's generated from a working model (e.g. by retraining with retrain.py), it'd be useful to see both the original model and the generation code. Thanks! |

|

Having the same issue when trying to run on Android. Edit: I tried the mobilenet_v2_140_224 model and still get the same error on my phone. |

|

The .pb model looks fine, since its Placeholder still has 4 dimensions, but when I try to open the converted .tflite model, it doesn't find any input array. Still working on it; it may be a problem with TOCO. |

|

Same error here, but only when I'm trying to use post-training quantization.

|

|

@damhurmuller I'm doing the same thing as you and running into issues. Did you manage to find a solution? |

|

@SreehariRamMohan I was using an old version (1.10.*, I think). Now I'm using the nightly version and it works. |

|

@damhurmuller I switched to compiling the tf nightly version but I still get the following error:

|

|

@alanchiao, what version of TFLite is required to use post_training_quant? |

|

E/AndroidRuntime: FATAL EXCEPTION: main

I use tflite_convert because it supports multiple inputs. Below is the command:

If I use toco, it reports this error. Below is the command: |

|

@mstc-xqp: please create a separate issue. While the error message is the same, the underlying cause is different. @damhurmuller: that makes sense. I don't think post_training_quantize was ready for use until the 1.11 release. |
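A quick way to act on the version remark is to compare the installed version against 1.11 before enabling post-training quantization. The sketch below is plain Python. The 1.11 threshold is taken from the comment above, not from official documentation, and `MIN_VERSION`, `major_minor`, and `quantize_probably_supported` are hypothetical names.

```python
import re

# Assumption from the comment above: post_training_quantize is usable from 1.11 on.
MIN_VERSION = (1, 11)


def major_minor(version):
    """Extract (major, minor) as integers from strings like '1.10.1' or '1.11.0-rc1'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def quantize_probably_supported(version):
    """Compare numerically; comparing the raw strings would rank '1.9' above '1.11'."""
    return major_minor(version) >= MIN_VERSION


for candidate in ("1.9.0", "1.10.1", "1.11.0"):
    print(candidate, quantize_probably_supported(candidate))
```

In a real environment the string would come from the installed package (for example `tf.__version__`). Nightly builds may use a different version scheme, so treat this check as a hint only.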

|

I am having the same problem. Has anyone worked out a solution to this problem? |

|

@MATTYGILO: could you please create a separate issue (and CC mstc-xqp if the error is exactly the same)? Note that in mstc's case the error comes from concatenation.cc, whereas for Pierre it comes from conv.cc. These are all model-specific and op-specific issues. |

|

same problem here |

|

I encountered a similar problem when trying to convert pb to tflite using the toco converter (via bazel). I managed to get rid of the problem by passing in the |

System information

Detailed Description

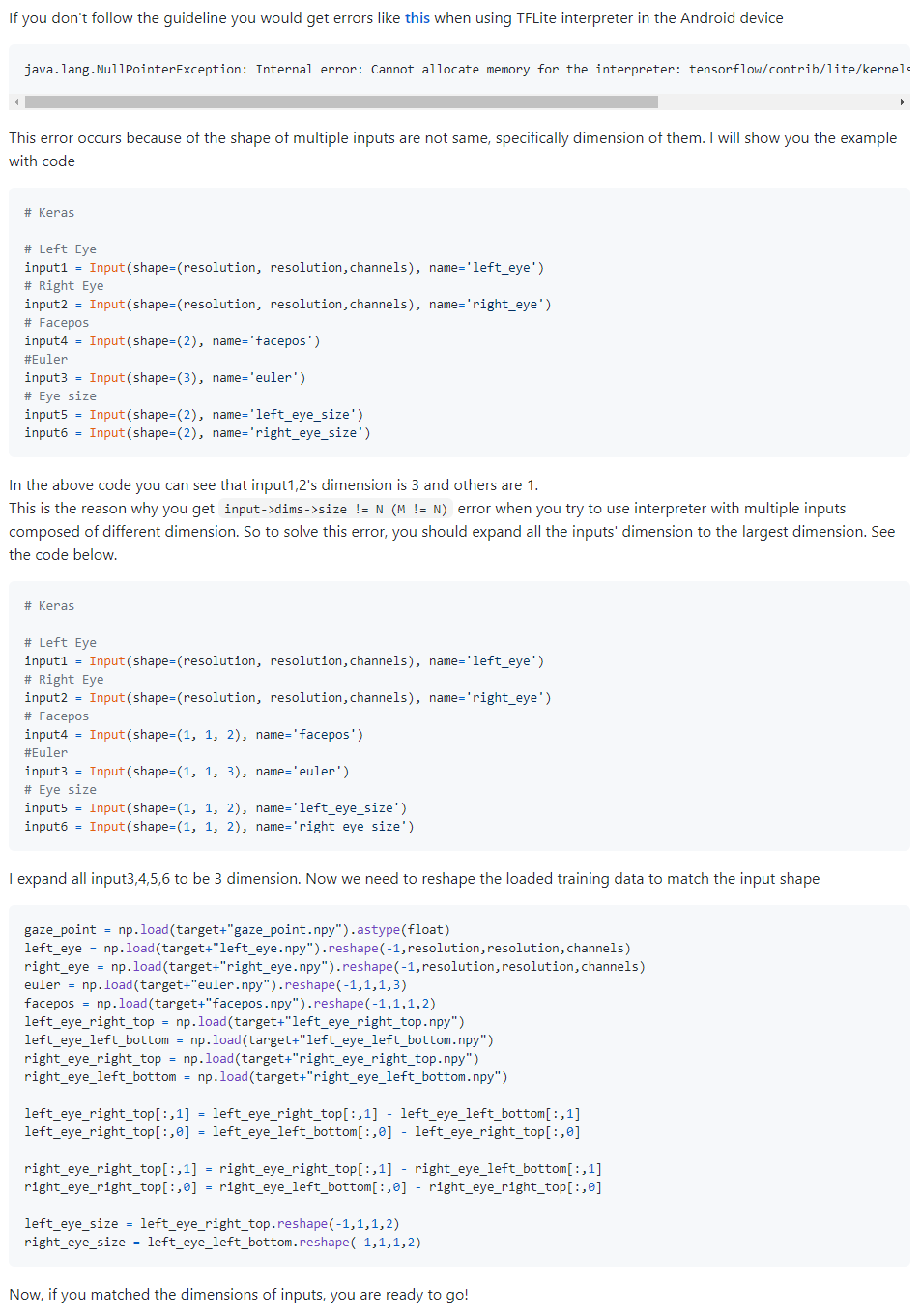

I created a custom TensorFlow model and converted it to TOCO (as described in the tensorflow-for-poets tutorial) and I replaced the old graph.lite file with my custom model and changed nothing else in the code. When I run the app, I get the following runtime error:

Fixes Already Tried

compile 'org.tensorflow:tensorflow-lite:+', but changing it tocompile 'org.tensorflow:tensorflow-lite:0.1.7'doesn't resolve the issue either.--change_concat_input_ranges=falseflag.The text was updated successfully, but these errors were encountered: