No module named '_tensorflow_wrap_toco' #22617

Comments

Please refer to this for exporting a tf.keras file to TensorFlow Lite.

@ymodak I've run the code from "tf.keras file into tensorflow lite", but I get the same error.

Thanks for the information.

@ymodak I've tried tf-nightly and tf-nightly_gpu, but neither worked; I got the same error.

I haven't been able to replicate the results using When I run Can you try running your code in a virtual environment with a fresh installation of TensorFlow (preferably through

+1. I was able to build successfully using tf-nightly too.

I created a completely new Python environment from PyCharm and ran "pip install tf-nightly", then got the same error.
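For anyone repeating the fresh-environment test described in this thread, here is a minimal sketch of the two commands involved. It only builds and prints them. The helper name `fresh_env_commands` and the directory name `toco-test-env` are made up for illustration; the package name `tf-nightly` comes from the comments above.

```python
import os
import sys


def fresh_env_commands(env_dir, package="tf-nightly"):
    """Return the argv lists that create a virtual environment and install `package` into it."""
    # Windows venvs put the interpreter in Scripts\, POSIX ones in bin/.
    if os.name == "nt":
        env_python = os.path.join(env_dir, "Scripts", "python.exe")
    else:
        env_python = os.path.join(env_dir, "bin", "python")
    return [
        [sys.executable, "-m", "venv", env_dir],
        [env_python, "-m", "pip", "install", "--upgrade", package],
    ]


if __name__ == "__main__":
    # Print the commands instead of running them; pass each list to
    # subprocess.run(cmd, check=True) to actually execute it.
    for cmd in fresh_env_commands("toco-test-env"):
        print(" ".join(cmd))
```

Installing through the environment's own interpreter (`python -m pip`) avoids accidentally installing into the base Anaconda environment that appears in the traceback.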

Same issue on Windows 10.

I also have the same issue on Windows 10. I set up a new virtualenv and installed tensorflow nightly with I get the same error with:

I have the same problem when I run on Ubuntu.

@cjr0106: Please file a new issue with reproducible instructions.

You forgot to install tf_nightly. I am using Windows 10. In CMD, please input this:

System information

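Have I written custom code: yes

OS Platform and Distribution: Windows 10

TensorFlow installed from: binary (through pip)

TensorFlow version: 1.11.0

Python version: 3.6.4

CUDA/cuDNN version: None (CPU only)

GPU model and memory: None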

Describe the problem

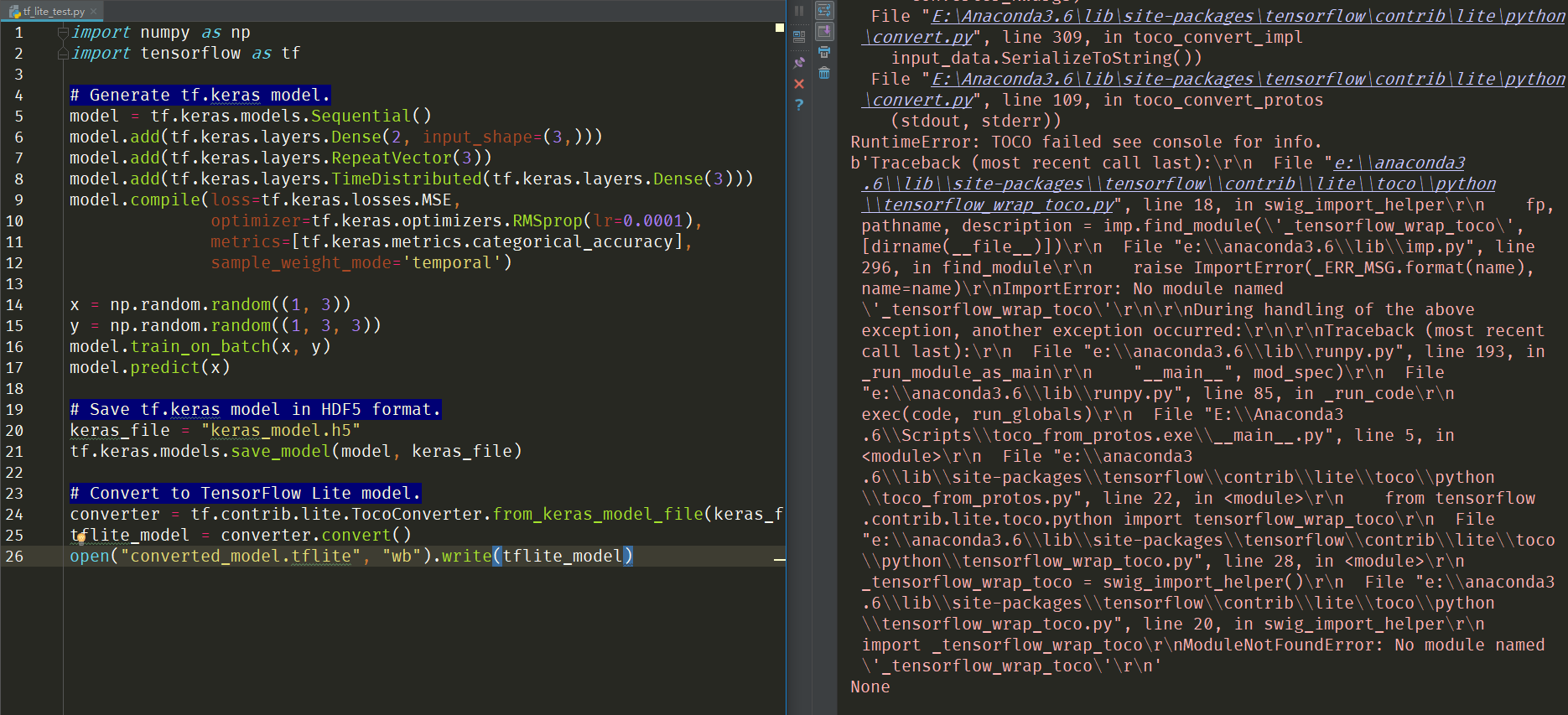

I saved my Keras model to a file and tried to use "lite.TFLiteConverter.from_keras_model_file(...)" and "tflite_model = converter.convert()" to get a TFLite model, but got the error "No module named '_tensorflow_wrap_toco'". There is only a "tensorflow_wrap_toco.py" in "\tensorflow\contrib\lite\toco\python", and no "_tensorflow_wrap_toco" in that folder.

I have updated TensorFlow with "pip install tensorflow --upgrade".
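The observation above (a `tensorflow_wrap_toco.py` wrapper with no `_tensorflow_wrap_toco` next to it) can be checked with a short script. This is only a sketch: it assumes the TensorFlow 1.11 layout from the traceback (`tensorflow/contrib/lite/toco/python`), and the helper names `find_compiled_extension` and `check_installed_tensorflow` are made up for illustration. It looks for a compiled extension file (`.pyd` on Windows, `.so` elsewhere) without importing TensorFlow.

```python
import importlib.machinery
import importlib.util
import os

# Location of the TOCO Python wrapper inside the TensorFlow 1.11 package,
# taken from the traceback in this issue (an assumption for other versions).
TOCO_SUBDIR = os.path.join("contrib", "lite", "toco", "python")


def find_compiled_extension(directory, stem="_tensorflow_wrap_toco"):
    """Return files in `directory` that Python could load as extension module `stem`."""
    if not os.path.isdir(directory):
        return []
    # EXTENSION_SUFFIXES lists the suffixes this interpreter accepts,
    # e.g. ".pyd" on Windows or ".so" on Linux.
    candidates = {stem + suffix for suffix in importlib.machinery.EXTENSION_SUFFIXES}
    return sorted(name for name in os.listdir(directory) if name in candidates)


def check_installed_tensorflow():
    """Report whether the installed tensorflow package ships the compiled module."""
    spec = importlib.util.find_spec("tensorflow")  # locates the package, does not import it
    if spec is None or not spec.submodule_search_locations:
        return "tensorflow is not installed in this environment"
    toco_dir = os.path.join(list(spec.submodule_search_locations)[0], TOCO_SUBDIR)
    found = find_compiled_extension(toco_dir)
    if found:
        return "found: " + ", ".join(found)
    return "no compiled _tensorflow_wrap_toco in " + toco_dir


if __name__ == "__main__":
    print(check_installed_tensorflow())
```

If the script reports that no compiled module is present, the installed wheel does not ship the extension for this platform. That would match the ImportError in the logs, and reinstalling the same wheel would not be expected to change it.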

Source code / logs

Logs:

FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.

from ._conv import register_converters as _register_converters

Using TensorFlow backend.

2018-09-29 21:03:55.936260: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

WARNING:tensorflow:No training configuration found in save file: the model was not compiled. Compile it manually.

Traceback (most recent call last):
  File "C:/Users/wang/Desktop/OpenPoseApp/camera-openpose-keras/demo_camera.py", line 325, in <module>
    save_tf_lite_model()
  File "C:/Users/wang/Desktop/OpenPoseApp/camera-openpose-keras/demo_camera.py", line 311, in save_tf_lite_model
    tflite_model = converter.convert()
  File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\contrib\lite\python\lite.py", line 453, in convert
    **converter_kwargs)
  File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\contrib\lite\python\convert.py", line 342, in toco_convert_impl
    input_data.SerializeToString())
  File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\contrib\lite\python\convert.py", line 135, in toco_convert_protos
    (stdout, stderr))
RuntimeError: TOCO failed see console for info.

b'c:\programdata\anaconda3\lib\site-packages\h5py\__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.\r\n from ._conv import register_converters as _register_converters\r\nTraceback (most recent call last):\r\n File "c:\programdata\anaconda3\lib\site-packages\tensorflow\contrib\lite\toco\python\tensorflow_wrap_toco.py", line 18, in swig_import_helper\r\n fp, pathname, description = imp.find_module('_tensorflow_wrap_toco', [dirname(__file__)])\r\n File "c:\programdata\anaconda3\lib\imp.py", line 297, in find_module\r\n raise ImportError(_ERR_MSG.format(name), name=name)\r\nImportError: No module named '_tensorflow_wrap_toco'\r\n\r\nDuring handling of the above exception, another exception occurred:\r\n\r\nTraceback (most recent call last):\r\n File "c:\programdata\anaconda3\lib\runpy.py", line 193, in _run_module_as_main\r\n "__main__", mod_spec)\r\n File "c:\programdata\anaconda3\lib\runpy.py", line 85, in _run_code\r\n exec(code, run_globals)\r\n File "C:\ProgramData\Anaconda3\Scripts\toco_from_protos.exe\__main__.py", line 5, in <module>\r\n File "c:\programdata\anaconda3\lib\site-packages\tensorflow\contrib\lite\toco\python\toco_from_protos.py", line 22, in <module>\r\n from tensorflow.contrib.lite.toco.python import tensorflow_wrap_toco\r\n File "c:\programdata\anaconda3\lib\site-packages\tensorflow\contrib\lite\toco\python\tensorflow_wrap_toco.py", line 28, in <module>\r\n _tensorflow_wrap_toco = swig_import_helper()\r\n File "c:\programdata\anaconda3\lib\site-packages\tensorflow\contrib\lite\toco\python\tensorflow_wrap_toco.py", line 20, in swig_import_helper\r\n import _tensorflow_wrap_toco\r\nModuleNotFoundError: No module named '_tensorflow_wrap_toco'\r\n'None
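The TOCO subprocess output above arrives as one bytes literal with `\r\n` escapes, which hides the actual failure at the very end. Here is a small sketch for pulling out the final exception line from such a capture. The helper name `last_exception_line` is hypothetical, and the sample is a shortened version of the stderr above with the file path replaced by "...".

```python
def last_exception_line(stderr_bytes):
    """Return the final unindented, non-empty line of a captured traceback."""
    text = stderr_bytes.decode("utf-8", errors="replace")
    # splitlines() handles both "\n" and "\r\n" line endings.
    for line in reversed(text.splitlines()):
        if line.strip() and not line[0].isspace():
            return line
    return None


# Shortened sample based on the stderr shown above (file path elided to "...").
sample = (
    b"Traceback (most recent call last):\r\n"
    b'  File "...", line 20, in swig_import_helper\r\n'
    b"    import _tensorflow_wrap_toco\r\n"
    b"ModuleNotFoundError: No module named '_tensorflow_wrap_toco'\r\n"
)
print(last_exception_line(sample))
```

The line this surfaces is the `ModuleNotFoundError` for `_tensorflow_wrap_toco`. That is the root cause sitting behind the generic "TOCO failed see console for info." RuntimeError.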
