TensorBoard does not display more than 100 bounding boxes #30464

Comments

For faster resolution, please post this issue on TF-tensorboard.

@ravikyram I believe this is an issue with tensorflow/models/research/object_detection. I tried to debug it, but I lack a lot of context here. Can you please reassign it to one of the owners listed here? Thanks!

Hi, I've made a config and tfrecords for this issue and put them on my drive. You can download them, change the paths in pipeline.config, and run training (in run.sh, set CUDA_VISIBLE_DEVICES and the paths). Can I help in some way to solve this issue?

I've tried the tensorpack lib and successfully got results for an image with more than 100 bboxes.

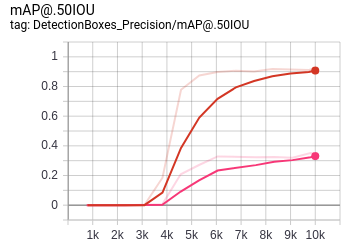

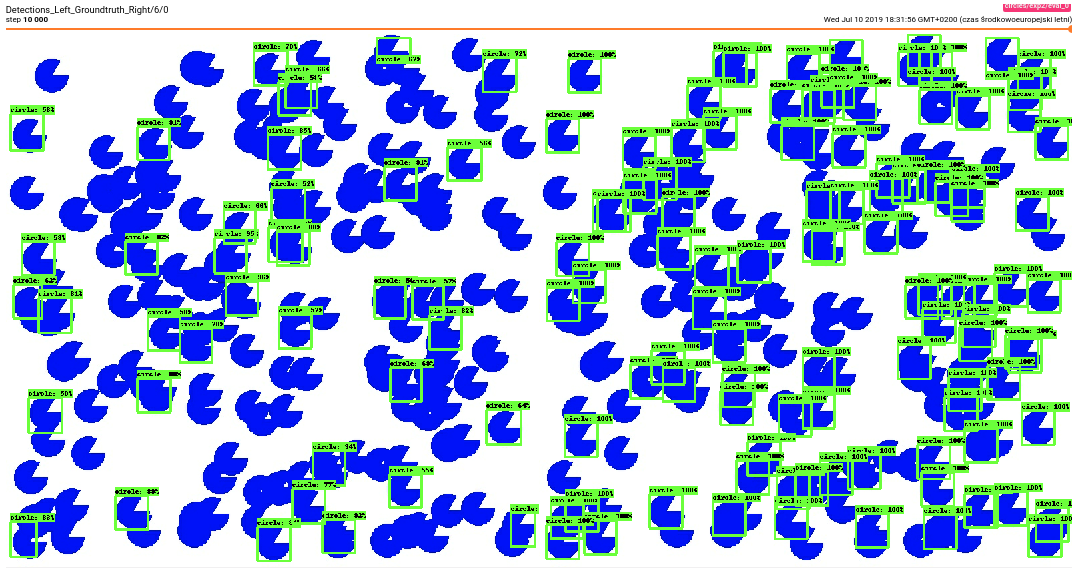

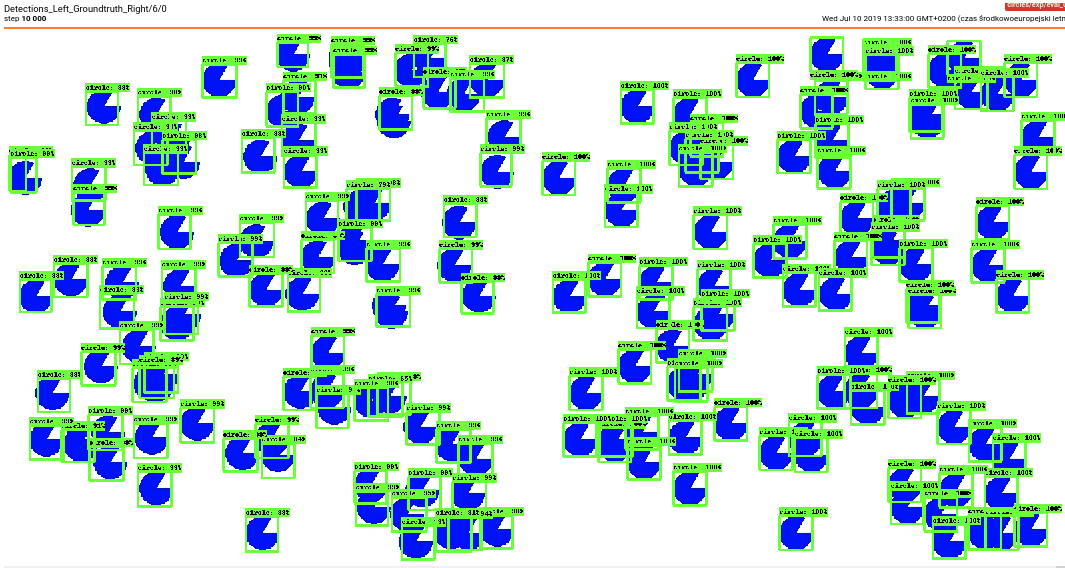

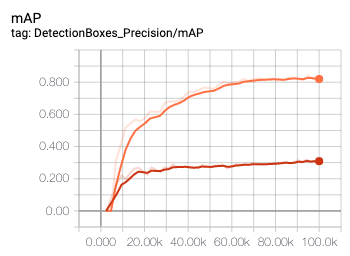

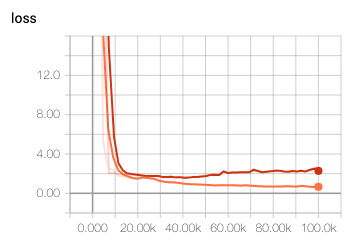

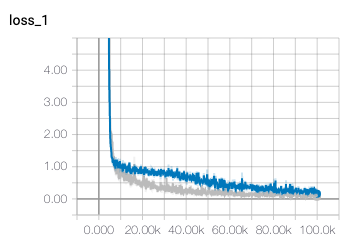

I've struggled with the same issue and done some investigation on this topic. It looks like the issue occurs only in the evaluation step of the training process.

Experiment 1 - training on pictures with fewer than 100 objects

I ran an experiment where the training set had only images with fewer than 100 objects. Training went fine, with mAP around 0.82. Then, when exporting the model for inference, I changed pipeline.config, in section [...]. I checked the predictions for a photo containing over 100 objects. The number of predictions was correct: it marked all objects.

Experiment 2 - training on pictures with more than 100 objects

I then ran the same experiment, but with a training set in which some images had over 100 objects, and parameter [...].

Metrics

Legend: Gray - training, images with fewer than 100 objects

Evaluator Issue

I've checked the evaluator source code. The COCO evaluator has a fixed value of 100 maximum detections (link to source code). As a result, mAP and other metrics are calculated incorrectly, because the evaluation dataset contains examples whose ground truth has over 100 objects, while only 100 detections are kept during evaluation.

Any ideas how to tackle the maxDets limit in the COCO evaluator? I've already tried setting the maxDets parameter to [1, 10, 300] for box_evaluator (after this line), but then mAP was calculated as -1.000, so something was not working correctly.
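A possible explanation for the -1.000 result, stated as an assumption rather than a confirmed diagnosis: if the summary step of the evaluator looks up results for a hardcoded limit of 100 detections, then replacing 100 with 300 in maxDets leaves nothing to average, and a sentinel of -1 is reported. The sketch below is a toy model of that lookup in plain Python; `summarize_ap`, its arguments, and the precision numbers are all hypothetical and are not the evaluator's actual API.

```python
from statistics import mean


def summarize_ap(precision_by_setting, configured_max_dets, requested_max_dets=100):
    """Toy stand-in for a summary step that selects results by max-detections value.

    precision_by_setting holds one list of precision values per entry in
    configured_max_dets. Returns the mean precision for requested_max_dets,
    or -1.0 when that limit was never configured (a "no valid entries" sentinel).
    """
    # Indices of the configured limits that equal the requested one.
    matching = [i for i, m in enumerate(configured_max_dets) if m == requested_max_dets]
    # Collect valid precision values (anything above -1) for those indices.
    values = [p for i in matching for p in precision_by_setting[i] if p > -1]
    return mean(values) if values else -1.0


# Made-up precision values, one list per configured limit.
precisions = [[0.2, 0.3], [0.5, 0.6], [0.8, 0.9]]

# Default limits: the hardcoded request for 100 finds a match.
default_ap = summarize_ap(precisions, [1, 10, 100])

# Limits changed to [1, 10, 300]: the request for 100 matches nothing.
changed_ap = summarize_ap(precisions, [1, 10, 300])

# Same limits, but the summary now asks for the limit that was configured.
fixed_ap = summarize_ap(precisions, [1, 10, 300], requested_max_dets=300)

print(default_ap, changed_ap, fixed_ap)
```

If this is indeed what happens, the summary would need to request the same limit that was configured (here 300) instead of 100 for the larger limit to produce a real value.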

Hi There, We are checking to see if you still need help on this, as you are using an older version of tensorflow which is officially considered end of life. We recommend that you upgrade to the latest 2.x version and let us know if the issue still persists in newer versions. Please open a new issue for any help you need against 2.x, and we will get you the right help. This issue will be closed automatically 7 days from now. If you still need help with this issue, please provide us with more information.

System information

v1.12.0-0-ga6d8ffae09 1.12.0

(I've also tried b'v1.12.0-6120-gdaab2673f2' 1.13.0-dev20190116 and results are the same)

Describe the current behavior

I run training

This is only a one-step training run; its goal is to visualize bounding boxes in the TensorBoard Images tab.

On the "IMAGES" tab in tensorboard I don't see all bounding boxes which are in the tfrecord file.

Options provided in the pipeline.config

have no effect on this.

Describe the expected behavior

I see all bounding boxes (ground truth) in TensorBoard > Images (pictures on the right).

Code to reproduce the issue

I've created a repo where you can find
