Cannot convert model containing categorical_column_with_vocabulary_list op #37844

Comments

|

Was able to reproduce the issue with TF v2.2.0-rc1 and TF-nightly. Please find the attached gist. Thanks! |

|

Thanks for the prompt response to this issue. I also read the official document about operator compatibility, saying that, ops such as EMBEDDING_LOOKUP, HASHTABLE_LOOKUP, |

|

EMBEDDING_LOOKUP is already supported as a TensorFlow Lite builtin op. The experimental hashtable op kernels live in the following directory: https://github.com/tensorflow/tensorflow/tree/master/tensorflow/lite/experimental/kernels. The AddHashtableOps Python module is defined here: https://github.com/tensorflow/tensorflow/blob/master/tensorflow/lite/experimental/kernels/BUILD#L177 If you want to write your own custom ops, please check out https://www.tensorflow.org/lite/guide/ops_custom. As for the conversion error below, we are currently working on a fix. I will leave a comment when it is fixed in the nightly build. |

|

@abattery Hi Jae Sung, thanks a lot for your feedback. I had seen your hashtable op commits in the TensorFlow project, so I was looking forward to your reply when I created this issue :) After running the above example, I still have a couple of questions.

Thank you again! |

|

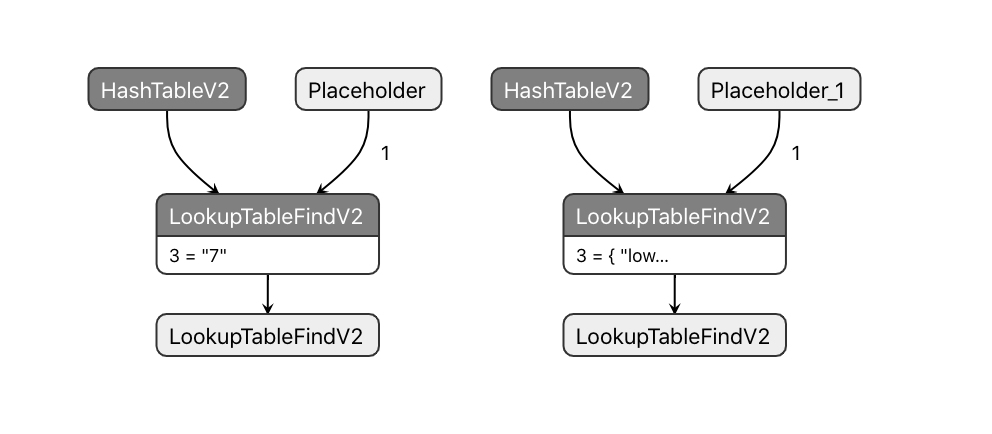

Hi, I have tested the above sample with both TF 1.15.0 and TF 2.2.0rc2. As you said, the model generated with TF 1.15.0 does not contain a LookupTableImportV2 node. In your case, is it okay to use a TF 2.x version? Thank you for reporting a bug. The tf.control_dependencies method creates an explicit dependency between ops in a graph. Here it is required because the LookupTableImportV2 op node must run before any LookupTableFindV2 op node. Best regards, |
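To make the ordering point concrete, here is a small toy sketch in plain Python. It is not TensorFlow code and does not reflect how TensorFlow schedules ops internally; the function name `execution_order` and the dictionary layout are made up for illustration. It only shows that, without a control edge, nothing forces LookupTableImportV2 to run before LookupTableFindV2, because both depend only on the table handle.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def execution_order(data_deps, control_deps):
    """Return one valid run order for a toy op graph.

    Both arguments map an op name to the set of ops that must run before it.
    Control edges carry no data; they only constrain ordering.
    """
    merged = {}
    for deps in (data_deps, control_deps):
        for op, preds in deps.items():
            merged.setdefault(op, set()).update(preds)
    return list(TopologicalSorter(merged).static_order())


# Data edges: both the import op and the find op consume the table handle.
data_deps = {
    "LookupTableImportV2": {"HashTableV2"},
    "LookupTableFindV2": {"HashTableV2"},
}

# Control edge: the find op must wait until the table has been filled.
control_deps = {"LookupTableFindV2": {"LookupTableImportV2"}}

order = execution_order(data_deps, control_deps)
print(order)
```

If the control edge is dropped, either order of the two lookup ops is a valid topological order, which is why the dependency has to be stored in the exported model.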

|

|

Removed AddHashtableOps support in Python temporarily. However, you can still add this to an interpreter in C++. |

|

How to include hashtable ops in your TFLite

Currently, hashtable ops are in the experimental stage. You need to add hashtable ops manually by including the following dependency:

Then your op resolver should register them with the following statements: |

|

@abattery Hi Jaesung, thanks a lot for your reply. Since exporting and converting under TF 1.15.0 does not succeed, I'm wondering whether a model exported from a TF version below 2.x can be converted correctly at all.

Take the lookup example you posted previously: I first tried to export the model using SavedModelBuilder with |

|

The model needs to store an explicit dependency between the LookupTableImportV2 op and the other lookup ops. However, it seems that TF v1.15.0 does not store that information. If the information is already lost, the TF 2.2 converter cannot recover it. |

|

@abattery May I ask for some details about the dependency? Is it handled in the Python API or in the C++ kernels? I'm wondering whether I could get this working by refactoring some TensorFlow code. |

|

Could you try setting drop_control_dependency=False in the TFLiteConverterV1? |
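A minimal sketch of that suggestion, assuming the model was exported as a SavedModel and that TensorFlow is installed. The helper name `convert_keeping_control_deps` and its argument are hypothetical; `drop_control_dependency` is the attribute on the V1 converter. Whether this is enough to make the conversion in this issue succeed is not confirmed by the thread.

```python
def convert_keeping_control_deps(saved_model_dir):
    """Convert a SavedModel with the V1 converter, keeping control dependencies.

    saved_model_dir is a hypothetical path to a model exported with TF 1.x.
    """
    # Imported lazily so the helper can be defined without TensorFlow present.
    import tensorflow as tf

    converter = tf.compat.v1.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    # The V1 converter drops control dependencies silently by default.
    converter.drop_control_dependency = False
    return converter.convert()
```

The returned value is the serialized TFLite model, which can be written to a file in binary mode.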

|

@abattery is this still the case?

If it's been added back, do you have any example code showing how to use it from Python? As mentioned in this Stack Overflow post, I was able to add |

|

Please take a look at https://github.com/tensorflow/tensorflow/blob/master/tensorflow/lite/kernels/hashtable/README.md in order to use the provided hash table op kernels as a custom op library. |

|

Was able to replicate the issue with TF v2.5. Please find the gist here. Thanks! |

|

The converter error above goes away when the saved model converter is chosen.
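For reference, choosing the saved model converter from Python might look like the sketch below. The helper name `convert_from_saved_model` and its parameters are hypothetical, and the `allow_custom_ops` switch is included only as an assumption, in case the hashtable kernels have to be supplied as a custom op library at inference time.

```python
def convert_from_saved_model(saved_model_dir, allow_custom_ops=False):
    """Convert a TF 2.x SavedModel to TFLite via the saved model converter.

    saved_model_dir is a hypothetical path to the exported model directory.
    """
    # Imported lazily so the helper can be defined without TensorFlow present.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    # Assumption: only needed if the hashtable ops are provided as custom ops.
    converter.allow_custom_ops = allow_custom_ops
    return converter.convert()
```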

System information

Command used to run the converter or code if you’re using the Python API

If possible, please share a link to Colab/Jupyter/any notebook.

The output from the converter invocation

Also, please include a link to the saved model or GraphDef

Failure details

Cannot convert a Tensor of dtype resource to a NumPy array.

According to my analysis, this might be caused by some HashTable ops, which create table handles. My additional question is whether the TFLite converter can convert a model containing hashtable initialization ops such as HashTableV2 and LookupTableImportV2. Thank you.

Any other info / logs: Full logs
