
Tensorflow operations : Invalid data type according to Tensorflow Profiler #45946

Comments

To resolve the out-of-memory error, please try any one of the methods to limit GPU memory shown in this guide. Also, please go through these guides on the TensorBoard profiling tool and the memory profile summary for more information. Thanks!
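For readers without the linked guide at hand, here is a minimal sketch of the two usual ways to limit GPU memory in TensorFlow 2.x: enabling memory growth, or setting a hard per-GPU cap. The helper name `configure_gpu_memory` and its `memory_limit_mb` parameter are hypothetical, and the sketch assumes TF 2.4 or later, where `tf.config.set_logical_device_configuration` is available. It is not taken from this thread.

```python
import tensorflow as tf


def configure_gpu_memory(memory_limit_mb=None):
    """Limit GPU memory use; hypothetical helper, not from the issue.

    With memory_limit_mb=None, memory growth is enabled so TensorFlow
    allocates GPU memory on demand. Otherwise each GPU is capped at the
    given number of megabytes. Must run before any GPU op is executed.
    Returns the number of GPUs that were configured.
    """
    gpus = tf.config.list_physical_devices("GPU")
    for gpu in gpus:
        if memory_limit_mb is None:
            # Allocate memory incrementally instead of grabbing it all.
            tf.config.experimental.set_memory_growth(gpu, True)
        else:
            # Expose one logical device with a fixed memory budget.
            tf.config.set_logical_device_configuration(
                gpu,
                [tf.config.LogicalDeviceConfiguration(memory_limit=memory_limit_mb)],
            )
    return len(gpus)
```

Call `configure_gpu_memory()` (or, for example, `configure_gpu_memory(4096)`) once at the very start of the program. TensorFlow raises a `RuntimeError` if the GPUs have already been initialized. On a machine without a GPU the function simply returns 0.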

I have already tried enabling memory growth, but the problem is still there.

That's what I've done, and that's what I explained in the first post, which also includes a minimal code sample to reproduce the results with the profiler; you may have missed it. I'm curious to know whether other people get the same results with this code (run the code and open the directory my_logdir with the profiler).
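The original code sample is not reproduced in this copy of the thread. As a stand-in, here is a rough sketch of how a profiler trace of a convolution can be written to a log directory. The function name `profile_conv_step`, the layer size and the input shape are all assumptions chosen for illustration; this is not the author's reproduction script.

```python
import tensorflow as tf


def profile_conv_step(logdir="my_logdir"):
    """Run a few Conv2D forward passes under the TensorFlow profiler.

    Hypothetical stand-in for the missing sample: the layer and input
    sizes are arbitrary. Returns the output shape as a tuple.
    """
    layer = tf.keras.layers.Conv2D(filters=8, kernel_size=3, padding="same")
    x = tf.random.normal((4, 32, 32, 3))

    # Start collecting a trace; results are written under logdir.
    tf.profiler.experimental.start(logdir)
    try:
        for _ in range(3):
            y = layer(x)
    finally:
        # Always stop the profiler so the trace is flushed to disk.
        tf.profiler.experimental.stop()
    return tuple(y.shape)
```

After running `profile_conv_step()`, the trace can be opened with `tensorboard --logdir my_logdir`, assuming the TensorBoard profile plugin is installed, and inspected in the memory profile tab.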

I did not face any out-of-memory errors when running the code; however, I did get similar results with the memory profiler on TF v2.3, TF v2.4 and TF-nightly. Please find the gist of it here. Thanks!

@RocaVincent,

@rmothukuru

Hi,

I have this issue with Conv1D as well. My model should be using under 200 MB, but I get an INVALID shape when profiling and the heap usage spikes to 4 GB.

I was able to replicate the issue in TF v2.5. Please find the gist here. Thanks!

I got almost exactly the same result with Conv2D. About 4 GB of memory is consumed, even though the network needs no more than 200 MB to run. Has anybody found a solution, or does anyone have an idea about this?

I have a similar issue. Any update? @ckluk-github

Running into the same issue as well.

Hi,

I use TensorFlow 2.5 installed from source with CUDA 11.1 and cuDNN 8 on Ubuntu 20.04. My GPU is an NVIDIA Quadro RTX 6000.

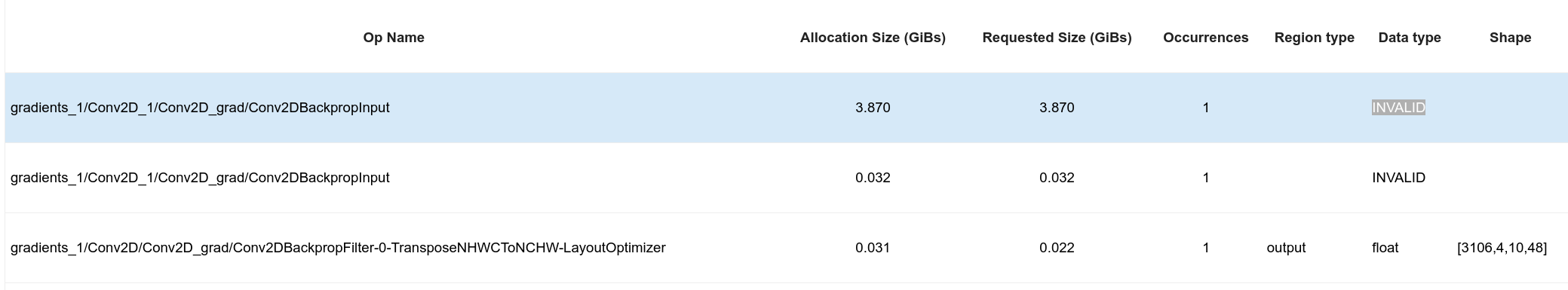

I have run into out-of-memory problems on the GPU when training a CycleGAN model. To track down memory leaks in my code, I ran it with a profiler trace, and TensorBoard now shows strange results in the memory breakdown table (memory profile tab): some TensorFlow operations have an "INVALID" data type, no region type and no shape. This suggests there are bugs in some TensorFlow operations.

Above I put a minimal code sample that reproduces this kind of error.

With this code, I get the following results in the memory profile tab:

How do you interpret these results?
