BatchToSpaceND and SpaceToBatchND ERROR_GPU_NOT_COMPATIBLE #59870

Comments

This is my model. You can run the conversion with my code to reproduce my result.

Hi @ShashmurinSergey, I was able to replicate the issue in Colab using TF v2.10, TF v2.11 and tf-nightly (2.13.0.dev20230305). Please find the gists here (2.10), here (2.11) and here (tf-nightly). Thank you!

@synandi Yes, the reproduction is correct. Thank you!


Sorry for the delayed response. The Also, as given in the documentation,

The list of ops in the model that are compatible with the GPU can be found using the Analyzer. Please find the gist on the usage of the tool for your use case. Thanks.
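A minimal sketch of the Analyzer check mentioned above, assuming a recent TensorFlow 2.x release is installed. The toy model, its input shape, and the function name `analyze_dilated_conv` are hypothetical and are not the model from this issue. TensorFlow is imported inside the function so that the definition itself loads without it.

```python
def analyze_dilated_conv(dilation_rate=4):
    """Build a toy Conv2D model, convert it to TFLite, and print the Analyzer report."""
    import tensorflow as tf  # lazy import: only needed when the function is called

    # Hypothetical toy model: a single Conv2D layer with the given dilation rate.
    inputs = tf.keras.Input(shape=(64, 64, 3))
    outputs = tf.keras.layers.Conv2D(
        8, 3, padding="same", dilation_rate=dilation_rate
    )(inputs)
    model = tf.keras.Model(inputs, outputs)

    # Convert in memory; no file is written.
    tflite_bytes = tf.lite.TFLiteConverter.from_keras_model(model).convert()

    # Prints the op list and flags ops the GPU delegate is not expected to support.
    tf.lite.experimental.Analyzer.analyze(
        model_content=tflite_bytes, gpu_compatibility=True
    )
    return tflite_bytes
```

Calling `analyze_dilated_conv(4)` and then `analyze_dilated_conv(1)` should make it possible to compare the two reports. The exact ops listed may vary between TensorFlow versions.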

Hi @pjpratik! Is there any way for me to convert this model to TFLite with GPU support? Can I somehow remove these layers?

They are commonly used in the conv2d transpose operation, AFAIK. Have you tried the latest TF 2.11 and TF nightly with dilation != 1 to see if the issue still exists? Thanks.

@pjpratik But I am not using Conv2d transpose. The error occurs on Conv2d layers with dilation = 2, 4, 8.


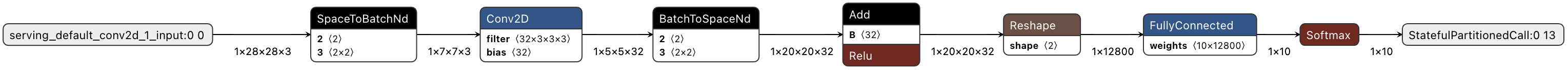

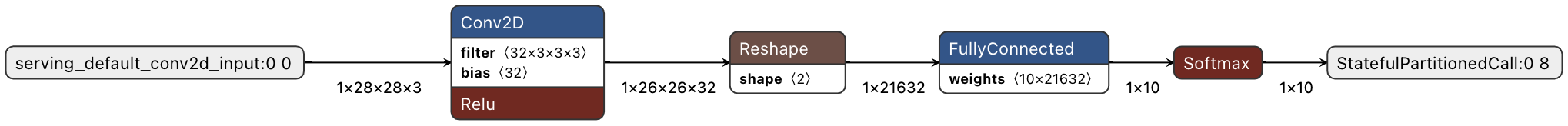

Thanks for the information. I have created a toy model with dilation = 1 and dilation = 4. Please find the gist here.

Conv2D adds the BatchToSpaceND and SpaceToBatchND ops when the dilation rate != 1, for appropriate padding to avoid holes in the output.

Model with dilation = 4

Model with dilation = 1

This can also be observed at tensorflow/tensorflow/python/ops/nn_ops.py, line 1465 in d5b57ca. As a result, those ops are added, and they are not compatible with TFLite GPU support. Thanks.
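To illustrate why a dilated convolution can be expressed with a space-to-batch step, an ordinary convolution, and a batch-to-space step, here is a simplified 1D sketch in plain Python. It is not TensorFlow's implementation: the function names are hypothetical, padding is ignored ("valid" output only), and the "batch" is just a list of interleaved sub-sequences.

```python
def conv1d_valid(x, w, rate=1):
    """Valid 1D cross-correlation of x with kernel w, sampling x every `rate` steps."""
    span = rate * (len(w) - 1)
    return [
        sum(w[k] * x[i + k * rate] for k in range(len(w)))
        for i in range(len(x) - span)
    ]


def dilated_via_space_to_batch(x, w, rate):
    """Compute the same dilated convolution using only dilation-1 convolutions."""
    # "Space to batch": split x into `rate` interleaved phases.
    phases = [x[p::rate] for p in range(rate)]
    # Ordinary (dilation 1) convolution on each phase independently.
    outs = [conv1d_valid(phase, w) for phase in phases]
    # "Batch to space": interleave the per-phase outputs back into one sequence.
    out_len = len(x) - rate * (len(w) - 1)
    return [outs[i % rate][i // rate] for i in range(out_len)]


x = list(range(1, 21))
w = [1, -2, 3]
for rate in (2, 4, 8):
    assert dilated_via_space_to_batch(x, w, rate) == conv1d_valid(x, w, rate)
```

The two rearrangement steps correspond, in spirit, to the SpaceToBatchND and BatchToSpaceND ops that appear in the converted graph around the convolution.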

Hi @pjpratik!

@ShashmurinSergey That could be on the roadmap, but I am not sure about it. @sachinprasadhs Could you please look into this? Thanks.

1. System information

2. Code

3. Failure after conversion

'BatchToSpaceND' ERROR_GPU_NOT_COMPATIBLE

'SpaceToBatchND' ERROR_GPU_NOT_COMPATIBLE

5. (optional) Any other info / logs

Hi everyone! I am having issues converting the U-2-Net model from PyTorch to TFLite for running on Android. My conversion path is PyTorch (pth) -> ONNX -> TensorFlow -> TFLite. An important requirement is that the TFLite model must support GPU execution and must be quantized. Most of the conversion path works fine, but I encountered an error during the TFLite conversion step. After some investigation, I found that if I change the Conv2D layers with dilation > 1 and padding > 1 to dilation = 1 and padding = 1, the conversion works without any issues. However, this reduces the model's quality. If GPU support is disabled, the model can be converted. I have tried using QuantizationDebugOptions and QuantizationDebugger, but this made no difference and the error remains the same. Can you please suggest any way to perform this conversion without compromising the model's quality?
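One idea that is not proposed in this thread, and is not verified here against the TFLite converter or GPU delegate, is to bake the dilation into the kernel: a convolution with dilation rate r and kernel size K gives the same output as a dilation-1 convolution whose kernel has size r(K-1)+1 with zeros inserted between the original weights. The 1D sketch below, in plain Python with hypothetical function names, only demonstrates that arithmetic equivalence. Whether the larger kernels are acceptable for a given model is a separate question.

```python
def conv1d_valid(x, w, rate=1):
    """Valid 1D cross-correlation of x with kernel w, sampling x every `rate` steps."""
    span = rate * (len(w) - 1)
    return [
        sum(w[k] * x[i + k * rate] for k in range(len(w)))
        for i in range(len(x) - span)
    ]


def expand_kernel(w, rate):
    """Insert rate-1 zeros between kernel taps, giving length rate*(len(w)-1)+1."""
    expanded = [0] * (rate * (len(w) - 1) + 1)
    for k, weight in enumerate(w):
        expanded[k * rate] = weight
    return expanded


x = list(range(1, 21))
w = [1, -2, 3]
for rate in (2, 4, 8):
    dense_kernel = expand_kernel(w, rate)
    # The dilation-1 convolution with the expanded kernel matches the dilated one.
    assert conv1d_valid(x, dense_kernel, 1) == conv1d_valid(x, w, rate)
```

The cost of this rewrite is a kernel with more (mostly zero) weights, so compute and model size grow with the dilation rate.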
